AV simulation testing faces a long and winding road

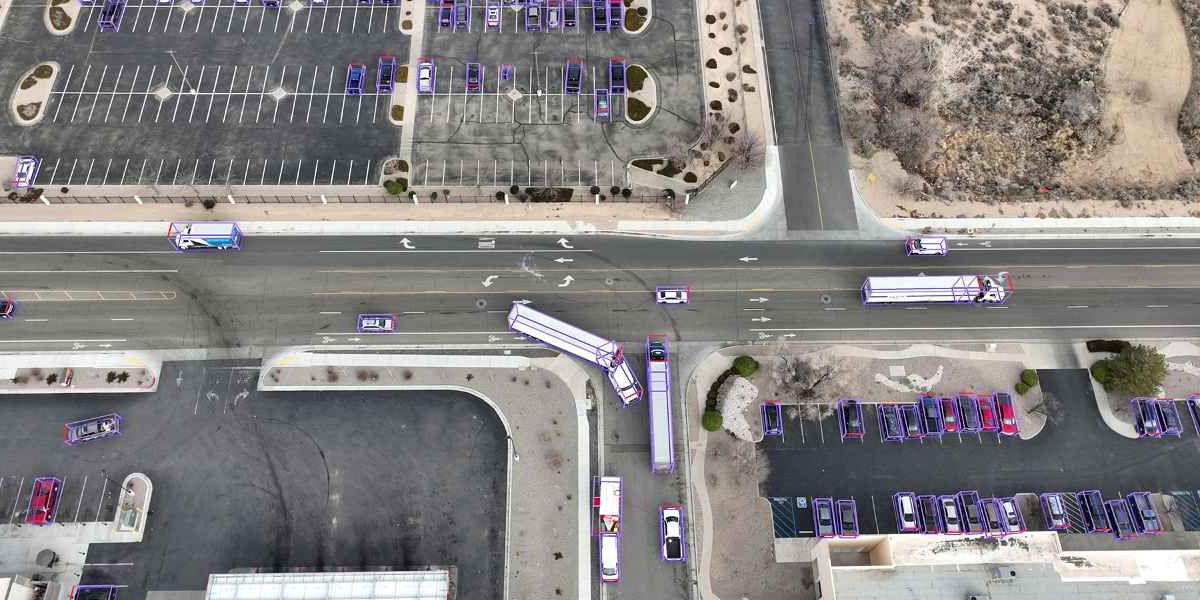

Example output of DeepScenario’s AI software that detects and tracks all moving objects in a scene in a fully automated way. (DeepScenario)

There are many divergent-but-related ideas about how best to leverage simulation to ‘teach’ and test automated vehicles. Global AV simulation experts continue to attack the technology’s persistent obstacles.

This article was first published on www.sae.org.

The great testing debate

Right now, millions of virtual vehicles are driving millions of virtual miles. Their long-term digital mission is to make high-level vehicle automation a reality. How best to test all of these AVs is the subject of a healthy debate throughout the industry, because engineers have yet to agree on how to build and use simulation and sensor models.

AV startups around the world are busy writing the code that defines the rules of these virtual test beds. Even when there’s agreement on some aspects – everyone knows AVs need to be safe – there are legitimate questions about how to get there.

Imagry: a focus on real-world miles

Even companies that are happy to explore the mysterious world of AV neural networks have found there are real limits to the tests that can be accomplished in a server box. Imagry is one such company.

Imagry’s mapless Level 3 and Level 4 automated-driving software was built on the idea that neural networks and AI are the only way to truly solve self-driving vehicles.

“We believe strongly in the singularity of AI,” Imagry CEO Eran Ofir told SAE Media at the IAA Mobility 2023 exposition in Munich, Germany. “Basically, that AI would be better than humans in [driving] tasks.”

Imagry was founded in 2015 and is headquartered in San Jose, California, with an office in Haifa, Israel. The company has two business lines: one offers perception and motion-planning software for passenger vehicles to OEMs and Tier 1s, and the other provides autonomous passenger-bus technology. This software is already being used in three projects in Israel, and Imagry has bids for similar bus routes in the Netherlands, France, Portugal and Dubai that should be announced in 2024.

Imagry points to three main ways its AV software differs from its competitors’. First, it’s mapless, so it doesn’t need an onboard or cloud-based HD map to assist the vehicle as it drives itself. Second, and relatedly, it’s location-independent: it functions even on roads that haven’t been pre-approved. Third, it’s hardware-agnostic, so OEMs and Tier 1s can use the software no matter what chipset they’re using in an AV.

The core element of Imagry’s perception system is an array of neural networks that process different types of objects in parallel and in real time while the vehicle is in motion. Apart from navigation information, the vehicle needs no connection to offboard data or computing power to get from point A to point B. Instead, multiple neural networks run onboard, each looking for a different type of object: one might look for traffic lights, another for traffic signs, and others for pedestrians, moving vehicles, parked vehicles and traffic lanes. Together, these networks build, in real time, a 3D layer map that represents and perceives everything within 300 meters (984 feet) of the vehicle.
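
The article does not describe how Imagry's software is implemented. As a rough, hypothetical illustration of the pattern above (several single-purpose detectors running concurrently, with their outputs merged into one layered map limited to 300 meters), the Python sketch below uses toy "detectors" that simply filter a list of labeled points. Every name in it is invented for the example, and the map is flattened to 2D for brevity.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

MAX_RANGE_M = 300.0  # perception radius quoted in the article

@dataclass
class Detection:
    kind: str  # object type, e.g. "pedestrian"
    x: float   # metres ahead of the vehicle (hypothetical vehicle frame)
    y: float   # metres to the left of the vehicle

def make_detector(kind):
    """Hypothetical stand-in for one single-purpose network.

    A real network would run on camera frames; here a 'frame' is simply a
    list of (kind, x, y) tuples, and the detector keeps only its own kind."""
    def detect(frame):
        return [Detection(k, x, y) for k, x, y in frame if k == kind]
    return detect

# One detector per object type, mirroring the examples in the article.
DETECTORS = {kind: make_detector(kind) for kind in (
    "traffic_light", "traffic_sign", "pedestrian",
    "moving_vehicle", "parked_vehicle", "traffic_lane")}

def build_layer_map(frame):
    """Run all detectors concurrently and merge their outputs into one layer
    per object type, discarding anything farther than MAX_RANGE_M."""
    with ThreadPoolExecutor(max_workers=len(DETECTORS)) as pool:
        futures = {kind: pool.submit(detect, frame)
                   for kind, detect in DETECTORS.items()}
        return {kind: [d for d in fut.result()
                       if math.hypot(d.x, d.y) <= MAX_RANGE_M]
                for kind, fut in futures.items()}

frame = [("pedestrian", 12.0, 3.0), ("traffic_light", 80.0, -5.0),
         ("pedestrian", 400.0, 0.0)]
layers = build_layer_map(frame)
print(len(layers["pedestrian"]))  # 1: the pedestrian 400 m away is out of range
```

The thread pool is only there to show the detectors running side by side. In Python it does not give true CPU parallelism for pure-Python work, and on a real vehicle each network would typically run on dedicated accelerator hardware.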

Another section of Imagry’s software is devoted to motion planning, and this is where Imagry ran into difficulties with simulated testing. This part of the company’s code uses deep convolutional neural networks that the company has been training since 2018 using data from test vehicles driving on actual roads in the U.S., Germany, and Israel.

“We trained those networks for many years in order to understand all kinds of scenarios,” Ofir said. “We trained them on hundreds of millions of images – on 10,000 roundabouts and on a million pedestrians and on, I don’t know, 150,000 traffic lights. I just mean many, many, many, many, many objects. And when we tried simulation, it didn’t work for us. It wasn’t good enough for us.”

The virtual driving environments couldn’t match the variety of things that actually were happening on the road, Ofir said. To make up for that gap, Imagry records every drive its vehicles make and then, using semi-automatic annotation tools, it further teaches the neural nets to identify objects using supervised learning. Here, too, Ofir sees limitations in what software can do on its own.
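
Imagry has not published how its semi-automatic annotation tools work. One common pattern for this kind of tooling, shown here as a hypothetical Python sketch, is to let a model propose labels, accept high-confidence proposals automatically and route the rest to human annotators, whose decisions then become supervised training labels. The 0.9 threshold and all names are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Proposal:
    item_id: int       # one object in one recorded frame (hypothetical ID)
    label: str         # label suggested by the model
    confidence: float  # model score in [0, 1]

def triage(proposals, auto_accept=0.9):
    """Split model proposals into auto-accepted ones and a human review queue."""
    accepted, review_queue = [], []
    for p in proposals:
        if p.confidence >= auto_accept:
            accepted.append(p)
        else:
            review_queue.append(p)
    return accepted, review_queue

def merge_reviews(accepted, review_queue, human_labels):
    """Build the final label set: auto-accepted labels plus human decisions.

    human_labels maps item_id -> the label an annotator confirmed or corrected.
    Queued items nobody has reviewed yet are left out of the training set."""
    labels = {p.item_id: p.label for p in accepted}
    for p in review_queue:
        if p.item_id in human_labels:
            labels[p.item_id] = human_labels[p.item_id]
    return labels

proposals = [Proposal(1, "pedestrian", 0.97),
             Proposal(2, "cyclist", 0.55),
             Proposal(3, "traffic_sign", 0.60)]
accepted, queue = triage(proposals)
print(merge_reviews(accepted, queue, {2: "scooter"}))
# {1: 'pedestrian', 2: 'scooter'}
```

Only items a human has actually reviewed are added from the review queue, so unchecked low-confidence guesses never enter the training set.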

“The problem with unsupervised learning that you hear some vendors talking about is this: would you get into a vehicle that is driving based on software that learned unsupervised, without human control, you don’t know what the system has learned?” he asked. “This is why we strongly believe that the system has to be supervised, which takes time. You need to walk in the desert, as it were, for a few years until you get it to the level. But once you’ve got it, that’s it.”

Counterpoint: Helm.ai

Unsupervised learning is exactly the technology that Helm.ai sees as the key to training its own software. Helm.ai is working on perception for self-driving cars and robotics. Its software takes raw images from cameras and attempts to detect the things an AV needs to see: pedestrians, vehicles, wheels, drivable surfaces and so on. Michael Viscardi, tech lead for perception at Helm.ai, said unsupervised learning was vital to getting its AI to understand difficult conditions such as nighttime driving, heavy fog or glare.

“This is where a lot of autonomous vehicles struggle, and humans, too,” Viscardi told SAE Media at IAA. “We developed this method. Instead of deep learning, [we] call it deep teaching. The neural net is basically able to teach itself using large-scale, unsupervised learning on millions of images to get robustness to all these corner cases.”

So, to learn how to drive at night, Helm’s AI used millions of frames that were not annotated by humans, Viscardi said. Helm’s AI strategy is to ingest data from a bewildering number of different sources – for example, dashcam footage from YouTube showing rain or night – so the neural net can learn from that data and produce robust lane predictions.

Helm.ai double-checks what the AI has learned by computing metrics, including key performance indicators (KPIs), on what Viscardi called an extensive validation data set.

“On the issue of real-world versus simulated testing, because it’s a very general net, we can validate on data across the world,” he said. “It’s been trained on a wide range of data that can then be specialized to certain geographies or cameras.”
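
Viscardi did not specify which KPIs Helm.ai tracks. As an assumed example, the sketch below computes precision and recall separately for each slice of a validation set (for instance, a geography or lighting condition) and flags slices whose recall falls below a target. The slice names and the 0.95 target are invented for illustration.

```python
from collections import defaultdict

def kpis_by_slice(results):
    """Compute precision and recall for each validation slice.

    results: iterable of (slice_name, outcome) pairs, where outcome is "tp"
    (correct detection), "fp" (false alarm) or "fn" (missed object)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for slice_name, outcome in results:
        counts[slice_name][outcome] += 1
    report = {}
    for slice_name, c in counts.items():
        predicted = c["tp"] + c["fp"]
        actual = c["tp"] + c["fn"]
        report[slice_name] = {
            "precision": c["tp"] / predicted if predicted else 0.0,
            "recall": c["tp"] / actual if actual else 0.0,
        }
    return report

def failing_slices(report, min_recall=0.95):
    """Names of slices whose recall is below the target, sorted alphabetically."""
    return sorted(name for name, m in report.items() if m["recall"] < min_recall)

results = ([("germany_night", "tp")] * 9 + [("germany_night", "fn")]
           + [("us_rain", "tp")] * 29 + [("us_rain", "fp"), ("us_rain", "fn")])
report = kpis_by_slice(results)
print(failing_slices(report))  # ['germany_night'] (recall 0.9)
```

Reporting metrics per slice, rather than as one global number, is what lets a team see that a model performing well overall may still be weak in one geography or camera setup.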

Helm.ai also can adjust its AI to evaluate specific data from an OEM, fine-tuning it to allow for large-scale testing and better accuracy.

“Unsupervised learning means that for every single corner case that comes up, we don’t need to go and collect a million frames and then get them all labeled, which is very expensive and time-consuming,” he said. “You could keep throwing money and time at it and maybe eventually you’ll get there, but it’s not a scalable approach. What we provide is scalability across cameras, across geographies and across corner cases.”

Unsupervised learning also costs less time and money than hand-annotating files of edge cases, he asserted, and the system also is hardware-agnostic. Viscardi admitted that there likely will be a plateau of some sort to what Helm’s AV AI can learn, but the company isn’t yet anywhere near that limit. For now, the more data, the better, he said, especially as Helm starts work on an urban AI pilot to add to its highway pilot system.

“Getting autonomous driving right in urban scenarios based on that perception, that’s kind of what we’re really excited about working on right now,” he said.

From YouTube to AVs: DeepScenario

Helm.ai is not the only AV-development company that disagrees with Imagry’s anti-simulation stance. DeepScenario’s goal is to be able to measure a location and simulate it much more quickly than anyone else while providing “the highest, highest detail,” according to DeepScenario co-founder Holger Banzhaf. A 10-person, Stuttgart-based startup that spun off from TU München (the Technical University of Munich) in 2021, DeepScenario has developed software that creates testbed environments in which automakers can run their automated-driving algorithms against virtual scenarios.

“Right now, [the software shows] just boxes,” Banzhaf told SAE Media at IAA. “But cars have a shape, and they have colors, and that matters for the perception algorithms, because black objects are not as well-detected as white ones.”

To create the kind of challenging edge cases Imagry’s Ofir – and others – are asking for, DeepScenario’s technology extracts motion data from standard videos to build its virtual testing worlds. One thing the company does differently is to use video feeds from stationary cameras that show traffic flows, which gives DeepScenario a large amount of real-world data from a specific type of intersection in a short time. During a recent excursion in Los Angeles, California, to gather data on highway entries, DeepScenario’s drones captured 12,000 highway entries and exits in three hours. (Banzhaf said that, from a legal perspective, drones are easy to fly in the U.S., but the company gathers information in multiple countries.) Armed with this kind of data, DeepScenario can approach OEMs and Tier 1s with potential solutions for exactly the cases where the driving software has problems, through ‘scenario mining.’

“If your system is not good at intersections, not good at entries, at particular scenarios, we can really quickly accelerate the databases for those locations,” Banzhaf said. “You're looking for something specific in your data. You’re looking for accidents, for almost-accidents, and you want to get those scenarios – basically, those 20-second snippets – and put them in your database such that when you update the [AV driving] software, you can test against it.”
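
DeepScenario has not detailed its scenario-mining criteria. A widely used surrogate safety measure for near-misses is time-to-collision (TTC): the gap between two vehicles divided by their closing speed. The hypothetical Python sketch below flags moments when TTC falls below a threshold and cuts a roughly 20-second snippet around each one, echoing the snippet length Banzhaf mentions. The 1.5-second threshold and the data layout are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    t: float              # seconds since the start of the recording
    gap_m: float          # distance between two tracked vehicles, metres
    closing_speed: float  # metres/second; positive while the gap shrinks

def time_to_collision(s):
    """Seconds until contact if neither vehicle changes speed (inf if not closing)."""
    return s.gap_m / s.closing_speed if s.closing_speed > 0 else float("inf")

def mine_snippets(samples, ttc_threshold=1.5, snippet_len=20.0):
    """Return (start, end) windows around every near-miss.

    samples must be sorted by time. Each sample with TTC below ttc_threshold
    gets a snippet_len-second window roughly centred on it; overlapping
    windows are merged so one event yields one (possibly longer) snippet."""
    windows = []
    for s in samples:
        if time_to_collision(s) >= ttc_threshold:
            continue
        start = max(0.0, s.t - snippet_len / 2)
        end = start + snippet_len
        if windows and start <= windows[-1][1]:
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows

# 100 s of calm following (TTC = 15 s) with two brief near-misses.
samples = [Sample(float(t), 30.0, 2.0) for t in range(100)]
samples[40] = Sample(40.0, 3.0, 4.0)   # TTC 0.75 s
samples[41] = Sample(41.0, 2.0, 4.0)   # TTC 0.5 s
samples[90] = Sample(90.0, 1.0, 2.0)   # TTC 0.5 s
print(mine_snippets(samples))  # [(30.0, 51.0), (80.0, 100.0)]
```

Each returned window could then be stored as a test scenario and replayed whenever the driving software is updated.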

The UK wants to know if your AV AI is any good

In May 2023, the U.K. government opened funding requests for feasibility studies into how connected and automated-mobility technologies could function as mass-transit solutions. Organized by the Centre for Connected and Autonomous Vehicles (CCAV), the funding competition attracted the attention of dozens of companies, including rFpro. RFpro builds engineering-grade simulation environments for automotive and motorsport companies. In September, rFpro announced it would be a partner in two consortium projects funded by the CCAV to the tune of £4 million ($4.9 million).

The Sim4CAMSens project, which received a £2-million ($2.4-million) grant, attempts to “enable accurate representation of Automated Driving System (ADS) sensors in simulation,” according to the official documents. Or, as rFpro technical director Matt Daley framed it, the mission is to answer “the eternal question: when is a simulation good enough to use? And how far can we trust that?” He added, “At what point should our organizations actually expect the simulation to be able to present this level of scientific results?”

Daley was speaking at September 2023’s AutoSens Brussels conference, where he told SAE Media that, for the Sim4CAMSens project, rFpro will create new types of sensor models across all modalities (cameras, lidar and radar) to improve the ability of a virtual test to accurately gauge the competency of automated-driving software.

RFpro is also involved in the DeepSafe project, which received £2 million from CCAV and has an official goal to “develop the simulation-based training needed to train autonomous vehicles to handle ‘edge cases’, the rare, unexpected driving scenarios that AVs must be prepared to encounter on the road.” DG Cities, Imperial College London and Claytex also are involved in the DeepSafe project, which will be led by dRISK.ai. The resulting AV training tools will be released after the project ends, making DeepSafe’s ‘sensor real’ edge-case data – a simulation of what an actual sensor would detect in the real world – commercially available.

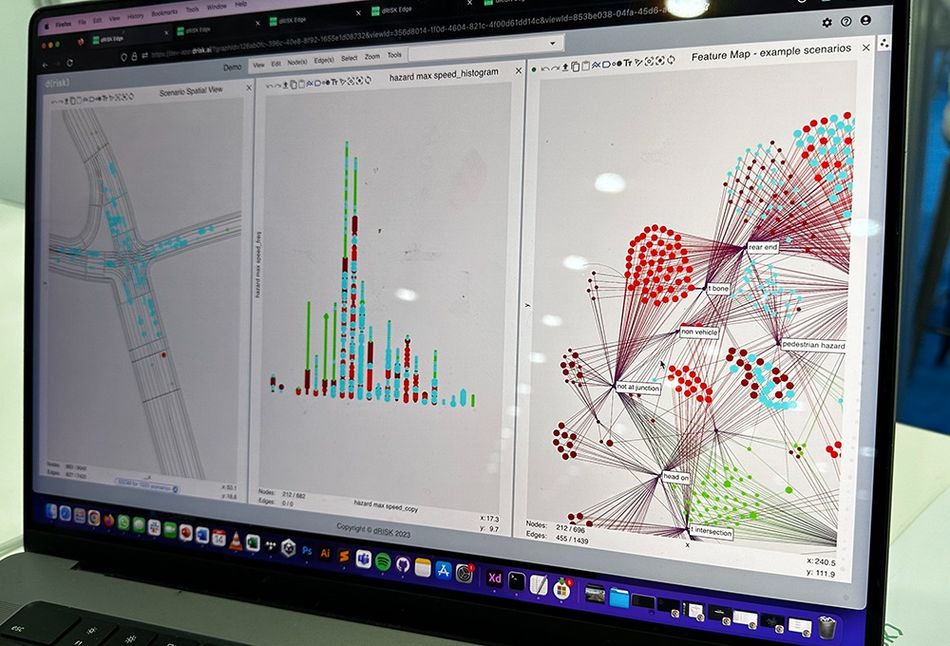

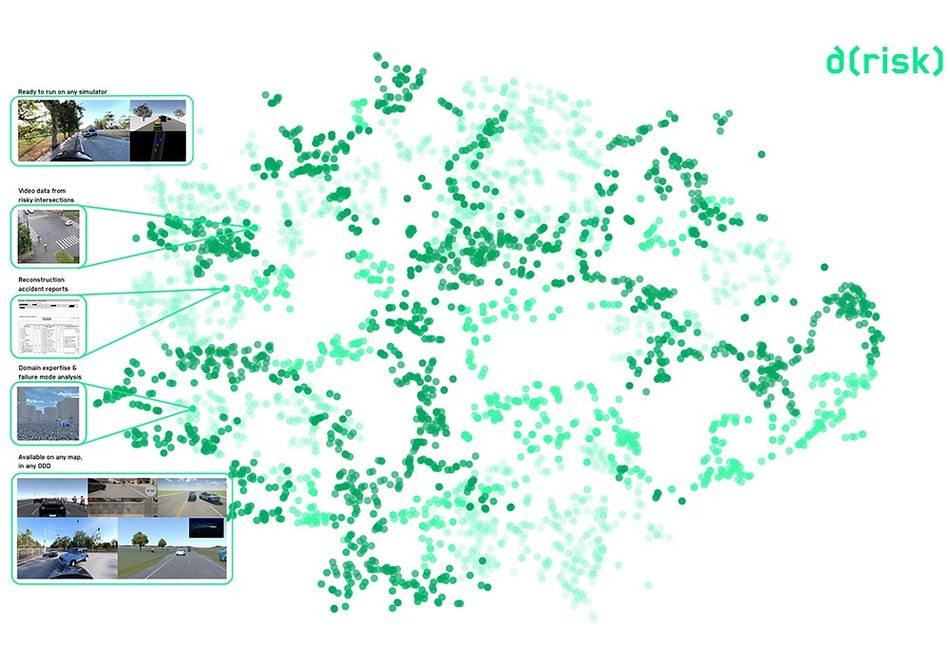

dRISK.ai, formed in 2019 and now based in Pasadena, California, and London, England, said it is uniquely situated to lead the DeepSafe project. The company’s hyper-dimensional dataset, which tracks around 500 different points in its taxonomy, was built specifically to uncover unusual traffic situations. Publicly, dRISK currently is working with NVIDIA, May Mobility and Luminar.

“We capture weird stuff that happens on the road in any kind of data set that is available,” dRISK transport systems strategist Kiran Jesudasan told SAE Media at the North American International Auto Show in September. “That can be CCTV or dashcam sensor feeds, or even things like accident reports and insurance claims data. We fuse them all under a single taxonomy and then we create simulations out of those.”

When dRISK applied for the CCAV funding, it recommended the U.K. government rethink what it was asking for in the competition.

“The way they initially structured it was, ‘We, as the U.K. government, are looking for a simulator that we can use to test autonomous vehicles and ADAS vehicles that are coming to our shores,’” Jesudasan said. “Our counter-pitch to them was, ‘No, you don’t.’ Everybody and their mother is building a simulator. What you want is a mechanism to understand what you should simulate in the first place. What is actually relevant for you and how can you actually go to the public with a certain degree of assurance that yes, this vehicle is actually not going to do terrible things on the road.”

Jesudasan said dRISK’s data can help provide this certainty because it is auditable and should help various AV driving software builds to avoid the common one-step-forward-two-steps-back phenomenon.

“AI gets very good at one particular phenomenon – unprotected lefts, for example – but is absolutely atrocious at other phenomena, like dealing with a pedestrian running across the road while taking a right,” he said. “That’s because it has over-trained in a certain space. With our tools, they can understand the entire training landscape, understand where they have gaps and be able to sort of systemically plug those gaps, as opposed to putting wet fingers in the air and wondering, ‘What should I investigate?’”
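
dRISK's taxonomy and tooling are proprietary, and the article gives only the figure of roughly 500 points. The idea of finding gaps in a training landscape can still be sketched under simple assumptions: tag each training scenario with one taxonomy category, count the tags, and list the categories with too few examples. The category names and the 50-scenario minimum below are hypothetical.

```python
from collections import Counter

def coverage_gaps(training_tags, taxonomy, min_count=50):
    """Return taxonomy categories with fewer than min_count training scenarios,
    least-covered first.

    training_tags: one category per training scenario.
    taxonomy: every category the team cares about, including unseen ones."""
    counts = Counter(training_tags)  # missing categories count as 0
    gaps = sorted((counts[c], c) for c in taxonomy if counts[c] < min_count)
    return [c for _, c in gaps]

taxonomy = ["unprotected_left", "cut_in",
            "pedestrian_crossing_during_right_turn", "debris_on_road"]
tags = (["unprotected_left"] * 500 + ["cut_in"] * 60
        + ["pedestrian_crossing_during_right_turn"] * 3)
print(coverage_gaps(tags, taxonomy))
# ['debris_on_road', 'pedestrian_crossing_during_right_turn']
```

The example mirrors Jesudasan's point: plenty of unprotected-left data says nothing about how well the pedestrian-during-a-right-turn case is covered, and a category that never appears at all is the largest gap.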

RFpro’s Daley told SAE Media at AutoSens that the overall aim of DeepSafe is to develop and deliver a synthetic, commercial data tool that can retrain AI and ML models in areas where they’re failing.

“Part of that is making sure that we’re able to collect far more levels of crash data,” he said. “The amount of physical vehicles we can physically crash into other real, physical vehicles is extremely limited. And we shouldn’t be doing that because of the cost.”

The DeepSafe project will develop new methodologies for taking real lidars, radars and cameras and flying them at vehicles in as controlled a way as possible, Daley said, thereby creating data on critical edge cases to inform timing and decision-making.

Once the DeepSafe project is completed, the team will take these results to the public so the key question can be answered, Daley said. “When do you trust that this [simulation] technology is good enough for you to use?”

Whether built by dRISK, Helm.ai or one of many other providers or OEMs, simulated worlds are bringing the industry closer to highly automated vehicles. Still, simulation is not quite as real as, well, reality.

“If you could model the real world with the highest complexity in the computer, that would be awesome,” DeepScenario’s Banzhaf said. “You wouldn't need to go out. What currently prevents us is that the computation time would be too high, so you need to simplify the real world. Also, it's not just about how everything looks realistic, it's how the cars move.”

The challenge continues.