BC-Z aims to offer "zero-shot" learning for generalized autonomous robotics

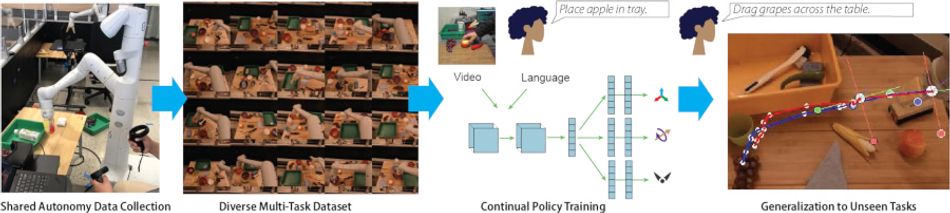

The BC-Z system, initially trained with a mixture of VR demonstrations and shared autonomy, demonstrates the potential for generalized learning.

Using a now public-access dataset, a research team has created a robotics control system which can generalize to unseen related tasks — allowing robots to interpret natural-language commands and video demonstrations.

There is considerable interest in reducing how much training it takes for a machine learning system to perform a given task, from few-shot learning approaches like HyperTransformer to one-shot learning — where a network can be trained using just one sample per class, approximating the human ability to intuit and interpret.

What if, however, you could have zero-shot learning, where a machine learning system generalizes its existing knowledge to succeed at entirely novel tasks without ever having been directly trained on them? That’s exactly what a team of researchers from Google, X, UC Berkeley, and Stanford University have created in BC-Z: A zero-shot task generalization approach for robotics.

Do as I do

Having a robot perform a task it has not been trained to do, whether by copying a human or by following verbal instructions, is an extremely hard problem, particularly in vision-based tasks involving a range of possible skills.

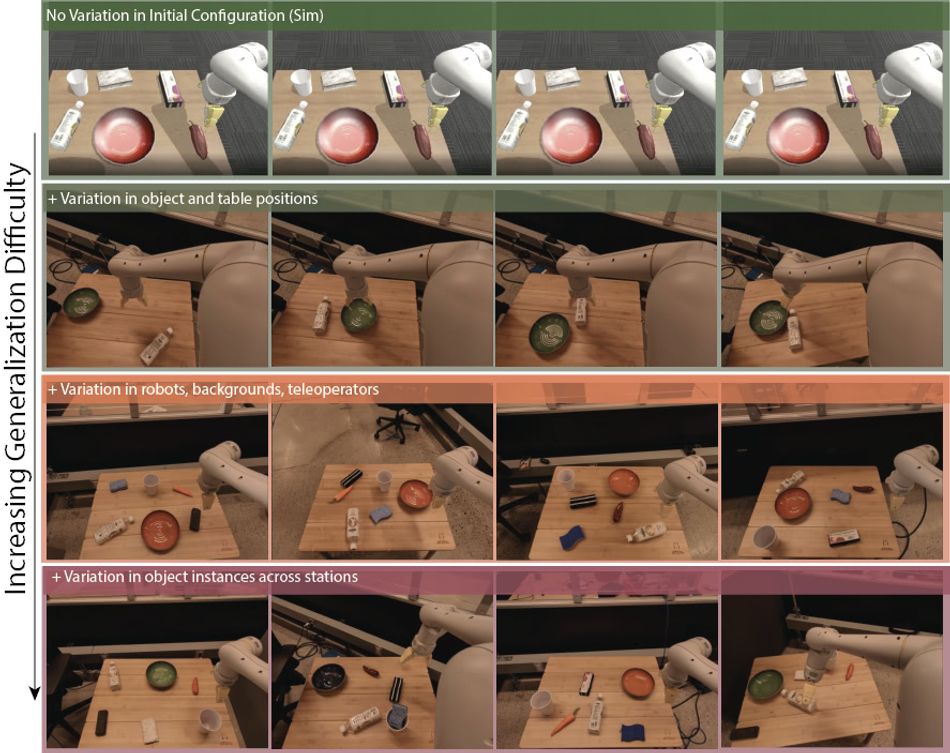

“This problem is remarkably difficult since it requires robots to both decipher the novel instructions and identify how to complete the task without any training data for that task,” explain Chelsea Finn and Eric Jang, co-authors of the paper and authors of a post on the Google AI Blog discussing their work. “This goal becomes even more difficult when a robot needs to simultaneously handle other axes of generalization, such as variability in the scene and positions of objects.”

The proposed system, BC-Z, builds on previous work, with the researchers concentrating on whether established ideas about generalization through imitation learning can be scaled to a wide breadth of tasks. In one test, they demonstrated a hundred different manipulation tasks and then instructed the robot to carry out 29 related tasks on which it had not been directly trained.

The team’s setup for training and testing BC-Z relied on shared autonomy teleoperation: An Oculus VR virtual reality headset was wired into the robot, with the wearer using two handheld controllers to perform tasks with a line-of-sight third-person view. As well as directly carrying out tasks as a demonstration, the operator also monitored the robot’s own attempts — intervening to correct mistakes as they happened, as a way to improve the training data.

“This mixture of demonstrations and interventions has been shown to significantly improve performance by mitigating compounding errors,” claim Finn and Jang. “In our experiments, we see a 2x improvement in performance when using this data collection strategy compared to only using human demonstrations.”
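The data collection strategy described above can be illustrated with a toy sketch. This is not the BC-Z code: the one-dimensional state, the `expert_action` and `policy_action` functions, the takeover threshold, and the choice to train only on operator-labelled steps are all hypothetical stand-ins chosen to show the shape of the idea, in which pure demonstrations are mixed with episodes where a human takes over when the policy drifts.

```python
import random

def expert_action(obs):
    """Hypothetical teleoperator: steers the 1-D state back toward 0."""
    return -0.5 * obs

def policy_action(obs, rng):
    """Hypothetical imperfect policy: the expert action plus noise."""
    return -0.5 * obs + rng.gauss(0.0, 0.5)

def collect_demonstration(rng, horizon=20):
    """Pure human demonstration: every step is labelled by the operator."""
    obs, steps = rng.uniform(-1.0, 1.0), []
    for _ in range(horizon):
        action = expert_action(obs)
        steps.append((obs, action, "human"))
        obs += action
    return steps

def collect_shared_autonomy(rng, horizon=20, threshold=1.0, takeover_steps=3):
    """Policy rollout in which the operator intervenes when the state drifts."""
    obs, steps, human_left = rng.uniform(-1.0, 1.0), [], 0
    for _ in range(horizon):
        if abs(obs) > threshold:
            # Assumed trigger: the operator notices the mistake and takes over.
            human_left = takeover_steps
        if human_left > 0:
            action, source = expert_action(obs), "human"
            human_left -= 1
        else:
            action, source = policy_action(obs, rng), "policy"
        steps.append((obs, action, source))
        obs += action
    return steps

def build_training_set(episodes):
    """Keep only operator-labelled steps as supervised (obs, action) pairs."""
    return [(o, a) for ep in episodes for (o, a, src) in ep if src == "human"]

rng = random.Random(0)
episodes = [collect_demonstration(rng) for _ in range(5)]
episodes += [collect_shared_autonomy(rng) for _ in range(5)]
dataset = build_training_set(episodes)
print(len(dataset), "training pairs")
```

The point of the intervention episodes is that corrective labels are gathered in states the policy itself reaches, which pure demonstrations rarely visit. That is one plausible reading of why such a mixture helps with compounding errors.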

Try it yourself

The finished dataset comprises 25,877 demonstrations collected by seven operators across 12 robots, totaling 125 hours of robot time. It was then augmented with descriptions of the task being carried out, either in the form of an additional 18,726 videos of humans carrying out the same tasks or as a simple natural-language written command.

Initially, the team set about proving that BC-Z was able to learn individual vision-based tasks: A single bin-emptying task and a single door-opening task. Results were encouraging, with the model picking at around half the speed of the human teleoperator in the bin-emptying task and achieving success rates of between 87 and 94 per cent on the door-opening task across trained and held-out scenes.

The project's focus, though, is on few- and zero-shot learning for previously unseen tasks: 29 held-out tasks, 25 of which use objects mixed from two different trained task families. Some tasks were language-conditioned, provided as a simple written instruction like “put the grapes in the bowl”; others were video-conditioned, communicated as footage of the task being carried out.

In these, BC-Z delivered a non-zero success rate for 24 of the 29 tasks, though at a level below what would be considered acceptable for deployment. Its overall success rate averaged 32 per cent, rising to 44 per cent for the language-conditioned tasks.

“Qualitatively,” the researchers note of the failures, “we observe that the language-conditioned policy usually moves towards the correct objects, clearly indicating that the task embedding is reflective of the correct task. The most common source of failures are ‘last-centimeter’ errors: Failing to close the gripper, failing to let go of objects, or a near miss of the target object when letting go of an object in the gripper.”

A cautious success

While the success rate on held-out tasks was low in absolute terms, any success rate above zero per cent is a promising start. The team has already been able to draw interesting conclusions: that imitation learning can be scaled to zero-shot approaches, that task-level generalization is possible with just 100 training tasks, and that frozen pre-trained language embeddings work well as task conditioners without additional training.
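The last of those conclusions, using a frozen language encoder as a task conditioner, can be sketched in a few lines. Everything here is an illustrative assumption rather than the BC-Z architecture: `frozen_sentence_embedding` is a hash-based stand-in for a real pre-trained sentence encoder, and `ConditionedPolicy` is a hypothetical linear policy that simply concatenates image features with the task embedding. The sketch only shows the division of labour, in which the encoder has no trainable parameters and the policy alone is updated by behavioural cloning.

```python
import hashlib

EMBED_DIM = 8  # assumed embedding size, chosen for illustration only

def frozen_sentence_embedding(command):
    """Stand-in for a frozen pre-trained sentence encoder (hypothetical).

    Hashes each word into a fixed vector and sums them; nothing here is
    trainable, so the embedding never changes during policy training.
    """
    vec = [0.0] * EMBED_DIM
    for word in command.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        for i in range(EMBED_DIM):
            vec[i] += digest[i] / 255.0 - 0.5
    norm = sum(v * v for v in vec) ** 0.5 or 1.0
    return [v / norm for v in vec]

class ConditionedPolicy:
    """Hypothetical linear policy over [image_features, task_embedding]."""

    def __init__(self, feature_dim, action_dim):
        in_dim = feature_dim + EMBED_DIM
        self.weights = [[0.0] * in_dim for _ in range(action_dim)]

    def act(self, features, task_embedding):
        x = list(features) + list(task_embedding)
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]

    def bc_update(self, features, task_embedding, target_action, lr=0.1):
        """One behavioural-cloning gradient step on squared error.

        Only the policy weights move; the task embedding is treated as a
        fixed input.
        """
        x = list(features) + list(task_embedding)
        pred = self.act(features, task_embedding)
        for row, p, t in zip(self.weights, pred, target_action):
            err = p - t
            for i, xi in enumerate(x):
                row[i] -= lr * err * xi

task = frozen_sentence_embedding("put the grapes in the bowl")
policy = ConditionedPolicy(feature_dim=2, action_dim=2)
features, demo_action = [0.2, -0.1], [0.5, -0.3]  # made-up toy values
for _ in range(200):
    policy.bc_update(features, task, demo_action)
print(policy.act(features, task))
```

Because the command is turned into a fixed vector before it reaches the policy, a new instruction can in principle be issued at test time without retraining anything. Whether the policy then does something sensible is the generalization question the article describes.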

The team admits to a series of limitations, however: Performance on novel tasks is highly variable, the natural-language commands follow a restrictive verb-noun structure, and performance on video interpretation was notably lower than on the natural-language command tasks.

For future work the researchers have suggested using the BC-Z system as a general-purpose initialization for fine-tuning downstream tasks alongside additional training with autonomous reinforcement learning, improving the generalization of video-based task representation, and finding a way to solve a low-level control error bottleneck as a means to improve the performance of imitation learning algorithms in general.

The team’s work has been published at the 5th Conference on Robot Learning (CoRL 2021), and is available under open-access terms on the project website. Additionally, it has released the training and validation dataset on Kaggle for use under the permissive Creative Commons Attribution 4.0 International license. More information can be found on the Google AI Blog.

Reference

Eric Jang, Alex Irpan, Mohi Khansari, Daniel Kappler, Frederik Ebert, Corey Lynch, Sergey Levine, Chelsea Finn: BC-Z: Zero-Shot Task Generalization with Robotic Imitation Learning, CoRL 2021 Poster. openreview.net/forum?id=8kbp23tSGYv