Universal Controller Could Push Robotic Prostheses, Exoskeletons Into Real-World Use

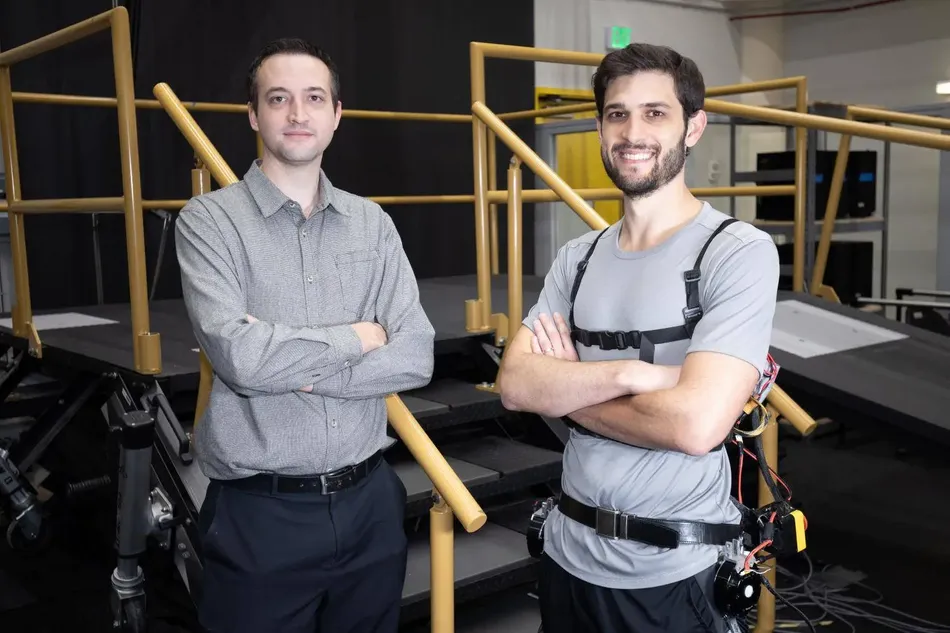

Researcher Aaron Young makes adjustments to an experimental exoskeleton worn by then-Ph.D. student Dean Molinaro. The team used the exoskeleton to develop a unified control framework for robotic assistance devices that would allow users to put on an "exo" and go — no extensive training, tuning, or calibration required. (Photo: Candler Hobbs)

Aaron Young’s team has developed a wear-and-go approach that requires no calibration or training.

This article was first published on news.gatech.edu.

Robotic exoskeletons designed to help humans with walking or physically demanding work have been the stuff of sci-fi lore for decades. Remember Ellen Ripley in that Power Loader in Aliens? Or the crazy mobile platform George McFly wore in 2015 in Back to the Future, Part II because he threw his back out?

Researchers are working on real-life robotic assistance that could protect workers from painful injuries and help stroke patients regain their mobility. So far, these devices have required extensive calibration and context-specific tuning, which keeps them largely limited to research labs.

Mechanical engineers at Georgia Tech may be on the verge of changing that, allowing exoskeleton technology to be deployed in homes, workplaces, and more.

A team of researchers in Aaron Young’s lab has developed a universal approach to controlling robotic exoskeletons that requires no training, no calibration, and no adjustments to complicated algorithms. Instead, users can don the “exo” and go.

Their system uses a kind of artificial intelligence called deep learning to autonomously adjust how the exoskeleton provides assistance, and they’ve shown it works seamlessly to support walking, standing, and climbing stairs or ramps. They described their “unified control framework” March 20 in Science Robotics.

“The goal was not just to provide control across different activities, but to create a single unified system. You don't have to press buttons to switch between modes or have some classifier algorithm that tries to predict that you're climbing stairs or walking,” said Young, associate professor in the George W. Woodruff School of Mechanical Engineering.

Machine Learning as Translator

Most previous work in this area has focused on one activity at a time, like walking on level ground or up a set of stairs. The algorithms involved typically try to classify the environment to provide the right assistance to users.

The Georgia Tech team threw that out the window. Instead of focusing on the environment, they focused on the human — what’s happening with muscles and joints — which meant the specific activity didn’t matter.

“We stopped trying to bucket human movement into what we call discretized modes — like level ground walking or climbing stairs — because real movement is a lot messier,” said Dean Molinaro, lead author on the study and a recently graduated Ph.D. student in Young’s lab. “Instead, we based our controller on the user’s underlying physiology. What the body is doing at any point in time will tell us everything we need to know about the environment. Then we used machine learning essentially as the translator between what the sensors are measuring on the exoskeleton and what torques the muscles are generating.”
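As a rough illustration of the idea Molinaro describes, the sketch below shows a control loop with no activity classifier: a model turns recent sensor readings into an estimate of the torque the user's hip muscles are producing, and the device assists with a fraction of that estimate. This is a minimal sketch under stated assumptions, not the team's implementation. Every name here (`make_controller`, `toy_estimator`), the two-value sensor frame, the 20% assistance fraction, and the 15 N·m limit are hypothetical, and the "estimator" is a fixed formula standing in for a trained deep-learning model.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence

# Hypothetical sensor frame: (hip angle in rad, hip angular velocity in rad/s).
SensorFrame = Sequence[float]


def make_controller(
    torque_estimator: Callable[[List[SensorFrame]], float],
    window_size: int = 5,           # assumed number of recent frames fed to the model
    assist_fraction: float = 0.2,   # assumed share of estimated muscle torque to supply
    max_torque_nm: float = 15.0,    # assumed actuator limit
) -> Callable[[SensorFrame], float]:
    """Return a step function that maps each new sensor frame to a torque command."""
    history: Deque[SensorFrame] = deque(maxlen=window_size)

    def step(frame: SensorFrame) -> float:
        history.append(frame)
        # The model acts as the "translator": sensor history in,
        # estimated biological hip torque out. No walking/stairs label is used.
        estimated_hip_torque = torque_estimator(list(history))
        command = assist_fraction * estimated_hip_torque
        # Clamp the command to the actuator's limit.
        return max(-max_torque_nm, min(max_torque_nm, command))

    return step


def toy_estimator(window: List[SensorFrame]) -> float:
    """Stand-in for a trained network: a fixed weighted sum of the latest frame."""
    angle, velocity = window[-1]
    return 40.0 * angle + 5.0 * velocity


if __name__ == "__main__":
    step = make_controller(toy_estimator)
    print(step((0.5, 1.0)))  # moderate effort: command scales with the estimate
    print(step((3.0, 0.0)))  # large estimate: command is clamped to the limit
```

The point of the structure is that the same `step` function runs whether the wearer is walking, standing up, or climbing stairs; only the sensor readings change, so there is no mode switch to get wrong.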

With the controller delivering assistance through a hip exoskeleton developed by the team, they found they could reduce users’ metabolic and biomechanical effort: they expended less energy, and their joints didn’t have to work as hard compared to not wearing the device at all.

In other words, wearing the exoskeleton was a benefit to users, even with the extra weight added by the device itself.

What’s so cool about this [controller] is that it adjusts to each person’s internal dynamics without any tuning or heuristic adjustments, which is a huge difference from a lot of work in the field. There’s no subject-specific tuning or changing parameters to make it work.

AARON YOUNG

Associate Professor, Mechanical Engineering

The control system in this study is designed for partial-assist devices. These exoskeletons support movement rather than completely replacing the effort.

The team, which also included Molinaro and Inseung Kang, another former Ph.D. student now at Carnegie Mellon University, used an existing algorithm and trained it on mountains of force and motion-capture data they collected in Young’s lab. Subjects of different genders and body types wore the powered hip exoskeleton and walked at varying speeds on force plates, climbed height-adjustable stairs, walked up and down ramps, and transitioned between those movements.

As in the motion-capture studios used to make movies, every movement was recorded and cataloged to understand what the joints were doing during each activity.

The Science Robotics study is “application agnostic,” as Young put it. Yet their controller offers the first bridge to real-world viability for robotic exoskeleton devices.

Imagine how robotic assistance could benefit soldiers, airline baggage handlers, or any workers doing physically demanding jobs where musculoskeletal injury risk is high.

Assistive robotics also could help stroke patients regain mobility. Related experiments in Young’s lab are working to understand how their hip exoskeleton and unified controller could be extended to help people recovering from stroke navigate their communities.

Helping Amputees Do More

Other projects in Young’s Exoskeleton and Prosthetic Intelligent Controls (EPIC) Lab are marrying the power of robotics and AI to help adults with above-the-knee amputations navigate more effectively and children recover from neurological injuries.

For adults, the lab is imagining new powered prostheses to help them navigate the world. An active study is exploring how AI can learn a patient’s gait and adapt over time to improve walking.

Stacey Rice has been working with the team for a year, testing iterations of a leg prosthesis and the accompanying AI controller. She lost her leg to bone cancer 44 years ago, so she’s already seen technology improve the devices available to amputees.

The Atlanta native is a multisport athlete and 1988 volleyball Paralympian. She said she can feel a real difference in the amount of effort she expends using the robotic assistance.

I realized while I was on the treadmill that I wasn’t using as much energy as if I was using my own prosthesis. For me to have that energy bank is huge. There’s so much energy the body uses when you’re an amputee and you’re walking. To reserve that energy for your day-to-day activities will allow amputees to be more active during the day and do more.

STACEY RICE

Amputee and Research Study Participant

Gamifying Pediatric Rehab

In other experiments, Young’s lab is working with Children’s Healthcare of Atlanta and Shriners Hospital to incorporate robotics into a new approach to pediatric rehabilitation that helps patients improve their gait.

Kids with cerebral palsy, traumatic brain injuries, and other conditions often undergo physical therapy to help correct walking abnormalities. Incorporating robotics into the process is an area Young is excited about because developing brains are especially good at relearning skills and can recover function over time.

“The robotic therapy does what a physical therapist might do manually, but in an automated way. It encourages them to adopt better gait biomechanics,” Young said.

In each appointment, the researchers combine a robotic exoskeleton with biofeedback systems through a game-like interface.

“When the patients come in, it’s like a video game that they're playing, which both engages them and gives them feedback about their performance,” Young said. “We want them to internalize the learning. The exoskeleton will help them physically achieve their goals, but we need them to retain that and engage in the rehab process.”

Regaining Balance

Elsewhere in the lab, Young and his team use a unique virtual-reality treadmill system to study gait and balance, particularly for older adults or people with mobility issues from strokes or amputations.

The team uses the platform to introduce significant disruptions while participants are walking, challenging them to keep or regain their balance. The resulting data (largely from young, athletic individuals) is informing how wearable robots should operate to help people with balance impairments stay upright.

In other words, it’s a busy place. And all in service of making a future of wearable robotics a reality.

“For a lot of us, mobility is something we’re able to take for granted. One of the hopes is that this work can translate to people with mobility impairments and improve their quality of life,” Molinaro said. “And also, there are times when mobility is a limitation. So we’re interested in how we push the edge of mobility, both in restoring it for those with impairments but also augmenting it for those of able body to make them even more capable.”