2023 Edge AI Technology Report. Chapter IV: Hardware & Software Selection

What to Consider When Selecting Your Edge AI Hardware and Software.

Edge AI, powered by recent advances in artificial intelligence (AI), is driving significant shifts in today's technology landscape. By running computation close to where data is generated, Edge AI improves responsiveness, strengthens security and privacy, supports scalability and distributed computing, and improves cost efficiency.

Wevolver has partnered with industry experts, researchers, and tech providers to create a detailed report on the current state of Edge AI. This document covers its technical aspects, applications, challenges, and future trends. It merges practical and technical insights from industry professionals, helping readers understand and navigate the evolving Edge AI landscape.

Introduction

When selecting and implementing Edge AI systems, it's important to consider the limitations of edge devices and their compatibility with other hardware and software. Edge devices such as sensors and smartphones often have limited processing power, storage, and battery life. These limits can degrade the performance of Edge AI systems, because complex tasks such as image recognition and natural language processing demand significant compute and memory. To work within these constraints, AI models must be optimized for limited resources, and specialized hardware such as field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), and graphics processing units (GPUs) can accelerate computation at the edge.
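One widely used way to fit a model into a small device is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats and so shrinks them roughly fourfold. The minimal Python sketch below shows per-tensor asymmetric (affine) int8 quantization of a list of weights. The function names and weight values are hypothetical, and production toolchains add calibration data, per-channel scales, and integer-only kernels that this sketch leaves out.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization: returns integers plus the scale and zero point."""
    lo, hi = min(values), max(values)
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    # Map each float onto the int8 grid and clamp to [-128, 127].
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate floats."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, -0.5, 0.0, 0.25, 2.0]  # hypothetical float32 weights
q, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(q, scale, zero_point)
print(q, restored)
```

Each restored weight lands within about half a quantization step (`scale / 2`) of the original, which is the accuracy cost paid for a model a quarter of the size.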

Edge AI systems are often deployed for extended periods, so the selected hardware should leave room for future upgrades and be able to accommodate new technologies and features as requirements evolve. Compatibility is also a critical consideration when selecting software for Edge AI systems. Because these systems often must integrate with existing infrastructure and hardware, the software should work across a wide range of devices and platforms. This covers both hardware compatibility (for example, with different types of sensors and processors) and software compatibility (for example, with different operating systems and programming languages).

Hardware Considerations

Ensuring that the chosen hardware meets the requirements of an Edge AI system involves weighing several factors. One key factor is processing power: Edge AI applications need hardware that can process data quickly and accurately, and the hardware's compute capacity and clock speed play crucial roles in achieving the real-time processing that Edge AI systems depend on. Another critical factor is the amount and type of memory. Edge AI applications need enough memory, with enough bandwidth, to hold and move large volumes of data fast enough for real-time processing. The hardware industry is racing to advertise the highest number of TOPS (tera-operations per second), but TOPS figures are not a like-for-like comparison: a chip's headline peak says little about the throughput it actually sustains on a real model.
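The roofline model is one standard way to see why peak TOPS alone can mislead: sustained throughput is capped both by peak compute and by how fast memory can feed the compute units, which is memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The Python sketch below applies it to two hypothetical chips; every figure is an illustrative assumption, not a measurement of any real product.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline estimate of sustained throughput, in TOPS."""
    # Throughput memory can sustain: bytes per second delivered * ops per byte.
    memory_bound_tops = bandwidth_gb_s * 1e9 * ops_per_byte / 1e12
    # The workload runs at whichever limit it hits first.
    return min(peak_tops, memory_bound_tops)


# Hypothetical chips running the same model at 50 ops/byte.
chip_a = attainable_tops(peak_tops=4.0, bandwidth_gb_s=8.0, ops_per_byte=50)
chip_b = attainable_tops(peak_tops=2.0, bandwidth_gb_s=32.0, ops_per_byte=50)
print(f"chip A: {chip_a:.1f} TOPS, chip B: {chip_b:.1f} TOPS")
# prints: chip A: 0.4 TOPS, chip B: 1.6 TOPS
```

Under these assumed figures, the chip with half the peak TOPS but four times the memory bandwidth sustains four times the throughput, because both chips are memory-bound on this workload.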

“The memory system handling, DMA configuration, software compression of weights, intelligence, and decompression all matter significantly. Focusing only on the box numbers is similar to focusing solely on the megapixel or gigapixel of a phone's camera without considering other factors. This tunnel vision is an error for the industry to make.”

- Rahul Venkatram, Senior Product Manager, Machine Learning, Arm

Power consumption is another vital aspect, particularly for devices with tight power budgets such as IoT devices. Optimizing hardware for low power consumption is crucial for prolonged operation without frequent battery replacement or recharging. Low-power chips, hardware accelerators, and intelligent power management systems all help Edge AI systems keep power consumption low. Henrik Flodell, Director of Product Marketing at Alif Semiconductor, emphasized in conversation that “reducing power consumption is a key parameter users need to consider when they pick suitable devices for edge systems that will use ML.”

Alif's Autonomous Intelligent Power Management (aiPM™) technology addresses power consumption directly. Based on instantaneous processing demand and the use case, it powers on only the sections of an SoC that are needed, when they are needed, and powers them off when they are not. It also uses two processing regions within the MCU to speed up machine learning: an always-on, high-efficiency region continuously senses the environment, while a high-performance region wakes up when needed to execute heavy workloads and then returns to sleep. This approach lets powerful devices behave like low-power MCUs when necessary, so smart IoT devices can run longer on smaller batteries.
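The control flow behind this kind of two-region design can be sketched in a few lines. The Python below is a simulation under stated assumptions, not Alif's aiPM implementation or API: the hypothetical `PowerDomain` class stands in for a hardware power domain, a simple amplitude threshold stands in for the always-on region's cheap detector, and the hypothetical `heavy_model` callable stands in for the workload the high-performance region runs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class PowerDomain:
    """Hypothetical stand-in for a power-gated region of an SoC."""
    name: str
    powered: bool = False
    wakeups: int = 0

    def power_on(self) -> None:
        if not self.powered:
            self.powered = True
            self.wakeups += 1

    def power_off(self) -> None:
        self.powered = False


def process_stream(samples: List[int], threshold: int,
                   heavy_model: Callable[[int], int]) -> Tuple[List[int], int]:
    """Screen every sample cheaply; wake the high-performance region only when needed."""
    hp_region = PowerDomain("high-performance")
    results = []
    for sample in samples:
        # Always-on region: a cheap check it can afford to run continuously.
        if abs(sample) < threshold:
            continue
        hp_region.power_on()                  # wake the high-performance region
        results.append(heavy_model(sample))   # run the heavy workload
        hp_region.power_off()                 # return it to sleep immediately
    return results, hp_region.wakeups


# Hypothetical sensor samples; only 9 and 12 cross the threshold.
results, wakeups = process_stream([1, 9, 0, 12, 3], threshold=5, heavy_model=lambda x: x * 10)
print(results, wakeups)  # [90, 120] 2
```

In this simulation the high-performance region is powered for two of five samples. On real hardware the savings also depend on how often events occur and on the energy cost of each wake-up transition, which the sketch ignores.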

“Systems that have to rely on CPU-bound processing tend to be power hungry, as the inference operations would take longer to finish, and the controller would run at 100% for much longer periods of time. Well-designed modern platforms offload the ML processing on accelerators designed for such workloads and can, therefore, finish faster and go to sleep sooner.”

- Henrik Flodell, Director of Product Marketing, Alif Semiconductor
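The quote describes a race-to-sleep effect, which a back-of-the-envelope average-power calculation makes concrete. The Python sketch below compares a hypothetical CPU-only device with a hypothetical accelerator-equipped one, each running one inference per second; the power and timing figures are assumptions chosen for illustration, not measurements of any Alif or other product.

```python
def average_power_mw(active_mw: float, active_ms: float, sleep_mw: float, period_ms: float) -> float:
    """Average power over one period: an active inference burst, then sleep."""
    if not 0 <= active_ms <= period_ms:
        raise ValueError("active time must fit inside the period")
    sleep_ms = period_ms - active_ms
    # Energy spent awake plus energy spent asleep (mW * ms), spread over the period.
    return (active_mw * active_ms + sleep_mw * sleep_ms) / period_ms


# Assumed figures: one inference per 1000 ms, 0.5 mW sleep power for both designs.
cpu_only = average_power_mw(active_mw=50, active_ms=200, sleep_mw=0.5, period_ms=1000)
with_accel = average_power_mw(active_mw=80, active_ms=20, sleep_mw=0.5, period_ms=1000)
print(f"CPU-only: {cpu_only:.2f} mW, with accelerator: {with_accel:.2f} mW")
# prints: CPU-only: 10.40 mW, with accelerator: 2.09 mW
```

Although the accelerator draws more power while active in this example, it finishes ten times sooner, so average power falls from about 10.4 mW to about 2.1 mW.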

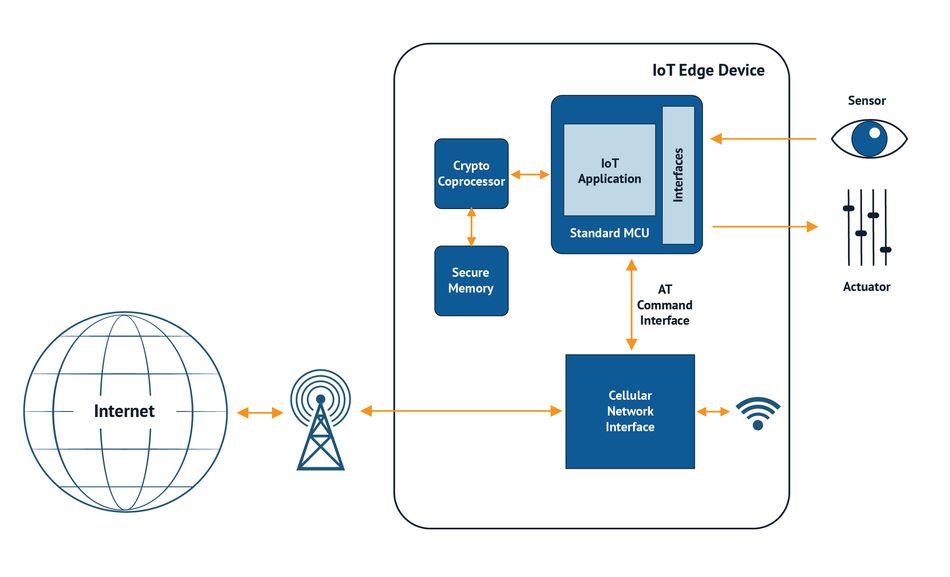

Seamless connectivity is also essential for Edge AI systems, which need hardware that can exchange data smoothly with other devices and cloud-based platforms. The hardware should support a range of connectivity options, including Wi-Fi, Bluetooth, and cellular networks. Reliable, efficient links let data reach the devices and services that process and analyze it without delay, which contributes to the overall performance of the Edge AI system.

Software Considerations

Selecting software with the right characteristics is crucial for an Edge AI system to operate well, and several factors determine whether a given package meets the system's requirements. Compatibility is a major one: the software should run efficiently on the target hardware so that data can be processed in real time, and it should be compatible with the other software components in the system, such as operating systems, libraries, and frameworks, so that everything integrates seamlessly.

Scalability is another vital aspect of Edge AI system design. Because Edge AI systems often handle large amounts of data, the software must keep up with the real-time processing and analysis demands that come with it. Scalable software lets the system accommodate growing data volumes, processing requirements, and user requests without compromising performance.

Accuracy is just as important. Edge AI systems rely on accurate data analysis and processing to provide meaningful insights and support decision-making, so the software must analyze and process data accurately and reliably.

Interpretability, or the software's ability to explain its results comprehensibly, plays a crucial role in Edge AI system design. Interpretability allows users to understand the system's decision-making process and provides insights into data analysis. This aspect becomes particularly significant in applications where the decisions made by the Edge AI system have substantial implications, such as healthcare and finance. The software used in Edge AI systems should prioritize interpretability, presenting results in a clear and understandable manner.
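For the simplest models, an explanation can be exact. The Python sketch below assumes a hypothetical linear risk score over three illustrative, pre-scaled health features and reports each feature's contribution (weight times value); together with the bias, the contributions add up exactly to the score. Deep networks need approximate attribution methods such as SHAP or integrated gradients instead, so this sketch only illustrates what a per-feature explanation looks like.

```python
from typing import Dict, List, Tuple


def explain_linear(weights: Dict[str, float], bias: float,
                   features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return a linear model's score and its per-feature contributions, largest first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank by magnitude so the most influential features are listed first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


weights = {"heart_rate": 0.5, "spo2": -2.0, "temp_delta": 4.0}  # hypothetical model
patient = {"heart_rate": 2.0, "spo2": 1.0, "temp_delta": 0.5}   # hypothetical, pre-scaled inputs
score, ranked = explain_linear(weights, bias=0.25, features=patient)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"  {name:>10}: {contribution:+.2f}")
```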

Integration Considerations

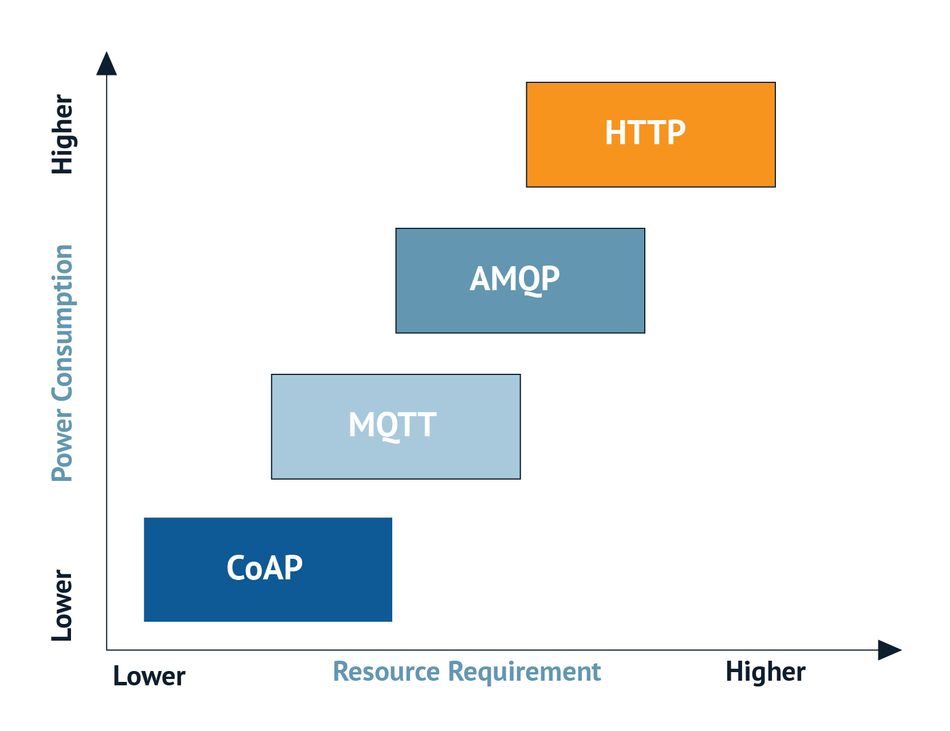

Integrating Edge AI with other systems is essential for seamless and efficient operations. Because Edge AI systems generate large amounts of data that must be processed, analyzed, and shared with other systems, choosing the right communication protocol is essential. Edge AI systems use a variety of protocols to communicate with other systems, and the one chosen must be compatible with the systems being integrated.

Several commonly used protocols in Edge AI system integration include MQTT, CoAP, and HTTP. MQTT is a lightweight protocol widely utilized in IoT systems due to its low overhead and power consumption. CoAP, on the other hand, is designed specifically for resource-constrained networks, making it suitable for Edge AI systems. HTTP, a widely used protocol in web-based applications, is also suitable for Edge AI systems that communicate with web-based applications. The choice of communication protocol should align with the requirements of the Edge AI system and the systems being integrated.
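MQTT's low overhead is visible at the byte level. The Python sketch below builds an MQTT 3.1.1 PUBLISH packet at QoS 0 by hand, following the packet layout in the MQTT specification: one byte for packet type and flags, a variable-length "remaining length" field, a length-prefixed topic name, then the payload. It is for illustration only; real deployments would use a maintained client library such as Eclipse Paho, and the topic name and payload here are hypothetical.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit means 'more bytes follow'."""
    if not 0 <= n <= 268_435_455:  # maximum value that fits in four bytes
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte = n % 128
        n //= 128
        if n > 0:
            byte |= 0x80  # set the continuation bit
        out.append(byte)
        if n == 0:
            return bytes(out)


def mqtt_publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build an MQTT 3.1.1 PUBLISH packet with QoS 0 (no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: 2-byte big-endian topic length followed by the topic name.
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    # Fixed header: 0x30 = PUBLISH, DUP=0, QoS=0, RETAIN=0.
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


packet = mqtt_publish_qos0("s/1", b"\x00\x01\x02\x03")  # hypothetical 4-byte reading
print(len(packet), packet.hex())
```

Publishing a 4-byte reading to the 3-byte topic `s/1` yields an 11-byte packet, so the MQTT framing, including the topic name, adds 7 bytes (not counting TCP/IP headers or the one-time CONNECT exchange).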

In addition to communication protocols, data formats are another critical consideration when integrating Edge AI with other systems. Edge AI systems generate data in various formats, and it is vital to ensure compatibility between the data generated by the Edge AI system and the systems it interacts with. Data formats must be aligned and properly transformed or translated to facilitate seamless data exchange and interoperability between the systems.
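A common translation step is decoding a compact binary record produced on the device into JSON for a web or cloud system. The Python sketch below assumes a hypothetical 11-byte record layout (little-endian timestamp, sensor ID, anomaly score, and class ID); the field names and layout are illustrative, not a standard format.

```python
import json
import struct

# Hypothetical on-device record, little-endian with no padding:
# uint32 timestamp (s), uint16 sensor ID, float32 anomaly score, uint8 class ID.
RECORD = struct.Struct("<IHfB")


def to_json(raw: bytes) -> str:
    """Translate one binary record into a JSON document for downstream systems."""
    timestamp, sensor_id, score, class_id = RECORD.unpack(raw)
    return json.dumps({
        "timestamp": timestamp,
        "sensor_id": sensor_id,
        "anomaly_score": round(score, 4),  # float32 -> trimmed decimal for readability
        "class_id": class_id,
    })


raw = RECORD.pack(1_700_000_000, 7, 0.75, 3)  # what the device might send
print(len(raw), to_json(raw))
```

Keeping the compact binary form on the constrained link and converting to JSON at a gateway is one way to satisfy both sides; the reverse direction would use `RECORD.pack` on fields parsed from JSON.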

Global technology provider Arm has approached integration by thinking holistically about where and how to deploy processing. David Maidment, Senior Director, Secure Device Ecosystem at Arm, explained the approach as follows: “We've landscaped our approach in two directions. The first is scaling down traditional cloud-native workloads, such as microservers. The second direction is taking IoT and embedded devices and making them connected, updateable, and secure. We're seeing these two trends converge, with higher-end, higher-power devices coming down in cost and power, and lower-end, lower-power devices being pushed up in performance capabilities.”

This results in two distinct software ecosystems. The first is the traditional cloud software ecosystem, with portable workloads that can run on servers with offload and acceleration. The second centers on the more traditional Linux box: a device originally designed for a single function, such as a router or gateway, that is now being developed into a connected, general-purpose box whose capabilities can change over its lifetime as new applications and AI models are deployed.

Security Considerations

Security is a critical factor when integrating Edge AI with other systems. Because Edge AI systems generate large amounts of data, including sensitive information, protecting that data from unauthorized access is imperative. Secure protocols such as TLS (the successor to the now-deprecated SSL) and SSH should be used to establish secure channels for data transmission between the Edge AI system and other systems. These protocols encrypt and authenticate data in transit, preventing eavesdropping and tampering.
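In Python, for example, the standard library's `ssl` module can set up a client-side TLS channel of the kind described above. The sketch below builds a context that verifies the server's certificate and hostname and refuses protocol versions older than TLS 1.2. The host name and CA file in the usage comment are placeholders, and port 8883 is assumed because it is the IANA-registered port for MQTT over TLS.

```python
import socket
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context with certificate and hostname verification."""
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(cafile=ca_file)
    # SSL and TLS 1.0/1.1 are deprecated; require TLS 1.2 or newer.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_tls_connection(host: str, port: int, ctx: ssl.SSLContext) -> ssl.SSLSocket:
    """Open a TCP connection and wrap it in TLS (server_hostname enables SNI and hostname checks)."""
    raw = socket.create_connection((host, port), timeout=10)
    try:
        return ctx.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # do not leak the plain TCP socket if the handshake fails
        raise


# Usage (placeholder host and CA file, not contacted here):
# ctx = make_client_tls_context("ca.pem")
# with open_tls_connection("broker.example.com", 8883, ctx) as tls:
#     tls.sendall(b"...")
```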

To reinforce security, access controls and authentication mechanisms must be implemented. This ensures that only authorized users can access the data generated by the Edge AI system, safeguarding it from unauthorized individuals. Secure software development practices, such as regular code reviews and vulnerability assessments, should also be followed to identify and mitigate potential security risks in the integrated systems.
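One lightweight authentication mechanism for device messages is an HMAC: the device and the receiving system share a secret key, and the receiver accepts a message only if its tag verifies. The Python sketch below uses the standard library's `hmac` and `hashlib` modules; the per-device key is a hypothetical placeholder that would in practice be provisioned and stored securely. An HMAC proves origin and integrity but does not hide the data, and a real protocol also needs a counter or timestamp to reject replayed messages.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag for a message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Accept the message only if its tag matches the one we compute."""
    # compare_digest compares in constant time, avoiding timing side channels.
    return hmac.compare_digest(sign(key, message), tag)


device_key = b"hypothetical-per-device-secret"
reading = b'{"sensor_id": 7, "anomaly_score": 0.75}'
tag = sign(device_key, reading)
print(verify(device_key, reading, tag))                 # True
print(verify(device_key, reading + b" tampered", tag))  # False
```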

Encryption is pivotal in securing Edge AI systems and should not be overlooked. By transforming sensitive data into ciphertext that can only be read with the right key, encryption ensures that even if the data is compromised, it remains unintelligible to unauthorized parties. Because Edge AI systems often process sensitive data such as personal health information, financial records, and valuable intellectual property, encrypting this data is crucial to preventing unauthorized access, theft, or manipulation.
