Edge machine vision cameras powering industry innovation

The edge machine vision sector is growing rapidly, but developers must have access to the right hardware if they are to keep up with demand and stay ahead of their competitors.

Figures compiled by ABI Research[1] show that the machine vision (MV) market will be valued at $36 billion by 2027, up from $21.4 billion last year. To meet this rising demand, the sector must be able to develop and test MV systems at speed.

Inadequate technology is a formidable barrier to progress, with developers forced either to create their own MV cameras or to integrate complex MV cameras into the systems they’re developing. Off-the-shelf, ‘plug and play’ cameras could be the ideal way to keep progress moving, allowing developers to create, test and deploy MV systems with fewer delays, lower costs and less wasted effort.

A vision of the future

MV is one of the most crucial components of Industry 4.0, and increasingly of consumer products and the public safety and security sectors the world over.

Machine learning (ML) and deep learning (DL) continue to be big enablers of industry growth, allowing edge devices to perform increasingly complex tasks. Another driver of expansion is the growing number of open-source toolkits available to developers, which has led to the rise of a multitude of startups.

Large companies such as NXP, NVIDIA and Qualcomm have also been developing processors that run ML models directly on cameras[2], rather than in the cloud. To speed the process along, they have created solutions ranging from ML processors to ML development environments. But for all of these things to work, they need MV cameras of the highest quality.

Challenges for developers

Developers can face a range of obstacles when trying to create MV systems, from a lack of adequate training data to project plans that don’t account for problems that arise along the way.

But perhaps the biggest challenges are access to adequate hardware and a lack of time.

If developers don’t have access to the right components, then even the best coding and project management won’t create a solution that works well. Physically constructing a system with an integrated MV camera can also be highly challenging from an engineering standpoint. It can be a part of the process that developers simply don’t want or need.

The interconnectivity of edge technology, while a strength, can also be a huge weakness. If one part of the system fails, such as the MV camera, then the whole system fails. For MV systems to work properly, there can’t be a weak link: sensors and cameras all have to work together in harmony.

What are the solutions?

Being able to integrate high-quality MV cameras into a system helps cut development time, because the hardware can go into a new MV system straight off the shelf.

This removes a major headache for developers because it means they don’t have to create their own cameras, or resort to using one that requires heavy integration. This cuts down on potential time bottlenecks and allows the developer to concentrate on their part, with the camera hardware all taken care of.

MaxLab: Edge AI camera

The Edge AI camera designed by MaxLab is one such device helping to ease the burden on MV developers. It is designed specifically for a plug-and-play workflow and has numerous features that make it suitable for rapid product development, such as night vision and motion detection.

For added simplicity, it features a no-code configuration UI for easy project setup and fine sensor tuning. The MaxLab Edge AI camera can also integrate seamlessly with major IoT and AI platforms: AWS IoT, Edge Impulse and ThingsBoard. It also offers generic configurable integrations, HTTP and MQTT, accessible right from the embedded web server.
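
On the receiving end, a generic HTTP or MQTT integration usually comes down to a small handler that decodes whatever the camera sends. The sketch below assumes the camera posts a JSON event; the field names (`device_id`, `label`, `confidence`) and the `parse_event` helper are hypothetical, since the real payload format is not described in this article.

```python
import json
from dataclasses import dataclass


@dataclass
class DetectionEvent:
    device_id: str
    label: str
    confidence: float


def parse_event(body: bytes) -> DetectionEvent:
    """Decode one JSON event. The field names are hypothetical."""
    data = json.loads(body.decode("utf-8"))
    confidence = float(data["confidence"])
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return DetectionEvent(str(data["device_id"]), str(data["label"]), confidence)


# Illustrative payload, as an HTTP body or MQTT message might carry it.
sample = b'{"device_id": "cam-01", "label": "person", "confidence": 0.91}'
event = parse_event(sample)
print(event)
```

The same function could sit behind either transport, because both deliver the event as a block of bytes.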

Other key features are its onboard MCU and sensor interface, which allow for edge AI processing. Together these enable the camera to perform real-time image analysis and decision-making on the device itself, without the need for a separate computer or cloud system.

The Edge AI camera at a glance:

| Feature | Specification |
| --- | --- |
| Onboard MCU | ESP32-S3 |
| Sensor Interface | DVP |
| Stock Camera Sensor | OV2640 |
| Image Size | 0.3MP/2MP/3MP |
| Image Formats | RGB, JPEG |
| Frame Rate | Up to 15 FPS |
| Night Vision | Yes |
| Sensors | Light Sensor, Passive IR (Motion Detection) |
| Connectivity | WiFi, BLE |
| Memory | 8MB Flash, 512 kB + 8MB RAM |
| Software | TF-Lite Micro, esp-dl |
| Interfaces | SPI, UART |
| Battery Connector | JST-PH (2mm pitch) |
| Power Features | Programmable External RTC |
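
To put the memory and frame-rate figures in context, a rough calculation shows how large an uncompressed frame would be at each listed image size. This is a sketch under stated assumptions: the pixel dimensions for 0.3MP, 2MP and 3MP are the common VGA, UXGA and QXGA sizes, the RGB format is taken to be RGB565 at 2 bytes per pixel, and the 512 kB and 8MB RAM figures are treated as two separate memory regions. None of these details is confirmed by the specification above.

```python
# Assumed pixel dimensions for the listed image sizes (not given in the spec).
RESOLUTIONS = {
    "0.3MP (VGA)": (640, 480),
    "2MP (UXGA)": (1600, 1200),
    "3MP (QXGA)": (2048, 1536),
}
BYTES_PER_PIXEL = 2  # assumes RGB565; 24-bit RGB would need 3

SMALL_RAM_BYTES = 512 * 1024       # the 512 kB figure from the spec
LARGE_RAM_BYTES = 8 * 1024 * 1024  # the 8MB figure from the spec


def frame_bytes(width: int, height: int, bpp: int = BYTES_PER_PIXEL) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bpp


for name, (w, h) in RESOLUTIONS.items():
    size = frame_bytes(w, h)
    print(
        f"{name}: {size / 1e6:.2f} MB, "
        f"fits in 512 kB: {size <= SMALL_RAM_BYTES}, "
        f"fits in 8MB: {size <= LARGE_RAM_BYTES}"
    )

# Time available to process each frame at the listed maximum of 15 FPS.
print(f"Per-frame budget at 15 FPS: {1000 / 15:.1f} ms")
```

Under these assumptions even a VGA frame (614,400 bytes) is larger than the 512 kB region, so uncompressed frames would need the 8MB region, or the JPEG format, to fit. Model weights and working buffers also compete for the same memory, so the real headroom would be smaller.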

Applying the Edge AI camera

The key features of the camera are its onboard MCU and sensor interface, which allow for edge AI processing. This means that the camera can perform real-time image analysis and decision-making on the device itself, without the need for a separate computer. This makes it an ideal choice for security and surveillance, where fast response times are crucial.

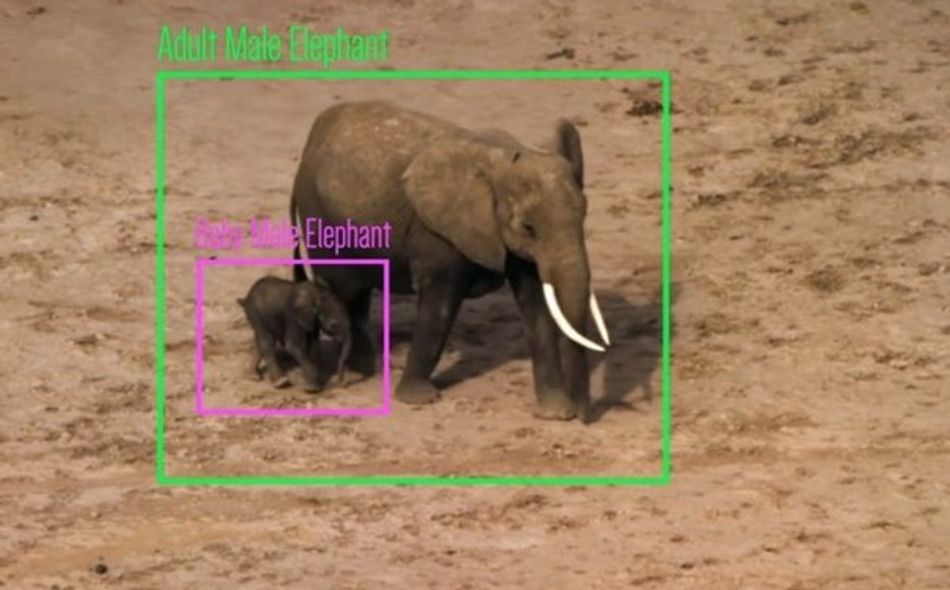

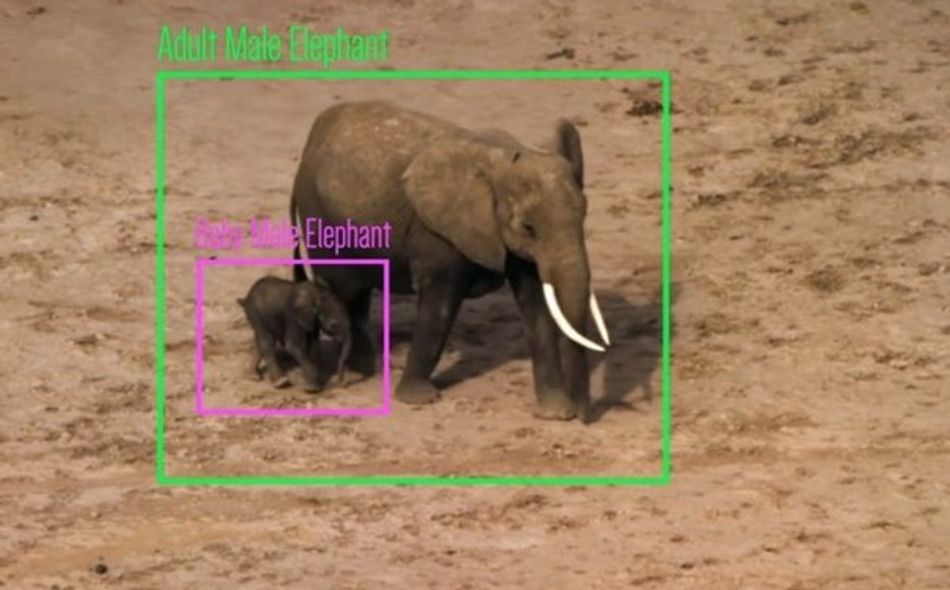

Wildlife Monitoring

The AI camera is a valuable tool for wildlife monitoring and invasive species detection. Its night vision capabilities and motion sensors make it ideal for capturing detailed images of animals in low-light conditions. The camera can be used both for monitoring, where researchers use remote devices to carry out population surveys, and in “smart traps”, where captured animals are identified and then either detained for collection (if they are invasive) or released (when identified as endemic to the area).

The Edge AI camera could be used to monitor endangered wildlife. Image credit: VMLCI.
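
The “smart trap” decision can be sketched in a few lines. This is an illustration only, not MaxLab’s implementation: the species list, the confidence threshold and the `trap_action` helper are all hypothetical, and releasing an animal when the model is unsure is an assumed policy rather than one stated here.

```python
from enum import Enum


class Action(Enum):
    DETAIN = "detain"
    RELEASE = "release"


# Hypothetical species list for illustration only.
INVASIVE_SPECIES = {"stoat", "rat"}


def trap_action(label: str, confidence: float, threshold: float = 0.8) -> Action:
    """Detain only when the model confidently identifies an invasive species."""
    if label in INVASIVE_SPECIES and confidence >= threshold:
        return Action.DETAIN
    # Endemic or uncertain identifications are released (an assumed policy).
    return Action.RELEASE


print(trap_action("stoat", 0.93))
print(trap_action("kiwi", 0.97))
print(trap_action("stoat", 0.55))
```

In practice the label and confidence would come from the model running on the camera, and the threshold would be tuned against field data.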


Agriculture Technology

In agritech, the built-in edge AI capabilities can be used to analyze crop growth and detect potential issues such as pests or disease. Its motion sensors can also be used to monitor wildlife that may be damaging crops. Food security is a critical topic as global populations increase, and efficient, automated farming will be crucial to achieving it.

Robotics

The rapidly expanding world of robotics offers rich opportunities for the Edge AI camera. With its advanced sensor interface and connectivity options, it can be used as a sensor and decision-making unit on a robot, providing real-time visual data, performing visual recognition tasks and even controlling the robot’s actions.

Invaluable tool for developers

The growth of edge computing and MV will only continue, leaving the field wide open for continued innovation and development of new solutions.

But being able to develop, test and trial new MV functionalities requires the right equipment which can be quickly deployed and integrated.

Solutions such as MaxLab’s Edge AI camera can perform this role, reducing those time bottlenecks and allowing developers to focus on innovation.

For more information visit MaxLab.

About the sponsor: MaxLab Io.

We specialize in bringing new hardware products to market by providing comprehensive development services. Our team has a wealth of knowledge in the field of product development and can help guide your project from concept to market launch. We understand the importance of delivering high-quality products on time and within budget, and we are dedicated to working closely with companies to ensure their projects succeed. We have a proven track record of delivering hardware products that meet our clients' expectations.

References:

[1] https://www.abiresearch.com/press/commercial-and-industrial-machine-vision-market-to-reach-us36-billion-by-2027/

[2] https://www.abiresearch.com/press/commercial-and-industrial-machine-vision-market-to-reach-us36-billion-by-2027/