TinyMAN, a reinforcement-learning approach to energy harvesting management, could mean your wearables never need charging again

Designed for "energy neutral operation" of resource-constrained wearable devices, tinyMAN learns how to eke out the power available from an energy harvesting system to maximize utility without ever needing a manual charge.

Capable of running on microcontrollers, tinyMAN offers improved utility over rival state-of-the-art energy management approaches.

Deploying devices for the Internet of Things, particularly wearable devices, brings with it one major bottleneck: The need to keep things powered. Energy harvesting — whether from light, radio signals, heat, movement, or something else — is a solution, but only a partial one. The problem: the energy you harvest isn’t always going to be available, and removing the device to charge it isn’t always possible and is never desirable.

The solution proposed by a trio working at the University of Wisconsin-Madison: tinyMAN, a reinforcement learning system designed to manage the energy usage of a device according to available harvesting sources, and lightweight enough to deploy on low-end microcontrollers with limited resources.

Prediction-free

Controlling the power usage of a device according to the energy available (making it take measurements or transmit less frequently when it needs to conserve energy, for example) is by no means a new concept. Even using machine learning to optimize the energy management isn’t new: Previous efforts have seen success by forecasting the availability of harvested energy, predicting when it is best to ramp up operations and when a lighter touch is required.
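To make the idea concrete, here is a minimal Python sketch of budget-driven duty cycling. It is not taken from tinyMAN or any of the cited work: the function name, the integer microjoule units, and the one-hour period are all assumptions chosen for illustration.

```python
def sampling_interval_s(budget_uj, cost_per_sample_uj, period_s=3600, min_interval_s=1.0):
    """Seconds to wait between samples so sampling fits the period's energy budget.

    Hypothetical helper: energies are integer microjoules to avoid
    floating-point surprises in the floor division below.
    Returns None when not even one sample is affordable.
    """
    if cost_per_sample_uj <= 0:
        raise ValueError("cost_per_sample_uj must be positive")
    affordable_samples = budget_uj // cost_per_sample_uj
    if affordable_samples < 1:
        return None  # conserve energy: skip sampling for this period
    # Spread the affordable samples evenly, but never sample faster than the floor.
    return max(min_interval_s, period_s / affordable_samples)


# Halving the budget doubles the gap between measurements.
plentiful = sampling_interval_s(1_000_000, 10_000)
scarce = sampling_interval_s(500_000, 10_000)
print(plentiful, scarce)
```

With these made-up figures the device samples every 36 seconds when energy is plentiful and every 72 seconds when the budget is halved.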

The system proposed by Toygun Basaklar and colleagues, however, takes a different approach. “TinyMAN does not rely on forecasts of the harvested energy,” the team writes in the abstract to their paper on the topic, “making it a prediction-free approach.”

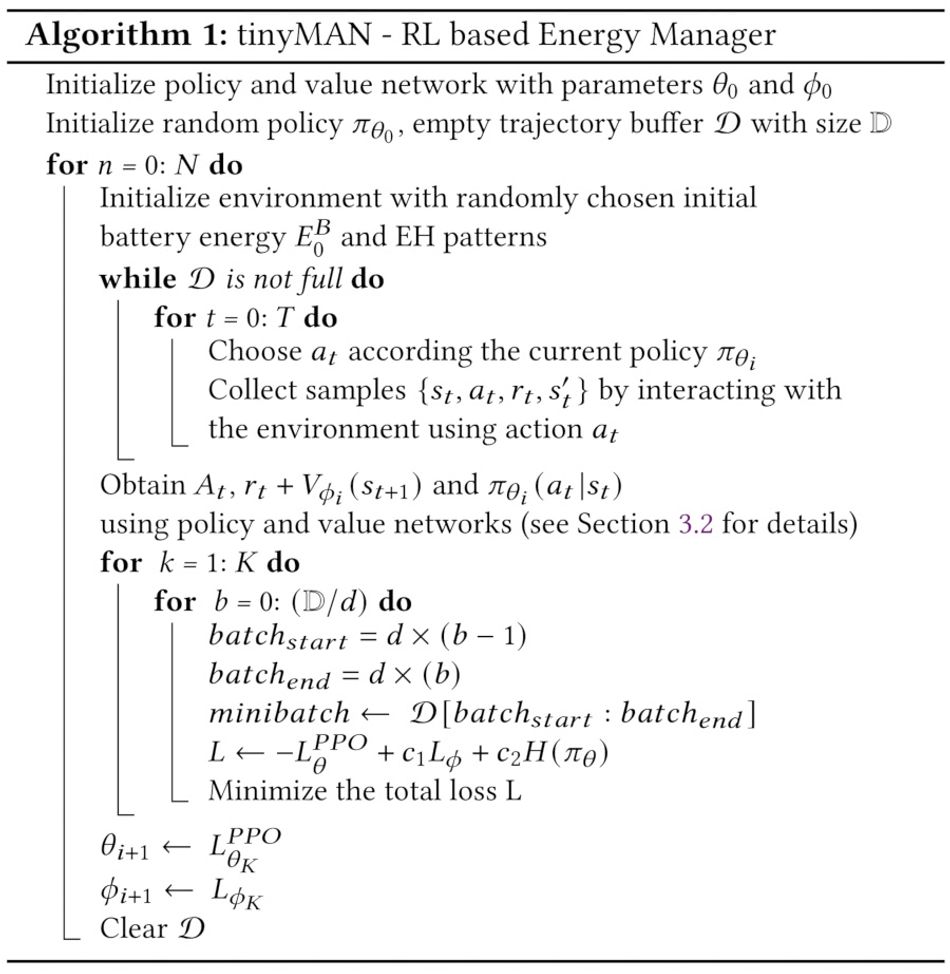

Instead, tinyMAN takes the current charge level of the device’s battery and the amount of energy currently being harvested as its state, then uses a proximal policy optimization (PPO) algorithm to allocate the harvested energy throughout the day. Training takes place in an environment built around light and motion energy harvesting and the American Time Use Survey.
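The loop this describes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the team’s environment: the lossless battery model, the joule units, and `placeholder_policy` (a hand-written rule standing in for the trained PPO policy) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class State:
    battery_j: float    # energy currently stored in the battery
    harvested_j: float  # energy harvested during the current interval


def step(state, allocation_j, next_harvest_j, capacity_j):
    """Advance one interval: spend the allocation, bank whatever harvest is left."""
    available = state.battery_j + state.harvested_j
    # The device cannot spend a negative amount or more than it has.
    spent = min(max(allocation_j, 0.0), available)
    # Assumed lossless battery; anything above capacity is discarded.
    battery = min(capacity_j, available - spent)
    return State(battery, next_harvest_j), spent


def placeholder_policy(state, capacity_j, gain=0.25):
    """Stand-in for the learned policy (assumption): spend the harvest,
    nudged by how far the battery sits from half full."""
    correction = gain * (state.battery_j - 0.5 * capacity_j)
    return max(0.0, state.harvested_j + correction)


def simulate(harvest_trace_j, battery_j, capacity_j):
    """Run the policy over a harvest trace; return (spent, battery) per interval."""
    state = State(battery_j, harvest_trace_j[0])
    log = []
    for next_harvest in list(harvest_trace_j[1:]) + [0.0]:
        allocation = placeholder_policy(state, capacity_j)
        state, spent = step(state, allocation, next_harvest, capacity_j)
        log.append((spent, state.battery_j))
    return log


print(simulate([10.0, 0.0], battery_j=50.0, capacity_j=100.0))
```

Note that the policy only ever sees the present battery level and the present harvest. Nothing in the loop looks ahead, which is what makes an approach of this shape prediction-free.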

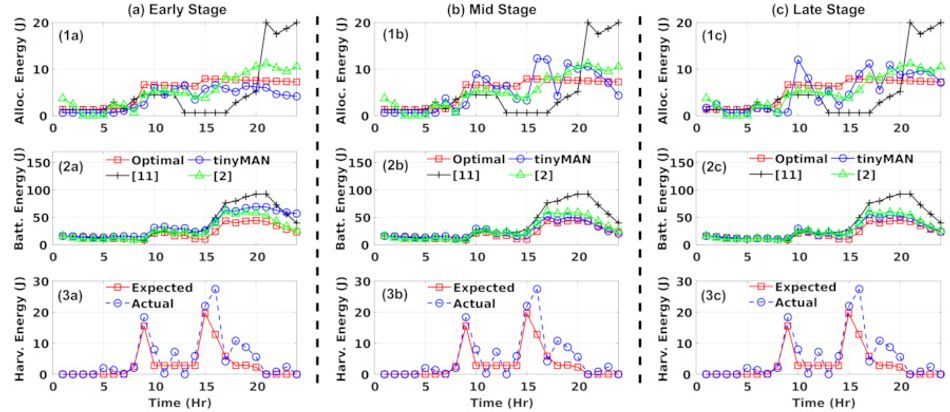

During the initial stages of training, the team notes, tinyMAN performs conservatively. As training progresses, however, it begins to learn a generalized representation of energy harvesting patterns, increasing energy allocations as the available harvested energy increases and decreasing them should the available energy drop. The result is a trainable management system which makes no predictions, and whose performance the team describes as “oscillating around the optimal values” provided by a theoretically perfect management system.

Efficient

A key design consideration in tinyMAN is efficiency: The best energy management system in the world is of no use whatsoever if it consumes more energy than it saves. The team’s use of proximal policy optimization (PPO) works in its favor here, using less memory than alternative algorithms which could deliver similar optimization performance.
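For readers unfamiliar with PPO, the heart of the algorithm is a clipped objective which stops any single training update from moving the policy too far. The sketch below shows the textbook form in plain Python. It is not the team’s training code, and the default `epsilon` of 0.2 is a commonly used value assumed here, not one taken from the paper.

```python
def ppo_clip_objective(ratios, advantages, epsilon=0.2):
    """Mean clipped surrogate objective, to be maximized during training.

    ratios:     new-policy probability divided by old-policy probability, per sample
    advantages: how much better than expected each sampled action turned out
    """
    if not ratios or len(ratios) != len(advantages):
        raise ValueError("ratios and advantages must be non-empty and equal in length")
    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        # Keep the ratio inside [1 - epsilon, 1 + epsilon].
        clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Taking the minimum makes the objective a pessimistic bound, so the
        # policy gains nothing from pushing the ratio past the clip range.
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(ratios)


# A good action whose probability rose by 50 per cent is only credited up to the clip limit.
print(ppo_clip_objective([1.5], [1.0]))
```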

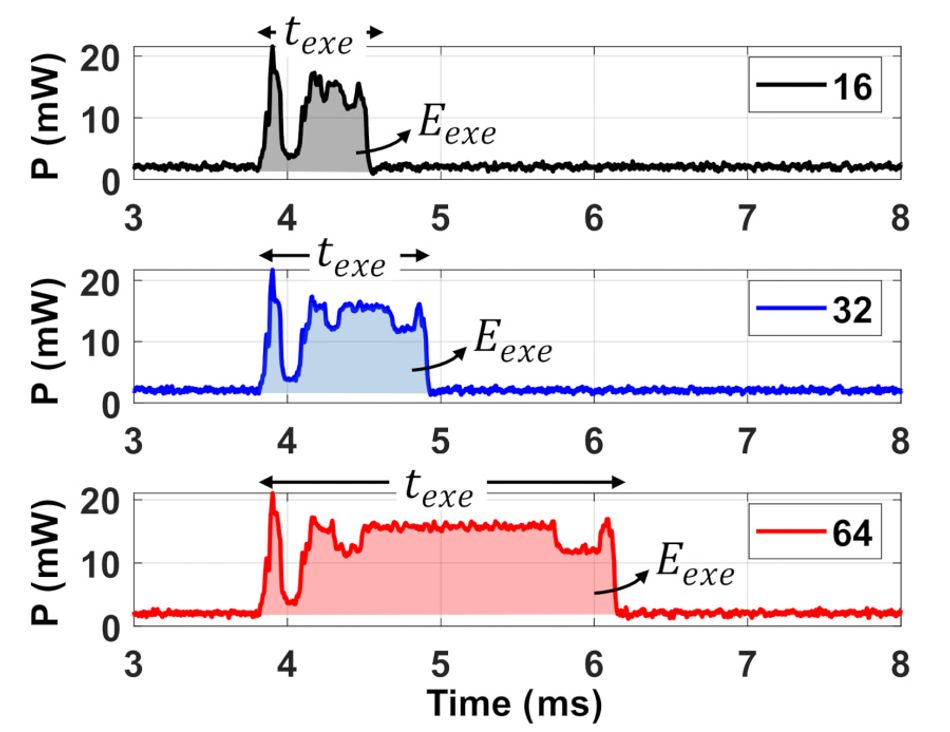

The system is implemented in Python, then deployed using TensorFlow Lite for Microcontrollers to a prototype wearable: a flexible device with a small 12mAh battery, integrated energy-harvesting capabilities, and a Texas Instruments CC2652R microcontroller with a single 48MHz Arm Cortex-M4F core, 80kB of static RAM, and 352kB of flash storage. There, tinyMAN consumes 5kB of RAM and 86kB of flash storage when using a model size of 64, or just 69kB of flash when using a model size of 16, at the cost of a seven per cent drop in normalized utility.
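To put those footprints in context, a few lines of Python work out the share of the microcontroller’s memory each configuration occupies. The figures are those quoted above; the helper name is made up for this example.

```python
RAM_TOTAL_KB = 80     # CC2652R static RAM
FLASH_TOTAL_KB = 352  # CC2652R flash storage


def share_percent(used_kb, total_kb):
    """Percentage of a memory region taken up by used_kb."""
    return 100.0 * used_kb / total_kb


ram_share = share_percent(5, RAM_TOTAL_KB)
flash_share_64 = share_percent(86, FLASH_TOTAL_KB)  # model size 64
flash_share_16 = share_percent(69, FLASH_TOTAL_KB)  # model size 16

print(f"RAM: {ram_share:.1f}%")
print(f"Flash, model size 64: {flash_share_64:.1f}%")
print(f"Flash, model size 16: {flash_share_16:.1f}%")
```

That works out to a little over six per cent of the RAM, and roughly 24 per cent of the flash at a model size of 64 or 20 per cent at a model size of 16, leaving most of the chip free for the application itself.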

The platform isn’t just efficient in its use of memory, either: It offers low execution time and energy usage, consuming just 27.75µJ of energy and 2.36ms of processor time per inference at a model size of 64, dropping to a mere 6.75µJ and 0.75ms at a model size of 16.
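Dividing energy by time gives the average power drawn while an inference is running, which makes the two model sizes easier to compare. The short Python calculation below uses only the figures quoted above; the function name is an assumption for illustration.

```python
def average_power_mw(energy_uj, time_ms):
    """Average power during one inference: microjoules per millisecond is milliwatts."""
    return energy_uj / time_ms


power_64_mw = average_power_mw(27.75, 2.36)  # model size 64
power_16_mw = average_power_mw(6.75, 0.75)   # model size 16
energy_ratio = 27.75 / 6.75                  # energy cost of size 64 relative to size 16

print(f"Model size 64: {power_64_mw:.2f} mW")
print(f"Model size 16: {power_16_mw:.2f} mW")
print(f"Energy per inference, 64 vs 16: {energy_ratio:.2f}x")
```

The average draw is similar in both cases, at roughly 11.8mW and 9mW respectively. The saving comes mostly from the smaller model finishing sooner, which cuts the energy per inference by a factor of about 4.1.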

Despite its lightweight nature, testing of tinyMAN reveals it can deliver results. Experiments detailed in the paper show utility values up to 45 per cent higher than Kansal et al.’s 2007 proposal for energy-harvesting sensor networks, and up to 10 per cent higher than Bhat et al.’s “near-optimal” energy management system for self-powered wearables, detailed in 2017.

“TinyMAN judiciously uses the available energy to maximize the application performance while minimizing manual recharge interventions,” the researchers conclude. “It maximizes the device utilization under dynamic energy harvesting patterns and battery constraints.”

“As future work, we plan to extend our prototype device to log the harvested energy over a day. This will pave the way for adding online learning functionality to tinyMAN.”

The team’s work is to be presented at the tinyML Research Symposium 2022 at the end of March, with a copy of the paper available now under open-access terms on Cornell’s arXiv preprint server. The team has also pledged to make the project available under an open-source license post-presentation.

References

Toygun Basaklar, Yigit Tuncel, and Umit Y. Ogras: tinyMAN: Lightweight Energy Manager using Reinforcement Learning for Energy Harvesting Wearable IoT Devices, tinyML Research Symposium 2022. arXiv:2202.09297 [eess.SP].

Aman Kansal, Jason Hsu, Sadaf Zahedi, and Mani B. Srivastava: Power management in energy harvesting sensor networks, ACM Transactions on Embedded Computing Systems Vol. 6 Iss. 4, 2007. DOI 10.1145/1274858.1274870.

Ganapati Bhat, Jaehyun Park, and Umit Y. Ogras: Near-optimal energy allocation for self-powered wearable systems, IEEE/ACM International Conference on Computer-Aided Design 2017. DOI 10.1109/ICCAD.2017.8203801.