Virtual Outlier Synthesis Framework for Improving Out-of-Distribution Detection Performance

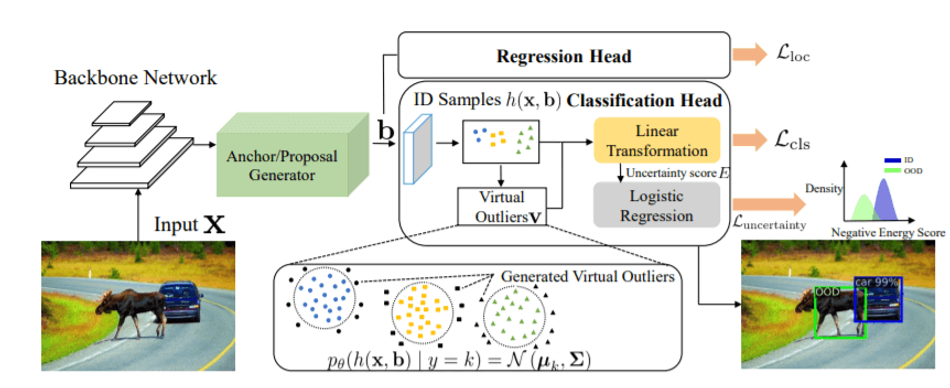

Designed to reduce false predictions on out-of-distribution (OOD) data by adaptively synthesizing virtual outliers that regularize the model's decision boundary during training.

![Virtual Outlier Synthesis Framework showing the: Blue- Objects detected and classified as one of the ID classes. Green- OOD objects detected by VOS. [Image Credit: Research Paper]](https://images.wevolver.com/eyJidWNrZXQiOiJ3ZXZvbHZlci1wcm9qZWN0LWltYWdlcyIsImtleSI6IjAub2RmaDZqOG1qMnBWaXJ0dWFsT3V0bGllclN5bnRoZXNpc0ZyYW1ld29yay5wbmciLCJlZGl0cyI6eyJyZXNpemUiOnsid2lkdGgiOjgwMCwiaGVpZ2h0Ijo0NTAsImZpdCI6ImNvdmVyIn19fQ==)

Virtual Outlier Synthesis framework. Blue: objects detected and classified as one of the ID classes. Green: OOD objects detected by VOS. [Image Credit: Research Paper]

Deep neural networks have become the state-of-the-art approach for object detection and image classification, yet their performance can degrade sharply when they encounter unfamiliar inputs. On out-of-distribution (OOD) data, meaning inputs that differ from the training distribution, these networks often produce overconfident predictions, which can have catastrophic consequences in mission-critical applications. Consider an autonomous driving system: an object detector trained to recognize in-distribution (ID) objects produces high-confidence predictions on those objects, but it may be just as confident when it misclassifies an object it has never seen, a failure mode that raises serious concerns about model reliability.

Existing approaches to this problem can be costly or impractical to deploy in real-world settings. Among post-hoc methods, the softmax confidence score is a common baseline, but it is often observed to be very high even for OOD inputs. To address this, Sun et al. [1] proposed ReAct, a simple activation rectification strategy that significantly improves test-time OOD detection. Approaches that train with real outlier data, however, still face the problem of collecting such data. To remove that dependency, a group of researchers from the Department of Computer Science at the University of Wisconsin-Madison proposed VOS, a novel OOD detection framework that adaptively synthesizes virtual outliers to regularize the model's decision boundary during training.
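
The contrast between the softmax baseline and ReAct can be illustrated with a toy example. The sketch below is not the reference implementation, and all names, weights, and activations are made up: it scores inputs by maximum softmax probability (MSP) and, optionally, truncates penultimate-layer activations at a threshold before the final linear layer, which is the rectification idea behind ReAct. In practice ReAct chooses the threshold from a high percentile of activations on ID data and can be paired with other scores, such as the energy score; MSP is used here only to tie back to the baseline.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def msp_score(logits):
    """Maximum softmax probability, the classic post-hoc baseline.
    Higher values are read as 'more likely in-distribution'."""
    return max(softmax(logits))

def rectified_logits(features, weights, bias, clip=float("inf")):
    """Apply a final linear layer after truncating each penultimate
    activation at `clip` (ReAct-style rectification).
    clip=inf leaves the activations untouched."""
    rectified = [min(h, clip) for h in features]
    return [sum(w * h for w, h in zip(row, rectified)) + b
            for row, b in zip(weights, bias)]

# Hypothetical two-class head over three penultimate features.
WEIGHTS = [[1.0, 1.5, 0.0],
           [0.0, -0.5, 1.0]]
BIAS = [0.0, 0.0]

id_features = [1.0, 1.0, 0.0]   # typical activations
ood_features = [0.2, 6.0, 0.1]  # one abnormally large activation

for clip in (float("inf"), 1.0):
    id_msp = msp_score(rectified_logits(id_features, WEIGHTS, BIAS, clip))
    ood_msp = msp_score(rectified_logits(ood_features, WEIGHTS, BIAS, clip))
    print(f"clip={clip}: ID MSP={id_msp:.3f}, OOD MSP={ood_msp:.3f}")
```

Without clipping, the OOD input's single inflated activation gives it a higher softmax confidence than the ID input. Truncating at 1.0 leaves the ID input unchanged and pulls the OOD confidence below it.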

A novel approach for OOD detection

In the paper "VOS: Learning What You Don't Know by Virtual Outlier Synthesis" [2], the University of Wisconsin researchers present Virtual Outlier Synthesis (VOS), a learning framework designed to optimize both the ID task and OOD detection performance. The framework consists of three components, each addressing one question: how to synthesize virtual outliers, how to leverage the synthesized outliers for effective and efficient model regularization, and how to perform OOD detection at inference time.

Rather than relying on external outlier data, the approach generates virtual outliers to regularize the model. In the framework overview, an input image is passed through a backbone network, such as a CNN or a vision transformer, and an object detection head predicts bounding boxes (b) along with class labels. “We model the feature representation of ID objects as class-conditional Gaussians, and sample virtual outliers v from the low-likelihood region,” the team explains. “The virtual outliers, along with the ID objects, are used to produce the uncertainty loss for regularization.”
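
The sampling step quoted above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions, not the authors' code: features are plain 2-D lists standing in for detector embeddings, all classes share one diagonal covariance, and virtual outliers are obtained by drawing many candidates from a class Gaussian and keeping the least likely ones. The helper names (`fit_class_gaussians`, `sample_virtual_outliers`) and the candidate and keep counts are hypothetical.

```python
import math
import random

def fit_class_gaussians(features, labels):
    """Estimate one mean per class and a single shared diagonal variance.
    Simplifying assumption: diagonal covariance shared across classes."""
    dim = len(features[0])
    by_class = {}
    for x, y in zip(features, labels):
        by_class.setdefault(y, []).append(x)
    means = {y: [sum(col) / len(xs) for col in zip(*xs)]
             for y, xs in by_class.items()}
    # Pool squared deviations of every sample from its own class mean.
    var = [0.0] * dim
    for x, y in zip(features, labels):
        for d in range(dim):
            var[d] += (x[d] - means[y][d]) ** 2
    var = [v / len(features) + 1e-6 for v in var]  # small floor for stability
    return means, var

def log_likelihood(x, mean, var):
    """Log density of x under N(mean, diag(var))."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def sample_virtual_outliers(mean, var, rng, n_candidates=1000, n_keep=10):
    """Draw candidates from one class Gaussian and keep the least likely
    ones, approximating a sample from its low-likelihood region."""
    candidates = [[rng.gauss(m, math.sqrt(v)) for m, v in zip(mean, var)]
                  for _ in range(n_candidates)]
    candidates.sort(key=lambda c: log_likelihood(c, mean, var))
    return candidates[:n_keep]

# Toy 2-D "features" for two ID classes.
rng = random.Random(42)
feats = ([[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
         + [[rng.gauss(5, 1), rng.gauss(5, 1)] for _ in range(200)])
labels = [0] * 200 + [1] * 200

means, var = fit_class_gaussians(feats, labels)
outliers = sample_virtual_outliers(means[0], var, rng)
print(len(outliers), "virtual outliers for class 0")
```

Keeping only the lowest-likelihood draws places the virtual outliers near the fringe of the class cluster rather than at its center, which is what makes them useful as negative examples for regularization.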

Along with this, the team proposes a novel unknown-aware training objective that shapes the uncertainty space between the ID data and the synthesized outliers. During training, VOS optimizes the ID task and the OOD uncertainty regularization simultaneously; at inference time, the uncertainty estimator assigns higher probabilistic scores to ID data than to OOD data, which enables effective OOD detection. Unlike many previously proposed methods, VOS is effective on both object detection and image classification. And whereas existing solutions struggle to separate ID data from OOD data, VOS uses its synthesized outliers to learn a more compact decision boundary between the two.
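
One way to make the training objective and the inference-time score concrete is sketched below. It assumes an energy-style uncertainty score (the log-sum-exp of the classification logits) passed through a learnable affine map and trained with a binary logistic loss that labels ID objects as 1 and virtual outliers as 0. This is a simplified illustration of the idea, not the exact objective from the paper; the function names, the logits, and the hand-picked `w`, `b`, and threshold are hypothetical. In real training, this term would be added to the detection loss with some weight, and `w` and `b` would be learned by gradient descent.

```python
import math

def neg_energy(logits):
    """Negative free energy, log(sum(exp(logits))), computed stably.
    Larger values mean 'more ID-like'."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits))

def softplus(t):
    """Stable log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def sigmoid(t):
    """Stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def id_probability(logits, w, b):
    """Probability of 'in-distribution' from an affine map of -E(x)."""
    return sigmoid(w * neg_energy(logits) + b)

def uncertainty_loss(id_logits, outlier_logits, w, b):
    """Binary logistic loss: ID samples labeled 1, virtual outliers 0.
    Uses -log(sigmoid(t)) = softplus(-t), -log(1 - sigmoid(t)) = softplus(t)."""
    terms = [softplus(-(w * neg_energy(lg) + b)) for lg in id_logits]
    terms += [softplus(w * neg_energy(lg) + b) for lg in outlier_logits]
    return sum(terms) / len(terms)

# Made-up logits: a confident ID object and a diffuse virtual outlier.
id_batch = [[6.0, 0.5, 0.2]]
outlier_batch = [[0.1, 0.2, 0.0]]

# Hand-picked (w, b); in training these would be learned jointly.
good = uncertainty_loss(id_batch, outlier_batch, w=1.0, b=-3.5)
bad = uncertainty_loss(id_batch, outlier_batch, w=-1.0, b=3.5)
print(f"separating params: {good:.3f}, inverted params: {bad:.3f}")

# Inference: threshold the ID probability (threshold is an assumption).
THRESHOLD = 0.5
for name, lg in (("ID", id_batch[0]), ("outlier", outlier_batch[0])):
    p = id_probability(lg, 1.0, -3.5)
    print(name, "->", "ID" if p >= THRESHOLD else "OOD", f"(p={p:.3f})")
```

With the separating parameters, the loss is small, the ID object is accepted, and the outlier is flagged as OOD. Inverting the parameters makes the loss much larger, which is the signal gradient descent would use to shape the boundary.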

The Experimentation

The experiments were run with Python 3.8.5 and PyTorch 1.7.0 on NVIDIA GeForce RTX 2080Ti GPUs. The ID training data came from PASCAL VOC and Berkeley DeepDrive (BDD-100k), and the OOD test sets consist of image subsets from MS-COCO and OpenImages. “We manually examine the OOD images to ensure they do not contain ID category,” the team notes. The researchers use the Detectron2 library to train two backbone architectures, ResNet-50 and RegNetX-4.0GF. OOD detection performance is reported as FPR95: the false positive rate on OOD samples when the true positive rate on ID samples is 95%.
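
FPR95 is straightforward to compute once each sample has a scalar score. The sketch below assumes that higher scores mean "more in-distribution", picks the threshold that accepts 95% of the ID samples, and reports the fraction of OOD samples that still pass it. The helper name and the toy scores are hypothetical, and implementations that interpolate along a ROC curve can give slightly different values on small sets.

```python
def fpr_at_95_tpr(id_scores, ood_scores):
    """False positive rate on OOD samples at the threshold that keeps
    (at least) 95% of ID samples; higher score = more ID-like."""
    sorted_id = sorted(id_scores)
    # Skip the lowest 5% of ID scores: k = floor(n / 20), integer math only.
    k = len(sorted_id) // 20
    threshold = sorted_id[k]
    false_positives = sum(1 for s in ood_scores if s >= threshold)
    return false_positives / len(ood_scores)

id_scores = [i / 100 for i in range(1, 101)]  # 0.01 ... 1.00
ood_scores = [0.02, 0.05, 0.07, 0.50]

print(f"FPR95 = {fpr_at_95_tpr(id_scores, ood_scores):.2f}")
```

Here the threshold lands at 0.06, so 95 of the 100 ID scores pass, and two of the four OOD scores (0.07 and 0.50) are false positives, giving FPR95 = 0.5. Lower is better.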

VOS improves OOD detection on both object detection and image classification models, reducing FPR95 by up to 7.87% compared with the previous best method. Beyond outperforming most competitive OOD detection methods, VOS also improves OOD detection performance over GAN-based outlier synthesis by 10.95% on BDD-100k and 11.91% on PASCAL VOC.

The research article is available open access on arXiv, the preprint server operated by Cornell University. The code for training the object detection models, built on the Detectron2 codebase, is available in the GitHub repository, together with the datasets used in the paper, for the community to use and contribute to.

References

[1] Yiyou Sun, Chuan Guo, and Yixuan Li. ReAct: Out-of-Distribution Detection with Rectified Activations. In Advances in Neural Information Processing Systems, 2021.

[2] Xuefeng Du, Zhaoning Wang, Mu Cai, and Yixuan Li. VOS: Learning What You Don't Know by Virtual Outlier Synthesis. arXiv:2202.01197 [cs.LG], 2022.