Bringing the LLM revolution to social robots

Lessons learnt from integrating ChatGPT in Pepper and NAO

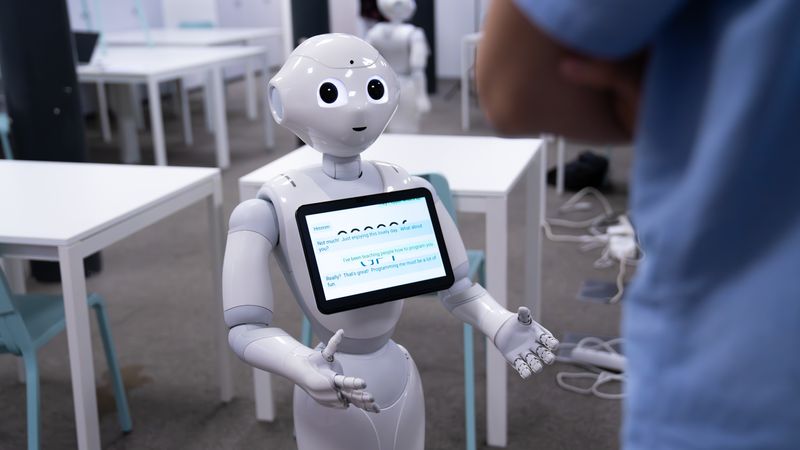

A Pepper robot showing a ChatGPT-type dialogue

Recent advances in Large Language Models (LLMs) have made a lot of noise: everybody is using ChatGPT. Robotics is no exception; there has been a lot of talk about how to integrate them into various kinds of robotic use cases. In this article, we will talk about their applications to social robots like NAO and Pepper.

Social Robots?

Social robots are designed to be used in places where they interact with people, and as such are a natural fit for conversation-oriented language models. But they were in use for years before LLMs appeared, and the field of human-robot interaction is well developed.

Compared to a GUI chat interface like ChatGPT, social robots have to deal with extra uncertainty linked to speech recognition (the input the language model receives might not be what the user intended to say), and with several users speaking, some of whom might not even intend to speak to the robot.

Improving what the robot understands

One advantage of LLMs is their ability to understand open-ended instructions. There are several challenges in understanding voice commands:

- Speech recognition: turning the raw sounds into text. How well this works depends on how the user speaks (accent, slurred speech, volume, clarity of enunciation), the characteristics of the microphone, the surrounding noise, etc.

- Variety of expressions: in practice, people have a huge variety of ways of expressing even simple things (for example: "I'm looking for an ATM", "Would you show me the nearest ATM?", "Is there an ATM around here?", "Where's the nearest ATM?", "Do you know where I can withdraw some money?", "Where can I withdraw cash around here?", "Is there an ATM in this building?", etc.); handling all of those properly usually requires some bespoke customisation in a dialogue engine like Dialogflow or QiChat.

- Context: in addition to the explicit information contained in a question like the ATM example above, some information comes from what was previously discussed ("How much does one cost?", "How can I get there?") or from gestures such as pointing ("Is it that way?", "What's that?"); keeping track of that information usually requires more bespoke logic, for example to keep track of users.

These challenges could all be handled before, with some work; but LLMs brought a big jump in quality: they are very good at handling a wide variety of expressions out of the box. They are also very good at taking into account any context included in the conversation log (typically sent alongside the prompt); this usually includes the previous conversation but, with a bit more work, can include any information that can be inferred from the sensors (for example, by using an object recognition model to turn what the robot sees into a text description).
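As an illustration of that conversation log, here is a minimal sketch of how the context might be assembled on each turn. It assumes a ChatGPT-style list of role/content messages; the `ConversationContext` class, its `max_turns` limit and the `detected_objects` list (standing in for the output of an object recognition model) are hypothetical names, and no actual model is called.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationContext:
    """Hypothetical container for what gets sent to the LLM on each turn."""
    system_prompt: str
    max_turns: int = 10  # keep the log short to bound prompt size
    history: list = field(default_factory=list)  # alternating user/assistant messages

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})

    def build_messages(self, detected_objects: list) -> list:
        """Assemble the system prompt, a text description of what the robot
        sees, and the most recent conversation turns."""
        messages = [{"role": "system", "content": self.system_prompt}]
        if detected_objects:
            # Sensor information turned into plain text the LLM can use as context.
            scene = "The robot currently sees: " + ", ".join(detected_objects) + "."
            messages.append({"role": "system", "content": scene})
        # One turn is a user message plus an assistant reply, hence 2 * max_turns.
        messages.extend(self.history[-2 * self.max_turns:])
        return messages
```

Capping the number of turns keeps prompts short, which helps latency in a spoken conversation, at the cost of the robot forgetting older parts of the discussion.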

One more surprising improvement from using LLMs concerns speech recognition: even when the speech recognition makes mistakes (misheard words, but also homophones like their/there/they're), the LLM will often use context and common sense to handle the input appropriately anyway, giving the impression of a robot that hears better.

Improving how the robot answers

One obvious output is speech: plugged into a text-to-speech engine, an LLM can easily create a conversational robot.

However, this brings a few new issues:

- One notorious issue is hallucinations, a problem that's far from solved for LLMs. Although the robot can understand a wider variety of inputs, his answers to them might be confident but highly inaccurate: if there's a word he doesn't understand, he might invent a fanciful product description; if given an unfamiliar name, he might make someone up. This can be mitigated, but at the risk of making the robot repetitive or boring; it's a fine balance, and the right trade-off may depend on the use case.

- A less obvious one is speech that's too verbose. If ChatGPT talks too much, the user can just skim the paragraphs; but listening to a robot takes time, and a long-winded answer can be much more annoying. This can be improved with better prompting, fine-tuning, etc., as well as UX tweaks (e.g. a way of interrupting the robot), but it requires careful attention. More generally, the LLM's output might sometimes not be as good as hand-crafted content.
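Prompting is the first line of defence against verbosity, but a cheap safety net can also be applied after generation. The sketch below (a hypothetical `trim_for_speech` function, with arbitrary default limits) keeps only the first sentences of a reply before it is sent to text-to-speech; the sentence splitting is a naive regular expression, not a proper sentence segmenter.

```python
import re

# Split on whitespace that follows ".", "!" or "?". Deliberately simple: it will
# also split after abbreviations such as "e.g." or "Dr.".
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def trim_for_speech(text: str, max_sentences: int = 2, max_chars: int = 250) -> str:
    """Keep only the first few sentences of an LLM reply before sending it to TTS."""
    sentences = [s for s in _SENTENCE_END.split(text.strip()) if s]
    kept = []
    length = 0
    for sentence in sentences[:max_sentences]:
        # Always keep the first sentence, even if long, so the robot says something.
        if kept and length + len(sentence) + 1 > max_chars:
            break
        kept.append(sentence)
        length += len(sentence) + 1  # +1 for the joining space
    return " ".join(kept)
```

For example, `trim_for_speech("The ATM is by the entrance. Turn left after the café. It accepts all cards.")` keeps only the first two sentences.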

However, a robot is more than a smart speaker: it might have arms, wheels, etc., and the LLM can be made to control those, either directly or by choosing from a library of presets (e.g. navigation waypoints). In effect, the output of the LLM is not just speech but actions.
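One way to let the LLM trigger actions without giving it free rein is to prompt it to answer in a structured format and to check its choice against a fixed list of presets. The sketch below assumes the model was asked to reply with JSON containing `say` and `action` fields; the preset names are made up, and the code that would actually drive NAO or Pepper is deliberately left out.

```python
import json

# Hypothetical library of presets; on a real robot each entry would map to,
# e.g., a navigation waypoint or a pre-recorded animation.
PRESET_ACTIONS = {"go_to_entrance", "go_to_atm", "wave", "point_left", "none"}


def parse_llm_reply(raw: str) -> tuple:
    """Parse a reply formatted as {"say": "...", "action": "<preset name>"}.

    Returns (text_to_say, action). Anything malformed or outside the
    whitelist falls back to speech only, so the LLM cannot trigger a
    movement that was not explicitly allowed.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return raw.strip(), "none"  # not JSON: treat the whole reply as speech
    if not isinstance(data, dict):
        return raw.strip(), "none"
    say = str(data.get("say", ""))
    action = data.get("action", "none")
    # isinstance check first: unhashable values (e.g. lists) cannot be set members.
    if not isinstance(action, str) or action not in PRESET_ACTIONS:
        action = "none"
    return say, action
```

The whitelist is the important design choice here: an unexpected or malformed reply degrades to speech only, instead of an arbitrary movement.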

How best to deal with it

How to deal with hallucinations and other problems may depend on the use case:

- For entertainment purposes, the hallucinations may not be a problem, and can even be part of the fun, as long as that is well-presented to the user.

- For information purposes, a choice needs to be made between using LLM output and risking hallucinations, or using fixed, well-prepared outputs.

- One should also keep in mind other non-speech actions and see how many of those can be controlled by the LLM.
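For the information case, one possible middle ground (an assumption on our part, not the only option) is to use the LLM only to understand the question, and to keep the spoken answer hand-written. In the sketch below, `ask_llm` stands for whatever function actually queries the model, and the topics and answers are placeholders.

```python
from typing import Callable

# Placeholder hand-written answers for questions where accuracy matters.
PREPARED_ANSWERS = {
    "opening_hours": "We are open from 9 am to 7 pm, Monday to Saturday.",
    "atm": "The nearest ATM is next to the main entrance.",
}
FALLBACK = "Sorry, I don't know. Please ask at the information desk."


def answer(question: str, mode: str, ask_llm: Callable[[str], str]) -> str:
    """Entertainment mode: free LLM output. Any other mode is treated as
    information mode: the LLM only picks a topic, the answer is fixed."""
    if mode == "entertainment":
        # Hallucinations are tolerated here, and may even be part of the fun.
        return ask_llm(question)
    topics = ", ".join(PREPARED_ANSWERS)
    prompt = (f"Which of these topics is the question about: {topics}? "
              f"Reply with the topic name only, or 'unknown'.\nQuestion: {question}")
    topic = ask_llm(prompt).strip().lower()
    # Unknown or invented topics fall back to a safe answer instead of a made-up one.
    return PREPARED_ANSWERS.get(topic, FALLBACK)
```

The LLM still handles the variety of expressions ("Where can I withdraw cash?" and "Is there an ATM in this building?" can both map to `atm`), but it never gets to phrase the factual answer itself.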

Apart from the technical performance on input and output, the greatest strength of LLMs is flexibility: adapting the robot to a new use case, or to a specific context within an existing one, can be as simple as editing a prompt, and produces behavior of decent quality.

This allows for much quicker iterations.
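To make this concrete, here is a hypothetical sketch in which everything specific to a use case lives in a prompt template; the persona fields and the two use cases are invented for illustration.

```python
from string import Template

# Hypothetical persona template: switching use case only changes the values below.
PERSONA = Template(
    "You are $name, a $robot robot working at $venue. "
    "Answer in at most two short sentences, in $language. "
    "$extra_rules"
)

USE_CASES = {
    "mall_guide": dict(name="Pepper", robot="Pepper", venue="a shopping mall",
                       language="English",
                       extra_rules="Only give directions to shops listed in the context."),
    "classroom": dict(name="Nao", robot="NAO", venue="a primary school",
                      language="French",
                      extra_rules="Use simple words suitable for children aged 8 to 10."),
}


def system_prompt(use_case: str) -> str:
    """Build the system prompt for one use case; raises KeyError if it is unknown."""
    return PERSONA.substitute(USE_CASES[use_case])
```

Adding a new deployment then amounts to adding one entry to `USE_CASES` and testing how the robot behaves, which is what makes iterations fast.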

Further directions

In robotics (social or not) and elsewhere, engineers, researchers and tinkerers all over the world are experimenting with interesting ways to use LLMs. We've covered a few we have experience with here, but there are many more directions that could be applicable, such as:

- integrating vision models to connect the LLM to what the robot sees, making it even more aware of the context

- making the robot proactively decide what to do (not just respond to what he heard, but decide to e.g. walk up to a given person and engage them in conversation)

- giving the robot a long-term memory

LLMs offer great possibilities for social robots if used well, and it will be interesting to observe how the field evolves in the coming years, or even the coming months.