Intra-hug gestures prove key to making HuggieBot 3.0 the most cuddly robot around

While the concept of a hugging robot may sound bizarre, the researchers behind the project — now in its third generation — believe that such a device could have a major impact on everything from social telepresence to elder care.

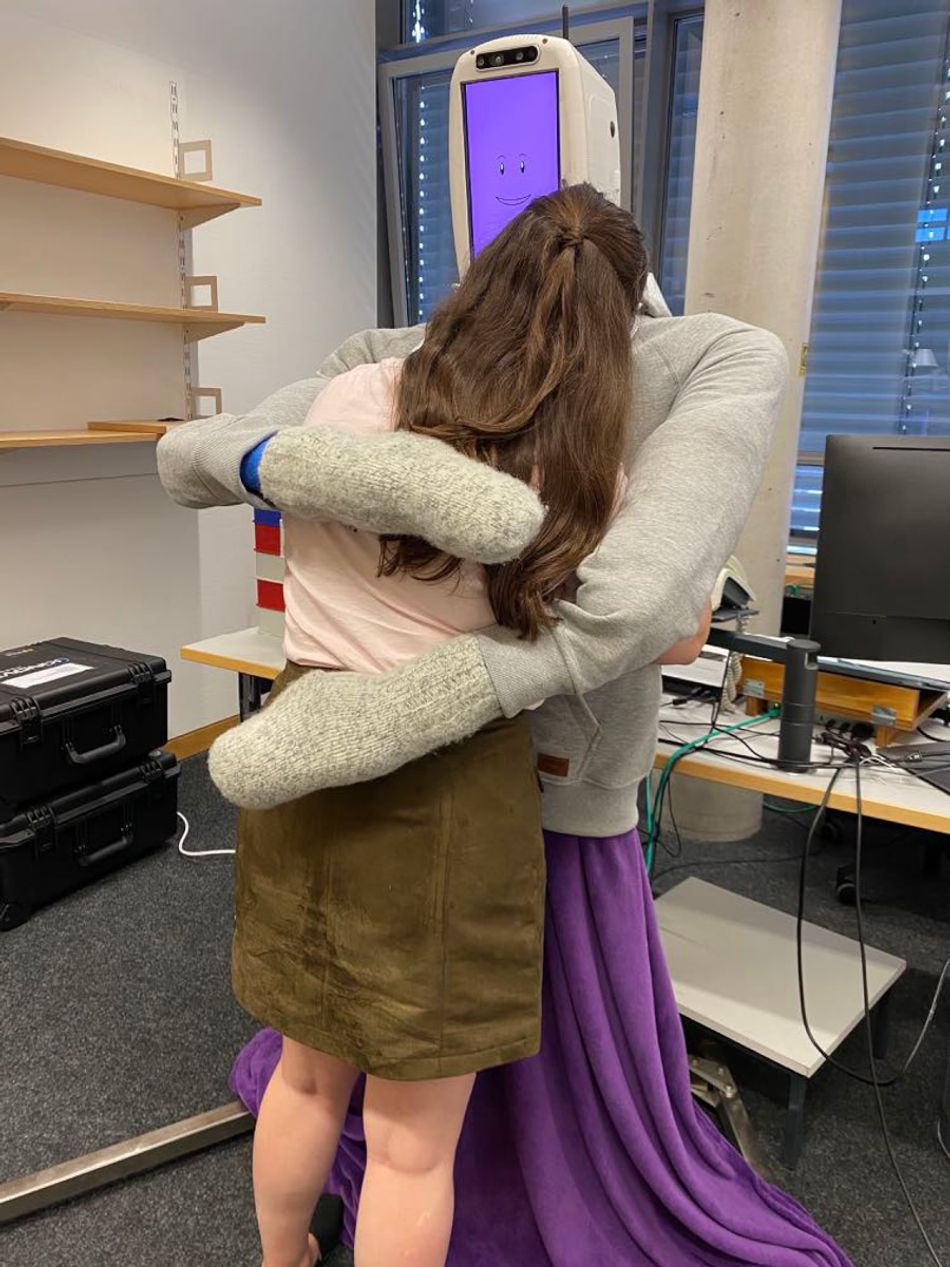

HuggieBot, with its soft pressure-sensing torso, 3D-printed animated head, and six-degree-of-freedom arms, is a research platform for high-quality autonomous hugging.

The warm feeling of being hugged is known to have a number of positive effects — and humans are far from the only creatures to enjoy social touch. Robots, meanwhile, are traditionally cold, sterile, and not entirely cuddly — at least, outside the realm of children’s entertainment.

But what if a robot could deliver a satisfying hug? That’s the question asked by Alexis E. Block and colleagues in the HuggieBot project, with results from a third-generation study having just been accepted for publication. Such a machine could provide comfort to the lonely, have potential medical benefits in care settings, or act as a surrogate when people simply can’t be together in person, as has been so common during the ongoing pandemic.

Enter HuggieBot 3.0, claimed by its creators to be “the first fully autonomous human-sized hugging robot that recognizes and responds to the user’s intra-hug gestures.”

The birth of HuggieBot

Work on the original HuggieBot began in 2016, when Block was studying for a Master’s degree in robotics. The idea: Providing the ability to send a hug anywhere in the world, but in a manner which feels natural and enjoyable — following what Block and colleagues would come to call the “six commandments” of robotic hugging, a series of design guidelines which included the need for such a robot to be soft, warm, human-sized, and able to autonomously enter into and exit from an embrace.

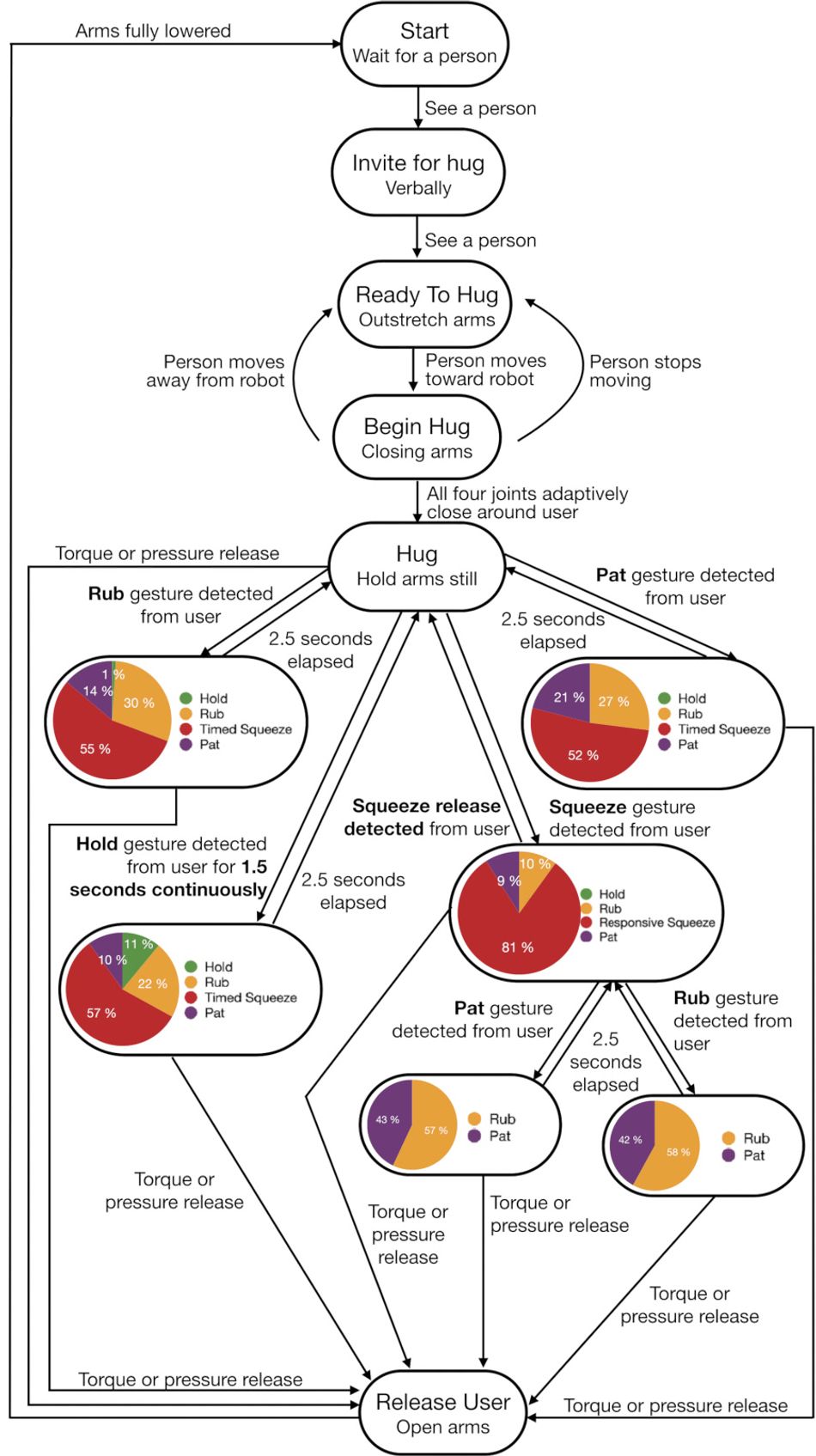

HuggieBot 2.0, put into testing in 2020, took the concept a stage further by using visual and haptic perception to deliver adaptive hugging in a closed-loop system. It wasn’t until HuggieBot 3.0, however, that the machine gained a key feature: the ability to autonomously detect, and reciprocate, “intra-hugging gestures” through a probabilistic behavior algorithm. Creating that algorithm contributed five new design guidelines, on top of a revision to one of the original six.

In its tested incarnation, HuggieBot 3.0 incorporates a custom sensing system dubbed HuggieChest, which uses two inflated chambers of polyvinyl chloride to provide a soft surface. As a hug takes place, a barometric pressure sensor and a microphone detect human touch, transmitting data via an Arduino Mega microcontroller board to a Robot Operating System (ROS)-based computer located in the robot’s 3D-printed head, behind a display which provides a friendly and welcoming animated visage.

The robot’s own hugs, meanwhile, are delivered using a pair of Kinova JACO arms, mounted to a custom metal frame and each with six degrees of freedom. “These arms were selected for being anthropomorphic, quiet, and safe,” the researchers explain. “Their movement speed is limited in firmware, and they can be mechanically overpowered by the user if necessary.”

Hug autonomy

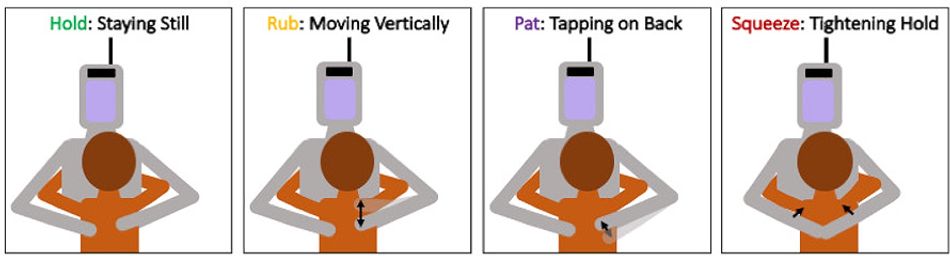

Initially, the team’s testing took place as a “Wizard of Oz” set-up, in which a remote operator, not seen by participants, controlled the robot’s motions. Once data had been gathered from 512 human trials across 32 participants, it was used to train a machine learning system capable of detecting and classifying what people do during a hug. The team grouped these actions into four key intra-hug gestures: remaining still, moving vertically, tapping or patting on the back, and tightening hold in a squeeze.
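To make the idea of classifying sensor data into four gestures concrete, here is a minimal sketch using a nearest-centroid classifier. It is an illustration only, not the researchers' model: the article does not say which learning method or features the team used, so the two features (pressure range and average microphone level), the label names, and every function name below are assumptions.

```python
import math

# Hypothetical labels for the four intra-hug gestures named in the article.
GESTURES = ("still", "vertical", "pat", "squeeze")


def extract_features(pressure, audio):
    """Reduce one window of samples to two assumed features:
    the range of the pressure readings and the mean absolute audio level."""
    pressure_range = max(pressure) - min(pressure)
    audio_level = sum(abs(a) for a in audio) / len(audio)
    return (pressure_range, audio_level)


def train_centroids(examples):
    """Average the feature vectors of each label.

    `examples` is a list of (features, label) pairs, e.g. windows that were
    labelled while an operator controlled the robot.
    """
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in acc)
        for label, acc in sums.items()
    }


def classify(features, centroids):
    """Return the label whose centroid is closest to `features`."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

In this sketch a long, firm squeeze would show up as a large pressure range with little sound, while a pat would produce short spikes in the microphone signal. A real system would need far richer features and a properly validated model.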

“Rather than maximizing user acceptance for each robot gesture, which would result in the robot only squeezing the user,” the researchers note, “our behavior algorithm balances exploration and exploitation to create a natural, spontaneous robot that provides comforting hugs.”
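One common way to balance exploration and exploitation is to sample a response at random, with better-liked responses made more likely, instead of always picking the top-rated one. The sketch below uses softmax sampling to show that idea. It is not the team's published algorithm: the response names, the acceptance scores, and the `temperature` parameter are all hypothetical placeholders, not study data.

```python
import math
import random

# Hypothetical acceptance scores per robot response (placeholders, not
# results from the study). Higher means users liked the response more.
ACCEPTANCE = {
    "hold_still": 0.5,
    "move_vertically": 0.6,
    "pat": 0.7,
    "squeeze": 0.9,
}


def response_probabilities(scores, temperature=0.5):
    """Turn scores into sampling probabilities with a softmax.

    A low temperature favours the best-scored response (exploitation);
    a high temperature flattens the distribution (exploration).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    peak = max(scores.values())  # subtract the peak for numerical stability
    weights = {g: math.exp((s - peak) / temperature) for g, s in scores.items()}
    total = sum(weights.values())
    return {g: w / total for g, w in weights.items()}


def choose_response(scores, temperature=0.5, rng=random):
    """Sample one response according to the softmax probabilities."""
    probs = response_probabilities(scores, temperature)
    gestures = list(probs)
    return rng.choices(gestures, weights=[probs[g] for g in gestures], k=1)[0]
```

A purely greedy policy, `max(ACCEPTANCE, key=ACCEPTANCE.get)`, would return the top-scored response every time, which is the "only squeezing" behavior the researchers describe wanting to avoid. Sampling keeps the favorite most likely while still letting the other responses appear.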

Further testing on 16 participants certainly seems to bear that claim out: Twelve of the users were found to hug the robot for longer, subjects declared that they felt “significantly more understood” by the robot, and stated that the revised robot was “significantly nicer to hug” in its new interactive form.

Perhaps the biggest outcome of the work, however, is its extension of the earlier “commandments.” The team revised a guideline on hug initiation, creating a new framework for inviting a potential participant and ensuring consent. The new guidelines, meanwhile, call for a hugging robot to adapt automatically to the user’s height, in order to avoid some below-the-belt contact which occurred during HuggieBot 3.0’s testing; to detect and perceive the user’s gestures; to respond to those gestures quickly and with variety; and to proactively provide gestures of its own.

There’s more to the team’s work than simply building a better hugging robot: The researchers point to the body of work showing the benefits of physical interaction between humans, and how it may be possible to deliver at least some of those benefits using an autonomous or remotely controlled robotic surrogate. As part of future work on the platform, HuggieBot’s capabilities could also potentially be generalized to a robot which provides companionship and assistance in care homes, helping to address concerns regarding an ageing population and a dearth of care workers.

The team’s work has been accepted for publication in the journal ACM Transactions on Human-Robot Interaction, and has been made available in preprint form under free access terms.

References

Alexis E. Block, Hasti Seifi, Otmar Hilliges, Roger Gassert, and Katherine J. Kuchenbecker: In the Arms of a Robot: Designing Autonomous Hugging Robots with Intra-Hug Gestures, ACM Transactions on Human-Robot Interaction, Accepted February 2022. DOI 10.1145/3526110.

Alexis E. Block, Sammy Christen, Roger Gassert, Otmar Hilliges, and Katherine J. Kuchenbecker: The Six Hug Commandments: Design and Evaluation of a Human-Sized Hugging Robot with Visual and Haptic Perception, Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction. DOI 10.1145/3434073.3444656.