Physics-aware training turns physical systems, including an oscillating metal plate, into deep neural networks

Designed to address the exploding computational demands of deep neural networks, physical neural networks (PNNs) branch out from electronics into optics and even mechanics to boost performance and efficiency.

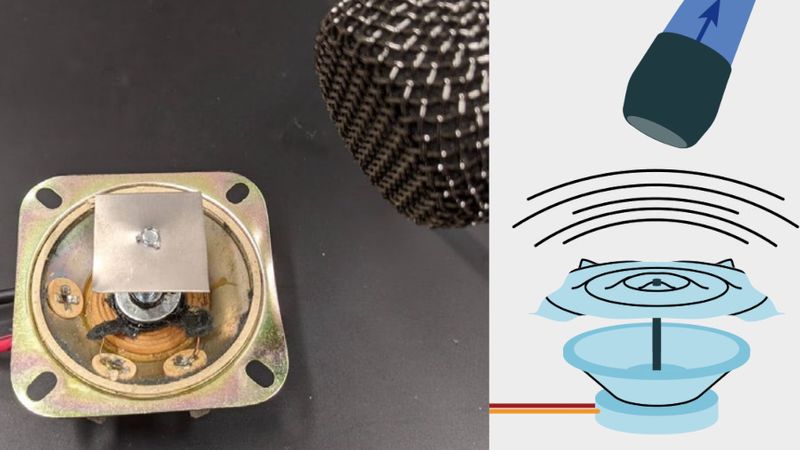

While it may not look like it, this oscillating metal plate serves as a computer running a digit-classification network.

The growing energy demanded by deep-learning systems hampers their potential for widespread deployment against a range of problems, but a team of scientists at Cornell University and NTT Research Inc. believe they have an answer: Creating physical neural networks (PNNs), including an oscillating metal plate which can recognise hand-written digits.

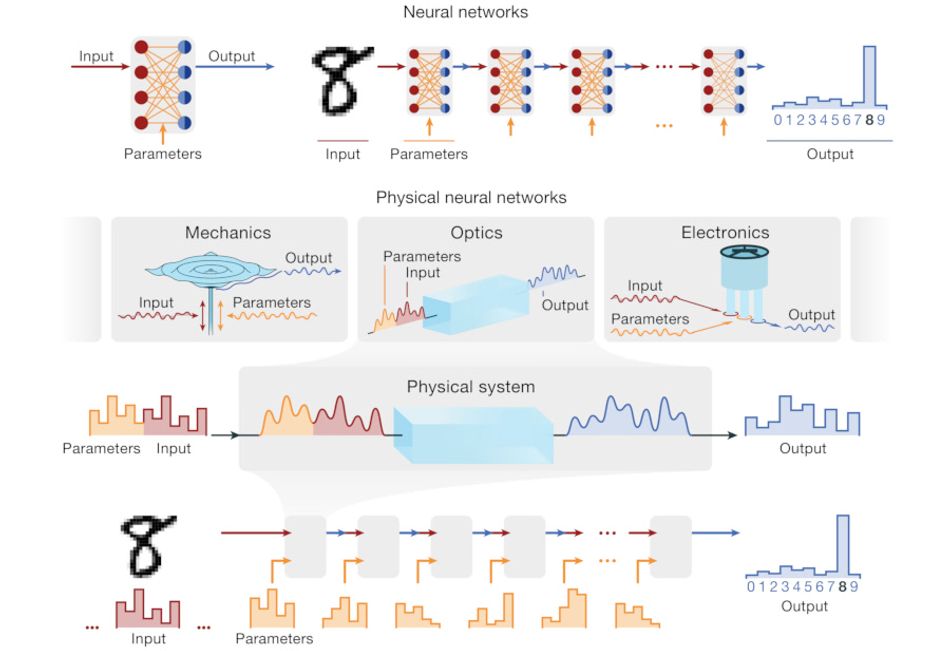

“By breaking the traditional software–hardware division,” the team explains, “PNNs provide the possibility to opportunistically construct neural network hardware from virtually any controllable physical system” and, in doing so, promise better scalability, energy efficiency, and performance than traditional deep-learning systems.

Beating Moore’s law

The computational demand of deep neural network (DNN) models is outstripping the growth in computational power available from each successive generation of hardware as projected by Moore’s Law, the observation that transistor counts double roughly every two years, often quoted as a doubling of performance every 18 months. As a result, there’s considerable interest in DNN-specific acceleration hardware. In effect, that’s what Logan G. Wright and colleagues have created, except that their accelerators are not microchips but physical systems based on electronics, optics, and even mechanics.

The idea: Training the physical transformations available in hardware directly so as to perform computation, turning something as simple as a vibrating metal plate into a device capable of rapidly and accurately classifying handwritten digits. In doing so, the team claims it’s possible to improve the performance and reduce the energy requirements of such computations.

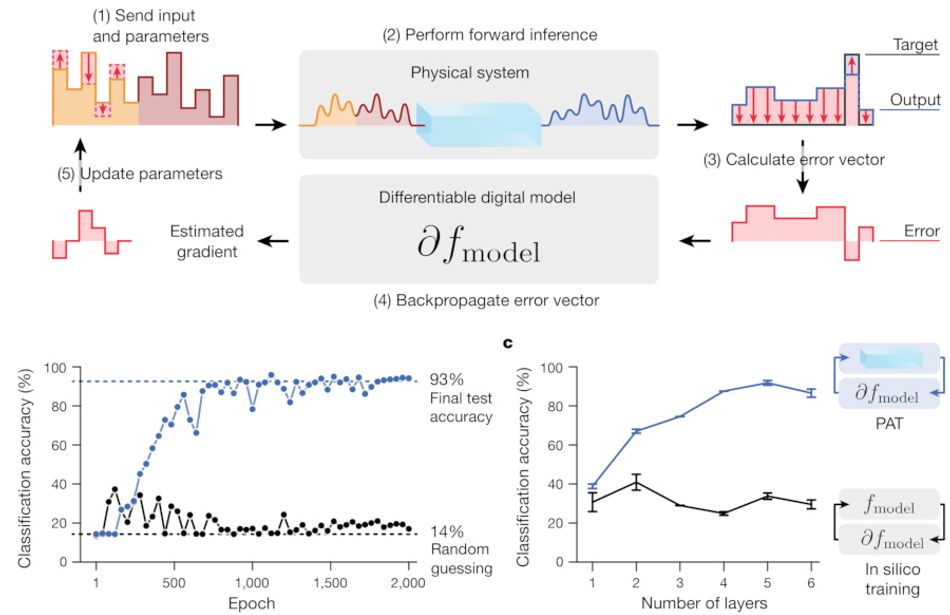

Designed as a universal framework for physical neural networks, the team’s paper details the creation of a number of examples, all enabled by a hybrid in-situ, in-silico algorithm dubbed Physics Aware Training (PAT). PAT takes the backpropagation algorithm, which has already proven its worth in more traditional neural network designs, and allows it to be executed on any sequence of physical input/output transformations.

Processing with physics

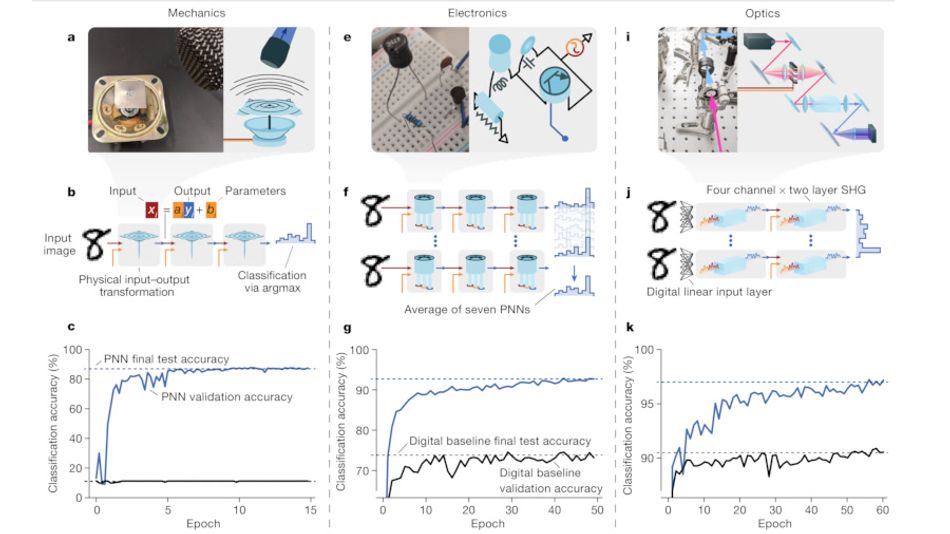

It’s a concept the team demonstrates with a series of prototypes. The most recognizable as something which could potentially run a neural network workload is the electronic prototype, though it uses a non-linear electronic oscillator rather than a microprocessor. The second switches out the electronics for optics, using an ultra-fast optical second-harmonic generator (SHG). The final prototype seems far removed from anything that could be considered a computer: A vibrating metal plate.

The researchers admit that what they have created is “a radical departure from traditional hardware,” but claim that PNNs can be easily integrated into a modern machine learning environment — even to the point of combining them with conventional hardware to create physical-digital hybrid architectures. In doing so, they claim the potential to improve both the efficiency and speed of machine learning “by many orders of magnitude.”

Each device is trained using the Physics Aware Training (PAT) approach: training data is input to the physical system alongside trainable parameters; a forward pass sees the physical system apply its transformation, whatever that may be, to produce an output; the output is compared with the target output to calculate the error; then a differentiable digital model estimates the gradient of the loss with respect to the controllable parameters; finally, the parameters are updated based on the inferred gradient.
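The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than the team’s implementation: `physical_system` is a hypothetical stand-in for real hardware (a slightly mismatched, noisy tanh), `model_grad` is an assumed differentiable digital model of it, and the toy regression task, learning rate, and step count are all invented for the example. The key point it tries to show is that the forward pass and the error come from the "hardware", while only the gradient comes from the digital model.

```python
import numpy as np

rng = np.random.default_rng(0)

def physical_system(x, theta):
    """Hypothetical stand-in for the hardware: mismatched from the model, plus noise."""
    return np.tanh(1.1 * (x @ theta) + 0.05) + 0.01 * rng.standard_normal(x.shape[0])

def model_grad(x, theta, grad_out):
    """Backward pass through the assumed digital model y = tanh(x @ theta)."""
    dy_dpre = 1.0 - np.tanh(x @ theta) ** 2
    return x.T @ (grad_out * dy_dpre)

def pat_step(x, target, theta, lr=0.1):
    y = physical_system(x, theta)                # forward pass on the "hardware"
    err = y - target                             # compare with the target output
    loss = np.mean(err ** 2)
    grad_out = 2.0 * err / len(err)              # d(loss)/d(output)
    grad_theta = model_grad(x, theta, grad_out)  # gradient estimated by the digital model
    return theta - lr * grad_theta, loss         # update the controllable parameters

# Toy task (an assumption): recover parameters the physical system can realise.
x = rng.standard_normal((256, 3))
true_theta = np.array([0.5, -0.3, 0.8])
target = np.tanh(1.1 * (x @ true_theta) + 0.05)

theta = np.zeros(3)
losses = []
for _ in range(300):
    theta, loss = pat_step(x, target, theta)
    losses.append(loss)
```

Even though the digital model here does not match the stand-in hardware exactly, its gradient points in a useful direction, so the measured error still falls as the loop repeats.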

Repeating the process many times reduces the error and trains the PNN. To demonstrate this, the team used the optical PNN to carry out vowel classification: the device outputs a blue spectrum, which is summed digitally into spectral bins, and the predicted vowel is the one whose bin holds the most energy. In testing, the device reached an accuracy of 93 per cent, well above the 14 per cent expected from random guessing.
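The readout step, summing a spectrum into bins and picking the bin with the most energy, can be illustrated as follows. This is a sketch under assumptions: the function name `classify_from_spectrum`, the equal-width binning, the synthetic 70-point spectrum, and the choice of seven classes (inferred from the 14 per cent chance level, since 1/7 is roughly 0.14) are not taken from the paper.

```python
import numpy as np

def classify_from_spectrum(spectrum, n_classes=7):
    """Sum a measured spectrum into n_classes bins and pick the most energetic bin."""
    bins = np.array_split(np.asarray(spectrum, dtype=float), n_classes)
    energies = np.array([b.sum() for b in bins])
    return int(np.argmax(energies)), energies

# Synthetic spectrum (an assumption): a flat floor with a peak in the fourth bin.
spectrum = np.full(70, 0.1)
spectrum[30:40] += 1.0

predicted, energies = classify_from_spectrum(spectrum)
chance_level = 1.0 / len(energies)  # accuracy expected from random guessing
```

With this synthetic input, `predicted` is the index of the fourth bin (index 3), and `chance_level` comes out at about 0.14.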

Thinking with metal

It’s the metal plate which captures the attention, however. Driven by time-varying forces which encode input data and trainable parameters, the vibrating plate — again trained via PAT, in the same manner as the optical vowel classifier — reached 87 per cent accuracy for the Modified National Institute of Standards and Technology (MNIST) handwritten digit classification task.

This, the team points out, demonstrates that a wide range of physical systems not normally associated with computation can be used to run deep neural network calculations. Further, they say that it offers, “in principle” at least, the potential to do so “orders of magnitude faster and more energy-efficiently than conventional hardware.”

There are, however, some caveats to the team’s approach. The biggest is simply that some physical systems may be better suited to particular computations than others, particularly where the physical system shares the same or similar constraints as the class of computation. Another is that the benefits of PNNs are seen only during inference, not training, which the researchers say may lead PNN deployments to focus on their use as an addition to, rather than a replacement for, conventional hardware.

To explore such a combination, the team returned to the optical ultra-fast SHG implementation to create a hybrid PNN, featuring trainable digital input layers followed by trainable ultra-fast SHG transformations. The results support the team’s point: accuracy on the MNIST test rose from around 90 per cent using the PNN alone to 97 per cent with the hybrid. “This,” the team claims, “illustrates how a hybrid physical-digital PNN can automatically learn to offload portions of a computation from an expensive digital process to a fast, energy-efficient physical co-processor.”
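The shape of such a hybrid, a trainable digital layer feeding a trainable physical transformation, can be sketched as a forward pass. Everything below is illustrative: `physical_layer` is a hypothetical stand-in and not a model of second-harmonic generation, and the layer sizes, random weights, and argmax readout are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

def digital_input_layer(x, W, b):
    """Trainable digital stage: an affine map computed on conventional hardware."""
    return x @ W + b

def physical_layer(u, theta):
    """Hypothetical stand-in for a physical transformation with trainable controls theta."""
    return np.tanh(u + theta) ** 2

def hybrid_forward(x, params):
    u = digital_input_layer(x, params["W"], params["b"])  # digital pre-processing
    y = physical_layer(u, params["theta"])                # offloaded to the "hardware"
    return int(np.argmax(y)), y                           # readout: strongest output channel

params = {
    "W": 0.01 * rng.standard_normal((784, 10)),  # digital weights (assumed sizes)
    "b": np.zeros(10),
    "theta": np.zeros(10),                       # physical control parameters
}
image = rng.random(784)  # placeholder for a flattened 28x28 digit image
label, outputs = hybrid_forward(image, params)
```

In training, both the digital weights and the physical controls would be updated together, with the gradient through the physical stage estimated by a digital model in the manner of PAT.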

In addition to improving the efficiency and performance of deep neural networks, the team proposes PNNs as having potential for putting computational capabilities directly into sensors — offering the example of a low-power microphone-coupled circuit which could recognize wake-words and only wake up a high-power digital system when detected — as well as into robotics and materials.

The team’s work has been published under open-access terms in the journal Nature; an open-source implementation of the Physics Aware Training (PAT) system, released under the Creative Commons Attribution 4.0 International license, is available on GitHub with an open-access data repository published to Zenodo.

Reference

Logan G. Wright, Tatsuhiro Onodera, Martin M. Stein, Tianyu Wang, Darren T. Schachter, Zoey Hu, and Peter L. McMahon: Deep physical neural networks trained with backpropagation, Nature Vol. 601. DOI 10.1038/s41586-021-04223-6.