The Future of Hardware is... Better Software

The recent history of our physical engineering disciplines is intertwined with computing. The future is too.

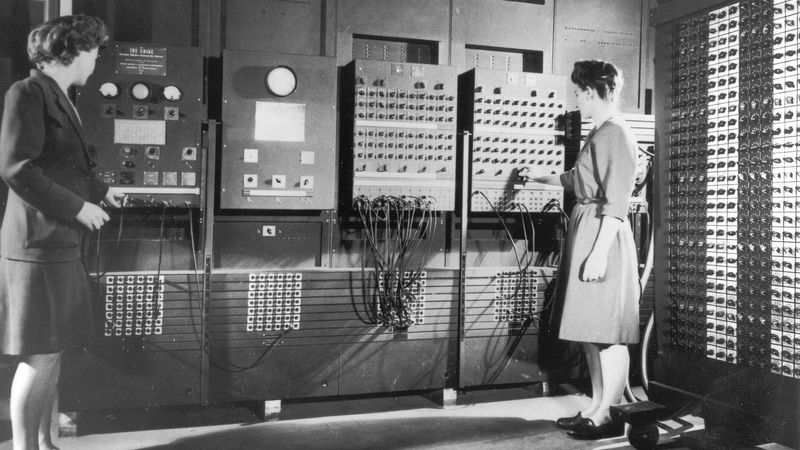

Betty Jean Jennings and Fran Bilas at the main ENIAC control panel. Moore School of Electrical Engineering. U.S. Army, ARL Technical Library Archives

Some Historical Perspective

The relationship between physical engineering and the programmable computer is as old as the computer itself. The reality is that, for most of us, crunching lots of numbers by hand is frustrating, time-consuming, and error-prone.

The British government of the 1820s agreed, opting to fund the early development of Charles Babbage's difference engines. These were visually striking machines: large mechanical computers, some designed to tabulate seventh-order polynomials, that were intended to dramatically reduce the cost of producing tables of trigonometric and logarithmic functions, the kind that were incredibly useful to scientists, engineers, and navigators in the immediate aftermath of the Industrial Revolution.

Fast forward to 1945, and we see the first glimpse of modernity: ENIAC, the world's first programmable, electronic, general-purpose digital computer. If you're curious as to what it was designed for, the answer is the computation of artillery and mortar firing tables, a task that involves exactly the kind of Newtonian mechanics calculations that will be familiar to any undergraduate mechanical or aerospace engineering student (see: Mayevski's Traité de balistique extérieure, or the US Army Ballistic Research Laboratories' The Production of Firing Tables for Cannon Artillery).

Just a decade or so after ENIAC, IBM drew up its first specifications for a new programming language, The IBM Mathematical Formula Translating System, familiar to many of us as FORTRAN. Developed expressly for science and engineering, FORTRAN is a true titan of the scientific computing world. If you've ever fired up MATLAB, analysed a load-case with an FEA package, or run some new geometry in CFD — then it's almost certain that FORTRAN is involved in that stack somewhere, even six or seven decades on.

Why Software Matters For Hardware

The advantages of using digital computers in the physical engineering disciplines are both marked and multifarious, and, I hope, somewhat obvious.

At a low level, these advantages can be quite straightforward. They include things like providing engineers with the ability to perform large numbers of calculations with little to no risk of human error. Think of something like repeated matrix multiplication, perhaps as part of a kinematics problem. How confident would you be in getting every step of that calculation right, without accidentally picking up a value one row down or one column across from where you intended? Thankfully, with the help of a few lines of FORTRAN, MATLAB, or Python, the computer can perform these calculations on your behalf, perhaps billions of times, without the threat of inconsistency or human error. At least... almost all of the time.
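To make the kinematics example concrete, here is a minimal sketch of repeated matrix multiplication in Python. It assumes a planar arm with two revolute joints, which is my choice of illustration rather than any particular system, and the names `link_transform`, `matmul`, and `end_effector` are hypothetical helpers.

```python
import math

def link_transform(theta, length):
    """3x3 homogeneous transform: rotate by theta (rad), then move `length` along the rotated x-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, length * c],
            [s,  c, length * s],
            [0.0, 0.0, 1.0]]

def matmul(a, b):
    """Plain row-by-column matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def end_effector(joint_angles, link_lengths):
    """Chain one transform per link and return the (x, y) position of the arm tip."""
    pose = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]
    for theta, length in zip(joint_angles, link_lengths):
        pose = matmul(pose, link_transform(theta, length))
    return pose[0][2], pose[1][2]

# Illustrative values: first joint at +90 degrees, second at -90 degrees, unit-length links.
x, y = end_effector([math.pi / 2, -math.pi / 2], [1.0, 1.0])
print(round(x, 6), round(y, 6))
```

With these illustrative inputs the tip should land at roughly x = 1, y = 1: the first link points straight up, and the second points along the x-axis. The computer picks up the same rows and columns every time the loop runs, which is exactly the consistency that is so hard to guarantee by hand.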

At a higher level, however, these advantages are even more pronounced. They are the culmination of decades of work across several disciplines: the output of engineers, scientists, and mathematicians who have designed, implemented, and shared computational techniques that address a myriad of challenging problems, from solving partial differential equations at scale (enabling modern FEA and CFD) through to the development of boundary representation solid modelling techniques that enable modern CAD systems. The convergence of these technologies has revolutionised engineering, accelerating development cycles, reducing the need for physical testing, and helping the world's engineers improve the quality and performance of everything from ships to spacecraft.

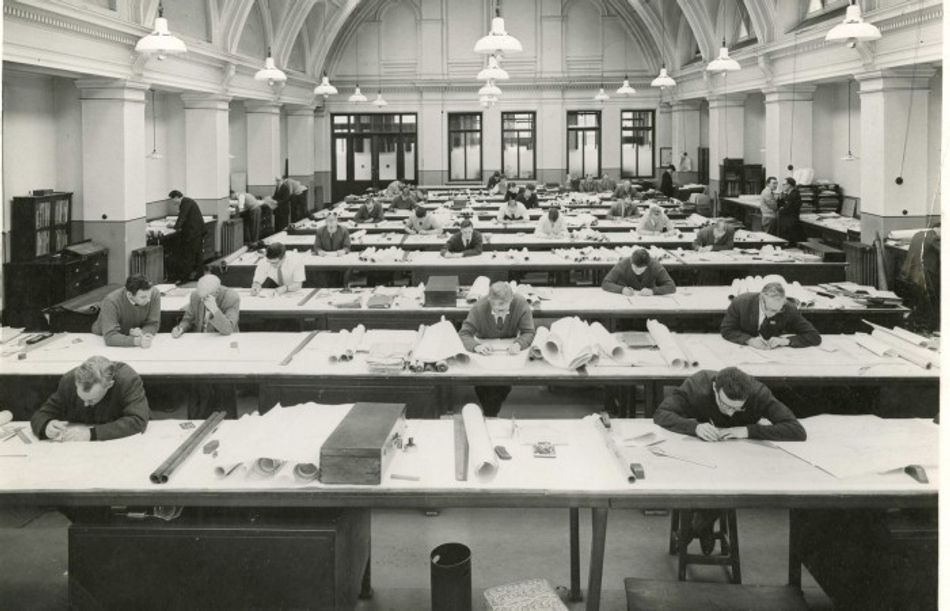

As an illustration, think of the draughting process. For many engineering professionals who started their careers prior to the 1990s, draughting work would still have involved a drawing board, pens, and a sheet of paper, cellulose, or linen. In the event of a mistake, the draughtsperson would have had to break out the scalpel or Tipp-Ex to 'erase' the error, or issue an entirely separate drawing amendment to be paired with the original documents. With modern CAD systems, minor drawing modifications completed prior to release go completely unnoticed, and subsequent amendments can be as simple as a quick tweak and a brief change order: no redrawing of anything, no folder of amendments.

Maritime Belfast/National Museums Northern Ireland

Better yet, imagine the difference in resource requirement and development cycle times for something like a turbocharger. If every minor tweak to blade or housing geometry requires an entire manufacture, assembly, and balancing routine before the new design makes it to the gas stand, every iteration will fall weeks behind and cost significantly more to explore than its simulation-driven counterpart, where a design change could make it to CFD in a matter of minutes. This is before we even consider the introduction of something like mathematical optimisation into this loop, where the responsible engineer could define their objective function, their variables, and their constraints, and conceivably let the computer play tunes with the geometry, feed that into the simulation, interrogate the results, then rinse and repeat until it reaches an optimum. Ultimately, well-correlated models, even if wrong, are incredibly useful.
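As a rough sketch of that optimisation loop, the Python below defines an objective, two design variables, and simple bound constraints, then lets a basic compass (pattern) search tweak the design and re-run the 'simulation' until no further improvement is found. Everything here is an assumption for illustration: `simulate` is a made-up algebraic stand-in for a CFD run, and the variable names, bounds, and coefficients are invented rather than taken from any real turbocharger.

```python
def simulate(blade_angle_deg, tip_clearance_mm):
    """Hypothetical stand-in for a CFD run; returns an invented efficiency figure."""
    return (0.80
            - 0.0004 * (blade_angle_deg - 35.0) ** 2
            - 0.05 * (tip_clearance_mm - 0.4) ** 2)

# Constraints: simple lower and upper bounds on each design variable (assumed values).
BOUNDS = {"blade_angle_deg": (20.0, 50.0), "tip_clearance_mm": (0.3, 1.0)}

def within_bounds(design):
    return all(BOUNDS[name][0] <= value <= BOUNDS[name][1]
               for name, value in design.items())

def optimise(design, steps, max_halvings=20):
    """Compass search: nudge one variable at a time, keep any improvement, shrink steps when stuck."""
    design, steps = dict(design), dict(steps)
    best = simulate(**design)
    halvings = 0
    while halvings < max_halvings:
        improved = False
        for name in list(steps):
            for direction in (+1.0, -1.0):
                trial = dict(design)
                trial[name] += direction * steps[name]
                if not within_bounds(trial):
                    continue  # reject designs that violate a constraint
                score = simulate(**trial)
                if score > best:  # objective: maximise efficiency
                    design, best, improved = trial, score, True
        if not improved:
            steps = {name: step / 2.0 for name, step in steps.items()}
            halvings += 1
    return design, best

best_design, best_score = optimise(
    {"blade_angle_deg": 25.0, "tip_clearance_mm": 0.8},
    {"blade_angle_deg": 5.0, "tip_clearance_mm": 0.1},
)
print(best_design, round(best_score, 4))
```

A real loop would swap `simulate` for a meshing and solver run and would likely use a more capable optimiser, but the shape is the same: propose, check constraints, simulate, compare, repeat.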

Why Hardware Matters for Everyone

So computers are wonderful tools, and so are the niche software packages we run on them, but so what? Well, I believe we now find ourselves at a critical point in both the trajectory of engineering as a discipline, and in its utility as a mechanism for improving our lives as a species. We have a lot of *very* active engineering fields right now, with a lot of important problems to address — and in the end, at some point, somebody has to actually make stuff to make things better.

For example, it seems to me that we are standing but a few feet forward of the starting line in a new, privately funded space race. We have seen SpaceX engineers repeatedly achieve nothing short of a triumph of control engineering: reversing a rocket onto a barge in the ocean, opening the door to reuse, and as a result, both dramatically reduced space access costs and dramatically increased launch cadence. We have companies like SpinLaunch working to yield orbital access mechanisms that help escape the tyranny of the rocket equation.

We have a burgeoning paradigm shift in personal transport, with electric cars, scooters, and e-bikes encroaching further and further on the internal combustion engine's long-established territory.

We have additive manufacturing evolving into a serious, cost-competitive manufacturing technique that can achieve geometries beyond the reach of traditional subtractive approaches, and we have generative design technology helping engineers produce mathematically optimised, often organic-looking solutions to pressing design problems. To see the power of these two in concert, just look at Czinger and Divergent.

And, of course, we have ever more compelling incentives to overhaul the majority of our civilisation's energy infrastructure, pushing us to diversify the means by which we generate electricity, fostering the development of improved wind, solar, and — I hope — nuclear technologies.

In short, we've got a lot of real-world engineering to tackle, and plenty of hardware to build.

Software is Eating the World

Ultimately, it strikes me that the way in which we develop the physical systems around us is changing, and not all our tools yet accommodate this.

An entire range of engineering software systems arrived in the latter part of the last century, but these were typically specialised products, often designed as improved but effectively like-for-like replacements for an existing way of accomplishing a given task, whether that was replacing the draughtsman and his drawing board with a CAD workstation, or replacing a complex, stiffness-altering oil pressure measurement apparatus around a bearing with a CFD case.

As a result, we find ourselves at a point where, as a profession, we're equipped with some extremely impressive tools; tools that can help us build the shorter iterative loops that are required to yield accelerated system development.

However, it feels like we're still using these revolutionary tools, developing the technology that is critical to our species' future, inside an operational framework that was designed for slide rules, drawing boards, and row after row of draughtsmen packing a tobacco-smoke-filled drawing office.

Our systems are heavily siloed, our teams often fragmented. We struggle to collaborate across teams, sites, and time zones. We have vast and unstructured knowledge bases that are full of effectively undiscoverable data, put beyond the reach of those who don't know exactly where to look. And we struggle for visibility — of tasks, responsibilities, upcoming changes, and most importantly, of our data.

Even the big players recognise this. Dassault push research that shows engineers spend 34% of their time performing 'non-value-added' work, dominated by the amount of time spent just looking for stuff, trawling through network drives and cluttered inboxes. This same research shows that engineers are typically working with outdated information 20% of the time. Imagine a full day a week, every week, for every member of your team, just being thrown away because of bad input data. This pattern has to change.

In fact, for newer hardware companies, I think some things have *already* changed. 'Software is eating the world' applies not only to the electronic control of fuel injectors and ignition coils, but to the adoption of software-like management practices, with a focus on short design-build-test loops. I may hate the confusing, jargon-heavy nomenclature of 'agile' methodology, but there is some method in the madness.

Better, More Collaborative Software for Engineering Teams

The future that I see is one where we not only have specialised tools for specific parts of the engineering cycle, but also a central, engineering-specific hub: an operating system that ties these things together.

We want to see the world's physical engineering teams build better products faster; to achieve shorter development cycles, to iterate faster, and to better leverage their internal knowledge — so that they can achieve their goals, whether that's kinetic launch, faster cars, or greener energy.

We think this will be brought about by transforming how hardware teams communicate, collaborate, and manage technical knowledge. That means better, more connected software: software that focuses on resolving the productivity-killing shortfalls in current engineering processes. We think hardware teams should have technical communication mechanisms that are fast, painless, and traceable, and that allow new hires to find the information they need without requiring someone else to go rooting through their inbox for half an hour. We think engineers should be able to see what colleagues are working on, how these items are prioritised, and what's coming next. We think engineers should be able to annotate drawings, share calculations and schematics, and offer opinions on proposed solutions, all in real time, wherever they are, with all that data stored and indexed for easy discoverability down the line.

Above all, we think engineers should have a single source of truth to work with; a repository of version-controlled design values, simulation outputs, and performance targets — whether those are scalar values, or n-dimensional maps.
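To give a feel for what such a repository might look like at its very simplest, here is a small Python sketch of an append-only store in which every change to a named design value becomes a numbered revision. This is an illustration under my own assumptions, not a description of any existing product: `Revision`, `DesignValueStore`, the method names, and the example values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any

@dataclass(frozen=True)
class Revision:
    """One recorded version of a design value (a scalar or a nested map)."""
    number: int
    value: Any
    author: str
    note: str

@dataclass
class DesignValueStore:
    """Hypothetical append-only store: old revisions are never overwritten."""
    _history: dict = field(default_factory=dict)

    def commit(self, name, value, author, note=""):
        revisions = self._history.setdefault(name, [])
        revision = Revision(len(revisions) + 1, value, author, note)
        revisions.append(revision)
        return revision

    def latest(self, name):
        return self._history[name][-1]

    def at(self, name, number):
        """Fetch a specific revision; numbering starts at 1."""
        return self._history[name][number - 1]

store = DesignValueStore()
# Scalar performance target, revised once (values invented for illustration).
store.commit("compressor.target_efficiency", 0.78, "engineer_a", "initial target")
store.commit("compressor.target_efficiency", 0.80, "engineer_b", "raised after review")
# A two-dimensional map stored under the same scheme.
store.commit("compressor.map", {"speed_rpm": [90000, 120000],
                                "pressure_ratio": [[1.8, 2.1], [2.4, 2.9]]},
             "engineer_a", "first simulation output")

print(store.latest("compressor.target_efficiency"))
print(store.at("compressor.target_efficiency", 1).value)
```

The point of the design is that nobody ever has to ask which spreadsheet holds the current number: the latest revision is the answer, and every earlier value remains traceable to an author and a reason.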

In the end, we want to see engineering teams move beyond the pain of e-mail ping-pong with spreadsheets and presentations, stop scrawling performance-differentiating IP over paper drawings that are destined for the shredder, and start letting the computers handle the busywork.