ViKiNG, the hiking robot, can plan its journey using overhead maps - even if they're inaccurate

Using satellite imagery or road schematic maps as "side information," the ViKiNG robot can plan its own miles-long route to a goal — measurably outperforming its strongest competitors, even when its side information is inaccurate or outdated.

ViKiNG, pictured in full regalia, uses a combination of direct computer vision control and "hints" via side information to navigate long distances.

Autonomous robots, from wheeled road vehicles to drones, have the potential to transform the provision of services in urban and rural regions alike — but only if they’re actually capable of finding their way to a given destination without human interaction.

It’s this problem that ViKiNG, the creation of Dhruv Shah and Sergey Levine of the University of California at Berkeley, aims to solve — by allowing an artificially intelligent robot to make use of geographic hints, including relatively low-accuracy roadmaps and outdated satellite imagery, in the same way as a human might do.

Learning-based approach

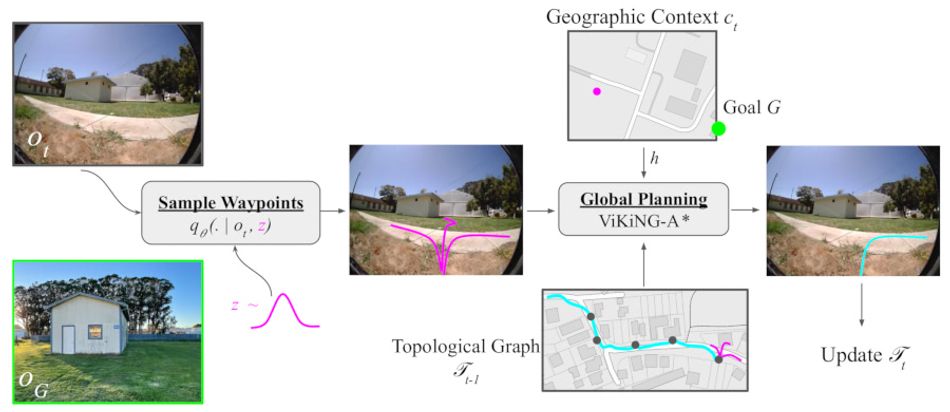

Long-range navigation, on the order of miles rather than feet, remains a considerable challenge in the field of machine learning. ViKiNG, a backronym for “Vision-based Kilometer-scale Navigation with Geographic Hints,” attempts to solve the problem by combining short-range vision-based navigation and longer-distance route planning using what its creators call “side information.”

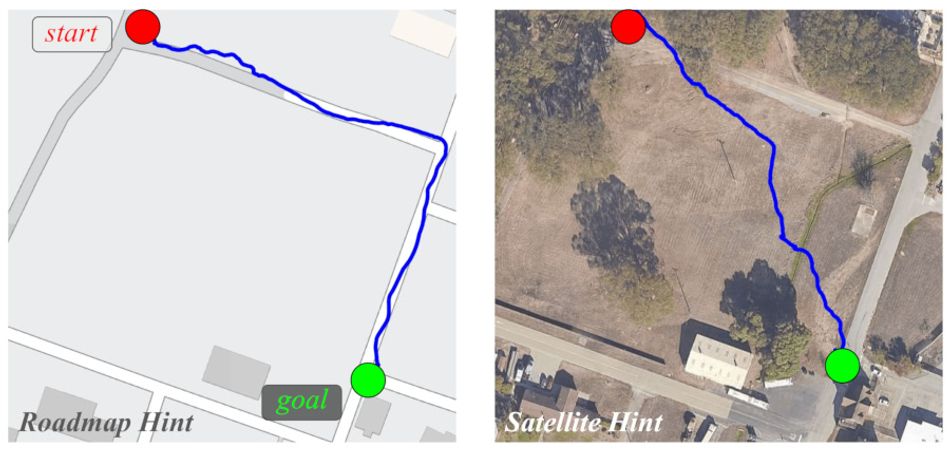

This “side information” can take several forms: In testing, the team used satellite imagery and low-detail road maps, the sort of thing a human navigator might use to plan a trip. Unlike rival systems, though, ViKiNG performs no geographic reconstruction: It relies entirely on a learned model that combines inference and heuristics.

“When humans navigate new environments,” the team explains, “they make use of both geographic knowledge, obtained from overhead maps or other cues, and learned patterns. [But] humans don’t require maps or auxiliary signals to be very accurate: A person can navigate a neighborhood using a schematic that roughly indicates streets and houses, and reach a house marked on it.”

The system’s developers sought to replicate that ability in a machine learning system: one that can navigate over long distances with zero prior experience of the target environment, treating possibly outdated side information (such as inaccurate maps or old satellite imagery) as a hint that supplements direct control by a computer vision navigation system.

Traversability

Where a traditional navigation system concerns itself with producing a geometric map of surroundings, routes, and goals, ViKiNG opts instead for a prediction of traversability — how easy the robot is likely to find a given route. Impressively, ViKiNG does this for entirely novel environments: The team trained the system on a dataset made up of 30 hours of navigation data from an office park environment augmented with a further 12 hours of teleoperated driving data from sidewalks, hiking trails, and parks — but with no individual journey lasting more than 260 feet.

This dataset trained a deep neural network capable of navigating for nearly two miles — breaking journeys down into a series of sub-goals — across wholly unfamiliar environments towards a goal indicated as a simple photograph and rough GPS coordinates.
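As a rough illustration of how a map-derived hint can steer a search over sub-goals, here is a minimal Python sketch. It is not the authors' implementation: ViKiNG relies on learned models, whereas this example assumes a hand-written graph of candidate sub-goals with made-up traversal costs, and stands in for the learned hint with straight-line distance computed from rough coordinates. The names `plan_subgoals`, `positions`, `graph`, and `hint` are hypothetical.

```python
import heapq
import math


def plan_subgoals(graph, start, goal, hint):
    """A*-style search over sub-goals.

    graph[node] maps each neighbor to an assumed local traversal cost;
    hint(node) returns a rough estimate of the remaining cost to the goal.
    """
    frontier = [(hint(start), 0.0, start, [start])]
    best = {start: 0.0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        if cost > best.get(node, math.inf):
            continue  # a cheaper way to this node was already found
        for nxt, step in graph.get(node, {}).items():
            new_cost = cost + step
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                heapq.heappush(
                    frontier, (new_cost + hint(nxt), new_cost, nxt, path + [nxt])
                )
    return None


# Hypothetical layout: a sidewalk detour and a shorter cut across a meadow.
positions = {"start": (0, 0), "sidewalk": (0, 10), "meadow": (5, 5), "goal": (10, 10)}
graph = {
    "start": {"sidewalk": 10.0, "meadow": 7.1},
    "sidewalk": {"goal": 10.0},
    "meadow": {"goal": 7.1},
    "goal": {},
}


def hint(node):
    # Stand-in for a learned, map-based estimate: straight-line distance.
    return math.dist(positions[node], positions["goal"])


route = plan_subgoals(graph, "start", "goal", hint)
print(route)
```

The hint only biases which sub-goal is explored first; the accumulated local costs still decide the final route, which is one way a planner could tolerate an imperfect hint.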

To test the system, the pair deployed the network on a Clearpath Jackal uncrewed ground vehicle equipped with a front-facing RGB camera, on-board GPS receiver, and an NVIDIA Jetson TX2 edge-AI computer system with cellular hotspot for monitoring. Its performance proved impressive: The deployed ViKiNG successfully navigated to various goals in a very human-like fashion, sticking to sidewalks, avoiding buildings, and backtracking where its physical searches led to a dead end.

“The robot learns to stay on trails where possible,” the team notes of the results when the robot was deployed along a hiking route. “This behavior is emergent from the data: there is no other mechanism that encourages staying on the trails, and in several cases a straight-line path between the goal waypoints would not stay on the trail.”

Leading performance

The team found that ViKiNG was able to outperform all prior approaches for long-distance navigation, in some instances completing navigation challenges which no other method could finish. Better still, it proved able to do so even in the face of low-detail or outdated side information.

In one experiment, the ViKiNG robot was given a schematic roadmap and told to reach a goal, which it did by following the sidewalk at the edge of the road; when switched to higher-detail satellite imagery, the robot opted to leave the sidewalk and cut across a meadow, having correctly predicted its ability to traverse the region and cut a significant chunk off the journey distance.

When faced with outdated side information, ViKiNG also showed flexibility: In another experiment, the robot was given satellite imagery which did not include a freshly-parked truck blocking the primary route. When the vision-based control system found the truck in the way, the robot automatically avoided the obstacle and found a new path; the same behavior was also noted when the overhead imagery was provided with a fixed three-mile offset.
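The replanning behavior described here can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not ViKiNG's method: the outdated map is modeled as a small hand-written graph, the robot's camera is replaced by a set of edges known to be blocked in reality, and ordinary Dijkstra search stands in for the learned planner. The names `shortest_path`, `navigate`, `map_graph`, and `blocked` are hypothetical.

```python
import heapq


def shortest_path(graph, start, goal):
    """Plain Dijkstra over graph[node] -> {neighbor: cost}; returns a node list or None."""
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for nxt, step in graph.get(node, {}).items():
            if nxt not in visited:
                heapq.heappush(frontier, (cost + step, nxt, path + [nxt]))
    return None


def navigate(map_graph, start, goal, blocked_in_reality):
    """Follow the planned route; when the next edge is blocked, drop it and replan."""
    # Work on a copy so the caller's (outdated) map is left untouched.
    graph = {node: dict(nbrs) for node, nbrs in map_graph.items()}
    position, travelled = start, [start]
    while position != goal:
        route = shortest_path(graph, position, goal)
        if route is None:
            return None  # no remaining way to reach the goal
        nxt = route[1]
        if (position, nxt) in blocked_in_reality:
            del graph[position][nxt]  # the map was wrong: forget this edge
            continue
        position = nxt
        travelled.append(position)
    return travelled


# Hypothetical map: the road looks fastest, but a truck blocks its last stretch.
map_graph = {
    "start": {"road": 1.0, "path": 3.0},
    "road": {"start": 1.0, "goal": 1.0},
    "path": {"start": 3.0, "goal": 3.0},
    "goal": {},
}
blocked = {("road", "goal")}

travelled = navigate(map_graph, "start", "goal", blocked)
print(travelled)
```

In this toy run the robot commits to the road, discovers the blockage only on arrival, backtracks, and takes the longer path, which mirrors the article's point that the map is treated as a hint rather than as ground truth.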

“Effectively leveraging a small amount of geographic knowledge in a learning-based framework can provide strong regularities that enable robots to navigate to distant goals,” the researchers conclude. “While we only use satellite images in our experiments, an exciting avenue for future work is to explore how such a system could use other information sources, including schematics, paper maps, or textual instructions.”

The team’s work has been made available under open-access terms on Cornell’s arXiv preprint server, with more information to be found on the project website.

Reference

Dhruv Shah and Sergey Levine: ViKiNG: Vision-Based Kilometer-Scale Navigation with Geographic Hints, arXiv. DOI arXiv:2202.11271 [cs.RO].