A 3D-printed thumb with a camera at its base proves the potential of the Insight deep-learning robotic haptic feedback system

Costing under $100 in parts to produce, the Insight prototype can create a point cloud of multiple touch locations with enough sensitivity to infer the alignment of its "thumb" relative to the Earth's gravity.

Designed to mimic the appearance of the human thumb, and boasting a highly-sensitive "fovea" at the nail, this low-cost approach to electronic skin shows promise.

Fictional robots in popular media tend towards polarization of functionality: At one end are metal-limbed skull-crushing machines like Robocop’s ED-209 or Judge Dredd’s ABC Warriors, and at the other fully-flexible hugging machines like Baymax from Big Hero 6. Outside of fiction, the bulk of the robot population sits far closer to the hard, metal-limbed end of the spectrum, but a team of researchers believes its easily-produced low-cost sensing system could change that.

Designed to offer future robotics systems a sense of touch as close to a human’s as possible, the Insight system developed at the Max Planck Institute for Intelligent Systems houses a low-cost off-the-shelf camera and an RGB LED ring in a 3D-printed thumb. A deep-learning computer vision system then works out where the thumb is being touched and how hard, with enough sensitivity to pick up the pull of the Earth’s gravity.

Insight in a thumb

The experimental proof for the project, the work of Huanbo Sun, Katherine J. Kuchenbecker, and Georg Martius, is a full-scale but non-functional mimic of a human thumb. It is built by 3D printing a hollow “skeleton” structure in aluminum, then using 3D-printed molds to apply an opaque yet reflective flexible elastomer “skin” over the top, creating what the researchers describe as a “soft-stiff hybrid structure.”

At the base of the thumb, which is not capable of movement, is a low-cost off-the-shelf camera module connected to a Raspberry Pi single-board computer. The camera captures a live view of the inside of the thumb, as lit by a low-cost ring of individually-addressable RGB LEDs and a 3D-printed collimator — producing images that, while attractive, show little of use to a casual observer.

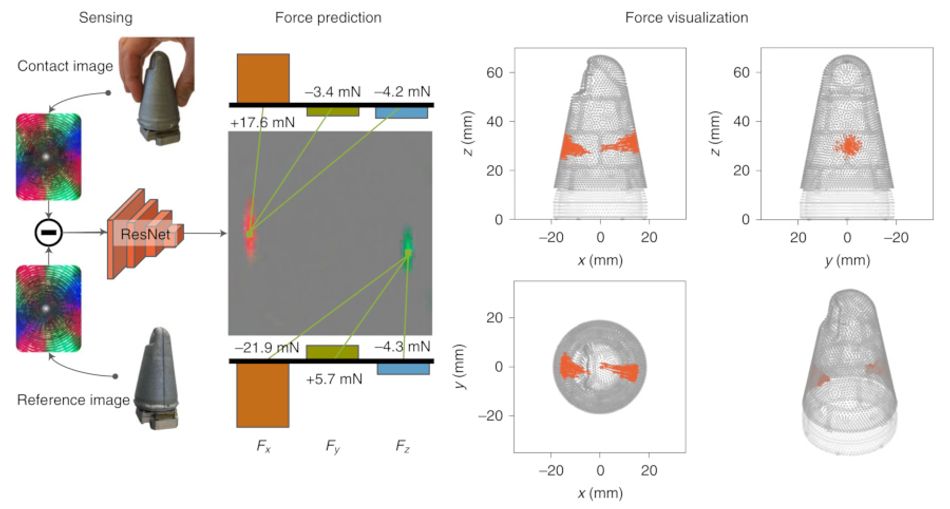

To a machine, though, the colorful images reveal plenty. The Insight device works using a combination of photometric stereo and structured light approaches for producing three-dimensional data from two-dimensional single-camera imagery, something described by its creators as “unique among haptic sensors.” By analyzing the light, it’s possible to create a point cloud representing not only the location of a touch contact in 3D space but also its force and direction, and experimentation showed it does so with impressive accuracy.
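For readers unfamiliar with photometric stereo, the textbook version of the idea can be sketched in a few lines. This is not Insight's own pipeline, which hands the imagery to a neural network rather than solving anything in closed form. It is a minimal illustration that assumes an ideal matte (Lambertian) surface and three known light directions; the function names and example values are made up for the sketch.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def solve3(m, v):
    """Solve the 3x3 linear system m @ x = v using Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    x = []
    for col in range(3):
        # Replace one column of m with v, then take the determinant ratio.
        replaced = [[v[r] if c == col else m[r][c] for c in range(3)]
                    for r in range(3)]
        x.append(det3(replaced) / d)
    return x

def surface_normal(light_dirs, intensities):
    """Recover (albedo, unit normal) at one pixel.

    Assumes the Lambertian model: intensity = albedo * dot(light, normal).
    """
    g = solve3(light_dirs, intensities)  # g equals albedo * normal
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        raise ValueError("pixel is unlit under all three lights")
    return albedo, [c / albedo for c in g]

# Hypothetical example: three unit light directions and the brightness
# one pixel shows under each of them.
lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
albedo, normal = surface_normal(lights, [0.8, 0.64, 0.64])
```

With the example values above, the recovered normal points straight along the z axis, meaning that patch of surface faces the camera head-on. A press on the skin would tilt the normals around the contact, which is the kind of cue a shading-based method can pick up.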

The parts, meanwhile, are surprisingly low in cost: Glossing over the price of the 3D printers required to produce the mold and skeleton, the materials and parts for a single thumb-sized Insight sensor are available for under $100.

A touch of deep learning

The secret behind Insight’s performance: An adaptation of the ResNet deep convolutional neural network (CNN), capable of taking the imagery as an input and producing the point cloud as its output — based purely on how the light is viewed within the 3D-printed thumb.

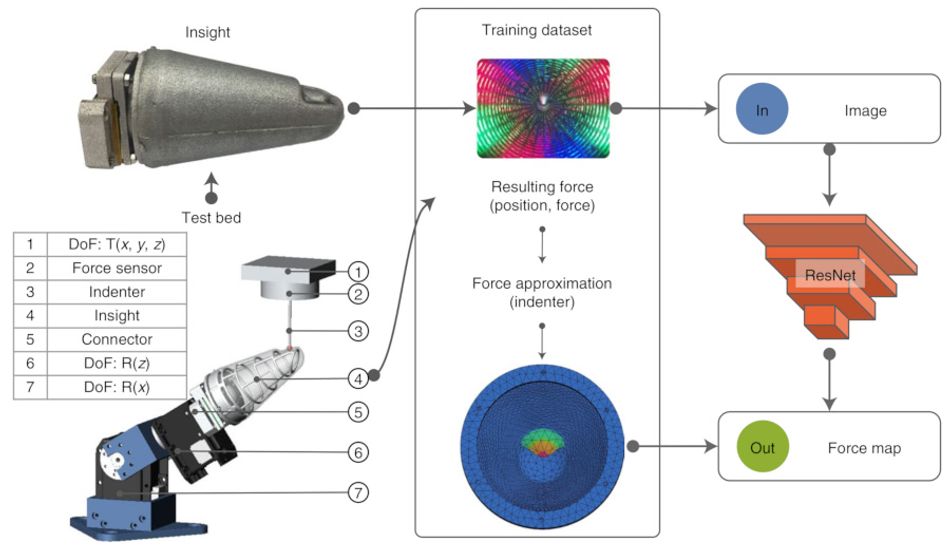

With no readily-available datasets for training, however, Sun and colleagues needed to make their own. The solution: A custom test bed combining a computer-controlled positioner with five degrees of freedom (DoF) and a six-DoF force-torque sensor, capable of prodding and poking the thumb from almost any angle while capturing the ground-truth position and force alongside the internal camera’s imagery.
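To make the idea of a ground-truth sample concrete, here is a rough sketch of what one test bed record might look like in code. The `ContactSample` class, its field names, and the six-element target layout are all hypothetical, chosen for illustration; the published dataset may be organized quite differently.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass(frozen=True)
class ContactSample:
    """One hypothetical record: a camera frame plus its ground truth."""
    image_path: str    # frame captured by the camera inside the thumb
    position_mm: Vec3  # contact location reported by the positioner
    force_n: Vec3      # force vector reported by the force-torque sensor

    @property
    def force_magnitude_n(self) -> float:
        """Length of the force vector in newtons."""
        return math.sqrt(sum(c * c for c in self.force_n))

def to_target(sample: ContactSample) -> List[float]:
    """Flatten the ground truth into one regression target vector.

    The position-then-force ordering is an assumption for this sketch.
    """
    return [*sample.position_mm, *sample.force_n]

# Hypothetical values, not taken from the real dataset.
sample = ContactSample(
    image_path="frame_000001.png",
    position_mm=(1.5, -2.0, 12.0),
    force_n=(0.0, 0.3, -0.4),
)
target = to_target(sample)
```

Pairing each image with a target like this is what lets a network learn the mapping from what the camera sees to what the finger feels.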

The customized ResNet structure was trained on the test bed dataset, comprising over 187,000 samples at 3,800 randomly-selected initial contact locations, plus a further 16,000 readings with the thumb itself in differing positions, affected purely by gravity. Four ResNet blocks are used for estimation of contact position and amplitude, two for estimation of the force distribution map, and four for estimating the sensor posture.

The proof is in the prodding

The results are unarguably impressive. Overall, the Insight sensor offers a spatial resolution down to 0.4 mm (under 0.016"), a force magnitude accuracy of around 0.03 N, and a direction accuracy of around five degrees over a force range of 0.03 to 2 N, and it can track up to five contact points simultaneously. Peak performance, however, is limited to the “thumbnail” area, which is designed for more than decoration: The elastomer layer is thinner here than in the rest of the thumb, boosting sensitivity compared to the thicker but more robust “skin” layer.
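Figures like these come from comparing a predicted force vector against the one measured by a reference sensor. The sketch below shows one common way to compute magnitude and direction errors. It is an assumption about how such metrics are typically defined, not the researchers' evaluation code, and the example vectors are invented.

```python
import math

def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(c * c for c in v))

def magnitude_error_n(pred, truth):
    """Absolute difference in force magnitude, in newtons."""
    return abs(norm(pred) - norm(truth))

def direction_error_deg(pred, truth):
    """Angle between predicted and true force vectors, in degrees."""
    cos_angle = sum(p * t for p, t in zip(pred, truth)) / (norm(pred) * norm(truth))
    # Clamp to guard against rounding pushing the value outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

# Hypothetical 1 N push along z, predicted with a slight sideways lean.
truth = (0.0, 0.0, 1.0)
pred = (0.0, 0.05, 1.0)

mag_err = magnitude_error_n(pred, truth)
dir_err = direction_error_deg(pred, truth)
```

In this made-up case the prediction leans about three degrees off the true direction and is off in magnitude by roughly a thousandth of a newton, so it would sit inside both of the accuracy figures quoted above.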

The Insight system’s overall sensitivity, meanwhile, is high enough to estimate the posture of the sensor by tracking its own weight as acted upon by the Earth’s gravity. Invisible to the human eye, the tiny deformations of the soft outer material change how the light from the LEDs reaches the internal camera, which is enough to estimate orientation to an accuracy of around two degrees.

The team says the Insight approach isn’t specific to replica thumbs: The core design concept can be applied to a variety of robot body part shapes and purposes, while the machine-learning approach is general enough to apply to other sensor designs.

The work has been published in the journal Nature Machine Intelligence under open-access terms, with raw image and contact information data plus Python source code published to the Edmond system under a permissive open-source license.

References

Huanbo Sun, Katherine J. Kuchenbecker, and Georg Martius: A soft thumb-sized vision-based sensor with accurate all-round force perception, Nature Machine Intelligence 4. DOI 10.1038/s42256-021-00439-3.

Huanbo Sun, Katherine J. Kuchenbecker, and Georg Martius: Insight: a Haptic Sensor Powered by Vision and Machine Learning, Edmond. DOI 10.17617/3.6c.
