Enhancing Quality Control with Machine Vision Systems

Transforming manufacturing processes through advanced inspection

Image credit: Fraunhofer IGD

Introduction

A Machine Vision System is a technology that uses cameras and computer algorithms to perform inspections and measurements automatically, typically for quality control and automation in manufacturing environments.

Machine Vision Systems (MVS) have become a mainstay of quality control, transforming production processes across industries. These systems automate inspection tasks, detect anomalies, and help enforce high quality standards in manufactured goods. They offer improved accuracy, increased speed, and enhanced efficiency. They reduce human error and waste and can deliver substantial cost savings, all while keeping quality control consistent.

Recent developments in MVS technology reflect a significant increase in deployment across diverse production processes. This trend can be attributed to these systems' ability to adapt, learn, and process complex data quickly. Whether in the electronics industry for inspecting PCBs, the pharmaceutical industry for pill verification, or the automotive industry for part assembly verification, MVS has become integral to ensuring quality and maintaining competitiveness in today's fast-paced manufacturing landscape.

This article covers MVS capabilities, types, and underlying technology. It delves into how artificial intelligence, deep learning, image processing algorithms, and neural networks bolster these systems, further enabling them to revolutionize quality control. As we look toward the future, exploring emerging trends and advancements will underscore the continued potential of MVS in shaping various economic sectors.

Technology Behind Machine Vision Systems

Machine Vision Systems (MVS) harness several advanced technologies to perform effective quality control. The fundamental components include image processing algorithms, artificial intelligence (AI), deep learning, and neural networks. These technologies work together to process and interpret visual data, allowing MVS to inspect, measure, and identify anomalies effectively.

Image processing algorithms

Image processing algorithms serve as the main component in MVS, converting raw visual data into meaningful insights. These algorithms involve a sequence of computational operations that enhance, analyze, and interpret digital images. Standard procedures such as filtering, segmentation, and feature extraction help improve image quality and isolate the elements of interest, laying the foundation for subsequent analysis [1].
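
The three procedures named above can be illustrated with a minimal sketch in plain Python. It is not a production pipeline: the synthetic 6x6 frame, the 3x3 mean filter, the fixed threshold of 120, and the function names are all assumptions chosen for illustration, and a real system would typically rely on an optimized imaging library.

```python
def mean_filter(img):
    """Filtering: 3x3 mean filter; edge pixels average the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def threshold(img, level):
    """Segmentation: mark pixels brighter than `level` as foreground (1)."""
    return [[1 if p > level else 0 for p in row] for row in img]


def blob_features(mask):
    """Feature extraction: area and bounding box (x0, y0, x1, y1) of the foreground."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return {"area": 0, "bbox": None}
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return {"area": len(coords), "bbox": (min(xs), min(ys), max(xs), max(ys))}


# Synthetic 6x6 frame: dark background, one bright 3x3 part, one noisy pixel.
frame = [[0] * 6 for _ in range(6)]
for y in range(1, 4):
    for x in range(1, 4):
        frame[y][x] = 200
frame[5][5] = 200  # isolated sensor noise

features = blob_features(threshold(mean_filter(frame), level=120))
print(features)  # {'area': 5, 'bbox': (1, 1, 3, 3)}
```

Without the filtering step, the noisy corner pixel would survive thresholding and stretch the bounding box across the frame. The price is that smoothing also erodes the part's corners, which is why filter size and threshold are usually tuned together.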

Artificial Intelligence, Deep learning, Neural networks

The subsequent stages of interpretation and decision-making are powered by AI and two of its subsets, deep learning and neural networks. AI enables MVS to mimic human intelligence, identify patterns, learn from experience, and make predictions [2].

Deep learning, a subdomain of AI, goes further by utilizing neural networks (algorithms inspired by the human brain's structure and function) to carry out complex tasks [3]. For example, convolutional neural networks (CNNs), a specific type of neural network, have proven highly effective in analyzing visual data. With suitable training data, CNNs can learn to identify patterns that vary in size, orientation, and position within an image, enabling the automated detection of defects in manufactured products [4].
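
To make the building blocks of a CNN concrete, the sketch below applies a single convolution, a ReLU activation, and a max-pooling step to a tiny image. It is only an illustration: the kernel here is a hand-picked vertical-edge detector, whereas a trained CNN learns its kernel weights from data, and the function names are assumptions.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[y + j][x + i] * kernel[j][i]
                 for j in range(kh) for i in range(kw))
             for x in range(out_w)]
            for y in range(out_h)]


def relu(fmap):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; tolerates small shifts of a feature."""
    return [[max(fmap[y + j][x + i] for j in range(size) for i in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]


# 6x6 image with a dark left half and a bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-picked vertical-edge kernel (assumption; real CNN kernels are learned).
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

feature_map = relu(conv2d(image, edge_kernel))
pooled = max_pool(feature_map)
print(feature_map[0])  # [0, 3, 3, 0]
print(pooled)          # [[3, 3], [3, 3]]
```

The feature map responds only where the window straddles the edge, and pooling summarizes that response at a coarser resolution. Stacking many such layers with learned kernels is what lets a CNN respond to defect patterns rather than simple edges.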

In essence, the union of these technologies allows MVS to achieve high accuracy. Image processing algorithms provide a solid foundation, while AI, deep learning, and neural networks equip these systems with a dynamic, evolving capability that can adapt and improve over time. Thus, the collaboration of these technologies is propelling MVS to the forefront of quality control solutions and shaping the future of manufacturing and production processes [5].

Types of Machine Vision Systems for Quality Control

Machine Vision Systems (MVS) for quality control are generally categorized into three main types: 1D, 2D, and 3D vision systems, each with unique features, benefits, limitations, and applications that cater to different industry needs.

1D Vision Systems play a vital role in the packaging industry, assisting in barcode reading for product identification, inventory management, and tracking (two-dimensional symbols such as QR codes generally call for a 2D imager). For example, in beverage production lines, these systems scan barcodes on moving products and assess their legibility, preventing defective goods from reaching the consumer [6].
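
One simple check that can follow a barcode scan is check-digit validation, which catches many misreads. The sketch below assumes the EAN-13 symbology, which the article does not specify, and the function names are illustrative; it covers only the checksum, not the optical grading of print quality.

```python
def ean13_check_digit(first12: str) -> int:
    """Compute the EAN-13 check digit from the first 12 digits."""
    if len(first12) != 12 or not (first12.isascii() and first12.isdigit()):
        raise ValueError("expected exactly 12 ASCII digits")
    # Weights alternate 1, 3, 1, 3, ... starting from the leftmost digit.
    total = sum(int(d) * (3 if i % 2 else 1) for i, d in enumerate(first12))
    return (10 - total % 10) % 10


def is_valid_ean13(code: str) -> bool:
    """True if `code` is 13 digits and its last digit matches the checksum."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    return ean13_check_digit(code[:12]) == int(code[12])


print(is_valid_ean13("4006381333931"))  # True
print(is_valid_ean13("4006381333391"))  # False (two digits transposed)
```

A line controller could route items whose decoded value fails this check to a reject bin or a rescan station.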

2D Vision Systems are commonly deployed in the automotive manufacturing industry. These systems use high-speed cameras and advanced image processing algorithms to perform critical tasks such as defect detection and part identification. For instance, during the assembly process, a 2D Vision System might inspect and verify whether the correct components have been assembled in the right order and orientation. If a defect or error is detected, the system can signal to halt the production line, thereby preventing faulty units from progressing further in the manufacturing process [7].
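
The decision logic of such an order-and-orientation check could look like the following sketch. It assumes an upstream detector has already produced a list of part names and rotation angles; the `Detection` class, the part names, and the 5 degree tolerance are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    part: str          # label assigned by the (assumed) upstream detector
    angle_deg: float   # measured in-plane rotation of the part


def verify_assembly(expected, detected, angle_tol_deg=5.0):
    """Compare detected parts with the expected sequence; return a list of faults."""
    faults = []
    if len(detected) != len(expected):
        faults.append(f"expected {len(expected)} parts, found {len(detected)}")
    for pos, (exp, det) in enumerate(zip(expected, detected)):
        if det.part != exp.part:
            faults.append(f"position {pos}: expected {exp.part}, found {det.part}")
            continue
        # Smallest angular difference, handling wrap-around at 360 degrees.
        diff = abs((det.angle_deg - exp.angle_deg + 180) % 360 - 180)
        if diff > angle_tol_deg:
            faults.append(f"position {pos}: {det.part} rotated by {diff:.1f} deg")
    return faults


expected = [Detection("bracket", 0), Detection("clip", 90), Detection("bolt", 0)]
seen = [Detection("bracket", 358.5), Detection("clip", 120), Detection("bolt", 0)]

faults = verify_assembly(expected, seen)
halt_line = bool(faults)  # a real system would signal the line controller here
print(faults)     # ['position 1: clip rotated by 30.0 deg']
print(halt_line)  # True
```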

3D Vision Systems capture depth information, enabling accurate measurement of object dimensions and volumetric data [8]. Applications include robotics guidance, surface inspection, and object recognition.
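
As a rough illustration of how depth data turns into dimensional measurements, the sketch below estimates footprint, peak height, and volume from a height map. It assumes the 3D sensor output has already been converted to heights above the conveyor on a regular grid; the pixel pitch, the 0.5 mm noise floor, and the function name are assumptions.

```python
def measure_from_height_map(height_mm, pixel_pitch_mm, min_height_mm=0.5):
    """Estimate footprint, peak height, and volume of an object on a flat surface.

    height_mm: 2D list of heights above the reference plane, one per grid cell.
    pixel_pitch_mm: side length of one grid cell on the reference plane.
    min_height_mm: cells below this height are treated as background noise.
    """
    cell_area = pixel_pitch_mm ** 2
    object_heights = [h for row in height_mm for h in row if h >= min_height_mm]
    return {
        "footprint_mm2": len(object_heights) * cell_area,
        "max_height_mm": max(object_heights, default=0.0),
        # Each cell contributes a column of volume: height x cell area.
        "volume_mm3": sum(object_heights) * cell_area,
    }


# 4x4 height map: a 2x2 block 10 mm tall, with slight noise on the background.
height_map = [
    [0.0, 0.1, 0.0, 0.0],
    [0.0, 10.0, 10.0, 0.0],
    [0.1, 10.0, 10.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

print(measure_from_height_map(height_map, pixel_pitch_mm=0.5))
# {'footprint_mm2': 1.0, 'max_height_mm': 10.0, 'volume_mm3': 10.0}
```

Comparing such measurements with nominal values and tolerances is one way a 3D system can flag under-filled, over-filled, or deformed parts.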

Advancements and current developments

Emerging trends

Large Language Models (LLMs) are one of the most significant developments influencing the future of MVS in quality control processes. These models are part of the broader field of Natural Language Processing (NLP) and can understand, generate, and interpret human language.

The potential applications of LLMs in MVS can be seen in environments where textual or symbolic information needs to be analyzed. For instance, LLMs can be integrated with MVS to analyze the text on product packaging or labels for correctness or adherence to regulations. They can also facilitate the interpretation of complex patterns or symbols that might be difficult for traditional image processing algorithms to handle [10].

LLMs, like GPT-3, trained on extensive datasets, can generate human-like text, offering new possibilities for interpreting and responding to complex patterns in image data [11]. This advancement enhances the diagnostic capabilities of MVS, allowing them to provide detailed insights into quality control issues. It moves beyond mere detection, delivering comprehensive reports and facilitating informed decision-making [12].

Advancements in sensor technology

High Dynamic Range (HDR) sensors can capture high-quality images under different lighting conditions. Traditional sensors can struggle to capture details in very bright and very dark areas within the same scene due to the limited dynamic range - the span between the lightest and darkest elements an image can capture without losing detail.

HDR sensors address this issue by effectively increasing the dynamic range. A common approach is to take multiple images at different exposure levels (bracketing) and combine them into a single image that preserves details in both highlights and shadows. This combining step is known as exposure merging or exposure fusion; tone mapping is the subsequent step that compresses the merged result into the range a display or downstream algorithm can handle. This adaptability is crucial in manufacturing environments where lighting conditions can vary widely, and unpredictability can lead to inspection inaccuracies [13].
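
The idea of combining bracketed exposures can be sketched as a weighted average of per-exposure brightness estimates. This is a simplified illustration, not how any particular HDR sensor works: it assumes a linear sensor response, 8-bit pixel values, and a simple "hat" weighting that trusts mid-range pixels most; all names are hypothetical.

```python
def hat_weight(value, lo=0, hi=255):
    """Trust mid-range pixels most; fully dark or clipped pixels get zero weight."""
    return min(value - lo, hi - value)


def merge_exposures(frames, exposure_times):
    """Merge bracketed frames into one map of relative scene brightness.

    Assumes a linear sensor, so pixel_value / exposure_time estimates brightness.
    """
    shortest = min(range(len(frames)), key=lambda k: exposure_times[k])
    h, w = len(frames[0]), len(frames[0][0])
    merged = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for frame, t in zip(frames, exposure_times):
                v = frame[y][x]
                wgt = hat_weight(v)
                num += wgt * v / t
                den += wgt
            if den:
                merged[y][x] = num / den
            else:
                # Every frame is black or clipped here: fall back to the shortest exposure.
                merged[y][x] = frames[shortest][y][x] / exposure_times[shortest]
    return merged


# One image row seen at two exposure times (arbitrary units).
short_frame = [[1, 10, 200]]    # exposure time 0.25: shadows are nearly black
long_frame = [[16, 160, 255]]   # exposure time 4.0: the highlight is clipped at 255

print(merge_exposures([short_frame, long_frame], [0.25, 4.0]))
# [[4.0, 40.0, 800.0]]
```

The merged row spans a 200:1 brightness ratio that neither frame captures cleanly on its own: the long exposure clips the brightest pixel, while the short exposure records the darkest pixel with only a single count.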

The introduction of 3D sensors allows MVS to construct three-dimensional representations of objects, enabling detailed inspections and precise defect detection [14]. The accuracy achieved with these developments could remove the need for final human inspection of products, thereby optimizing the quality control process further.

Integration of machine vision systems with robotics

The integration of MVS with robotics is redefining the parameters of automated quality control. This enables real-time inspections, where robots equipped with MVS can scan products on the production line, identify defects, and even rectify issues without halting the production process [15].

The collaboration of MVS and robotics is more effective with the introduction of collaborative robots, or 'cobots' [16]. A cobot equipped with high-resolution imaging and sophisticated image processing algorithms can accurately identify minute defects that may be overlooked by human inspectors due to fatigue or human error [17].

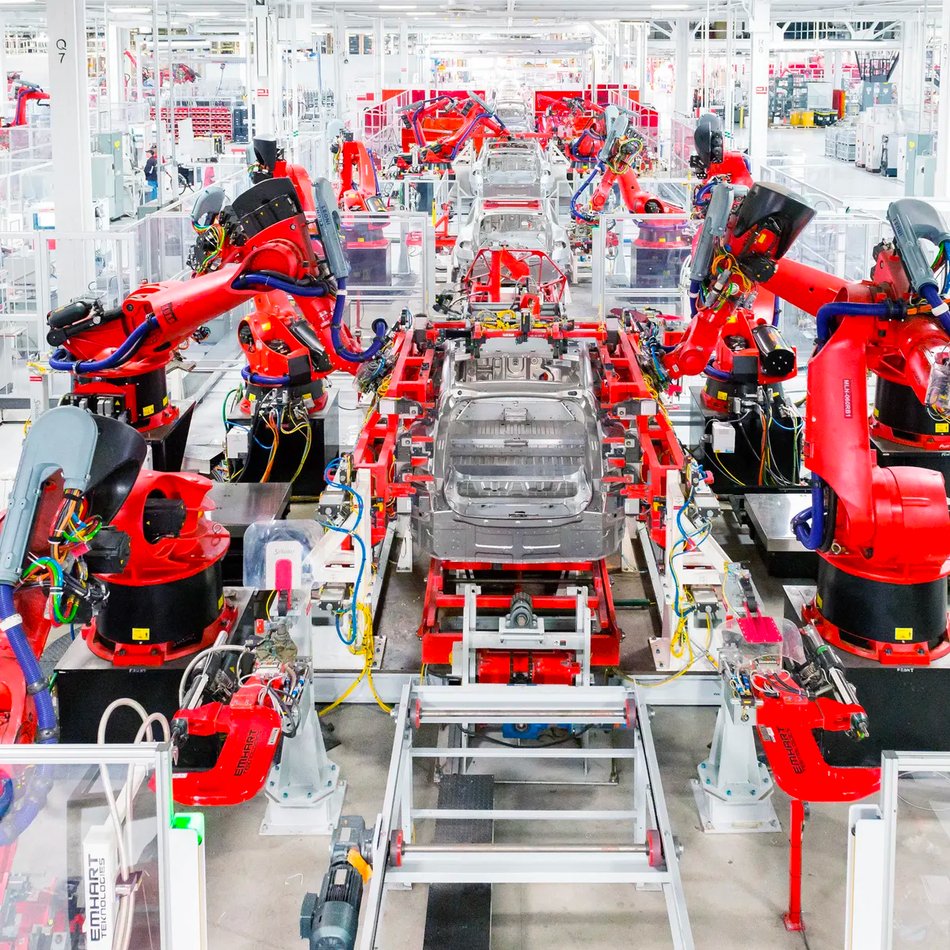

Tesla is leveraging MVS and Artificial Intelligence (AI) to accelerate and optimize its production process. Tesla's production facilities have shifted significantly toward automation, implementing advanced MVS for quality control. These systems use high-resolution cameras and image-processing algorithms to scrutinize vehicles in real time during assembly [18]. They inspect body panel alignment, paint quality, and other visual parameters, identifying defects with accuracy beyond human capabilities. Detected irregularities are corrected manually or automatically, ensuring each Tesla vehicle adheres to stringent quality standards [19].

Conclusion

Machine Vision Systems (MVS) are pivotal in enhancing quality control in manufacturing and production processes. Their ability to process visual data quickly and precisely makes them indispensable in modern industrial settings. The evolving technology driving these systems, including image processing algorithms and Artificial Intelligence (AI), enhances their functionality and capabilities.

These transformative technologies are evolving rapidly, with advancements such as improved sensor technology and integration with robotics propelling the sector forward. Companies like Tesla are a testament to the large-scale potential of MVS and AI integration, indicating a future where such systems are ubiquitous in manufacturing facilities. As we look towards this future, it's evident that MVS will continue to drive quality improvements and operational efficiency in manufacturing, shaping the industry for years to come.

About the author: Deval Shah is a Machine Learning Engineer with 5+ years of experience in developing ML-based software for video surveillance cameras. He enjoys implementing research ideas and incorporating them into practical applications and advocates for highly readable and self-contained code.

This article was prepared by Wevolver on behalf of Mouser. Read more about the impact of Machine Vision Systems at Wevolver.com.

References

[1]. Gonzalez, R. C., & Woods, R. E. (2018). Digital image processing (4th ed.). Pearson Education, Inc.

[2]. Russell, S., & Norvig, P. (2016). Artificial Intelligence: A Modern Approach (3rd ed.). Prentice Hall.

[3]. Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

[4]. Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Communications of the ACM, 60(6), 84-90.

[5]. Klette, R. (2014). Concise Computer Vision. Springer.

[6]. Kumar, A., & Zhang, D. (2012). Bar Code Recognition for Fast Moving Consumer Goods: An Application to the Retail Industry. Proceedings of the IEEE International Conference on Automation and Logistics, Chengdu, China.

[7]. Heikkilä, T., & Kälviäinen, H. (2005). A vision system for on-line quality control in the automotive industry. Machine Vision and Applications, 16(2), 106-113.

[8]. Pahlevani, F., Kovacevic, R., & Milosavljevic, M. (2021). 3D Machine Vision in Quality Control: Challenges and Opportunities. Robotics and Computer-Integrated Manufacturing, 68, 102056.

[9]. Malik, H., & Choi, T. S. (2016). Machine vision system: An industrial inspection tool for quality control. Journal of Industrial and Production Engineering, 33(5), 318-329.

[10]. Zuehlke, D. (2010). SmartFactory—Towards a factory-of-things. Annual Reviews in Control, 34(1), 129-138.

[11]. Deng, J. et al. (2022). Natural Language Processing in Quality Control: A Review. Journal of Manufacturing Systems, 61, 102-114.

[12]. Kusiak, A. (2018). Smart manufacturing. International Journal of Production Research, 56(1-2), 508-517.

[13]. Sjöberg, J., & Ringaby, E. (2021). High dynamic range imaging for machine vision systems. Optical Engineering, 60(3), 031113.

[14]. Liang, X., et al. (2022). In-depth Analysis of 3D Sensor Technology in Machine Vision Systems. Journal of Imaging Science and Technology, 66(2), 020501.

[15]. Wang, L., Törngren, M., & Onori, M. (2015). Current status and advancement of cyber-physical systems in manufacturing. Journal of Manufacturing Systems, 37, 517-527.

[16]. Foisel, P., & Westkämper, E. (2009). Robots for Quality Control in Manufacturing. In International Conference on Changeable, Agile, Reconfigurable and Virtual Production (CARV), 1, 380-385.

[17]. Perzylo, A., Somani, N., Rickert, M., & Knoll, A. (2016). An ontology for CAD data and geometric constraints as a link between product models and semantic robot task descriptions. In IEEE/RSJ International Conference on Intelligent Robots and Systems, 4196-4203.

[18]. Kalra, N., & Paddock, S. M. (2016). Driving to safety: How many miles would it take to demonstrate autonomous vehicle reliability? Transportation Research Part A: Policy and Practice, 94, 182-193.

[19]. Tesla. (2021). Impact Report 2020. Tesla, Inc.

[20]. Bojarski, M., Del Testa, D., Dworakowski, D., Firner, B., Flepp, B., Goyal, P., ... & Zhang, X. (2016). End-to-end learning for self-driving cars. arXiv preprint arXiv:1604.07316.