Hearing Beyond Human Limits: Infineon's XENSIV™ MEMS Microphones Fuel Next-Gen AI

The evolution of MEMS microphones marks a significant leap towards a future of seamless human-to-machine interaction, ensuring that AI can truly listen - and one day even "understand" - beyond our auditory limits.

Artificial intelligence (AI) is now part of our daily routines, from virtual assistants in our living rooms to complex automotive systems on the road. Yet, to truly understand and interact with us, AI must excel at one of our most fundamental forms of communication: the human voice.

Voice isn’t just about spoken words; it carries emotion, context, and intent. If AI systems are to understand human communication with all its facets and respond naturally—whether that’s a home speaker detecting frustration or an in-car assistant distinguishing highway noise from a soft command—they need microphones that capture sound with exceptional clarity.


That’s precisely where Infineon Technologies comes in. Each new generation of its XENSIV™ MEMS microphones pushes the boundary of what AI can hear, recording whispers and thunderous roars alike with the highest precision and minimal distortion and noise.

The impact of voice AI, enabled by XENSIV™ MEMS microphones, goes far beyond convenience: in healthcare, more sensitive microphones boost hearing-aid performance; in industrial IoT, they pick up early warning signs of mechanical failures through sound anomalies; and in enterprise collaboration, they capture emotional voice nuances that transform basic call transcriptions into genuinely insightful interaction reports.

In all of these applications, the evolution of XENSIV™ MEMS microphones marks a significant leap towards a future of seamless human-to-machine interaction, ensuring that AI can truly listen — and one day even “understand” — beyond our auditory limits.

Technical Foundations: Why are high-SNR / low-ENL MEMS Microphones crucial for all these applications?

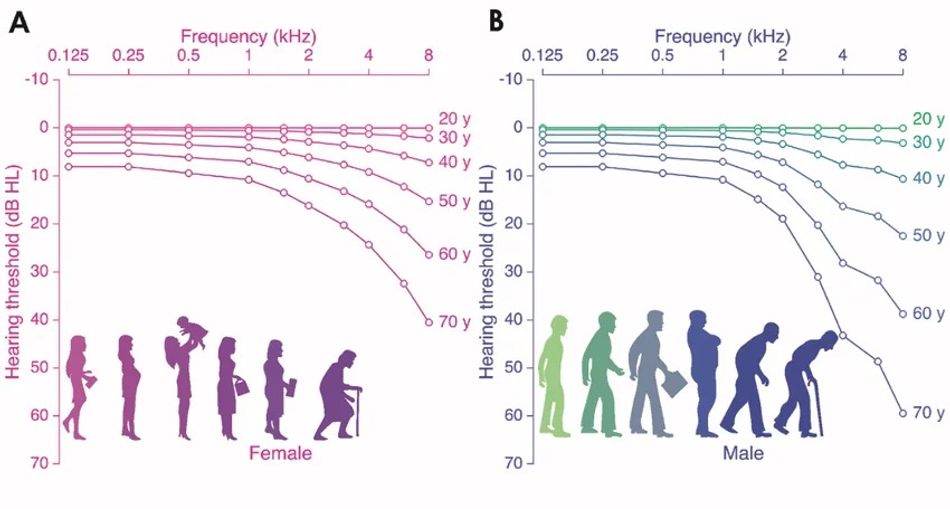

Human Hearing vs. Microphone Performance: To understand why a high signal-to-noise ratio (SNR) is a key requirement for microphones in the AI-enabled applications above, it is important to understand human hearing first. Human hearing is remarkably sensitive. By convention, 0 dB sound pressure level (SPL) is the threshold of hearing for a young, healthy listener at a sound frequency of 1 kHz – corresponding to a minute pressure of about 20 μPa. This means that the softest sound a typical human ear can detect at this frequency causes a pressure variation of just 20 micropascals in the air.
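The link between a level in dB SPL and the underlying pressure can be sketched in a few lines. This is a minimal illustration using the 20 μPa reference quoted above; the function names are illustrative, not part of any library.

```python
import math

P_REF_PA = 20e-6  # reference pressure: 20 µPa, the 0 dB SPL point


def spl_to_pressure_pa(spl_db: float) -> float:
    """RMS sound pressure in pascals for a level given in dB SPL."""
    return P_REF_PA * 10 ** (spl_db / 20)


def pressure_pa_to_spl(pressure_pa: float) -> float:
    """Level in dB SPL for an RMS sound pressure given in pascals."""
    return 20 * math.log10(pressure_pa / P_REF_PA)


# A few example levels, from the hearing threshold up to 94 dB SPL (about 1 Pa).
for level_db in (0, 20, 60, 94):
    print(f"{level_db:>3} dB SPL -> {spl_to_pressure_pa(level_db):.6f} Pa")
```

Every 20 dB step corresponds to a tenfold change in pressure, which is why a span from a whisper to a very loud sound covers several orders of magnitude in pascals.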

Our ears are most sensitive in the mid-frequency range (~500 Hz to 6 kHz), which is critical for understanding speech (especially around 2 kHz).

At very low or very high frequencies, and as we age, the hearing threshold rises – meaning sounds must be louder for us to detect them. Age-related hearing loss (presbycusis) can significantly reduce sensitivity at higher frequencies over a lifetime.

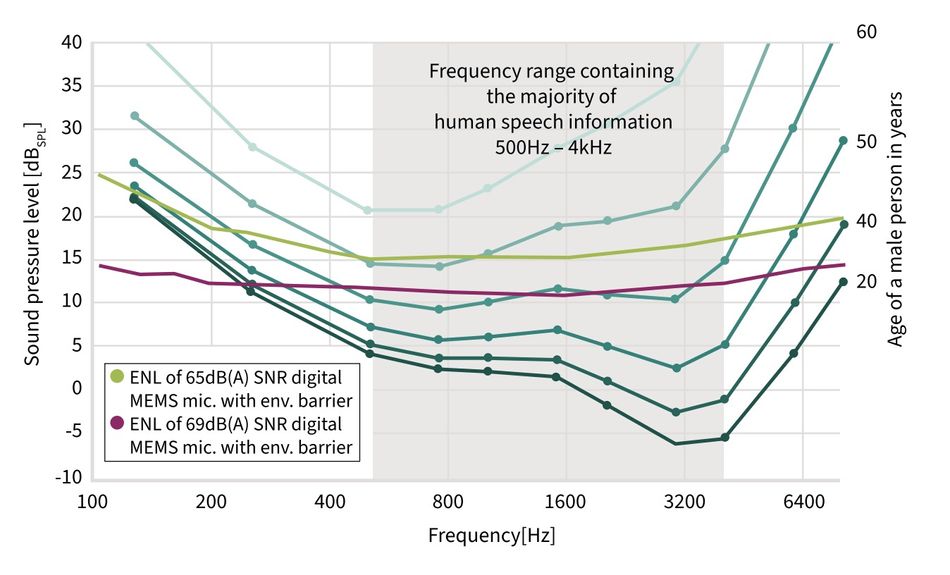

Like human ears, MEMS microphones have a hearing threshold: their self-noise floor. Known as the equivalent noise level (ENL), it is the sound pressure level of an external sound that would produce the same output as the microphone’s internal noise, which is measured when no external sound is present.

Infineon’s measurements (Figure 2) comparing a mid-range MEMS microphone (65 dB SNR) with a high-end MEMS microphone (69 dB SNR) illustrate how close we are to human-like hearing.

The high-end microphone’s ENL curve is significantly lower than that of the mid-range mic – an improvement on the order of 5–10 dB in specific frequency bands – which means it can detect softer sounds. However, even this state-of-the-art microphone has a higher noise floor than the human ear.

Notably, around 2 kHz, the best MEMS mic still has a threshold about 12 dB higher (more noise) than the ear of a young adult. In other words, current microphone technology can’t match all aspects of human hearing, but each new generation closes the gap further.

Key Metrics – SNR vs. ENL: A Simple Explanation

Signal-to-Noise Ratio (SNR) and Equivalent Noise Level (ENL) are critical metrics for microphone performance. Understanding these helps engineers pick the right microphone for human-centric and machine-listening applications.

As explained above, Equivalent Noise Level (ENL) measures the microphone’s absolute noise floor without favoring any frequency range. It’s given in dBSPL (Sound Pressure Level), indicating how loud a sound must be to surpass the microphone’s self-noise. Signal-to-Noise Ratio (SNR) tells us how much stronger the desired signal is than the microphone’s noise. In practice, a reference sound is used (commonly a 1 kHz tone at 94 dBSPL), and the microphone’s output for that sound is compared to its self-noise. The higher the SNR (measured in decibels), the “cleaner” the audio.
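As a rough sketch, a datasheet SNR can be turned into a noise floor by subtracting it from the level of the reference sound. The example assumes the common convention of a 94 dB SPL reference tone at 1 kHz, which this article does not specify, and it assumes the SNR is A-weighted. The result is therefore an A-weighted noise floor, not the unweighted ENL discussed here. The function name is illustrative.

```python
# Assumption: reference sound is a 1 kHz tone at 94 dB SPL (about 1 Pa RMS).
REFERENCE_SPL_DB = 94.0


def a_weighted_noise_floor_db_spl(snr_db: float,
                                  reference_spl_db: float = REFERENCE_SPL_DB) -> float:
    """Noise floor in dB SPL (A-weighted) implied by an A-weighted SNR."""
    return reference_spl_db - snr_db


# SNR values mentioned in this article: mid-range, high-end, and the SDM maximum.
for snr_db in (65.0, 69.0, 75.0):
    floor_db = a_weighted_noise_floor_db_spl(snr_db)
    print(f"SNR {snr_db:.0f} dB -> noise floor of about {floor_db:.0f} dB SPL (A-weighted)")
```

Under these assumptions, each additional decibel of SNR lowers the implied noise floor by one decibel.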

A-weighting is commonly used for SNR measurements; it mirrors human hearing sensitivity by giving more weight to mid-range frequencies. This works well if you’re designing products for people: our ears are more sensitive to certain frequencies, so an A-weighted measurement paints a realistic picture of perceived noise levels.
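To make the effect of A-weighting concrete, the sketch below evaluates the standard analytic A-weighting curve as defined in IEC 61672-1. The article does not reference this formula, so treat it as background. The function name is illustrative.

```python
import math


def a_weighting_db(freq_hz: float) -> float:
    """A-weighting gain in dB at freq_hz (analytic curve from IEC 61672-1)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.00 dB offset normalizes the curve to roughly 0 dB at 1 kHz.
    return 20 * math.log10(numerator / denominator) + 2.00


# Low frequencies are strongly attenuated, the speech range hardly at all.
for freq in (50, 100, 500, 1000, 2000, 10000):
    print(f"{freq:>6} Hz: {a_weighting_db(freq):+6.1f} dB")
```

Because low-frequency noise is attenuated so heavily, two microphones with the same A-weighted SNR can still differ in how much low-frequency noise they pass to a machine-listening algorithm.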

ENL can be more revealing than SNR for AI and machine-listening tasks because computers can “hear” across the entire frequency spectrum without bias. A microphone with a lower ENL can detect quieter sounds, which might be essential in applications like voice-activated systems, environmental monitoring, or subtle audio event detection.

In simple terms:

SNR: How much louder is the signal than the mic’s inherent noise (weighted by human hearing)?

ENL: What is the mic’s actual noise floor, objectively measured, across all frequencies?

As AI continues to shape audio technology and becomes a key enabler in countless new applications, ENL is becoming a go-to metric for engineers who need reliable capture of soft or complex sounds that humans might overlook. However, SNR remains valuable for applications focused on human perception.

Application Areas: MEMS Microphones Powering AI Innovation

MEMS microphones have become integral to various AI-driven applications, offering high signal-to-noise ratios (SNR) and low power consumption. Their compact size and superior performance make them ideal for enhancing user experiences across multiple sectors.

Healthcare: Enhancing Assistive Devices

In healthcare, assistive hearing devices like hearing aids and cochlear implants are being enhanced by AI-driven audio processing. These devices must distinguish speech from ambient noise so that users can communicate clearly in any environment.

High-SNR microphones with low power consumption let AI algorithms in a hearing aid filter out background chatter and amplify important sounds without draining the tiny battery. Such microphones are explicitly designed for hearables and hearing enhancement devices, where long battery life and speech clarity are critical.

By capturing a cleaner audio signal, they enable AI noise reduction and speech amplification features that significantly improve the quality of life for people with hearing loss.

Automotive: Smarter In-Car Assistants

Modern cars – especially electric vehicles (EVs) – present new audio challenges and opportunities. Without engine noise, sounds like road and wind noise become more pronounced, and passengers expect voice-activated assistants and crystal-clear hands-free calls as part of the driving experience. High-SNR MEMS microphones power intelligent in-cabin audio systems that can cancel noise and reliably pick up voice commands.

MEMS microphones are built to capture everything from a driver’s whisper to a window-rattling alarm without distortion.

Additionally, they offer high protection against dust and water by design, ensuring longevity in challenging conditions in both car exteriors and interiors. Infineon also offers automotive-grade certification for its microphones, ensuring even higher robustness, including at temperatures of 100°C and above.

These features enable multiple automotive use cases, such as noise-cancelling cabin systems, voice-controlled infotainment, clear communication between passengers and AI, and more – making the vehicle smarter and safer.

Smart Home & Consumer Tech: Context-Aware AI

In smart homes and consumer electronics, voice-enabled AI has become ubiquitous – from smart speakers that respond to keywords such as “Alexa” to true wireless stereo (TWS) earbuds that cancel noise and adapt to your environment. The challenge here is enabling context-aware AI: devices that hear your commands and can also derive information from how you say them. Research shows that the tone and intonation of our voice carry much of the meaning and emotion in communication.

By minimizing the self-noise in high-SNR MEMS microphones, those nuances – a frustrated tremor in your voice, a joyful lilt, a question’s uptick in pitch – are preserved for the AI to analyse rather than lost in a hiss of background noise. This means a smart speaker can tell the difference between an urgent command and a casual request, or a voice assistant can detect stress or sarcasm in your voice and adjust its responses accordingly.

Equally important is the form factor of the microphone: many consumer devices demand high performance in tiny packages. Infineon addresses this with ultra-compact MEMS microphones that fit into space-constrained gadgets like AR/VR headsets, smart glasses, and earbuds.

Industrial IoT: Predictive Maintenance

In industrial IoT, sound is an invaluable data source for predictive maintenance. Many machines emit tell-tale acoustic signatures when something goes wrong – a faint grinding noise from a motor bearing, an irregular hiss from a leaky valve, or a slight knock in a pump.

MEMS microphones, known for their low noise and stability, can be deployed in industrial settings to serve as the “ears” of factory AI. Thanks to their small size, robustness, and cost efficiency, arrays of these mics can be installed on factory floors or inside equipment, enabling 24/7 acoustic monitoring of machinery.

These microphones can pick up sounds at frequencies that human operators might not notice and feed them to AI models that analyse patterns. If an anomaly is detected – for example, a deviation in the acoustic frequency profile of a rotating machine – the system can alert maintenance personnel to check the equipment before a failure occurs.
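The idea of spotting a deviation in a machine’s acoustic frequency profile can be sketched as follows. This is a deliberately simplified illustration, not Infineon’s method or a production approach. It uses the Goertzel algorithm to measure signal power at a few watched frequencies and flags those that differ from a known-good baseline by more than a threshold. The signals are synthetic, and all names, frequencies, and the 6 dB threshold are assumptions chosen for the example.

```python
import math


def goertzel_power(samples, sample_rate, freq_hz):
    """Squared DFT magnitude of `samples` at the bin nearest to freq_hz."""
    n = len(samples)
    k = round(n * freq_hz / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s0 = x + coeff * s1 - s2
        s2, s1 = s1, s0
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def band_levels_db(samples, sample_rate, freqs_hz, floor=1e-12):
    """Level in dB at each watched frequency; `floor` avoids log10(0)."""
    return [10.0 * math.log10(goertzel_power(samples, sample_rate, f) + floor)
            for f in freqs_hz]


def deviating_bands(baseline, current, sample_rate, freqs_hz, threshold_db=6.0):
    """Watched frequencies whose level differs from the baseline by more than threshold_db."""
    base = band_levels_db(baseline, sample_rate, freqs_hz)
    cur = band_levels_db(current, sample_rate, freqs_hz)
    return [f for f, b, c in zip(freqs_hz, base, cur) if abs(c - b) > threshold_db]


def tone(freq_hz, amplitude, sample_rate, n):
    """Synthetic sine tone standing in for a recorded machine sound."""
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


SAMPLE_RATE = 16000                   # Hz, hypothetical capture rate
N_SAMPLES = 1600                      # 100 ms window, 10 Hz bin spacing
WATCHED_HZ = [1000.0, 2000.0, 3000.0]

# Healthy machine: a single 1 kHz hum. Faulty machine: the same hum plus a new 3 kHz component.
healthy = tone(1000.0, 1.0, SAMPLE_RATE, N_SAMPLES)
faulty = [a + b for a, b in zip(healthy, tone(3000.0, 0.5, SAMPLE_RATE, N_SAMPLES))]

print(deviating_bands(healthy, faulty, SAMPLE_RATE, WATCHED_HZ))
```

A real deployment would work on noisy recordings, average over many windows, and typically use a learned model rather than a fixed threshold. The microphone’s noise floor sets a lower bound on how faint a new component can be before it is masked.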

High-performance MEMS microphones capture real-time audio anomalies to help AI systems predict maintenance needs, improving safety and reducing unplanned downtime in industrial environments.

Enterprise: Emotion-Aware AI for Collaboration

AI is also transforming enterprise collaboration with tools that can listen to and understand both what is said and how it’s said.

Consider an AI meeting assistant that goes beyond merely transcribing a conference call: it can identify the emotion or emphasis behind each speaker’s words and tag action items by urgency. To do this, the AI relies on microphones that preserve fine speech details.

MEMS microphones capture vocal nuances such as pitch changes and sibilance – the crisp “s” sounds that add clarity – which can indicate a speaker’s state of mind. With this input, AI algorithms can perform real-time sentiment analysis and speaker diarization (distinguishing between different speakers).

In summary, advanced high-SNR microphones empower AI systems to precisely detect emotions, analyse speakers' moods, and separate multiple voices in a conversation.

Spotlight: Infineon’s 2025 MEMS Microphone Portfolio

All of the above requirements for MEMS microphones – high SNR, high robustness, small form factors and more – are covered by Infineon’s broad XENSIV™ MEMS microphone portfolio. To provide the right microphone for each use case, ranging from highest-performance “flagship” microphones to small, cost-effective models, the portfolio is built on two core technology platforms, each tailored to different application needs: Sealed Dual Membrane (SDM) and Single Backplate (SBP). Below, we spotlight each technology and how it’s enabling next-generation AI audio solutions.

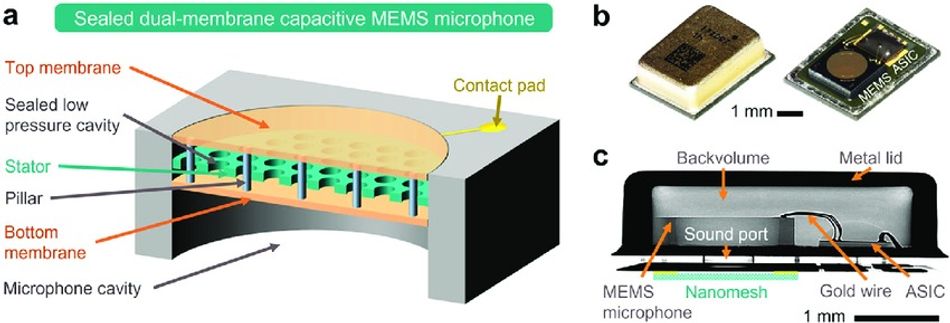

Infineon’s revolutionary Sealed Dual Membrane (SDM) MEMS microphone technology uses two membranes and a charged stator to create a sealed low-pressure cavity and a differential output signal. The architecture enables ultra-high SNR (up to 75 dB) and very low distortion, and delivers high ingress protection (IP57) at the microphone level. This level of acoustic performance in medium and larger packages is ideally suited for AI-driven applications.

Focus XENSIV™ SDM microphones

● IM72D128V

● IM73A135

● IM70A135

● IM69D127

● IM69D128S

● IM70D122

● IM73D122

● IM72D128

Single Backplate (SBP) Technology

The Single Backplate (SBP) technology represents an industry standard for mid-range microphones. Infineon’s new generation of SBP microphones offers the best performance-to-cost ratio, especially for small package sizes, along with improved robustness and SNR values of up to 69 dB. This performance enables AI-driven applications even in space-constrained devices and at lower cost.

New XENSIV™ SBP microphones – coming soon

● IM69D122J

● IM68D128B

● IM66D132H

● IM66D130M

Future Outlook: Further Bridging the Gap Between AI and Human Hearing

Looking ahead, the evolution of MEMS microphones is set to further blur the line between machine perception and human hearing.

Infineon’s roadmap emphasizes continuing to lower the ENL (noise floor) of microphones by another 5–10 dB – a substantial leap that experts say would put MEMS microphones on par with the hearing threshold of a healthy young person. Achieving such low self-noise would mean that AI systems could detect sounds as faint as we can, effectively giving future devices “ears” as sensitive as our own.

While technically challenging, this improvement is feasible with ongoing innovations in MEMS structures (like further membrane advancements or new signal processing techniques). Each 1 dB reduction in microphone self-noise is hard-won but yields outsized benefits due to the logarithmic nature of the decibel scale. A 5–10 dB ENL reduction would represent a generational breakthrough, closing the remaining auditory gap.
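What a given decibel reduction means in linear terms can be worked out directly from the definition of the decibel. The sketch below converts a noise-floor reduction into power and pressure ratios. The last column rests on an added assumption that is not from this article: an ideal free field where sound pressure falls off in inverse proportion to distance. Function names are illustrative.

```python
def power_ratio(delta_db: float) -> float:
    """Factor by which noise power shrinks for a reduction of delta_db."""
    return 10 ** (delta_db / 10)


def pressure_ratio(delta_db: float) -> float:
    """Factor by which noise pressure (amplitude) shrinks for a reduction of delta_db."""
    return 10 ** (delta_db / 20)


for reduction_db in (1, 5, 10):
    p = power_ratio(reduction_db)
    a = pressure_ratio(reduction_db)
    # Assumption: ideal free field, pressure inversely proportional to distance,
    # so the same source stays equally far above the noise floor at `a` times the distance.
    print(f"{reduction_db:>2} dB lower noise: power /{p:.2f}, pressure /{a:.2f}, "
          f"free-field distance x{a:.2f}")
```

Under that idealized assumption, a 10 dB lower noise floor would let a microphone pick up the same source from roughly three times as far away; real rooms and cabins will behave differently.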

At the same time, AI algorithms are rapidly advancing to better use this richer audio input. In the near future, we can expect AI assistants that don’t just respond to commands but understand the context: they will use audio to recognize your environment, who is speaking, and even the speaker’s mood.

High-performance MEMS microphones will enable features like automatic environment classification (e.g., detecting that you’re in a crowded street versus a quiet office and adjusting audio processing accordingly), sophisticated multi-speaker diarization in meetings (so the AI knows Alice from Bob when transcribing a call), emotion detection (recognizing whether Alice is talking angrily or happily to Bob) and real-time sound event detection (like recognizing alarm signals or glass breaking in a smart home).

Infineon anticipates that high-SNR MEMS microphones will play a key role in these scenarios – they form the foundation for the next-level AI capabilities mentioned above.

Conclusion: Infineon’s Vision for AI’s Auditory Future

Today, MEMS microphones are the “ears” of modern AI – and improving those ears brings us closer to AI that interacts with us as naturally and empathetically as another person would.

Infineon’s relentless innovation in high-SNR, low-noise, and small form-factor microphones enables machines to hear and listen, picking up the subtleties of voice and sound that imbue interactions with meaning. With each advancement in microphone technology, AI systems become more context-aware and capable of understanding our intentions and emotions.

Infineon’s MEMS microphones aim to bridge the gap between AI and human hearing, ensuring that voice-enabled technologies genuinely resonate with the human experience.

