Real-world Applications of Generative AI at the Edge

Edge AI Technology Report: Generative AI Edition, Chapter 3. Advances in model optimization techniques like pruning, quantization, and knowledge distillation pave the way for more efficient deployment of generative AI at the edge.

We once believed the cloud was the final frontier for artificial intelligence (AI), but it turns out the real magic happens much closer to home—at the edge, where devices can now think, generate, and respond in real-time. The rapid evolution of AI, particularly generative AI, is fundamentally reshaping industries and challenging the existing computing infrastructure.

Traditional models, especially resource-intensive ones like Large Language Models (LLMs), have long relied on centralized cloud systems for the necessary computational power. However, as the need for AI-driven interactions grows across sectors—from autonomous vehicles to personalized content generation—there is a clear shift toward edge computing.

Our new report, drawing from discussions with industry leaders and technologists, explores how generative AI is being harnessed and integrated into edge environments and what this means for the future of technology.

Read an excerpt from the third chapter of the report below or read the full report by downloading it now.

Real-world Applications of Generative AI at the Edge

As generative AI technologies continue to develop, their deployment at the edge is becoming increasingly significant. Edge computing is uniquely positioned to address the growing demand for real-time AI capabilities, low-latency decision-making, and improved privacy and security. These characteristics are crucial in industries such as healthcare, manufacturing, robotics, and smart cities, where timely, localized data processing is essential.

Market trends underscore the rising importance of edge AI. The global AI market, including edge computing, is projected to grow rapidly, with generative AI playing a pivotal role. McKinsey estimates that edge AI, particularly in sectors like retail and manufacturing, can significantly enhance real-time decision-making and drive productivity improvements. Generative AI alone could add between USD 2.6 trillion and USD 4.4 trillion annually in global profit across industries, with its impact on R&D, product development, and simulation being especially critical for sectors like life sciences and manufacturing.

Additionally, analysts predict a sharp increase in the deployment of AI workloads on edge devices by 2025, with edge infrastructures increasingly favored to reduce cloud dependencies and manage data privacy concerns. This shift is driven by advances in hardware and software that allow smaller, optimized versions of generative AI models to operate on resource-constrained devices like IoT gateways and mobile platforms.

However, the path to widespread generative AI adoption at the edge is not without challenges. Resource limitations—such as restricted computational power, memory, and energy on edge devices—pose significant hurdles for deploying large-scale models that typically run in the cloud. Additionally, connectivity constraints in edge environments, particularly in remote or mobile settings, raise concerns about reliable, uninterrupted performance.

Despite these challenges, advances in model optimization techniques like pruning, quantization, and knowledge distillation pave the way for more efficient deployment of generative AI at the edge. Here, we dive into these innovations and present use cases across several industries, illustrating how generative AI is starting to gain traction in real-world edge environments.

Overview of Current Generative AI Techniques and Implementations

As the convergence of generative AI and edge computing gains momentum, innovations are evolving rapidly to optimize performance, minimize latency, and ensure efficient operation on resource-constrained devices. A key focus has been on developing techniques that enable the deployment of generative models, including LLMs and other AI architectures, on devices like IoT gateways, smartphones, and embedded systems.

Optimizing Generative AI Models for the Edge

A major challenge in deploying generative AI at the edge involves the significant computational requirements of these models, given that they are inherently large and require substantial memory, processing power, and energy. Model optimization techniques have been developed to address this, enabling AI models to run effectively on edge hardware without significant performance loss.

One such technique is model pruning, which removes non-essential components from a model to reduce its size and complexity. By eliminating parameters that contribute little to the model's output, pruning lowers the computational load and makes edge deployment feasible, typically with little or no loss of accuracy. For example, Qualcomm uses model pruning in its Snapdragon AI platform, enabling efficient AI tasks such as voice recognition on mobile devices. Similarly, NVIDIA's Jetson platform leverages pruning for real-time object detection in robotics, optimizing performance on resource-constrained devices. Google also applies pruning in LiteRT (formerly TensorFlow Lite), enabling applications like voice assistants to run smoothly on smartphones with reduced computational overhead.
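To make the idea concrete, here is a minimal sketch of unstructured magnitude pruning, one common pruning criterion, in plain Python. It is not the method used by any of the platforms named above (which may rely on structured or hardware-specific variants), and the weights are made-up values.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    This is unstructured magnitude pruning: individual weights are removed,
    so realizing a speedup on hardware requires sparse-aware kernels.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Rank indices by absolute value and mark the k smallest for removal.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:k])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.1]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, 0.0]
```

In practice a pruned model is usually fine-tuned afterwards to recover accuracy, and structured pruning (removing whole channels or attention heads) is often preferred on edge hardware because ordinary dense kernels can exploit it directly.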

Another key technique is quantization, which involves reducing the precision of the numbers used in a model’s computations, thus decreasing memory usage and computational demands. Quantization has been especially effective for edge devices, allowing AI models to operate with reduced energy consumption and lower memory footprints. As highlighted in our 2024 State of Edge AI report, applying 8-bit quantization to AI models has resulted in up to a 50% reduction in power consumption on edge platforms while maintaining acceptable performance levels for real-time applications.
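A minimal sketch of symmetric per-tensor int8 quantization, assuming a single scale factor for the whole tensor (real toolchains often use per-channel scales and calibration data). It shows where the memory saving comes from: each value shrinks from 4 bytes (float32) to 1 byte, at the cost of a bounded rounding error. The power-reduction figure quoted above is not something this toy reproduces.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 codes."""
    return [x * scale for x in q]


weights = [0.52, -1.27, 0.003, 0.9, -0.31]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print(q)  # [52, -127, 0, 90, -31]; note the tiny 0.003 rounds to zero
print("max rounding error:", max_err)  # bounded by scale / 2
# Storage: 4 bytes per float32 value vs 1 byte per int8 value plus one float32 scale.
print(len(weights) * 4, "bytes ->", len(q) + 4, "bytes")
```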

Knowledge distillation is a widely used optimization technique where smaller models, known as "student models," learn from larger, more complex "teacher models." This process allows generative AI models to be compressed, making them suitable for resource-limited edge environments while maintaining essential capabilities. For instance, NVIDIA’s TAO Toolkit facilitates this process by enabling the transfer of knowledge from robust AI models to smaller versions suitable for edge deployment.
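The sketch below shows the classic soft-target formulation of distillation introduced by Hinton et al. (2015): the student is trained on a weighted mix of (a) the KL divergence between temperature-softened teacher and student distributions and (b) ordinary cross-entropy on the true label. It is a generic illustration, not the TAO Toolkit's implementation; the logits, temperature, and weighting are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * soft-target term + (1 - alpha) * hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft term's gradient magnitude comparable across temperatures.
    soft_term = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_term = -math.log(softmax(student_logits)[label])
    return alpha * soft_term + (1 - alpha) * hard_term


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # already mimics the teacher fairly well
far_student = [0.5, 3.0, 1.0]    # disagrees with the teacher
print(distillation_loss(close_student, teacher, label=0))
print(distillation_loss(far_student, teacher, label=0))
```

Minimizing this loss over training data pushes the small student toward the teacher's full output distribution, not just its top prediction, which is where much of the transferred "knowledge" lives.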

According to researchers from Peking University, knowledge distillation not only compresses models for resource-limited edge environments but also enhances data efficiency by enabling student models to achieve comparable performance with less training data. Additionally, it improves robustness, allowing student models to generalize well, even if the teacher model is imperfect or contains noise.

These findings suggest that knowledge distillation can mitigate the limitations of edge devices, making it a valuable approach for optimizing generative AI models where resource constraints are a major challenge. Edge Impulse's recent work using LLMs such as GPT-4o to train much smaller models that run directly on edge devices further highlights how distilled models can boost on-device performance without relying on constant cloud access.

These optimization techniques are crucial in making generative AI feasible for edge applications, as they address the constraints of limited processing power and energy efficiency that are characteristic of edge environments.

Accelerating Edge AI with Optimized Generative Models by Syntiant

Generative AI is transforming how edge devices operate. Traditionally confined to cloud-based systems, these models are now finding a home on the edge thanks to advancements in hardware and software. Syntiant is pushing the boundaries of generative AI at the edge, enabling real-time processing on devices with limited compute power and unlocking new potential for sensor-driven applications.

Syntiant’s Edge AI Strategy

Generative AI models in the audio and visual domains are essential for edge applications that rely on microphones and cameras. In particular, Syntiant focuses on developing powerful multimodal AI models that simultaneously process audio, text, and visual input. Earlier approaches to processing sensor input centered on feature engineering or more traditional CNN models; newer transformer-based models can capture a broader corpus of knowledge, enabling novel ways of interacting with sensor data, such as querying video footage with plain text.

Though incredibly powerful, generative AI models have traditionally demanded significant computational power, making them impractical for most edge devices. Even when connectivity is available, cloud latency limits the user experience. Syntiant is overcoming these challenges with optimized hardware and software solutions that bring generative AI to the edge without sacrificing accuracy or expressiveness. Syntiant’s edge generative AI strategy is two-pronged.

Real-Time LLMs and Optimized Power Efficiency at the Edge

One application of Syntiant’s small transformer-based models is its Small Language Model Assistants, which have significantly boosted LLM performance at the edge. With only 25 million parameters, they are far smaller than traditional LLMs, which range from 500 million to several billion parameters. This compact size lets the models run on commodity edge hardware even when no NPU acceleration is available, fitting on even the smallest ARM and RISC-V CPUs and MCUs. The parameter reduction is achieved by focusing the models on specific domain knowledge while retaining the general language skills of larger language models. Devices that once depended on cloud-based LLMs can now operate autonomously, delivering a low-latency, real-time user experience, which is crucial in consumer IoT where instant interaction is expected.

In addition to static model optimization, Syntiant has developed several dynamic model optimization techniques that further improve efficiency, allowing its already small transformer-based models to run on even the smallest edge targets. These run-time optimizations execute only the parts of the network that are relevant to the current input, essentially switching off large parts of the network that are irrelevant. This cuts energy use without compromising accuracy, enabling high-performance generative AI on devices with limited power resources.

Use Cases: Advanced AI in Action

Syntiant’s AI technology is transforming edge devices across many industries. In consumer IoT, small language models are replacing traditional quick-start guides and instruction manuals. Devices like set-top boxes and wireless routers now feature natural language interfaces, allowing users to ask questions and receive plain-language answers, which is particularly useful during setup when internet connectivity may be lacking. These models improve user satisfaction by delivering instant, relevant responses and reduce the need for customer support, which cuts costs for manufacturers and increases margins. Syntiant’s edge AI is also vital in hands-free environments, such as automotive and wearable devices, where real-time, voice-driven interaction is key.

Accelerating Edge AI with LLMs

Syntiant has made notable strides in accelerating LLMs for edge devices. By combining domain-specific model architectures with other core optimizations such as sparsification, Syntiant has significantly reduced the computational footprint of LLMs. These breakthroughs allow LLMs to run entirely at the edge, eliminating the need for cloud connectivity and improving latency and privacy. Syntiant’s innovations have been deployed across millions of devices globally, from earbuds to automobiles, demonstrating the wide applicability of its low-power, high-performance AI solutions. These advancements enable smarter conversational interfaces, making it easier for devices to interact naturally with users while reducing cloud costs and improving user experience across industries.
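The dynamic optimization described in the Syntiant sidebar, executing only the parts of a network that are relevant to the current input, resembles what the research literature calls conditional computation. Syntiant's own techniques are not detailed here, so the sketch below shows one generic form as an assumption: a cheap top-k gate (as used in mixture-of-experts layers) scores several sub-networks and only the winners are evaluated. The gate weights and "experts" are hypothetical toys.

```python
from typing import Callable, List, Tuple

Expert = Callable[[List[float]], List[float]]


def gated_forward(x: List[float], gate_weights: List[List[float]],
                  experts: List[Expert], k: int = 1) -> Tuple[List[float], List[int]]:
    """Evaluate only the k experts with the highest gate scores for input x.

    gate_weights[i] is a (hypothetical) linear scoring vector for expert i.
    Returns the averaged output of the active experts and their indices.
    """
    scores = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    active = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    out = [0.0] * len(x)
    for i in active:  # experts not in `active` are never called
        y = experts[i](x)
        out = [o + yi / k for o, yi in zip(out, y)]
    return out, active


calls: List[int] = []


def make_expert(idx: int, gain: float) -> Expert:
    """Toy expert that scales its input and records that it ran."""
    def expert(x: List[float]) -> List[float]:
        calls.append(idx)
        return [gain * v for v in x]
    return expert


experts = [make_expert(i, g) for i, g in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, active = gated_forward([0.5, 2.0], gates, experts, k=1)
print(active, out, calls)  # [1] [1.0, 4.0] [1]
```

Only one of the four sub-networks executes for this input, so compute (and therefore energy) scales with k rather than with the total number of experts; the cost is the small gating computation and the need to train the gate so it routes inputs well.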

Hybrid AI Models: Cloud + Edge

While model optimization allows generative AI to run on edge devices, many implementations are taking a hybrid approach, combining cloud-based processing with edge computing to balance workloads and maximize efficiency. In this model, the more resource-intensive tasks—such as training and handling complex reasoning tasks—are offloaded to the cloud, while inference and real-time processing occur at the edge.
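A hypothetical sketch of the kind of routing policy a hybrid deployment might use, following the split described above: training and heavy reasoning go to the cloud when it is reachable and fast enough, while inference stays on the device. The task labels, the privacy rule, and the fallback behavior are assumptions for illustration, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Request:
    task: str                  # "inference", "complex_reasoning", or "training" (hypothetical labels)
    latency_budget_ms: float   # deadline for a response
    contains_sensitive_data: bool


def route(req: Request, cloud_reachable: bool, cloud_rtt_ms: float) -> str:
    """Return "edge", "cloud", or "defer" under a simple, hypothetical hybrid policy."""
    if req.task == "training":
        # Training is not latency-critical: run it in the cloud or queue it until online.
        return "cloud" if cloud_reachable else "defer"
    if req.task == "complex_reasoning":
        if (cloud_reachable and not req.contains_sensitive_data
                and cloud_rtt_ms <= req.latency_budget_ms):
            return "cloud"
        return "edge"  # fall back to the smaller on-device model
    return "edge"      # inference and real-time processing stay local


print(route(Request("inference", 20, False), cloud_reachable=True, cloud_rtt_ms=80))
print(route(Request("complex_reasoning", 2000, False), cloud_reachable=True, cloud_rtt_ms=80))
print(route(Request("complex_reasoning", 2000, True), cloud_reachable=True, cloud_rtt_ms=80))
```

Keeping the privacy and latency checks in one small function makes the trade-off explicit: the cloud is used only when it adds capability without breaking a deadline or moving sensitive data off the device.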

This hybrid model enables scalability, allowing businesses to manage vast datasets in the cloud while ensuring low-latency operations at the edge. According to a perspective article by Ale et al. in Nature Reviews Electrical Engineering, not only do hybrid AI systems reduce bandwidth consumption by processing data locally, but they also improve data privacy, as sensitive information can remain on the device rather than be transmitted to cloud servers. This is particularly advantageous in sectors like healthcare and finance, where privacy is paramount.

A notable example of hybrid models is in autonomous vehicles, where real-time decision-making happens at the edge (i.e., on the vehicle itself) while data aggregation and training are managed in the cloud. This combination of local inference and remote processing ensures that the vehicles can make immediate decisions without relying on a constant cloud connection. At the same time, the cloud processes broader datasets to refine and update the AI model continuously.

The adoption of cloud-edge hybrid models is expected to grow, especially as more industries look for solutions that blend real-time processing with the scalability and computational power of the cloud. Retail, smart cities, automotive, and industrial automation are increasingly leveraging hybrid models to enhance operational efficiency, reduce latency, and drive innovation through AI-generated insights delivered in real time.


Generative AI Across Key Industries

Generative AI has rapidly expanded its reach across industries, from healthcare to automotive and manufacturing. At its core, this technology is revolutionizing workflows and enabling real-time, autonomous systems to operate at the edge. As industries increasingly demand real-time decision-making, reduced latency, and enhanced personalization, edge computing has emerged as a crucial facilitator for generative AI. This convergence is unlocking unprecedented opportunities for optimized processes, cost reductions, and better customer experiences across sectors.

Robotics: Industrial Robots, Humanoids, and Human-Machine Interface

The integration of generative AI in robotics, particularly in industrial and warehouse settings, creates a new frontier for automation and operational efficiency. In these environments, generative AI enables robots to optimize real-time decision-making, automate routine tasks, and improve overall productivity.

In manufacturing, for example, generative AI-powered robots can assist with quality control by using advanced image recognition algorithms to detect product defects in real time. Additionally, robots equipped with AI are capable of predictive maintenance—anticipating equipment failures before they occur, thus reducing downtime and increasing operational efficiency.

Warehouse robots benefit from AI-generated path optimization, allowing them to move goods more efficiently within warehouse environments. By processing real-time data locally and relying on edge devices, these robots can quickly adapt to changing warehouse layouts or new inventory management systems without relying heavily on cloud infrastructure. This reduces latency and enables real-time responses to dynamic warehouse conditions.

On the other hand, humanoid robots are leveraging generative AI in more complex tasks, such as natural language processing (NLP), allowing for sophisticated, real-time human-machine interactions. LLMs enable these robots to process instructions, answer questions, and respond to voice commands autonomously without relying on constant cloud connectivity. This capability is invaluable in scenarios requiring immediate, human-like responses, such as customer service and cobots (collaborative robots) in manufacturing.

The Toyota Research Institute (TRI) announced a breakthrough in generative AI, using a Diffusion Policy to rapidly and reliably teach robots new dexterous skills. Image credit: Tri.global

A key component in this evolution is the human-machine interface (HMI), which facilitates seamless communication between humans and robots. Through AI-enhanced HMI, robots can better understand user intent, customize interactions, and improve their decision-making processes, leading to a more intuitive, context-aware user experience. This is especially relevant in manufacturing, where cobots interact closely with human workers, performing complex tasks such as assembly and quality control in real time. Generative AI helps these robots learn and adapt to different tasks by dynamically adjusting their behavior based on human input, reducing the need for manual reprogramming and improving operational efficiency.

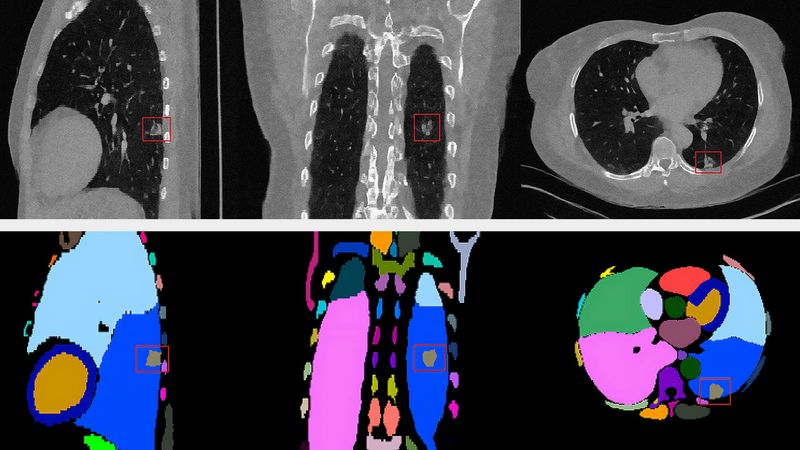

Healthcare: Medical Imaging & Diagnosis Assistance

While still in its early stages, generative AI holds significant potential in transforming healthcare, particularly in medical imaging and diagnostics. By leveraging edge computing, AI models can perform real-time diagnostics and imaging analysis directly within healthcare settings, such as hospitals and clinics. This shift to localized processing could ensure quicker results, improved data privacy, and reduced dependence on cloud infrastructure, which remains a challenge for healthcare providers with limited internet connectivity or privacy concerns.

In medical imaging, generative AI applications could help enhance diagnostic precision by improving image resolution and interpretation, especially when working with incomplete or lower-quality medical scans such as computed tomography (CT) or magnetic resonance imaging (MRI). For instance, AI models trained to generate high-quality images from suboptimal inputs can assist radiologists in making more accurate diagnoses, even in resource-constrained environments. While this technology is still being researched and tested, its deployment at the edge could enable hospitals and smaller clinics to adopt advanced diagnostic tools without relying on cloud services.

In diagnostics, edge-based generative AI models could help process patient data on-site, supporting real-time analysis that complements clinical decision-making. These models would allow healthcare practitioners to access rapid insights and personalized healthcare recommendations based on patient data like genetic profiles and medical histories. One of the most promising potential benefits is the capacity to improve patient privacy by keeping data processing local, avoiding the risks associated with transmitting sensitive medical information to remote servers.

Generative AI models are also projected to play a significant role in assisting personalized healthcare solutions. The technology could analyze vast amounts of patient data in real time to recommend tailored treatment plans, particularly for chronic illnesses or long-term care management. By performing this analysis at the edge, medical institutions could offer more immediate and personalized treatment while reducing data exposure to external threats.

Although widespread case studies are limited at this stage, the potential of generative AI and edge AI in healthcare is substantial. Industry reports indicate that healthcare systems will increasingly adopt edge computing as AI models become more optimized for resource-constrained environments. A 2024 McKinsey report found that at least two-thirds of healthcare organizations have already implemented, or are planning to implement, generative AI in their processes. With ongoing research into model optimization techniques such as quantization and knowledge distillation, we can expect significant advances in the next few years, driving healthcare innovation and improving patient outcomes.

Manufacturing: Design Optimization and Process Simulation

Generative AI is increasingly making headway in the manufacturing sector, not to predict failures or optimize existing workflows as seen with predictive AI, but to create entirely new designs, optimize processes, and simulate production environments. These advancements are enabling manufacturers to innovate faster and improve efficiency across product design, production, and quality control processes.

The design process can be one of the most resource-intensive stages of manufacturing. Generative AI streamlines it by generating new design iterations from input parameters such as material constraints, performance requirements, and production costs, letting manufacturers explore a wide array of design possibilities quickly and efficiently. Edge computing allows these generative algorithms to run locally, providing immediate feedback so that manufacturers can adapt designs quickly without relying heavily on cloud-based systems.
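Production generative-design tools use sophisticated optimizers and physics solvers; as a minimal sketch of the underlying generate, evaluate, and filter loop, the code below samples random candidate cross-sections for a hypothetical aluminium cantilever beam, rejects those that exceed an allowable bending stress, and ranks the rest by mass. The load, dimensions, and allowable stress are illustrative assumptions, and plain random search stands in for whatever optimizer a real tool uses.

```python
import random
from typing import List, Tuple

# Hypothetical problem: rectangular cantilever beam with a load at its tip.
LOAD_N = 2_000.0       # tip load P (N)
LENGTH_M = 0.5         # beam length L (m)
DENSITY = 2_700.0      # aluminium density (kg/m^3)
ALLOWABLE_PA = 150e6   # allowable bending stress (Pa), assumed


def max_stress(b: float, h: float) -> float:
    """Peak bending stress at the fixed end: sigma = 6 P L / (b h^2)."""
    return 6 * LOAD_N * LENGTH_M / (b * h ** 2)


def mass(b: float, h: float) -> float:
    return DENSITY * LENGTH_M * b * h


def generate_designs(n: int, seed: int = 0) -> List[Tuple[float, float]]:
    """Sample width b and height h (m) within assumed manufacturing limits."""
    rng = random.Random(seed)
    return [(rng.uniform(0.005, 0.05), rng.uniform(0.005, 0.08)) for _ in range(n)]


def best_designs(n: int, top: int = 3) -> List[Tuple[float, float, float]]:
    """Keep designs that satisfy the stress constraint, lightest first."""
    feasible = [(mass(b, h), b, h) for b, h in generate_designs(n)
                if max_stress(b, h) <= ALLOWABLE_PA]
    return sorted(feasible)[:top]


for m, b, h in best_designs(5_000):
    print(f"b={b * 1000:.1f} mm, h={h * 1000:.1f} mm, mass={m:.3f} kg")
```

Because stress falls with the square of the height but mass grows only linearly with it, the surviving lightest candidates tend to be tall and thin; a real tool would add further constraints (buckling, deflection, manufacturability) that this sketch omits.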

For example, generative AI helps engineers design lightweight, optimized components for aerospace and automotive applications. Companies like Airbus and BMW are harnessing this technology to generate thousands of design options that are iteratively refined based on performance metrics such as weight, cost, and structural integrity. Airbus, in particular, is using generative AI to reinvent aircraft component design, focusing on lightweight structures that improve fuel efficiency and reduce CO₂ emissions. By applying AI-driven algorithms, Airbus engineers can explore more design variations, leading to aircraft components that are more sustainable and cost-effective.

Similarly, BMW is leveraging AI to optimize its automotive production processes. BMW collaborates with companies such as Zapata AI to enhance manufacturing efficiency, applying AI to generate optimized designs for car parts. AI also helps BMW streamline production planning, resulting in faster prototyping and more refined vehicle components. This approach accelerates product innovation, reduces time-to-market, and ensures that final products are more robust, cost-effective, and sustainable.

Generative AI (GAI) has a lot of potential in addressing the challenges related to traditional VR graphics. Credit: Queppelin

Beyond design, generative AI plays a critical role in process simulation. By creating virtual simulations of manufacturing processes, AI can model different production-line scenarios before any physical changes are implemented. These simulations, often built on "digital twins" (virtual replicas of physical equipment and processes), allow manufacturers to test various operational strategies, minimize downtime, and optimize throughput.

For instance, in the semiconductor industry, where precision is paramount, AI-generated simulations can identify potential inefficiencies in the manufacturing process before they lead to costly errors. Generative AI can propose optimized workflows that improve efficiency and ensure quality consistency across large-scale production. Moreover, manufacturers can use AI simulations to model rare events, such as equipment breakdowns, to train systems to better handle such disruptions, ultimately leading to more resilient production lines.

In addition, while predictive maintenance is typically associated with more traditional AI models, generative AI is beginning to assist in this area by creating synthetic data sets for maintenance simulations. By simulating different failure scenarios and maintenance protocols, generative models help optimize the upkeep schedules of critical machinery in manufacturing environments. This ensures that companies can avoid unnecessary downtime while improving equipment lifespan.

Generative AI's ability to create vast amounts of synthetic data also aids in training more accurate predictive models. This synthetic data can simulate real-world conditions, such as the wear and tear of factory equipment, and help refine models to predict when machines will likely need servicing, reducing unexpected failures and optimizing overall operational efficiency.
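As a deliberately simple stand-in for a generative model, the sketch below produces synthetic run-to-threshold histories from an assumed degradation model (linear wear at a unit-specific rate plus Gaussian sensor noise) and then uses the empirical distribution of simulated lifetimes to suggest a service interval. A real system would learn the degradation behavior from data instead of assuming it; the threshold, rates, and noise level are all hypothetical.

```python
import random

FAILURE_THRESHOLD = 1.0  # hypothetical normalized wear level that requires service


def simulated_lifetime(rng: random.Random, max_hours: int) -> int:
    """Generate one synthetic wear history and return the hour it crosses the threshold.

    Assumed model: linear wear at a unit-specific rate plus Gaussian sensor noise.
    """
    rate = max(1e-5, rng.gauss(0.002, 0.0004))  # wear per hour varies from unit to unit
    for t in range(max_hours):
        reading = rate * t + rng.gauss(0.0, 0.02)  # noisy synthetic sensor reading
        if reading >= FAILURE_THRESHOLD:
            return t
    return max_hours  # no crossing within the simulated window


def service_interval(n_units: int = 500, max_hours: int = 2_000,
                     risk: float = 0.05, seed: int = 42) -> int:
    """Pick the hour by which only about `risk` of simulated units have crossed the threshold."""
    rng = random.Random(seed)
    lifetimes = sorted(simulated_lifetime(rng, max_hours) for _ in range(n_units))
    return lifetimes[int(risk * n_units)]


print("suggested service interval (h):", service_interval())
```

Lowering `risk` shortens the interval (fewer surprise failures, more maintenance visits); the synthetic histories could equally serve as labeled training data for a learned failure predictor.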

The integration of generative AI in manufacturing is still evolving, but it is already making significant strides in design optimization and process simulation. By leveraging AI to create new designs, simulate workflows, and optimize maintenance schedules, manufacturers are experiencing increased efficiency, reduced costs, and enhanced product innovation. Combined with edge computing, these advancements bring the benefits directly to the factory floor, enabling real-time optimization and faster decision-making.

Automotive Industry: Autonomous Vehicles, Design, & Simulation

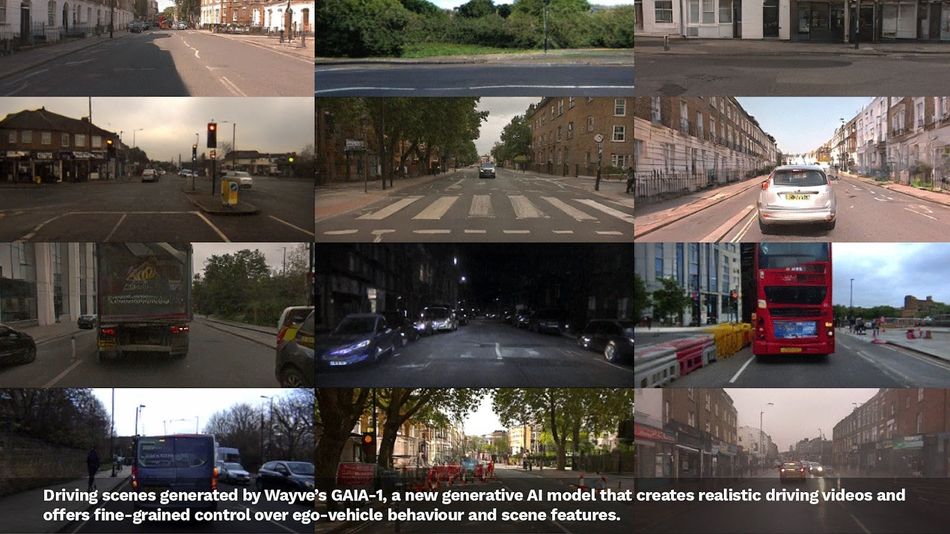

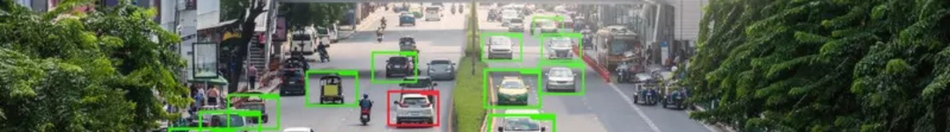

Generative AI, combined with edge computing, is contributing significantly to the evolution of the automotive industry, particularly in the development of autonomous vehicles and advanced vehicle design. As automakers increasingly adopt AI to enhance driving systems, integrating generative AI at the edge provides the real-time processing and decision-making capabilities that are critical for safety and efficiency.

Autonomous vehicles rely heavily on AI-driven systems to process large volumes of data from sensors, cameras, and LiDAR devices to make split-second decisions. The challenge lies in reducing latency and ensuring real-time responses to dynamic road conditions. This is where edge computing becomes essential: instead of sending vast amounts of data to the cloud, processing happens locally on the vehicle, significantly reducing the time required to interpret the data and make decisions.
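Some quick arithmetic shows why local processing matters. The latency figures below are illustrative assumptions, not measurements: at 100 km/h a vehicle covers about 27.8 m every second, so every 100 ms spent waiting on a network round trip corresponds to roughly 2.8 m of travel.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres a vehicle travels while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Assumed latencies for illustration only: a cloud round trip vs on-vehicle inference.
for label, ms in [("cloud round trip (assumed 100 ms)", 100),
                  ("on-vehicle inference (assumed 10 ms)", 10)]:
    print(f"{label}: {distance_during_latency(100, ms):.2f} m at 100 km/h")
```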

Driving scenes generated by Wayve’s GAIA-1, a new generative AI model that creates realistic driving videos. Credit: Wayve.ai

Generative AI enhances this system by simulating various driving conditions and generating synthetic data to improve the accuracy of decision-making models. For example, by simulating thousands of different driving scenarios, from weather variations to traffic patterns, generative AI can train autonomous vehicle (AV) systems to react to a broader range of situations without relying solely on real-world testing, which can be time-consuming and expensive.
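A generative model would synthesize the sensor data itself (camera frames, LiDAR returns) for each scenario; the simpler sketch below covers only the scenario-sampling step: drawing randomized combinations of conditions for simulation and tracking which combinations have been exercised at least once. The parameter names and value sets are hypothetical, and each sampled dictionary would be handed to a simulator that is not shown.

```python
import random
from typing import Dict, List

# Hypothetical scenario parameters; a real pipeline would use far richer ones.
WEATHER = ["clear", "rain", "snow", "fog"]
TIME_OF_DAY = ["day", "dusk", "night"]
TRAFFIC = ["light", "moderate", "dense"]


def sample_scenarios(n: int, seed: int = 7) -> List[Dict[str, object]]:
    """Draw n randomized driving scenarios for simulation-based testing."""
    rng = random.Random(seed)
    return [
        {
            "weather": rng.choice(WEATHER),
            "time_of_day": rng.choice(TIME_OF_DAY),
            "traffic": rng.choice(TRAFFIC),
            "pedestrians": rng.randint(0, 20),
            "speed_limit_kmh": rng.choice([30, 50, 80, 120]),
        }
        for _ in range(n)
    ]


def coverage(scenarios: List[Dict[str, object]]) -> float:
    """Fraction of weather x time-of-day x traffic combinations seen at least once."""
    seen = {(s["weather"], s["time_of_day"], s["traffic"]) for s in scenarios}
    return len(seen) / (len(WEATHER) * len(TIME_OF_DAY) * len(TRAFFIC))


scenarios = sample_scenarios(1_000)
print(f"{len(scenarios)} scenarios, combination coverage {coverage(scenarios):.0%}")
```

Seeding the generator makes a test campaign reproducible, and tracking coverage shows when rare combinations (for example, dense traffic in fog at night) still need targeted generation.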

Real-world examples include Tesla’s Full Self-Driving (FSD) system, which uses edge-based AI for real-time decision-making. Tesla’s FSD system processes data locally in the vehicle, ensuring the car can make decisions autonomously, even in areas with limited or no connectivity. Generative AI models play a role in scenario generation and testing during the development phase, allowing Tesla to simulate millions of driving situations to fine-tune its autonomous driving algorithms before deploying them in the real world. Similarly, companies like Waymo and Cruise are deploying robotaxis that rely on edge computing to handle real-time processing for navigation and decision-making in urban environments. These systems incorporate AI to recognize traffic patterns, pedestrians, and obstacles, enabling safe navigation without cloud reliance.

Generative AI is also transforming the automotive design process, particularly in the early stages of vehicle development. Manufacturers can streamline the design phase and reduce prototyping costs by leveraging AI models that generate and optimize designs based on specific parameters such as aerodynamics, safety, and materials.

For instance, generative AI models can produce thousands of design iterations for vehicle components—such as chassis, body panels, and structural elements—within minutes, allowing engineers to select the most efficient and innovative designs. These AI-generated designs can then be simulated using real-world constraints to test for factors like structural integrity, energy efficiency, and performance in crash scenarios. This approach accelerates the design cycle, bringing new vehicles to market faster while reducing overall development costs.

General Motors (GM), for example, has used generative design techniques to develop lightweight vehicle components that meet rigorous safety standards while optimizing fuel efficiency. By generating and testing designs with generative AI, GM reduced the weight of certain parts by up to 40%, contributing to overall energy efficiency.

One of the critical challenges in the automotive industry is ensuring that vehicles meet safety and performance standards before they reach production. Generative AI offers a solution through simulation and virtual testing, which are particularly important for autonomous vehicles. Using edge computing, manufacturers can run simulations in real-time, testing vehicles under a variety of conditions and rapidly generating insights from those simulations. This reduces the dependency on physical prototypes and extensive road testing.

For example, AI simulations can replicate driving through dense urban environments, heavy rain, or snowy conditions, enabling automakers to fine-tune their systems before actual deployment. With edge computing, this simulation data is processed on local servers or even directly on the vehicle, ensuring that these insights are generated quickly and efficiently.

Generative AI and edge computing are pushing the boundaries of innovation in the automotive industry. From enabling real-time decision-making in autonomous vehicles to streamlining the design and testing process, these technologies are driving significant advancements in vehicle safety, efficiency, and performance. As the automotive sector becomes more advanced and digitalized, the role of generative AI will become increasingly critical in shaping the future of transportation, with edge computing ensuring that these innovations can be applied in real-time, real-world environments.

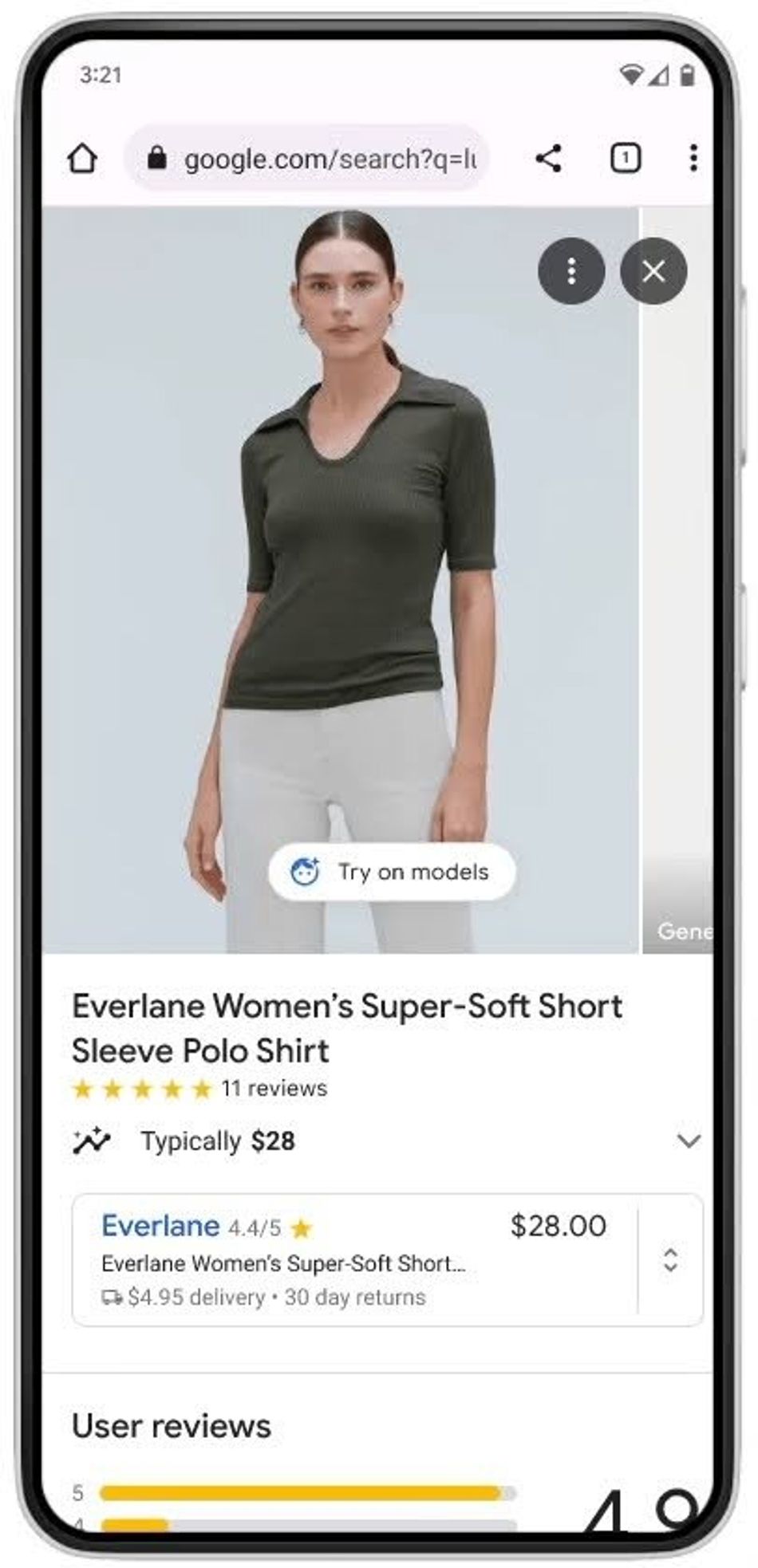

Retail Sector: Personalized Customer Experiences & Inventory Management

Generative AI at the edge is beginning to reshape the retail industry by enabling more personalized customer experiences and optimized inventory management. With real-time data analysis increasingly available at the edge, retailers can better understand customer preferences and behavior and offer tailored product recommendations directly on-site without needing extensive cloud infrastructure. This shift reduces latency and enhances the overall shopping experience, driving higher customer satisfaction and engagement.

Google’s new generative AI model can take just one clothing image and accurately reflect how it would drape, fold, cling, stretch and form wrinkles and shadows on a diverse set of real models in various poses. Image credit: Blog.Google

One of the primary areas where generative AI is making an impact in retail is through personalized recommendation engines. These engines analyze customer data, such as browsing patterns, purchase history, and even interactions within the store, to create a dynamic and personalized shopping journey. By running these AI models at the edge—on devices located in physical stores or smartphones—retailers can provide instant, relevant suggestions, improving conversion rates and customer retention. A McKinsey report highlights that businesses using advanced AI personalization techniques see a 5-15% increase in revenue and a 10-30% increase in marketing spend efficiency.
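Production recommenders are usually learned models; as a compact stand-in that could run on an in-store device or phone, the sketch below uses item co-occurrence counts, a classic "frequently bought together" heuristic rather than generative AI, over hypothetical basket data.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def build_cooccurrence(baskets: List[List[str]]) -> Dict[str, Counter]:
    """Count how often each pair of distinct items appears in the same basket."""
    co: Dict[str, Counter] = defaultdict(Counter)
    for basket in baskets:
        items = set(basket)
        for a in items:
            for b in items:
                if a != b:
                    co[a][b] += 1
    return co


def recommend(co: Dict[str, Counter], history: List[str], k: int = 3) -> List[str]:
    """Score items by co-occurrence with the shopper's history; skip items already owned."""
    scores: Counter = Counter()
    for item in history:
        scores.update(co.get(item, Counter()))
    for item in history:
        scores.pop(item, None)
    return [item for item, _ in scores.most_common(k)]


baskets = [
    ["tent", "sleeping_bag", "lantern"],
    ["tent", "lantern"],
    ["sleeping_bag", "pillow"],
    ["tent", "stove"],
    ["lantern", "batteries"],
]
co = build_cooccurrence(baskets)
print(recommend(co, ["tent"]))  # "lantern" ranks first: it co-occurs with "tent" twice
```

The co-occurrence table is small and can be refreshed from the cloud periodically, while scoring happens locally against the shopper's current session, mirroring the edge/cloud split described in this chapter.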

Beyond enhancing the customer experience, generative AI is playing a growing role in inventory management. Retailers traditionally rely on cloud-based systems to monitor stock levels and predict supply chain demands. However, edge computing combined with generative AI allows for real-time inventory analysis on-site. For example, AI models can predict stock shortages or overstock situations and recommend actions such as reordering items or redistributing products across locations. This level of automation reduces wastage, ensures that high-demand items stay in stock, and improves overall operational efficiency.
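The text leaves the forecasting model unspecified. As a classical baseline that an AI-driven demand forecast would feed into, the reorder-point rule below triggers a reorder when stock on hand plus stock on order falls to the expected lead-time demand plus a safety buffer. The demand history and lead time are hypothetical, and the safety-stock term assumes roughly normal, independent daily demand.

```python
import math
import statistics
from typing import List


def reorder_point(daily_demand: List[float], lead_time_days: float, z: float = 1.645) -> float:
    """Expected demand over the lead time plus safety stock.

    z = 1.645 targets roughly a 95% cycle service level under the normal-demand assumption.
    """
    mean_d = statistics.mean(daily_demand)
    std_d = statistics.stdev(daily_demand)
    safety_stock = z * std_d * math.sqrt(lead_time_days)
    return mean_d * lead_time_days + safety_stock


def should_reorder(on_hand: float, on_order: float, rop: float) -> bool:
    """Reorder when the inventory position drops to or below the reorder point."""
    return on_hand + on_order <= rop


demand = [20, 22, 18, 25, 19, 21, 23]  # units sold per day (hypothetical)
rop = reorder_point(demand, lead_time_days=4)
print(f"reorder point: {rop:.1f} units")
print(should_reorder(on_hand=60, on_order=20, rop=rop))
```

An AI forecast would replace the simple mean and standard deviation with predicted demand and uncertainty per store, but the decision rule that turns a forecast into a reorder action can stay this simple and run entirely on local hardware.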

Companies like Amazon have pioneered the use of edge-based AI for retail, leveraging these technologies to personalize product recommendations and optimize warehouse operations. Additionally, startups are introducing new edge AI solutions to revolutionize in-store experiences and provide more interactive, AI-driven shopping environments. These applications are further augmented by advancements in hybrid cloud-edge models, where customer interactions and inventory management tasks are split between cloud and edge devices, ensuring scalability without compromising real-time responsiveness.

In conclusion, edge-enabled generative AI is transforming retail by delivering more personalized shopping experiences and improving inventory management efficiency. These developments are part of a broader shift in retail toward data-driven, AI-powered decision-making that allows retailers to operate smarter, more agile businesses.

Conclusion

Generative AI at the edge is poised to redefine the landscape of how industries operate, not only through incremental improvements but by fundamentally altering workflows across sectors. As edge computing continues to evolve and companies overcome current challenges, generative AI has vast potential to extend its reach. Industries such as healthcare, manufacturing, retail, and urban planning will benefit from more autonomous and responsive systems that adapt in real time to complex environments, enabling faster, more informed decision-making.
