IIT Develops Adaptive Collaborative Interface for Safe Human-Robot Interaction with Xsens Motion Capture

By Xsens MVN Analyze

This article was first published on www.movella.com.

Researchers at the Italian Institute of Technology (IIT) are transforming human-robot collaboration with adaptive interfaces, enabling real-time robot adjustments and safe, seamless interactions.

Key takeaways:

- Real-time adaptation for collaboration: Sirintuna and Ozdamar developed an adaptive collaborative interface (ACI) that allows robots to adjust their actions to the human's movement intention in real time, improving teamwork and efficiency.

- Safety and predictability: Safety protocols and torque-controlled robots reduce risks in human-robot interaction, though unpredictable human behavior remains a challenge.

- Xsens motion capture: The researchers use Xsens for precise, continuous movement data, which is crucial for interpreting human intentions and enabling seamless human-robot collaboration.

- Future applications: The research has potential in healthcare, emergency response, and home assistance, aiming for robots that adapt intuitively in real-life, unstructured environments.

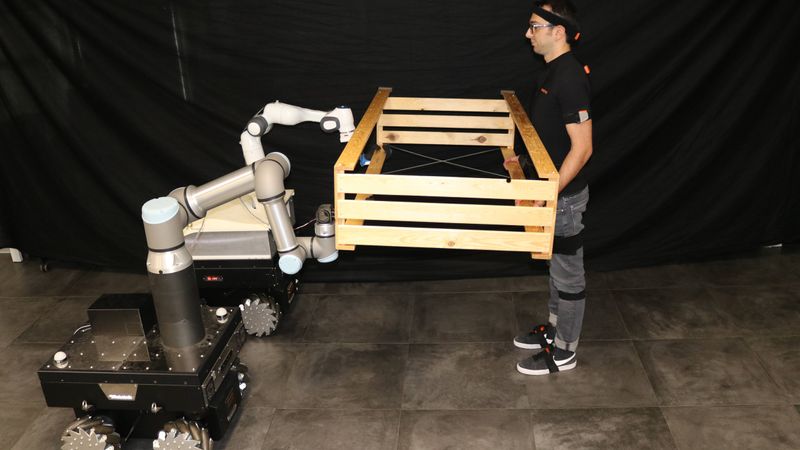

Humans have been working with machines for centuries, and with the ongoing robotics revolution, this collaboration is becoming more equal. Researchers Doganay Sirintuna and Idil Ozdamar from the Italian Institute of Technology (IIT) are at the forefront of this change, investigating how robots can effectively work with humans in tasks such as carrying and delivering objects.

The new challenge

IIT has been actively researching human-robot collaboration for several years, showcasing robots that can perform a variety of tasks, from assisting in drilling holes to serving cups of coffee.

Researchers Doganay Sirintuna and Idil Ozdamar, from the Human-Robot Interfaces and Interaction Laboratory, are developing a framework that allows robots to assist with transporting objects. Their framework, called Adaptive Collaborative Interface (ACI), uses haptic feedback and motion capture data to enable robots to adjust their speed and position for better teamwork with humans.
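One standard way to turn a measured human force into a robot speed adjustment is admittance control, in which the robot behaves like a virtual mass-damper pushed by the person. The article does not describe the ACI's control law, so the following Python sketch is a generic, single-axis illustration rather than the authors' method. The class name `Admittance1D` and the mass and damping values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Virtual mass-damper on one axis: mass * dv/dt + damping * v = human force.

    Hypothetical illustration, not the ACI implementation.
    """
    mass: float = 10.0      # hypothetical virtual mass [kg]
    damping: float = 40.0   # hypothetical virtual damping [N*s/m]
    velocity: float = 0.0   # current commanded velocity [m/s]

    def step(self, force: float, dt: float) -> float:
        """Advance the model by dt seconds (explicit Euler) and return the new velocity."""
        accel = (force - self.damping * self.velocity) / self.mass
        self.velocity += accel * dt
        return self.velocity


# A constant 20 N push for 2 s at 1 kHz: velocity converges toward F / D = 0.5 m/s.
ctrl = Admittance1D()
for _ in range(2000):
    v = ctrl.step(20.0, dt=0.001)
print(round(v, 3))
```

Under a steady push, the commanded velocity settles at force divided by damping. Lowering the damping makes the robot more responsive, which is one parameter an adaptive interface could tune.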

In Ozdamar's study, “Carrying the Uncarriable: A Deformation-Agnostic and Human-Cooperative Framework for Unwieldy Objects Using Multiple Robots,” a framework is presented that lets one person and two robots carry a large, deformable object using flexible straps. In a related study, “Human-Robot Collaborative Carrying of Objects with Unknown Deformation Characteristics,” Sirintuna and Ozdamar explore handling items ranging from flexible ropes to rigid rods. By analyzing human movements and force feedback, the robots can detect how deformable the handled object is and adapt their actions accordingly.
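The article does not say how the robots infer deformability. One simple proxy, shown here as a hypothetical sketch, fits an effective stiffness k to paired samples of how far the human's hand moves along the carrying axis and the force measured along that axis. A soft rope yields a small k and a rigid rod a large one. The function names, sample values, and category thresholds are all assumptions.

```python
from typing import Sequence


def estimate_stiffness(displacement: Sequence[float], force: Sequence[float]) -> float:
    """Least-squares slope k of force = k * displacement (line through the origin).

    displacement: hand displacement along the carrying axis [m] (hypothetical input)
    force: force measured along the same axis [N] (hypothetical input)
    """
    sxx = sum(x * x for x in displacement)
    if sxx == 0.0:
        raise ValueError("need non-zero displacement to estimate stiffness")
    sxf = sum(x * f for x, f in zip(displacement, force))
    return sxf / sxx


def describe(k: float, soft_below: float = 50.0, rigid_above: float = 2000.0) -> str:
    """Map a stiffness estimate [N/m] to a coarse category (thresholds are hypothetical)."""
    if k < soft_below:
        return "highly deformable"
    if k > rigid_above:
        return "rigid"
    return "partially deformable"


# Hypothetical samples: roughly 3 N per extra centimetre, so k is close to 300 N/m.
x = [0.01, 0.02, 0.03, 0.04]
f = [3.1, 5.9, 9.0, 12.1]
k = estimate_stiffness(x, f)
print(round(k), describe(k))
```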

Sirintuna and Ozdamar's research focuses on mobile manipulators: robots that combine a wheeled base with a multi-joint robotic arm, known as a collaborative robot or cobot. They explain, "In our setup, we have 9 degrees of freedom, and with wheeled robots, you can perform fast movements. So, we selected an industrial robot to concentrate on collaboration tasks."

The choice of Xsens

In their work, Sirintuna and Ozdamar rely heavily on Xsens motion capture to collect accurate movement data to guide the robots. Xsens allows the researchers to conduct experiments with minimal setup, making it a practical choice for a laboratory environment. Sirintuna and Ozdamar stress the importance of precise, continuous data when working in close collaboration with humans. They appreciate Xsens’ reliability, noting, “We used Xsens because its data was very continuous, which allowed us to provide a reliable stream of data for the human-robot collaboration. Optical sensors we have tried can experience jumps in position, affecting the performance of our framework.”
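Position jumps of the kind the researchers mention can be flagged automatically by checking whether consecutive samples imply a speed no human limb could reach. The sketch below is illustrative only: the 100 Hz sample rate, the 5 m/s speed limit, and the trajectory are hypothetical values, not Xsens or IIT parameters.

```python
import math
from typing import Sequence

Point = tuple[float, float, float]


def find_jumps(positions: Sequence[Point], rate_hz: float, max_speed: float) -> list[int]:
    """Return indices i where the step from sample i-1 to sample i implies a
    speed above max_speed [m/s], i.e. a physically implausible position jump."""
    max_step = max_speed / rate_hz  # largest plausible displacement per frame [m]
    jumps = []
    for i in range(1, len(positions)):
        if math.dist(positions[i - 1], positions[i]) > max_step:
            jumps.append(i)
    return jumps


# Hypothetical hand trajectory at 100 Hz with one 0.3 m glitch at index 3.
track = [(0.00, 0.0, 1.0), (0.01, 0.0, 1.0), (0.02, 0.0, 1.0),
         (0.32, 0.0, 1.0), (0.04, 0.0, 1.0)]
print(find_jumps(track, rate_hz=100.0, max_speed=5.0))
```

A single spurious sample is flagged twice, once for the jump away (index 3) and once for the jump back (index 4), so one glitch shows up as two implausible steps.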

A key focus of their research is interpreting human intentions through movement data. Xsens provides detailed kinematic data, enabling them to categorize actions such as standing still, pushing, or pulling. Through repeated training, they achieved 98% accuracy in predicting human movements within just three months. “We label specific joint positions and angles as pushing or pulling and use these as input for our system,” Ozdamar explains. Sirintuna and Ozdamar also developed an algorithm that interprets a person’s rotational intent by analyzing torso and hand movements, with the goal of creating robots that assist efficiently in tasks such as turning while carrying objects.
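The article says joint positions and angles are labeled as pushing or pulling and used as input, but it does not name the model behind the 98% figure. As a deliberately simple stand-in, the sketch below trains a nearest-centroid classifier on labeled feature vectors. The two features and all training values are hypothetical.

```python
import math
from collections import defaultdict
from typing import Sequence

Features = tuple[float, ...]


def train_centroids(samples: Sequence[tuple[Features, str]]) -> dict[str, Features]:
    """Nearest-centroid training: average the labeled feature vectors of each class."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] += 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def predict(centroids: dict[str, Features], features: Features) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical features: (hand velocity along carrying axis [m/s], elbow angle rate [rad/s]).
training = [
    ((0.00, 0.0), "standing still"), ((0.01, 0.1), "standing still"),
    ((0.40, 1.2), "pushing"), ((0.35, 1.0), "pushing"),
    ((-0.40, -1.1), "pulling"), ((-0.30, -0.9), "pulling"),
]
model = train_centroids(training)
print(predict(model, (0.30, 0.8)))
```

A real system would use many more joint features and samples. Nearest-centroid is chosen here only because it shows the label, train, and predict loop in a few lines.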

The research results

As a result of applying the adaptive collaborative interface, Sirintuna and Ozdamar found that cobots can handle tasks with higher precision, anticipating human movements in real time and adjusting their actions based on the human's motion and force data. In the study on objects with different deformation characteristics, participants assisted by a robot operating under ACI "completed the task faster, with less effort and higher effectiveness." In the study where two robots helped one person transport a bulky object using deformable straps, the ACI gave the robots a faster response and better-aligned movement, and required less force input from the person, making the lifting task easier.

The findings underscore the power of applying ACI for human-robot collaboration scenarios and lay a foundation for future research in this area.

The future of human-robot collaboration and humanoids

Looking ahead, Sirintuna and Ozdamar see their research supporting applications in healthcare, emergency response, and home assistance. They also expect it to help robots become common in public spaces and workplaces, meeting needs in sectors such as agriculture, which is affected by climate change. The researchers note that “human-robot teams that leverage human adaptability and decision-making can perform tasks with the flexibility required in unstructured environments.”

However, making this robotized future a reality requires addressing safety concerns in human-robot collaboration. The research robots are torque-controlled, equipped with soft joints, and programmed to avoid reacting to unexpected high-velocity impacts or large force inputs. Yet, as the researchers point out, the unpredictability of human behavior remains the greatest challenge for real-world applications. “We design algorithms based on expected movements, but humans often behave in unexpected ways,” says Sirintuna. Ozdamar adds, “We can ensure slightly more safety on the robots’ side than on a person’s because we can predict the robot’s behavior.”
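The behavior described above, where the robot does not react to unusually large force inputs, can be pictured as a gate placed in front of the motion command. This single-axis Python sketch is hypothetical: the function name and the 60 N and 0.5 m/s limits are assumptions, not IIT's actual safety settings.

```python
def safe_command(force: float, velocity_cmd: float,
                 force_limit: float = 60.0, speed_limit: float = 0.5) -> float:
    """Hypothetical single-axis safety gate.

    A force whose magnitude exceeds force_limit [N] is treated as an unexpected
    impact and produces no motion; otherwise the commanded velocity is clamped
    to the range [-speed_limit, +speed_limit] [m/s].
    """
    if abs(force) > force_limit:
        return 0.0  # ignore the input rather than react to it
    return max(-speed_limit, min(speed_limit, velocity_cmd))


print(safe_command(100.0, 0.3), safe_command(20.0, 0.8), safe_command(10.0, 0.2))
```

Ignoring an out-of-range input keeps the robot's response predictable, which matches the researchers' point that the robot's side is the easier one to make safe.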

Ultimately, Sirintuna and Ozdamar’s work lays a foundation for future robots capable of safe, intuitive collaboration with humans, bringing us closer to a world where robots seamlessly assist in our daily lives.