The 2023 Manufacturing Robotics Report: Hardware

Innovations such as biomimicry, software inspired by insect brains, smart skin, neuromorphic computing, and other emerging technologies further expand robots' capabilities and push the boundaries of robotics hardware.

Image credit: Fortop

The 2023 Manufacturing Robotics Report was created to keep you up to date on the complexity and depth of robotics and to give you specific insights into the current state of robotics manufacturing.

Over the five articles of the report, we examine the core technologies that make up a robotics project and shed light on the trends and challenges in creating them.


Hardware requirements and the rise of embedded systems

Robots extend human capabilities with more precision, less fatigue, and a higher tolerance for hazardous conditions. Today, robots are moving beyond the limited movements of the factory floor into uncontrolled environments shared closely with humans. This progress is made possible by advances in robotics hardware, which includes the motors, joints, and sensors used to create and control the movement required to solve a given task.

This move also demands more computation to solve the perceptual, planning, and control tasks that ‘robots in the wild’ face. Advances in software, particularly artificial intelligence (AI) and machine learning, go some way towards delivering the smart robots of the future, but computing platforms will also play a significant role in solving complex perceptual and control tasks.

In the first half of this chapter, we give an overview of the core hardware requirements for most robotics projects. In the second half, we look at the rise of embedded systems that enable cutting-edge robotics.

Robotics hardware overview

Sensors

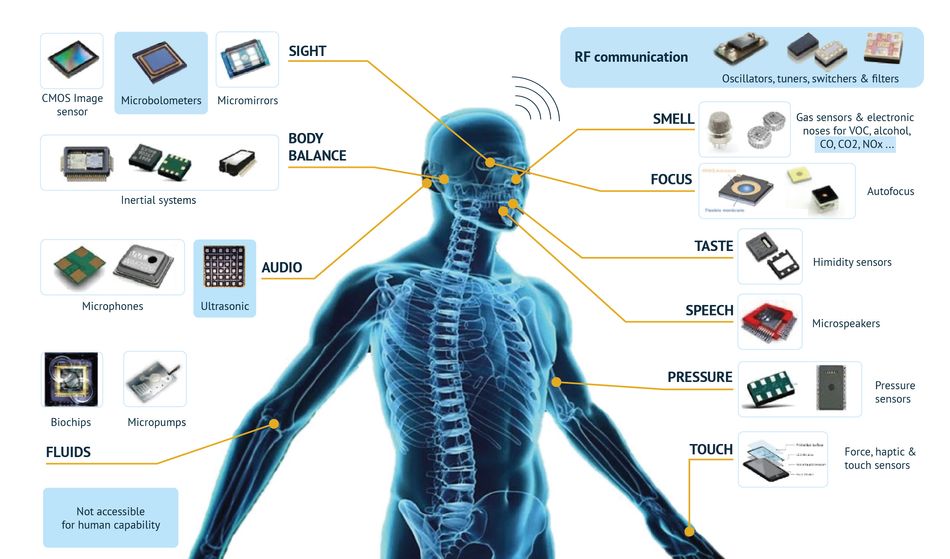

Sensors are the method by which the robot receives input from the world around it, similar to the human senses of sight, hearing, balance, touch, and smell. Common types of robot sensors are:

- Cameras for a visual of the environment

- Microphones to pick up sound

- Force sensors to detect weight and resistance

- Proximity sensors that pick out objects in an environment

- Gyroscopes that identify robot orientation

- Environmental sensors to measure humidity, temperature, gases, pollutants, and other factors

Sensors are passive, meaning that they do not give the robot any type of direction. Instead, the information from the robot’s sensors is typically sent to a central processing unit (CPU), where the input is transformed into action.

One of the biggest trends in robotics sensors is the increasing use of ultrasonic sensors. An ultrasonic sensor measures the distance to a target object by emitting ultrasonic sound waves and converting the reflected sound into an electrical signal. The distance follows from the time the sound takes to travel to the target and back.

Ultrasonic sensors have two main components: the transmitter (which emits the sound using piezoelectric crystals) and the receiver (which detects the sound after it has travelled to and from the target).
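The time-of-flight calculation behind an ultrasonic distance reading can be sketched in a few lines of Python. This is a minimal illustration rather than any particular sensor's driver: the function name is hypothetical, and the speed of sound is assumed to be about 343 m/s (dry air at roughly 20 °C). Because the pulse travels to the target and back, the round-trip time is halved.

```python
# Assumed value: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_S = 343.0


def echo_to_distance_m(round_trip_s: float,
                       speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert a measured echo round-trip time into a one-way distance."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The sound covers the sensor-to-target distance twice (out and back).
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # A 10 ms echo corresponds to a target a little under two metres away.
    print(f"{echo_to_distance_m(0.010):.3f} m")
```

In practice the speed of sound varies with temperature and humidity, so a real system would likely calibrate this constant.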

Central processing unit

The CPU is the hardware and software interface between the robot sensors and the control system. The CPU parses commands for the robot to execute and routes them to specific components of the robot. In automated robots, it processes the information received from the input sensors, interprets the information, performs calculations, and may apply machine learning algorithms. In that sense, the CPU functions as the major component of the robot’s brain.

The CPU provides additional processing for error handling. When the robot fails to execute a command, or when something unexpected occurs in the robot’s environment, the CPU determines how the robot handles the situation. Some examples of error-handling are:

- Retrying the command

- Requesting manual control from the robot operator

- Recording the error as a data point for machine learning

- Disabling the robot
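One way these error-handling options could fit together is as an escalation policy: retry first, record each failure, then hand over to an operator or shut down. The sketch below is illustrative only. The names `run_with_recovery` and `Outcome`, the retry limit, and the order of escalation are assumptions, not a description of any specific robot controller.

```python
import enum
from typing import Callable, List


class Outcome(enum.Enum):
    SUCCEEDED = "succeeded"
    MANUAL_CONTROL = "manual_control"
    DISABLED = "disabled"


def run_with_recovery(command: Callable[[], bool],
                      max_retries: int,
                      operator_available: bool,
                      error_log: List[str]) -> Outcome:
    """Try a command, retrying on failure, then escalate (hypothetical policy)."""
    for attempt in range(1 + max_retries):
        if command():
            return Outcome.SUCCEEDED
        # Record every failure so it can later serve as a data point.
        error_log.append(f"attempt {attempt + 1} failed")
    if operator_available:
        # Retries are exhausted: request manual control from the operator.
        return Outcome.MANUAL_CONTROL
    # Last resort when nobody can take over: disable the robot.
    return Outcome.DISABLED


if __name__ == "__main__":
    log: List[str] = []
    result = run_with_recovery(lambda: False, max_retries=2,
                               operator_available=True, error_log=log)
    print(result, log)
```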

For a semi-autonomous robot or a manual robot, the sensor input may be relayed to an external human operator. The operator’s commands are sent back through the CPU and to the robot.

In some robots, a lighter weight single board computer (SBC) might be installed as middleware to process input and send commands. An SBC gathers sensor input and may relay it to an external CPU for further processing. The CPU then sends commands back to the robot, which are parsed by the SBC and sent to specific parts of the robot.

With the advent of artificial intelligence and edge computing, newer SBCs have AI-friendly designs that boost efficiency and support a wider range of applications. Edge computing allows data processing and analytics to take place at the network’s edge, providing rapid real-time insights and allowing SBCs to be used in IoT-based systems with greater ease and adaptability. One of the most significant benefits SBCs offer is flexibility in platform configuration. The system can be designed with minimum requirements and can then be scaled according to the application. Purpose-built PC systems do not provide such agility.

Control system

The robot control system sends the commands that govern the robot’s actions, similar to the human nervous system, which sends the signals that direct the human body to move. The robot control system depends on the robot’s level of autonomy.

- With fully autonomous robots, the control system may be a closed feedback loop in which the robot is programmed to perform a specific task. The CPU uses input from the sensors to inform the action to take and may detect whether the action was performed correctly.

- With manual robots, the control system is manipulated by the human operator using an external console. The CPU routes commands from the operator to the robot.

- With semi-autonomous robots, the control system is a hybrid of both types of control systems. The level of autonomy determines whether the human operator makes all of the high-level decisions using the control system or whether the robot requests human input as needed.

Hardware for the robot control system can take many forms, depending on the environment, tasks to perform, and manufacturing capabilities. For example:

- A manual or semi-autonomous robot may be controlled using various types of hardware, such as a personal computer, a purpose-built hand controller, headset, foot pedals, a microphone for voice commands, or even a textile-based controller, such as a VR-like bodysuit.

- An autonomous robot may be controlled entirely by the onboard CPU, or it may be controlled by software on an external server.
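The closed feedback loop described for fully autonomous robots can be illustrated with a simple proportional controller, in which each command is scaled by the gap between the target and the sensed value. This is a toy sketch under stated assumptions: the function names are hypothetical, the gain is arbitrary, and the actuator is idealised so that the joint moves exactly as commanded with no noise or delay.

```python
from typing import List


def proportional_step(target: float, measured: float, gain: float) -> float:
    """Return a correction proportional to the current error."""
    return gain * (target - measured)


def simulate_joint(target_deg: float, start_deg: float = 0.0,
                   gain: float = 0.5, steps: int = 20) -> List[float]:
    """Run the sense -> compute -> act loop and record the joint angle."""
    angle = start_deg
    history = [angle]
    for _ in range(steps):
        # Sense: in this idealised model the sensor reads the angle exactly.
        command = proportional_step(target_deg, angle, gain)
        # Act: the idealised actuator moves by exactly the commanded amount.
        angle += command
        history.append(angle)
    return history


if __name__ == "__main__":
    trace = simulate_joint(90.0)
    print(f"final angle: {trace[-1]:.4f} degrees")
```

With a gain of 0.5 the remaining error halves on every pass through the loop, which is why the angle settles close to the target. Real controllers typically add integral and derivative terms and must cope with sensor noise and actuator limits.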

Motors and actuators

Motors and actuators are the mechanisms for robot mobility, similar to the human muscular and cardiovascular systems, which provide the means for human movement. Without motors and actuators, the robot cannot perform tasks in the physical world.

Advances in motor technology enable smaller casings, which are often injection moulded, so that motors are more agile and have an increased range of applications. Recent advances in motor design include newer permanent magnets that deliver more power from smaller motors. Higher-resolution encoders improve accuracy, and motor tuning can also be improved to help with accuracy and cycle time. This creates opportunities for higher-precision robotics applications, and it improves the repeatability and throughput of the robot and automation. Smaller motors with better torque-to-weight ratios achieve higher peak speeds for a short time, as well as faster acceleration and deceleration times. Smaller motors also reduce robot mass, which in turn permits more rigidity and reduces vibration.
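To make the link between encoder resolution and accuracy concrete, the smallest angle an encoder can distinguish is one full turn divided by its counts per revolution. The sketch below assumes a rotary encoder on the motor shaft with no gearing; the function name and the example resolutions are illustrative only.

```python
def degrees_per_count(counts_per_rev: int) -> float:
    """Smallest shaft angle (in degrees) that one encoder count represents."""
    if counts_per_rev <= 0:
        raise ValueError("counts per revolution must be positive")
    return 360.0 / counts_per_rev


if __name__ == "__main__":
    # Illustrative resolutions: more counts means a finer measurable step.
    for counts in (1024, 4096, 16384):
        print(f"{counts} counts/rev -> {degrees_per_count(counts):.5f} degrees")
```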

Motors and actuators are similar to each other, but serve distinct purposes.

- A motor has rotational movement and is usually intended for continuous power. For example, a motor may drive a robot across terrain, operate an internal fan, or rotate a drill.

- An actuator often has linear movement and is usually intended for more precise operation. For example, an actuator may be used to provide the flexible bending motion of a robotic elbow, or to rotate a robot torso. Most actuators are electrical, hydraulic, or pneumatic.

Degrees of freedom (DOF) is an important concept in robotic movement that refers to the number of independent axes along or around which a mechanical joint can move. The DOF of a robotic joint is limited by hardware, including the actuators that power the movement.

End effectors

End effectors (sometimes called end-of-arm tools or EOAT) are mechanical assemblies positioned at the end of a robot arm that allow the robot to manipulate objects in the physical environment. End effectors essentially act as the robot’s hands, although their function varies from that of human hands.

- Tool-specific end effectors can be highly specialised for robots that perform specific tasks. The possibilities for these end effectors are wide-ranging, from power tools like a drill or electric sander to a pressure washer, a powered vacuum suction cup, or tools for scientific research.

- Gripper-type end effectors may be used to grasp and hold objects, and are more generalised than tool attachments. Grippers may take on a variety of forms, such as clamps controlled by actuators, electromagnetic systems, or static features, like hooks or specially-designed fasteners.

Some robots have a combination of gripper and tool-specific end effectors. For example, a robot built to weld pipes may have a welding torch at one arm and a gripper to hold the pipe in place at another.

With interchangeable end effector attachment systems, the operator can choose the best tool for a task, whether it is a specialised power tool, or a more generalised gripper-type attachment. Some grippers can hold and operate tools, though they may offer less precise control for a human operator than tool-specific end effectors.

The field of soft robotics extends the versatility of end effectors by replacing rigid components with softer and more flexible end effectors. Soft robotics mimics biological qualities, which may improve a robot’s ability to manipulate fragile or pliable objects. Minimising rigid surfaces on the robot may also reduce safety hazards for robot operators.

Connectors

Connectors are used in robotics to relay commands and power throughout the robot. Motors, actuators, sensors, and end effectors require both data bus and electrical circuits to operate. Types of connectors used in robotics include:

- Robust connectors for data and power, such as circular connectors, push-pull connectors, and micro-D connectors. These can be connected and disconnected by hand, yet are designed to prevent accidental disconnection. Circular connectors can be very compact, making them effective for smaller moving parts at end effectors and robotic joints.

- Data networking connectors. These connectors are specific to data signals and can provide a faster connection than multi-purpose connectors. RJ45 connectors are Ethernet connectors that are commercially available in a variety of flexible shells that suit industrial robotics.

- Wireless data connectors. Wireless connectors are critical for mobile robots that send sensor data and receive commands via Wi-Fi and radio signals. They are also used in stationary industrial robots connected to a central server.

Today, many types of connectors serve the purposes of robotics. Commercial connectors are available in housings that can withstand harsh environments and are flexible enough to handle the robot’s movement. Connectors can inform robot design and assembly at end effectors and robotic joints, and can affect the speed of data transfer.

Powering the robot

The power supply is a major manufacturing challenge, particularly for mobile robots. Stationary robots may connect directly to an industrial power source, while mobile robots are usually powered with rechargeable lithium-ion batteries. Batteries may require custom design and manufacturing based on the robot’s wattage requirements, mobility, weight restrictions, charging source, and environmental factors.
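The trade-off between wattage requirements and weight restrictions can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope aid, not a battery design method: the function names are hypothetical, the usable fraction of 0.8 is an assumed safety margin, and the specific energy of 150 Wh/kg is a placeholder that should be replaced with the figure for the actual cells.

```python
def required_capacity_wh(average_power_w: float, runtime_h: float,
                         usable_fraction: float = 0.8) -> float:
    """Energy the pack must store to run for runtime_h at average_power_w."""
    if not 0.0 < usable_fraction <= 1.0:
        raise ValueError("usable_fraction must be in (0, 1]")
    # Only part of the nominal capacity is assumed usable in practice.
    return average_power_w * runtime_h / usable_fraction


def pack_mass_kg(capacity_wh: float,
                 specific_energy_wh_per_kg: float = 150.0) -> float:
    """Rough cell mass for a given capacity (placeholder specific energy)."""
    return capacity_wh / specific_energy_wh_per_kg


if __name__ == "__main__":
    capacity = required_capacity_wh(average_power_w=200.0, runtime_h=4.0)
    print(f"capacity: {capacity:.0f} Wh, mass: {pack_mass_kg(capacity):.1f} kg")
```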

The power source for charging the robot battery is another important consideration. It may be necessary to design a specialised battery charger that accounts for the battery’s power requirements, power source limitations, and environmental factors. The charger may be installed directly on the robot itself or the charger may be designed as standalone equipment. Some power sources include:

- Standard industrial power from a municipal power station. For mobile robotics, a standard power source usually powers a standalone battery charger rather than the robot itself.

- Solar panels, installed either on the robot or at a separate charging station. Panels mounted on the robot can also provide backup power during remote operation.

- A portable power generator, often fuelled by petrol, propane, or bio-diesel. Advances in generator technology increase the portability and fuel-efficiency of generators, and expand the possibilities for fuel sources.

Another design consideration for battery-operated robots is battery hot-swapping during robot operation. A robot with hot-swapping capabilities is powered with multiple batteries. When one battery is discharged, it can be replaced while the robot is in operation. The discharged battery is then recharged for future use.
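The hot-swapping logic can be sketched as a small model in which the robot draws from several batteries and only allows a swap while another battery can carry the load. The class and method names below are hypothetical, and the model ignores real-world concerns such as voltage matching and connector sequencing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Battery:
    label: str
    charge_wh: float


class HotSwapPack:
    """Toy model of a robot powered by two or more swappable batteries."""

    def __init__(self, batteries: List[Battery]) -> None:
        if len(batteries) < 2:
            raise ValueError("hot-swapping needs at least two batteries")
        self.batteries = list(batteries)

    def draw(self, energy_wh: float) -> None:
        """Take energy from the batteries in order, moving on when one is empty."""
        remaining = energy_wh
        for battery in self.batteries:
            used = min(battery.charge_wh, remaining)
            battery.charge_wh -= used
            remaining -= used
        if remaining > 0:
            raise RuntimeError("all batteries discharged")

    def swap(self, slot: int, fresh: Battery) -> Battery:
        """Replace one battery while the others keep the robot running."""
        others = sum(b.charge_wh
                     for i, b in enumerate(self.batteries) if i != slot)
        if others <= 0:
            raise RuntimeError("no other battery can carry the load")
        removed = self.batteries[slot]
        self.batteries[slot] = fresh
        return removed  # The removed battery can now be recharged.


if __name__ == "__main__":
    pack = HotSwapPack([Battery("A", 50.0), Battery("B", 50.0)])
    pack.draw(60.0)  # Empties A and takes the rest from B.
    spent = pack.swap(0, Battery("C", 50.0))
    print(spent.label, [b.label for b in pack.batteries])
```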

Robotics hardware challenges

Resource shortages

Global shortages of computer chips and commercial off-the-shelf hardware have a major impact on robotics manufacturing.[11] Hardware shortages are outside of the control of robotics manufacturers, and may result in design compromises, increased costs, missed deadlines, and inaccurate projections.

Environmental conditions

The robot operating environment is a consideration for every piece of robot hardware. Robots may work in hazardous environments, be exposed to weather, dust, or pressure, or be submerged in water. This means that ingress protection (IP) rating and chemical tolerance limit the hardware that can be incorporated into the robot design. Custom housing for hardware and the robot chassis can mitigate some of these limitations, but may also result in additional manufacturing challenges. Software can also be leveraged to minimise contact with hazards. For example, an autonomous system might be programmed so that the robot can recognise and intelligently navigate hazardous environments.
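As a rough illustration of how an IP rating narrows the choice of hardware, the sketch below filters a parts list against a required rating. An IP code such as IP67 has two digits, the first for protection against solids and the second for protection against liquids. The function names and the catalogue are hypothetical, only plain two-digit codes are handled, and comparing the digits numerically is a simplification: under the IP standard, the immersion ratings do not automatically imply the water-jet ratings, so a real selection should check the datasheet.

```python
from typing import Dict, List, Tuple


def parse_ip(code: str) -> Tuple[int, int]:
    """Split a plain code like 'IP67' into (solids digit, liquids digit)."""
    text = code.strip().upper()
    if len(text) != 4 or not text.startswith("IP") or not text[2:].isdigit():
        raise ValueError(f"unsupported IP code: {code!r}")
    return int(text[2]), int(text[3])


def meets_requirement(component_ip: str, required_ip: str) -> bool:
    """Simplified check: both digits must be at least the required digits."""
    solids, liquids = parse_ip(component_ip)
    need_solids, need_liquids = parse_ip(required_ip)
    return solids >= need_solids and liquids >= need_liquids


def usable_components(catalogue: Dict[str, str],
                      required_ip: str) -> List[str]:
    """Return the names of components that pass the simplified check."""
    return [name for name, ip in catalogue.items()
            if meets_requirement(ip, required_ip)]


if __name__ == "__main__":
    # Hypothetical parts list for illustration only.
    parts = {"camera": "IP67", "cooling fan": "IP20", "connector": "IP65"}
    print(usable_components(parts, "IP65"))
```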

Increasing computational power

As robotic applications expand from controlled to uncontrolled environments, the computational load naturally increases. In combination with software advances, computing platforms that enable efficient and fast computation to solve complex perceptual and control tasks will be essential. Key advances in this area include:

Open research and development platforms

Open platforms for research and development that encompass robot hardware, software, computing platforms, and simulation are supported by many academics. These open platforms facilitate comparison and benchmarking of various methodologies while allowing researchers to concentrate on the algorithmic progress. Standardisation is necessary to hasten the development of open research because it enables efficient exchange and integration of algorithmic, hardware, and software components.

Computational solutions inspired by insect brains

Uncrewed aerial vehicles (UAVs) are particularly resource-constrained robotic systems because they must be fast, light, and energy efficient. Some computational solutions for UAV tasks such as obstacle avoidance, object targeting, altitude control, and landing are inspired by insect brains. These solutions draw on principles of insect biology, including embodiment, tight sensorimotor coordination, swarming, and parsimony. In UAVs, they take the form of algorithms and multi-purpose circuits.

An important insight from these solutions is that close integration of algorithms and hardware leads to extremely compact, powerful, and efficient systems. Hardware platforms from microcontrollers to neuromorphic chips can support these bio-inspired algorithms. The interface between computing and movement-generating hardware is a particularly important area of development.[12]

Artificial skin: combining sensors with materials

An emerging area of robotics is artificial skin, or smart skin, which integrates robot sensor hardware with the actual robot form. While this area of research has multiple applications, one of the initial areas of interest is smart skin that enables closer robot-human interaction. The smart skin is used to sense unexpected physical interactions. There is an opportunity for fabric with computing capabilities to be integrated directly into the sensor.[1] [2] [12]

Neuromorphic computing hardware

Neuromorphic computing is a method of computer engineering in which elements of a computer are modelled after systems in the human brain and nervous system. It uses specialised computing architectures that reflect the morphology of neural networks from the bottom up: dedicated processing units emulate the behaviour of neurons directly in hardware, and a web of physical interconnections (bus systems) facilitates the rapid exchange of information.

Neuromorphic computing hardware is expected to take its place among other platforms – the central processing unit (CPU), graphics processing unit (GPU), and field programmable gate array (FPGA). Neuromorphic hardware systems are promising in robotics thanks to their modularity and flexibility. These systems have a high degree of parallelism and asynchronous event-driven implementation, meaning that multiple computations can be performed quickly, simultaneously, and automatically. Neuromorphic systems incorporate in-hardware learning by combining processing and memory, unlike traditional systems that have separate components for these functions. This type of machine learning is supported by software algorithms that are based on synaptic plasticity and neural adaptation.[13]
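The idea of a processing unit that emulates a neuron and reacts only to events can be illustrated in software with a leaky integrate-and-fire model, a common simplified neuron model. This is a toy simulation, not a description of any neuromorphic chip: the function name, threshold, and leak factor are assumptions chosen for readability.

```python
from typing import List, Sequence


def simulate_lif(input_current: Sequence[float], threshold: float = 1.0,
                 leak: float = 0.9, reset: float = 0.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    potential = reset
    spikes: List[int] = []
    for step, current in enumerate(input_current):
        # Integrate the input while the stored potential leaks away.
        potential = leak * potential + current
        if potential >= threshold:
            # Emit a spike (an event) and return to the resting state.
            spikes.append(step)
            potential = reset
    return spikes


if __name__ == "__main__":
    # Three small inputs accumulate until the neuron fires once.
    print(simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0]))
```

The neuron produces output only when its accumulated input crosses the threshold, which is the event-driven behaviour the text refers to; with no input, nothing is emitted.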

Summary

Robots have many applications that extend human capabilities, and robot hardware requirements vary depending on the tasks that a robot is built to perform. Common parts of a robot are sensors, the CPU, motors, actuators, end effectors, and connectors. All of these components pose hardware considerations individually and as part of a system.

Outside of the robot’s working parts, there are other factors that raise hardware challenges for robot manufacturers. Resource shortages can result in design changes and manufacturing delays, while environmental conditions limit the materials and components used on the robot, and may require software-based solutions.

Recent innovations in robotics technology include increased computational power, advanced software solutions, and developments in biomimicry and related fields, such as software based on insect brains, smart skin, neuromorphic computing, and other emerging technologies. These innovations extend the capabilities of robots and advance the field of robotics hardware.


The 2023 Manufacturing Robotics Report

Introduction chapter: The 2023 Manufacturing Robotics Report

The 2023 Manufacturing Robotics Report: Materials

The 2023 Manufacturing Robotics Report: Robotic Projects and Tech Specs

The 2023 Manufacturing Robotics Report: Hardware

The 2023 Manufacturing Robotics Report: Manufacturing

Reference list

1. Gabriel Aguiar Nour, State of the Art Robotics, Ubuntu Blog, May and June 2022 edition

2. Sven Parusel, Sami Haddadin, Alin Albu-Schaffer, Modular state-based behaviour control for safe human-robot interaction: A lightweight control architecture for a lightweight robot, IEEE International Conference on Robotics and Automation, 2011

3. Mathanraj Sharma, Introduction to Robotic Control Systems, Medium Newsletter, Sept 2020

4. Ravi Teja, Blog on Raspberry Pi vs Arduino, www.electronicshub.org, April 2021

5. Mats Tage Axelsson, Top 5 Advanced Robotics Kits, www.linuxhint.com, September 2022

6. Techopedia contributor, Actuator, www.techopedia.com, Jan 2022

7. Tiffany Yeung, What Is Edge AI and How Does It Work?, https://blogs.nvidia.com/blog/2022/02/17/what-is-edge-ai/, Feb 2022

8. NT Desk, The state has the potential to grow as an electronic manufacturing centre, www.navhindtimes.in, Oct 2019

9. Dr Matthew Dyson, Printed Electronics: Emerging Applications Accelerate Towards Adoption, IDTechEx, Nov 2021

10. Advanced network professionals blog, www.getanp.com/blog/35/hardware-your-business-needs-to-succeed.php, Dec 2019

11. Advanced Mobile Group, The Latest Technology and Supply Chain Trends in Robotics for 2022 and Beyond. https://www.advancedmobilegroup.com/blog/the-latest-technology-and-supply-chain-trends-in-robotics-for-2022-and-beyond, Oct 2022.

12. Moncourtois, Alyce. Can Insects Provide the "Know-How" for Advanced Artificial Intelligence? AeroVironment, 2019. https://www.avinc.com/resources/av-in-the-news/view/microbrain-case-study

13. Yirka, Bob. Biomimetic elastomeric robot skin has tactile sensing abilities. Tech Xplore. June 2022. https://techxplore.com/news/2022-06-biomimetic-elastomeric-robot-skin-tactile.html