The Convergence of Edge AI and Cloud: Making the Right Choice for Your AI Strategy

Weighing Latency, Privacy, and Scalability to Optimize Your AI Strategy

This article was first published on www.edgeimpulse.com.

Across industries, artificial intelligence (AI) stands as a pivotal force driving innovation. From revolutionizing healthcare diagnostics to transforming financial services and Industry 4.0, AI's impact is profound and far-reaching. However, as the capabilities of AI continue to expand, a new debate emerges: edge AI vs. cloud computing.

While cloud computing has been the backbone of AI development and deployment, the future of innovation is increasingly being shaped at the edge. Edge AI, with its promise of real-time processing and reduced latency, offers unparalleled opportunities for advancements in smart devices and the Internet of Things (IoT).

Edge vs. Cloud

The debate between edge AI and cloud computing has gained significant traction as the demand for real-time data processing and low-latency responses grows. Cloud computing offers immense computational power, scalable resources, and centralized data storage. However, its centralized nature introduces latency and a dependency on stable internet connectivity, which can be limiting for applications that need immediate, real-time responses.

Edge AI is a game-changer, addressing many of the limitations inherent in cloud computing via its ability to process data locally on devices. This decentralized approach ensures faster decision-making and enhances data privacy by keeping sensitive information closer to the source.

According to industry projections, 75% of data will be processed at the edge by 2025, underscoring the growing importance of edge AI in the future of technology. As the debate continues, it is becoming increasingly clear that the future of AI may not lie in choosing between edge and cloud, but rather in integrating both to harness their respective strengths for a more versatile and efficient AI ecosystem.

Understanding Edge AI and Cloud Computing

What is edge AI?

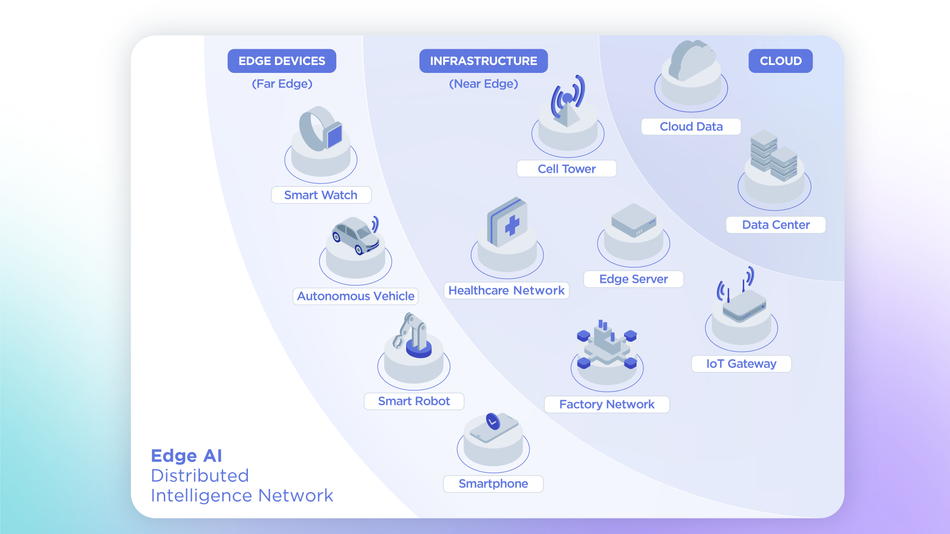

Edge AI is the process of running artificial intelligence (AI) algorithms on devices at the edge of the network, instead of the cloud. The “edge” refers to the periphery of a network, which includes end-user devices and equipment that connects devices to larger networking infrastructure, such as the internet.

Learn more with this free Introduction to Edge AI course.

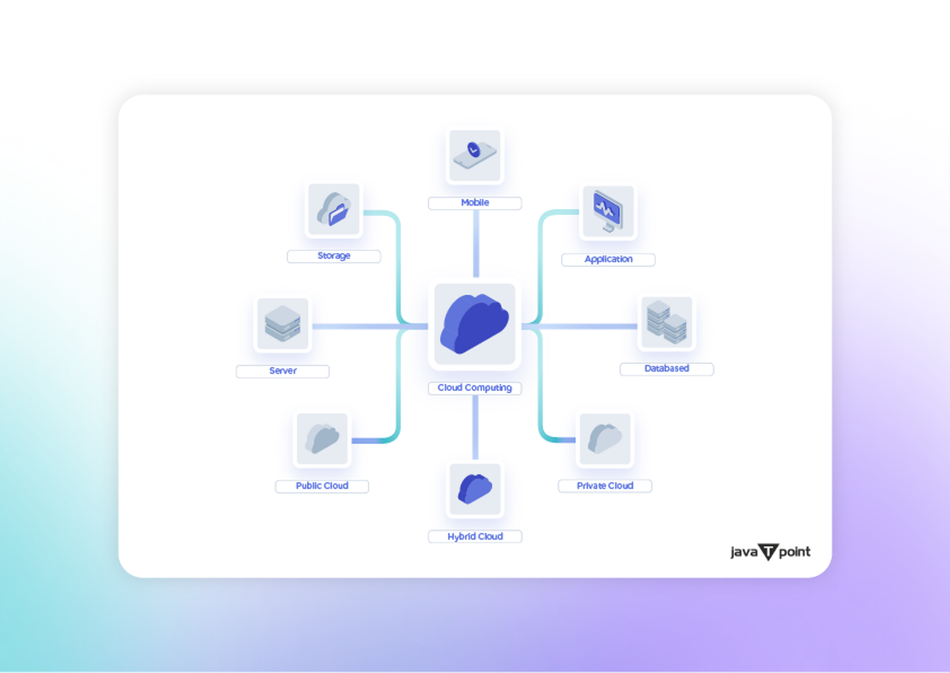

What is cloud computing?

Cloud computing is a technology that allows the access and use of computing resources such as servers, storage, and applications over the internet. Instead of storing data and running software on local computers, users can utilize powerful remote servers that enable vast amounts of computing power and storage without the need for expensive hardware. Processing large amounts of data on the cloud requires a robust connection.

Key differences in architecture and processing

The architectural and processing differences between edge AI and cloud computing are fundamental to understanding their unique advantages and applications. Cloud computing architecture relies on centralized data centers that provide vast computational power and storage.

Data from various devices and applications is sent to these centralized servers, where it is processed and analyzed. This centralization allows for significant scalability and resource sharing, making it ideal for large-scale data analysis, machine learning model training, and applications that do not require real-time processing. However, the reliance on continuous, high-speed internet connections can introduce latency and potential bottlenecks, especially in scenarios demanding immediate data processing.

In contrast, edge AI decentralizes processing by bringing computation closer to the data source, often on local devices such as smartphones, IoT sensors, or industrial machines. This architecture reduces the need to transfer large amounts of data to and from the cloud, enabling real-time analytics and decision-making.

By processing data locally, edge AI minimizes latency and ensures that applications can function effectively even with limited or intermittent internet connectivity. This is particularly advantageous in environments where immediate responses are critical, such as autonomous vehicles, remote healthcare monitoring, and industrial automation.

For technology decision-makers, understanding these architectural differences is crucial for deploying the right AI solutions. Cloud computing offers powerful, scalable resources ideal for comprehensive data analysis and long-term storage, while edge AI provides the speed, efficiency, and security needed for real-time, on-site processing. By leveraging the strengths of both approaches, businesses can create a more versatile and resilient AI ecosystem tailored to their specific needs.

The Cloud Computing Paradigm

Advantages of cloud-based AI

- Scalability and flexibility — Cloud-based AI offers unparalleled advantages in scalability and flexibility. Companies can start with minimal resources and expand their computational capacity as needed without investing in expensive hardware. The flexibility of cloud-based AI also means that companies can quickly adapt to changing market demands, deploy new AI models, and integrate emerging technologies with minimal disruption.

- Powerful computing resources — Another key advantage of cloud-based AI is access to powerful computing resources. Leading cloud providers such as Amazon Web Services (AWS) offer vast amounts of processing power, storage, and advanced AI tools that are otherwise prohibitively expensive for most organizations to maintain in-house. This access allows businesses to perform complex computations, run sophisticated machine learning algorithms, and manage large datasets with ease.

- Centralized data processing — Centralized data and analysis further enhance the value of cloud-based AI. By aggregating data in a centralized cloud environment, businesses can perform comprehensive analyses and derive insights that drive strategic decision-making.

Limitations of cloud-based AI

- Latency issues — Despite its many advantages, cloud AI has limitations that businesses must consider, and latency is one of the most significant. Since data must travel from the user's device to the cloud server for processing and then back again, this round-trip time can introduce delays, which can be problematic for applications requiring real-time responses. Latency remains a critical issue in cloud-based applications, particularly in scenarios where milliseconds can make a significant difference.

- Connectivity requirements — Another considerable limitation of cloud AI is its dependency on stable and high-speed internet connectivity. For remote or rural areas with unreliable internet access, relying on cloud AI can lead to inconsistent performance and service disruptions. This connectivity requirement can also hinder the deployment of AI solutions in regions where infrastructure is not robust, limiting the potential reach and effectiveness of cloud-based AI systems. As reported by the International Telecommunication Union (ITU), around 37% of the global population still lacks internet access, highlighting the connectivity gap that can affect cloud AI adoption.

- Data privacy and security concerns — Data privacy and security concerns are also paramount when considering cloud AI. Storing sensitive information in the cloud raises the risk of data breaches and unauthorized access. Despite stringent security measures implemented by cloud providers, the centralized nature of cloud storage can make it an attractive target for cyberattacks.

- Ongoing operational costs — While cloud AI offers scalability, the costs associated with continuous data transfer, storage, and computational power can add up over time. Cloud infrastructure continues to be one of the fastest growing business expenditures, growing by as much as 35% year over year.
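
The round trip described under latency issues can be put into rough numbers. The sketch below is only an illustration: the `LatencyBudget` class, the `meets_deadline` helper, and every timing value are assumptions chosen for the example, not measurements from any real deployment.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Illustrative per-request timings in milliseconds (all values assumed)."""
    uplink_ms: float     # device -> server transfer time
    inference_ms: float  # model execution time
    downlink_ms: float   # server -> device transfer time

    def total_ms(self) -> float:
        # End-to-end time is the sum of both network legs plus inference.
        return self.uplink_ms + self.inference_ms + self.downlink_ms


def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """Return True when the whole path finishes within the deadline."""
    return budget.total_ms() <= deadline_ms


# Hypothetical numbers: a fast cloud model behind a 40 ms each-way link,
# versus a slower on-device model with no network hop at all.
cloud = LatencyBudget(uplink_ms=40.0, inference_ms=5.0, downlink_ms=40.0)
edge = LatencyBudget(uplink_ms=0.0, inference_ms=30.0, downlink_ms=0.0)

print(cloud.total_ms(), meets_deadline(cloud, deadline_ms=50.0))
print(edge.total_ms(), meets_deadline(edge, deadline_ms=50.0))
```

With these assumed values, the cloud path misses a 50 ms deadline even though its model runs six times faster, because the network legs dominate the total.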

The Rise of Edge AI

Edge AI brings numerous advantages that address many of the limitations associated with cloud-based AI.

- Real-time processing and lower latency — By processing data locally on devices such as smartphones and IoT sensors, edge AI eliminates the latency issues inherent in cloud computing.

- Enhanced data privacy and security — The local processing minimizes the risk of data breaches and unauthorized access, providing a higher level of data security.

- Reduced bandwidth usage — There is less need to send large volumes of data to the cloud.

- Cost savings — The reduction in data transmission not only lowers operational costs but also improves the efficiency and sustainability of AI applications, particularly in scenarios with limited or expensive connectivity options.

By addressing the limitations of cloud computing and leveraging the unique advantages of local processing, edge AI is already playing a pivotal role in the next wave of technological innovation.

Limitations of edge AI

- Computational constraints — While edge AI offers numerous advantages, it's important to acknowledge its limitations, particularly computational constraints. Edge devices typically have far less processing power, memory, and storage than cloud servers. As a result, developers and product designers must carefully optimize their AI algorithms and models for edge deployment.

- Initial hardware investment — Deploying edge AI solutions involves equipping devices with advanced processing capabilities, sufficient memory, and specialized components like GPUs or TPUs to handle AI workloads.

- Challenges in maintaining and updating distributed systems — Edge AI requires updates and management to be performed across numerous devices in various locations.

- Data privacy and security — While generally improved by keeping data local, privacy and security can also present challenges in edge AI. Each edge device becomes a potential point of vulnerability, and securing every device against cyber threats can be daunting.
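
To make the computational constraints above concrete, here is a back-of-the-envelope check of whether a model's weights fit in a device's storage at different numeric precisions. Reducing precision (quantization) is not named in this article; it is used here only as one common example of the kind of optimization edge deployment may require. The parameter count and flash size are hypothetical.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Storage for the weights alone (ignores activations and runtime overhead)."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits(num_params: int, dtype: str, flash_bytes: int) -> bool:
    """Return True when the weights fit in the given amount of flash."""
    return weight_bytes(num_params, dtype) <= flash_bytes


# Hypothetical: a 1,000,000-parameter model on a device with 2 MiB of flash.
params = 1_000_000
flash = 2 * 1024 * 1024

for dtype in BYTES_PER_WEIGHT:
    print(dtype, weight_bytes(params, dtype), fits(params, dtype, flash))
```

Under these assumptions the 32-bit model needs about 4 MB and does not fit, while the 16-bit and 8-bit versions do. A real deployment would also need room for activations, the runtime, and application code.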

Edge AI advantage over cloud

While both edge AI and cloud computing offer significant benefits, edge AI presents unique advantages that are crucial for certain applications, particularly those requiring real-time data processing and immediate decision-making.

In environments where latency is critical, such as autonomous vehicles, industrial automation, and healthcare monitoring, the ability to process data locally at the edge drastically reduces response times. For tech decision-makers, this means enhanced performance and a competitive edge in markets where speed and precision are paramount.

Use Cases — Where Does Edge AI Shine?

Edge AI has found its niche in several industries, demonstrating superior performance in scenarios where real-time processing, data privacy, and reliability are crucial.

Industrial IoT and predictive maintenance

In manufacturing and industrial settings, edge AI is revolutionizing predictive maintenance. By processing data directly on machines or nearby edge devices, potential issues can be detected and addressed in real-time, minimizing downtime and improving overall equipment effectiveness (OEE). A study by Deloitte found that predictive maintenance can reduce maintenance planning time by 20%–50% and increase equipment uptime and availability by 10%–20%.
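
OEE is conventionally computed as availability times performance times quality, each expressed as a fraction between 0 and 1. The sketch below shows how an uptime improvement feeds through to OEE. The baseline figures are invented for illustration, and treating the 10% figure as a relative increase in availability is an assumption, not something the study is quoted as saying here.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall equipment effectiveness as the product of its three factors."""
    for name, value in (("availability", availability),
                        ("performance", performance),
                        ("quality", quality)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return availability * performance * quality


# Hypothetical baseline for a single production line.
baseline = oee(availability=0.80, performance=0.90, quality=0.95)

# Assumed scenario: availability rises by 10% in relative terms (0.80 -> 0.88).
improved = oee(availability=0.88, performance=0.90, quality=0.95)

print(round(baseline, 4), round(improved, 4))
```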

Healthcare and medical devices

Edge AI is making significant strides in healthcare, particularly in medical imaging and patient monitoring. For instance, researchers at Stanford University developed an edge AI system for detecting pneumonia from chest X-rays with accuracy comparable to radiologists.

Anomaly detection

Edge AI excels in anomaly detection across various sectors. In cybersecurity, for example, edge AI can detect and respond to threats in real-time without sending sensitive data to the cloud. Moreover, engineers can now train visual anomaly detection models, which are crucial in various applications, including industrial inspection, medical imaging, and logistics.
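
As a minimal illustration of on-device anomaly detection, the sketch below flags sensor readings that deviate sharply from a rolling window of recent values. It is a simple statistical baseline using only the Python standard library, not the trained visual anomaly detection models mentioned above. The class name, window size, threshold, and readings are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values far from the mean of a fixed-size window of recent readings."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold  # allowed distance in standard deviations

    def update(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        # Only judge once the window is full, so early readings set the baseline.
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # A perfectly flat window (sigma == 0) never flags anything.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Keep anomalies out of the window so they do not distort the baseline.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


# Hypothetical temperature readings with one spike at index 20.
readings = [20.0, 20.1, 19.9, 20.2, 19.8] * 4 + [35.0, 20.0]
detector = RollingAnomalyDetector(window=20, threshold=3.0)
flagged = [i for i, r in enumerate(readings) if detector.update(r)]
print(flagged)
```

Because all state lives in a small fixed-size window, nothing needs to leave the device for a reading to be judged.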

Explore the dynamic world of edge AI applications across industries in the 2024 State of Edge AI Report.

The Convergence of Edge and Cloud — A Hybrid Approach

The convergence of edge and cloud computing is leading to a powerful hybrid approach that allows companies to leverage the strengths of both paradigms. This synergy is creating new opportunities for innovation and efficiency across various industries.

Leveraging the strengths of both paradigms:

- Complementary Processing: Edge devices handle real-time, latency-sensitive tasks, while the cloud manages complex, resource-intensive computations. This division of labor optimizes overall system performance.

- Intelligent Data Management: Edge devices can preprocess and filter data, sending only relevant information to the cloud. This reduces bandwidth usage and cloud storage costs while maintaining comprehensive data analysis capabilities.

- Continuous Learning and Model Updates: While edge devices run inference locally, the cloud can aggregate data from multiple sources to train and improve AI models. Updated models can then be pushed to edge devices, creating a continuous improvement cycle.

- Scalability and Flexibility: The hybrid approach allows companies to scale their AI capabilities dynamically. They can add edge devices for local processing or leverage cloud resources for temporary computational needs.

- Enhanced Security and Compliance: Sensitive data can be processed locally at the edge, adhering to data privacy regulations, while less sensitive data can be analyzed in the cloud for broader insights.
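
The division of labor in the list above can be sketched in a few lines. In this hypothetical example, an edge node acts on every local prediction immediately, queues only low-confidence samples for upload to the cloud, and accepts a retrained model only when its version is newer. `EdgeNode`, its fields, and the confidence threshold are illustrative assumptions, not part of any particular product or API.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical edge device: decides locally, uploads only uncertain samples."""
    model_version: int = 1
    confidence_floor: float = 0.8
    upload_queue: list = field(default_factory=list)

    def handle(self, sample_id: str, label: str, confidence: float) -> str:
        """Act on the local prediction; queue low-confidence samples for the cloud."""
        if confidence < self.confidence_floor:
            self.upload_queue.append(sample_id)
        return label

    def apply_update(self, new_version: int) -> bool:
        """Accept a model pushed from the cloud only if it is newer."""
        if new_version > self.model_version:
            self.model_version = new_version
            return True
        return False


node = EdgeNode()
# (sample id, locally predicted label, confidence) for three made-up samples.
predictions = [("a", "ok", 0.97), ("b", "fault", 0.55), ("c", "ok", 0.91)]
labels = [node.handle(*p) for p in predictions]

print(labels, node.upload_queue)
print(node.apply_update(2), node.apply_update(2), node.model_version)
```

Only sample "b" leaves the device here, which is the bandwidth saving the intelligent data management point describes. The uploaded samples are the ones a cloud retraining job would most plausibly learn from.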

Future Trends in Edge AI

Advancements in edge AI hardware:

Edge GPUs: NVIDIA has developed AI-specific chips, such as its Jetson series, to run optimized models on edge devices. Production-ready devices from Advantech, Lexmark, and Seeed use these NVIDIA chips in designs with the right specifications to operate in industrial spaces.

Edge AI Accelerators: Devices from MemryX, Hailo, and Syntiant are AI coprocessors that run models in an accelerated manner, thereby freeing the host processor from computationally heavy tasks.

Neural Processing Units (NPUs): These are accelerated coprocessors integrated on the same silicon as the host processor. Arm’s Ethos IP can be added alongside Cortex-based IP for accelerated inferencing. Alif’s Ensemble, Himax’s WiseEye2, and Renesas’s RA8 all have Ethos IP integrated.

Microcontrollers and Microprocessors: As these devices become faster and more efficient, their ability to run small AI models grows without the need for dedicated onboard AI-accelerated hardware. Nordic, Infineon, Arduino, Espressif, Renesas, Silicon Labs, Sony, TI, NXP, ST, and Microchip all have devices with demonstrated AI capabilities.

AI model optimization for edge devices:

Researchers are developing techniques that use large AI models to help create edge AI models. The latest large language models (LLMs), like the newly released GPT-4o, are astonishing, with major implications for edge AI. New functionality in Edge Impulse, for example, allows users to harness GPT-4o's intelligence to automatically label visual data, resulting in an AI model 2,000,000x smaller that runs directly on device.

5G and its impact on edge computing:

Enhanced connectivity: 5G's high-speed, low-latency connectivity will enable more sophisticated edge AI applications, particularly in IoT and smart city scenarios. It allows for faster data transfer between edge devices and nearby edge data centers.

Improved real-time applications: 5G's ultra-low latency will enhance real-time edge AI applications like autonomous vehicles and augmented reality, allowing for faster decision-making and more immersive experiences.

Embrace the Edge for a Competitive Advantage

The choice between edge AI and cloud computing isn’t simply a technical decision — it's a strategic one that can significantly impact product performance, user experience, and ultimately, your bottom line.

It's still important to remember, however, that for some businesses, edge and cloud aren’t mutually exclusive. Many forward-thinking companies are adopting a hybrid approach, leveraging the strengths of both paradigms to create more robust, efficient, and responsive AI systems.