The shortest path to performance requires a new approach

Jonathan Ross, founder and CEO of Groq, delivered a keynote at the AI Hardware Summit on September 29, 2020, sharing insights on the industry, the market, and the unique Groq architecture behind the world’s first PetaOp chip.

Groq Keynote - 2020 AI Hardware Summit

Introduction

Groq is on a mission to build the computer for the next generation of high-performance machine learning. Founded by former Google engineer Jonathan Ross, the company has doubled in size since its inception and is on track to double its headcount again by the end of 2021. In late September, Ross presented at the online 2020 AI Hardware Summit. His keynote offered insights on the industry, the market, and the Groq architecture behind the world’s first PetaOp chip. We’ve pulled out some of the highlights from the presentation.

An answer to the end of Moore’s Law

In 1965, Gordon Moore, who would later co-found Intel, predicted that the number of transistors that could be packed onto a microchip would keep doubling at a regular pace, commonly quoted as every 18 to 24 months. The prediction held true for a time, but as Moore himself noted, “the nature of exponentials is that you push them out and eventually disaster happens.” Computing now finds itself on a precipice where cost, power, and efficiency compete for priority in delivering the computing ambitions of the next generation.

Rock’s Law, sometimes called Moore’s second law, states that the cost of a semiconductor fabrication plant doubles every four years. In line with this prediction, next-generation fabs are estimated to cost more than $20 billion.
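The tension between these two laws is easiest to see with a little arithmetic. The sketch below assumes idealized exponential doubling, with transistor counts doubling every two years and fab cost every four, over an arbitrary 20-year horizon; the function name `growth_factor` and the horizon are illustrative choices, not figures from the keynote.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity gets after `years`
    if it doubles every `doubling_period_years` (idealized exponential)."""
    return 2 ** (years / doubling_period_years)


HORIZON_YEARS = 20  # illustrative horizon, not a figure from the keynote

# Assumed doubling periods: ~2 years for transistor counts (Moore's law),
# 4 years for fab cost (Rock's law).
transistors = growth_factor(HORIZON_YEARS, 2)
fab_cost = growth_factor(HORIZON_YEARS, 4)

# Hypothetical scenario: transistor scaling slows to a 4-year doubling
# while fab costs keep their pace, so both curves grow at the same rate.
slowed_transistors = growth_factor(HORIZON_YEARS, 4)

print(f"transistors per chip: {transistors:.0f}x")
print(f"fab cost:             {fab_cost:.0f}x")
print(f"slowed transistors:   {slowed_transistors:.0f}x")
```

Under these assumptions, transistor counts outpace fab costs (1024x versus 32x) only while Moore's law holds; if transistor doubling slows to the four-year pace, the two curves grow in lockstep and each new generation of fabs delivers far less relative progress.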

Realizing what these two laws mean for the future of computing set Jonathan Ross, founder and CEO of Groq, on his current path.

Ross recounts that in 2012, while he was working at Google, Jeff Dean gave a presentation to the leadership team that contained just two slides. The first slide was the good news: machine learning finally works. The second slide was the bad news: it would require another 20 to 40 data centers to be able to afford it, and that was just for speech recognition. Following that presentation, Ross started the TPU (Tensor Processing Unit) project at Google.

Fast forward several years and Ross, now the head of Groq, unveils the world’s first and only PetaOp chip. The chip’s performance is equivalent to one quadrillion operations per second, or 1e15 ops/s. Groq's architecture is also capable of up to 250 trillion floating-point operations per second (FLOPS).

Architecture over speed

“The industry often focuses on faster speeds,” begins Ross. “That’s what so many chip makers are focused on today. Everyone in this field is trying to make a faster chip. That approach of throwing more transistors onto a die means cost increases with incremental performance improvements. Following a trend that is running out of steam isn't a win for customers who are planning for the future. What we've done is come up with something revolutionary. I call this deterministic or predictable computing. A radically simplified approach which is scalable for customers. This is what enabled us to create the first and only PetaOp processor.”

The shortest path to performance

“How did we get there?” Ross reflects at the start of his keynote address. “We got there by doing things differently. A good example of this, we built on a 14-nanometer process when others were building on a 7-nanometer process. We simply didn't need the bleeding edge lithography to produce the fastest chip. Instead of adding more transistors, our performance came from our architecture. Everyone here believes Moore's law will run out. We talk about it and no one is acting any differently than they did before.”

Ross asserts that the real question is not the end of Moore’s law but what happens if ML accelerator hardware doesn't get any better. The answer, Ross believes, is to design and deliver a new architecture that is not based on familiar FPGA, CPU, or GPU designs. “It’s something completely different,” says Ross. “We call it the TSP, or tensor streaming processor.”

Compute as power

“Compute is a utility like power,” states Ross. “The country that controls compute will be in an advantaged position.” The future of computing is likely to need a careful mix of input, research, and funding from private enterprise, government, and academia to ensure both its continued development and shared access. It isn’t only owning or controlling the technology that will give a nation an advantage, but also a deliberate direction and ambition around legislation, use, access, and dominance.

“The future isn't guaranteed,” says Ross, “but you do get the future that you stand up for.” For Ross, being deliberate about your approach is key to success, but he also understands that this needs to happen across the industry to benefit as many people as possible.

“At Groq we believe in the shortest path to performance,” continues Ross. “We have to stand up for the future we want by creating new trends with our approach. Trends like:

- Inference being different than training and being bigger than training.

- Batch Size 1

- Determinism and predictability, and

- Performance and software first, which to us means the complexity and intelligence of a task is managed in the software stack.

“Defining these trends originated with what we’re accomplishing at Groq but more importantly, these are going to enable customers' AI applications in production, that today are simply too costly.”

Hopes and Challenges for the Future of AI

Key investors and stakeholders in the AI field joined Ross in the presentation to share their hopes and challenges for the future. Each speaker described their priorities for the development of the technology and where their organization is directing resources. Speakers included Nicolas Savage, Managing Director of TDK Ventures, the corporate VC arm of TDK; Andrew Fursman, CEO of Canadian quantum computing company 1QBit; Teddy Gleeser, a partner at D1 Capital; and Igor Arsovski, CTO of the ASIC business unit at Marvell. Their full remarks can be viewed in the video excerpts below.

Predictability and reliability

“I’m personally excited about future advancements in bioengineering, finance, government applications, and especially autonomous vehicles, because they will save lives,” shared Ross. “My cousin was a passenger in a car that got into an accident. The driver of the other car had been drinking, and he slammed into the side of my cousin's car. This didn't have to happen, and if we had autonomous cars it wouldn't have happened. I want our chip in those cars because we need the level of predictability that I know our chip can bring. But there's something else that AI brings you: cars that make deliveries to you, auto-editing that makes you a better photographer, auto-sorting for your inbox, and speech recognition that keeps an EMT's hands free so they can focus on their patient in an emergency.”

“One major challenge in today's AI systems is that AI accelerators typically push performance while at the same time trying to drop the voltage as low as possible to achieve really high energy efficiency. This TOPS-per-watt metric is critical. Furthermore, these accelerators typically get integrated into data center and automotive applications that have critical uptime and reliability requirements. I know reliability is not as sexy as the TOPS-per-watt metric, and because of that it has taken a back seat in many AI discussions and conferences, but reliability is really critical to delivering useful systems.”

Time is a Limited Resource

“Silicon Valley has gotten a lot of criticism for focusing on convenience, but I’m not going to apologize for that. Whether it's a safety issue or convenience, time is a limited resource, and we only get so much of it. What are the obstacles keeping us from that new reality? You’re probably thinking about speed, but that's only part of the equation. Performance is a big challenge, but reliability, predictability, and certainty in the real world are key. Chip makers like us need to deliver that performance not just in the lab but also in the real world,” details Ross.

“Training cost isn't an issue. Train the model once and use it as much as you want. With inference, each response has to be real-time, and it costs money and energy; that's a scaling issue. Instead of scaling with the number of ML researchers, you're now scaling with the number of users and the number of queries. Inference at scale is harder than training.”
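Ross's contrast between a one-time training bill and inference costs that grow with every query can be sketched as a toy cost model. The `CostModel` class and every dollar figure below are hypothetical, chosen only to show how the inference share of total cost grows with query volume.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Toy cost model (hypothetical numbers): training is paid once,
    inference is paid on every query."""
    training_cost: float   # one-time cost to train the model
    cost_per_query: float  # marginal cost of serving one inference

    def total(self, queries: int) -> float:
        """Training cost plus the inference cost of `queries` queries."""
        return self.training_cost + self.cost_per_query * queries

    def inference_share(self, queries: int) -> float:
        """Fraction of total cost spent on inference after `queries` queries."""
        return self.cost_per_query * queries / self.total(queries)


# Hypothetical figures: $1M to train, $0.001 per query served.
model = CostModel(training_cost=1_000_000.0, cost_per_query=0.001)

for queries in (10**6, 10**9, 10**12):
    print(f"{queries:>17,} queries -> inference is "
          f"{model.inference_share(queries):.1%} of total cost")
```

With these made-up numbers, inference is a rounding error at a million queries, half the bill at a billion, and almost all of it at a trillion, which is the shape of the scaling problem Ross describes.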

“When I was at Google, roughly 80% of the ML models that were trained ended up being abandoned because they were too expensive to run in production. AI success hinges on speed for inference as well as training, but for the workloads of the future, total cost of ownership depends on scalability. Just like when the team and I created the TPU at Google, which saved billions of dollars, we as an industry have just begun tackling these challenges.”

The future isn't guaranteed

One of the guiding mantras at Groq is that the ‘future isn’t guaranteed’: the future is unknown, yet it can be directed. The development of technologies such as AI and computing must be deliberate and understood within a wider socio-economic context. Ross takes very seriously the responsibility that comes with the power he, his company, and others like it have to change the future. It's a way of thinking that is embedded in the company's methods and products.

“At Google, the team and I created the TPU for existing workloads that we could make better as well as for new and emerging workloads,” says Ross. “To plan for the future means understanding those workloads. Those were yesterday's challenges, and we have new challenges today, like power efficiency. To Groq, those obstacles are the path, and that's where we're going.”

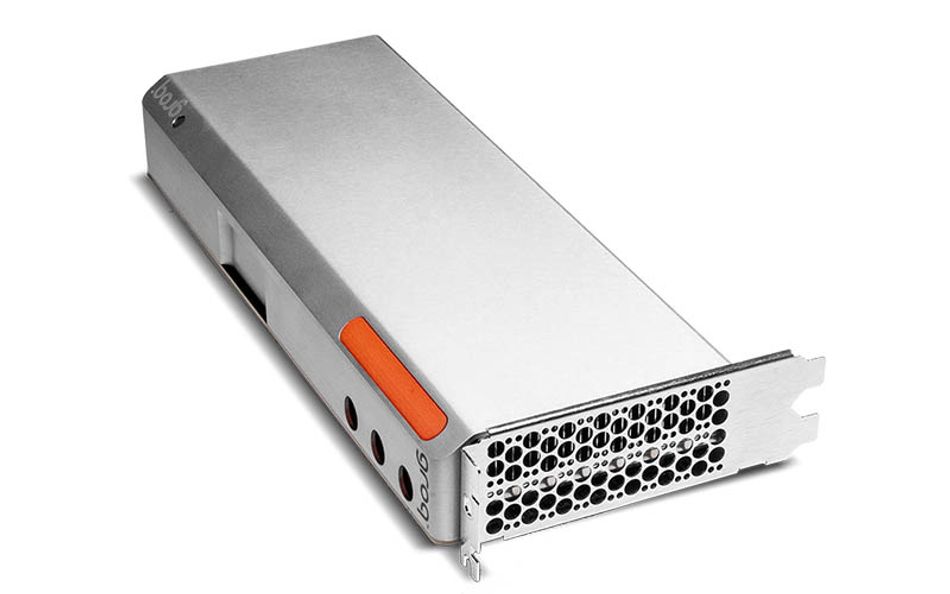

“Today, we're shipping to our customers both as individual PCIe cards and as systems with eight cards each, and there's even more on the roadmap to come. I know 2020 has been a crazy year. Together we're pushing through and making great strides. Groq is going to grow its headcount by 4X by the end of next year. We’re not going to lose who we are, because it's scrappy maneuverability that lets us innovate faster and more affordably than everyone else. That’s how we built the first PetaOp chip, and that's how we're going to build what's next.”

Conclusion

Computing is at a tipping point: major players are making decisions about the direction they will pursue, and those decisions will have huge impacts on the future of technological innovation. Groq is one of the companies that understands it has the potential to completely change the direction of computing and massively influence the potential of AI. With that potential comes responsibility, something Ross and his team have demonstrated they are ready to shoulder. Interested in learning more about Groq? Head to www.groq.com to learn more about the future of computing and review their career opportunities.

About the sponsor: Groq

Building the computer for the next generation of high-performance machine learning. Groq hardware is designed to be both high performance and highly responsive. Groq’s new simplified architecture drives incredible performance at batch size 1. Whether you have one image or a million, Groq hardware responds faster.