TinyML for Good: where machine learning meets edge computing

This article covers the basics of TinyML, a technology that improves the privacy, energy efficiency, affordability and reliability of devices that use artificial intelligence. Join TinyML for Good, an online event and showcase of ideas that explores the topic in more detail.

Introduction

Artificial Intelligence (AI) broadly refers to systems that perform tasks normally requiring human intelligence, such as learning from experience. There are several approaches to building such systems, but Machine Learning (ML) is the one that attracts the most attention these days. It involves training a model, often a neural network, on large amounts of data so that it can recognise patterns in a situation and make decisions.

Traditionally, an AI-powered system acquires analog signals with the help of a transducer. The signal can be acoustic, visual, chemical, thermal or any other physical quantity the transducer is designed to sense. Next, a microprocessor conditions the data and sends it to cloud servers over a wireless link. The cloud servers, built from many interconnected CPUs and GPUs, process the data and send the results back.

While ML training and inference have traditionally happened on powerful cloud servers, research over the last couple of years has made it possible to perform part of the ML workload at the edge, right where the data is generated.

In this article, we discuss the fundamentals, key advantages and applications of TinyML. The aim is to give readers a basic idea of the TinyML project development flow and of how the technology could revolutionise the way electronic systems process data.

But what led us to try fitting machine learning algorithms that traditionally run in the cloud into edge devices, bringing together two previously isolated fields? Let's find out.

What is TinyML good for?

By taking machine learning to the edge, TinyML aims to improve products on four fronts:

- Privacy: Consider smart home devices that continuously listen to conversations in your home while they wait for the hot word that activates them. Few people would want to share private conversations at home or in the office with third parties. Running machine learning on the device means the audio never has to leave it, which greatly reduces the risk of such a privacy incident.

- Power: Wireless data transmission is typically one of the most energy-hungry operations in Internet of Things (IoT) devices. Processing data on the device and transmitting only the results, or nothing at all, cuts a large share of the energy spent in the communication layer.

- Cost: Transmitting less data, or none at all, reduces or removes the need for cloud servers and, in some cases, for an on-device radio, which can bring down the overall price of the device.

- Reliability: Not sending data anywhere significantly reduces response time. Processing at the edge also means that no data can be lost in transmission, and the device keeps working during server maintenance or outages.
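To make the power and cost argument concrete, the sketch below compares how many bytes a device would have to transmit if it streamed raw audio to the cloud with how many it would send if it transmitted only an on-device classification result. The 16 kHz sampling rate, 16-bit samples and one-byte label are illustrative assumptions, not figures from a specific product. Radio energy grows with the amount of data sent (to a first approximation, ignoring per-packet overhead), so the ratio hints at how much communication energy on-device inference can avoid.

```python
# Illustrative assumptions (not from a specific device):
# 16 kHz, 16-bit mono audio, and a 1-byte class label per inference result.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2  # 16-bit PCM
LABEL_BYTES = 1       # hypothetical: one byte encodes the detected class


def raw_audio_bytes(seconds: float) -> int:
    """Bytes needed to stream raw audio for the given duration."""
    return int(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * seconds)


def reduction_factor(seconds: float, results_per_second: float = 1.0) -> float:
    """How many times fewer bytes are sent when only labels leave the device."""
    label_bytes = LABEL_BYTES * results_per_second * seconds
    return raw_audio_bytes(seconds) / label_bytes


if __name__ == "__main__":
    print(raw_audio_bytes(1.0))   # 32000 bytes of raw audio per second
    print(reduction_factor(1.0))  # 32000.0
```

Under these assumptions, an hour of raw audio amounts to 115.2 MB, whereas one label per second amounts to 3.6 KB.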

Embedded systems, especially those that interact with people, are expected to respond to our actions instantly, and any delay directly affects the user experience. Many embedded systems are also battery-powered, so they must function in an extremely energy-constrained environment. On top of that, they have very limited memory while often being connected to several sensors that produce data continuously.

So how can we design a system that processes so much data in such a constrained environment? The answer is TinyML.

What is TinyML?

TinyML stands for Tiny Machine Learning, a field at the intersection of embedded systems and AI that focuses on running ML models on ultra-low-power microcontrollers. The idea is to move the processing to where the data is generated.

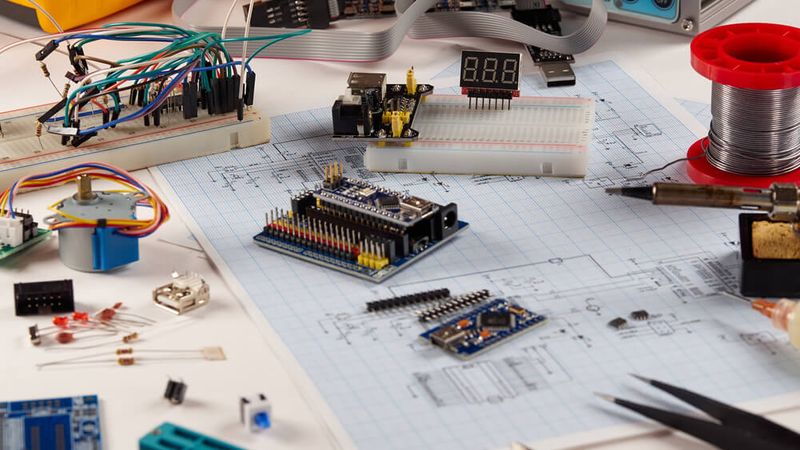

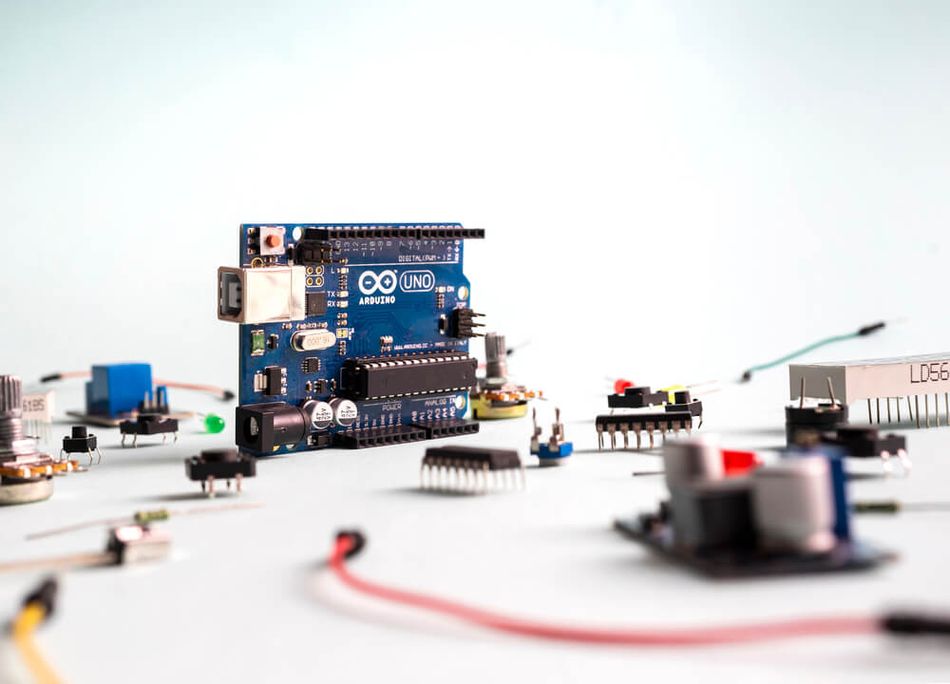

There was a time when microcontrollers relied on proprietary programming languages and tools, which made them intimidating for beginners and hobbyists. Things have changed a lot since then. The Arduino platform has made microcontrollers more accessible than ever, and the introduction of Arm's Cortex-M series is considered another milestone because it made 32-bit microcontrollers mainstream.

Collaborations between companies such as Google, Arduino, Arm, Qualcomm and Edge Impulse are making it easier every day to get started with TinyML devices.

Overview of the development flow of TinyML projects

The first stage of development involves designing and training a machine learning model in a framework such as TensorFlow. The trained model is then converted with TensorFlow Lite, Google's lightweight version of the framework for mobile and embedded devices. For microcontrollers, TensorFlow Lite for Microcontrollers provides an interpreter whose core runtime fits in about 16 KB on an Arm Cortex-M3 and needs no operating system, standard C/C++ libraries or dynamic memory allocation.

The converted model is then combined with the application code in an Integrated Development Environment (IDE) such as the Arduino IDE, Keil MDK or Mbed Studio. The toolchain compiles everything into a single binary file, which is then deployed to the target hardware. Many development boards, such as the Arduino Nano 33 BLE, SparkFun Edge, STM32F746 Discovery kit, ESP32-DevKitC and Sony Spresense, can be used to develop TinyML projects.
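Microcontrollers used for TinyML often have no file system, so a common way to combine the model with the application code is to embed the converted `.tflite` file in the source as a byte array. The TensorFlow Lite for Microcontrollers documentation does this with the Unix `xxd -i` tool. The Python sketch below produces comparable C++ source from a model's raw bytes. The array name `g_model`, the `alignas(16)` alignment and the `model.tflite` file name are illustrative assumptions, not requirements of any particular toolchain.

```python
def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw model bytes as C++ source, similar to the output of `xxd -i`.

    `name` and the alignas(16) attribute are illustrative choices; adjust them
    to whatever your application code expects.
    """
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        # Each byte becomes a two-digit hex literal; trailing commas are legal in C/C++.
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"alignas(16) const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


if __name__ == "__main__":
    # Stand-in bytes; in practice you might use open("model.tflite", "rb").read()
    fake_model = bytes(range(20))
    print(to_c_array(fake_model))
```

The generated file can be added to the IDE project like any other source file, so the model ends up inside the same binary as the application.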

Don't forget to check out the webinar video listed in the references section [4] to find out more about getting started with TinyML projects.

Applications of TinyML

TinyML can be applied in many of the places where conventional ML is used, and in some areas there is practically no alternative to it. Real-time applications, applications that handle highly personal data and applications without a continuous power source are especially well suited to TinyML. Here are some of the most common applications of TinyML in domestic and industrial environments:

Computer Vision: TinyML can be used for face recognition, text recognition and various other camera-based applications. It is possible to build TinyML devices that respond when you look at them or wave at them. Appliances that detect a person's presence, smartphones that use face unlock and other devices that make decisions based on visual cues can all be built with TinyML.

Audio recognition & Natural Language Processing: Small neural networks, only a few kilobytes in size, are trained to detect wake words. These models are deployed on a dedicated DSP chip that processes audio continuously, listening for the wake word.

The chip requires just a few milliwatts (mW) of power, far less than a typical smartphone processor uses. And since the data doesn't leave the device, privacy concerns are largely addressed. Google Assistant's "OK Google" wake word detection remains one of the best-known TinyML applications.
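A wake-word detector typically runs a small classifier on short, overlapping frames of audio and fires only when the score stays high across several consecutive frames, which suppresses one-off false triggers. The sketch below shows only this post-processing step. The per-frame scores would come from the on-device neural network, and the window length and threshold are hypothetical values chosen for illustration; this is not the algorithm used by Google Assistant or any other specific product.

```python
from collections import deque
from typing import Iterable, List


def detect_wake_word(scores: Iterable[float], window: int = 3,
                     threshold: float = 0.8) -> List[int]:
    """Return frame indices where the moving-average score crosses `threshold`.

    `scores` are per-frame wake-word probabilities from a (hypothetical)
    on-device classifier. A detection fires once when the average over the
    last `window` frames rises above the threshold, and re-arms only after
    the average drops back below it.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` scores
    detections = []
    active = False
    for i, score in enumerate(scores):
        recent.append(score)
        avg = sum(recent) / len(recent)
        if len(recent) == window and avg >= threshold:
            if not active:
                detections.append(i)
                active = True
        else:
            active = False
    return detections


if __name__ == "__main__":
    frame_scores = [0.1, 0.9, 0.2, 0.85, 0.9, 0.95, 0.9, 0.3, 0.1]
    print(detect_wake_word(frame_scores))  # [5]
```

In the example, the isolated spike at frame 1 is ignored, while the sustained run of high scores triggers a single detection at frame 5.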

Time-Frequency Signal analysis: ML, in general, performs well at finding patterns in messy data. By training models on suitable datasets, it is possible to monitor the condition of electrical machines.

Motors and drives can be fitted with TinyML devices that monitor vibrations and other electromechanical parameters to detect anomalies in operation, enabling predictive maintenance.
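As a simplified illustration of condition monitoring, the sketch below computes the RMS (root-mean-square) amplitude of each window of vibration samples and flags windows that deviate from a baseline recorded on a healthy machine by more than `k` standard deviations. This z-score rule is a deliberately simple statistical stand-in for the trained ML models described above, and it looks only at the time domain; the window size, the value of `k` and the sample data are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def windows(samples: Sequence[float], size: int) -> List[Sequence[float]]:
    """Split samples into non-overlapping windows, dropping any remainder."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


class VibrationMonitor:
    """Flags windows whose RMS deviates from a healthy baseline (z-score rule)."""

    def __init__(self, healthy_samples: Sequence[float], size: int = 4, k: float = 3.0):
        baseline = [rms(w) for w in windows(healthy_samples, size)]
        self.k = k
        self.mu = mean(baseline)
        self.sigma = stdev(baseline)  # requires at least two baseline windows

    def is_anomalous(self, window: Sequence[float]) -> bool:
        return abs(rms(window) - self.mu) > self.k * self.sigma


if __name__ == "__main__":
    healthy = [1.0, -1.0] * 2 + [1.1, -1.1] * 2 + [0.9, -0.9] * 2
    monitor = VibrationMonitor(healthy, size=4, k=3.0)
    print(monitor.is_anomalous([3.0, -3.0, 3.0, -3.0]))      # True
    print(monitor.is_anomalous([1.05, -1.05, 1.05, -1.05]))  # False
```

A real deployment would replace the RMS feature and threshold with a model trained on labelled or healthy-only data, but the overall loop of windowing the signal, extracting features and comparing against learned normal behaviour stays the same.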

TinyML can potentially be applied to almost every sector in one way or another. Energy, retail, financial services, travel, tourism, hospitality, manufacturing, healthcare and life sciences are just some of the fields where the technology could bring major improvements.

TinyML for Good

TinyML for Good is an online conference showcasing inspirational thinkers who have used TinyML to develop innovative solutions and products in education, healthcare and the environment.

Visit the TinyML for Good event page to learn more.

Alternatively, head straight to the registration page to enrol in the event. Dive into the world of TinyML and learn about the possibilities of the technology on 17th November 2021. No prior technical experience is required.

About the TinyML Foundation

Through workshops, webinars, expert talks, research conferences, competitions and a host of other events, the TinyML Foundation brings students, researchers and industry professionals together to share their experience with the technology. The highly collaborative nature of this community is what allows the field to advance so rapidly.

Conclusion

Research on TinyML devices and algorithms is being driven by the growing range of applications for the technology. It would not be surprising to see TinyML in most portable electronic devices within the next few years. It is exciting to watch the field change in unique and unexpected ways.

The introductory article familiarises readers with the concept of TinyML.

The first article explores some possibilities of TinyML applications in sustainable technologies.

The second article is about TinyML applications aiding healthcare and medical research.

The third and final article will cover TinyML applications in pedagogy and education.

References

[1] M. Shafique, T. Theocharides, V. J. Reddy and B. Murmann, "TinyML: Current Progress, Research Challenges, and Future Roadmap," 2021 58th ACM/IEEE Design Automation Conference (DAC), 2021, pp. 1303-1306, DOI: 10.1109/DAC18074.2021.9586232.

[2] C. R. Banbury et al., "Benchmarking TinyML Systems: Challenges and Direction," 2020.

[3] TensorFlow Lite team, "On-device training in TensorFlow Lite," TensorFlow Blog, 2021. Available: https://blog.tensorflow.org/2021/11/on-device-training-in-tensorflow-lite.html

[4] Hackster.io, "Get Started with TinyML Webinar," YouTube, 2021. Available: https://www.youtube.com/watch?v=7OCIm_gh55s