Why Can't Robots Just Get a Grip?

Why a robot’s reach exceeds its grasp

For the last few weeks we’ve been tiptoeing around one of the biggest bottlenecks in robotics: picking up and placing random objects. Last week, we discussed Moravec’s Paradox: how tasks that seem very easy for humans can be incredibly challenging for robots. Nothing epitomises this more than universal grasping:

Universal grasping is the ability to pick up and manipulate a previously unknown object the first time it's seen, without any mistakes.

Grasping is one of the last steps required to bring robotics into parity with humans. Today, the majority of interest in grasping is driven by e-commerce: an industry where almost every stage has been automated except picking and packing. This stage is currently very challenging due to the sheer variety of products. For example, Amazon has around 12 million unique product variants. But it’s not just e-commerce: Dyson is interested in universal picking for home robotics, and Google has a spinout company which is targeting pretty much every industry imaginable.

Universal picking is such an important topic that we’re going to spend the next few weeks reviewing its challenges and opportunities:

- This week we’re setting the scene and understanding the scale of the challenge.

- Next week we’ll explore the state of the art in robot picking and suggest where the field is heading.

How should universal grasping work?

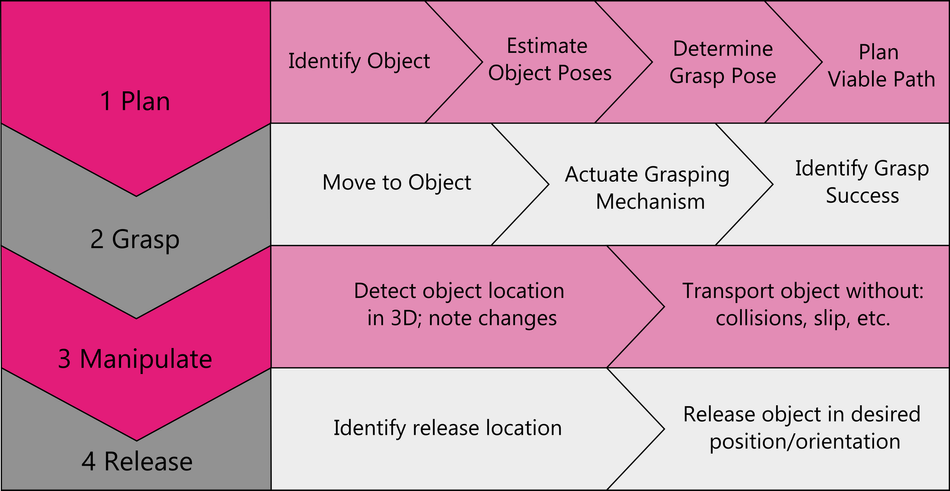

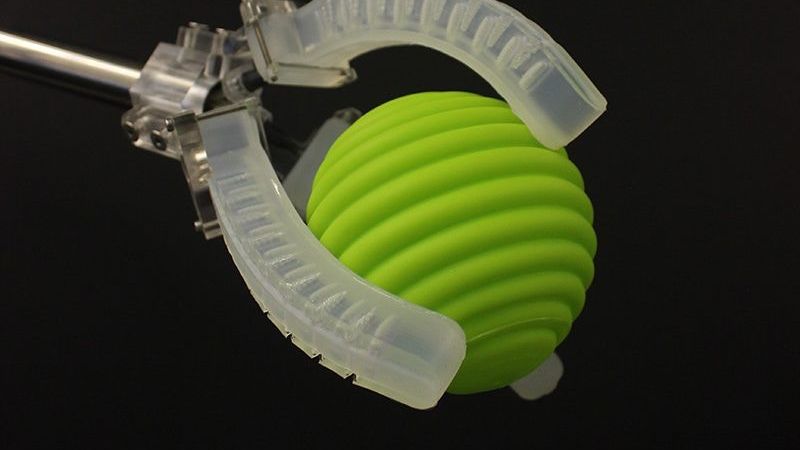

We’re talking about robot systems, so it’s no surprise that universal grasping is tackled by the usual suspects: a robot, grippers, sensors and a control system. We’ll discuss the dominant technologies for each next week. If we abstract away from specific technological solutions, we can say there are four stages to grasping: planning the route, grasping the object, manipulating it through space and successfully placing it in the desired location. Although simple in principle, in practice an autonomous system must perform numerous complex tasks in each stage. We’ve broken down the main ones in this chart: The 4 Stages of robot grasping (according to Remix).

Why it often doesn't work

It may seem like we're overcomplicating things, but in industry (or any dynamic environment) major issues can emerge in all four stages. Generally, the problems originate from limitations in perception and control. Outside of a lab, systems struggle to capture and process the level of detail required in real time. There is always a difference between the controller's view of reality and reality itself. Grasping turns out to be very sensitive to such differences, so even when they are small (the best-case scenario) they compound into a large impact on success rates.
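To get a feel for how small errors compound, here is a minimal sketch. It assumes, purely for illustration, that each of the four stages succeeds independently with some probability. The 95% figure and the `overall_success` helper are hypothetical and not taken from any real system.

```python
from math import prod


def overall_success(stage_rates):
    """Probability that every stage succeeds, assuming the stages are independent."""
    return prod(stage_rates)


# Hypothetical per-stage success rates: plan, grasp, manipulate, place.
stage_rates = [0.95, 0.95, 0.95, 0.95]
print(f"End-to-end success: {overall_success(stage_rates):.1%}")
```

Under these made-up numbers, four stages that each look reliable on their own give an end-to-end success rate of roughly 81%.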

To match a human, a system needs to hit cycle times of under 2 seconds for the pick and place (PnP) of a never-been-seen-before (NBSB? OK, we'll stop) object. Currently, this isn't enough time to accurately:

- Identify an object's properties, e.g. centre of mass, friction, hardness, stiffness, number of moving components etc.

- Model an object’s behaviour due to its properties: will it slide on the surface it's resting on if touched, will it deform when gripped, can it support its own weight etc.

- Map the environment and keep track of it as the object moves in real time

As a result, in Stage 1 perception systems are still pretty poor at distinguishing new objects from backgrounds and other objects, and at automatically determining the best type of gripper to use, the best pick location for that gripper and how to move into position without collisions.

If Stage 1 goes well, Stage 2 is usually pretty smooth. Any mistakes though, and all bets are off. Issues can crop up if the gripper collides with other objects or accidentally unbalances the object it's trying to pick.

The first two stages are where researchers have focused the majority of their efforts, and — as we’ll see over the next few weeks — where there has been the greatest success to date. Unfortunately, the problems really start in the last two stages.

In Stage 3, systems can struggle to transport an object without it colliding with the robot itself or with other parts of the environment. Any change in the object’s position within the gripper can throw the system off, and while solutions for avoiding the entanglement of objects exist, no general solution has been proposed to unhook complex object geometries.

Finally, in Stage 4, simply dropping objects, or even throwing them, is straightforward. However, accurate placement is tricky on a flat surface, let alone into a container. The friction between a part and the grippers can change how an item falls, as can its centre of mass. Avoiding collisions and not crushing delicate objects in real time has not received sufficient attention to date, and so remains a large barrier to industrial deployment of intelligent pick and place for NBSB objects.
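The four stages discussed above can be sketched as a simple sequential pipeline in which a failure at any stage ends the attempt. This is only an illustrative skeleton: the `Stage` enum, the `run_pick_and_place` function and the boolean handlers are hypothetical names, not part of any real robot API.

```python
from enum import Enum
from typing import Callable, Dict, Optional


class Stage(Enum):
    PLAN = 1        # plan the route to the object
    GRASP = 2       # grasp the object
    MANIPULATE = 3  # move it through space
    PLACE = 4       # place it in the desired location


def run_pick_and_place(handlers: Dict[Stage, Callable[[], bool]]) -> Optional[Stage]:
    """Run the stages in order; return the first stage that fails, or None on success."""
    for stage in Stage:  # Enum members iterate in definition order
        if not handlers[stage]():
            return stage
    return None


# Hypothetical handlers: every stage succeeds except transport.
handlers = {stage: (lambda: True) for stage in Stage}
handlers[Stage.MANIPULATE] = lambda: False
failed_at = run_pick_and_place(handlers)
print(failed_at)
```

In this toy run the attempt stops at the manipulation stage and the placing stage is never reached, which mirrors how a slip in transit makes everything planned for placement irrelevant.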

How humans solve the issue

To put the challenge into context, let's look at how the human body is equipped to deal with it. Try to reframe all of these “features” as if you had to design an electromechanical system with the same capabilities.

Sensing

Human fingertips are probably the most sensitive skin areas in the animal world; they can feel the difference between a smooth surface and one with a pattern embedded just 13 nm deep. They’re able to differentiate between a wide range of textures, materials, temperatures, and pressures. They can sense pressure as light as a feather while being robust enough to handle loads of 20 kg, high temperatures, abrasives etc. Our sense of touch is so responsive that it can bypass the brain, with reactions to touch stimuli occurring in just 15 milliseconds.

Cameras are actually very similar to eyes (which makes sense - we copied them) but the resolution of the human eye is 576 megapixels in 3D - hard to beat. Even if standard machine vision cameras reach this level, it's our brains that really set human vision apart. And speaking of brains…

Control

To understand our brains it's useful to contrast them with computers:

Speed - The brain can perform at most about a thousand basic operations per second… about 10 million times slower than an average computer.

Precision - A computer can represent numbers with much higher precision. For example, a 32-bit number has a precision of 1 in 2^32, or about 4.3 billion. In contrast, evidence suggests that most brains have a precision of 1 in 100 at best, which is millions of times worse than a computer. We also have a short-term working memory of around seven digits - Ok Computer, you win this one.
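As a quick sanity check, the speed and precision comparisons above can be worked through in a few lines. The constants below are simply the figures quoted in the text; the variable names are illustrative.

```python
# Figures quoted in the text above.
BRAIN_OPS_PER_SEC = 1_000      # "about a thousand basic operations per second"
SPEED_RATIO = 10_000_000       # a computer is "about 10 million times" faster
BRAIN_PRECISION_LEVELS = 100   # "1 in 100 at best"

# Operations per second implied for an average computer.
computer_ops_per_sec = BRAIN_OPS_PER_SEC * SPEED_RATIO

# Number of distinct values a 32-bit number can represent.
levels_32bit = 2 ** 32

# How many times finer a 32-bit number is than "1 in 100".
precision_ratio = levels_32bit / BRAIN_PRECISION_LEVELS

print(f"Implied computer speed: {computer_ops_per_sec:,} ops/s")
print(f"32-bit levels: {levels_32bit:,}")
print(f"Precision ratio: {precision_ratio:,.0f}x")
```

The precision ratio comes out in the tens of millions, which is consistent with the "millions of times worse" claim.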

That said, a professional tennis player’s brain can follow the trajectory of a tennis ball at 160 miles per hour, move to the perfect spot on the court, position their arm and return the ball to the opponent, all within a few hundred milliseconds. And it can do this using ten times less power than a personal computer.

How does the brain achieve this while being so computationally limited? Computers perform tasks largely in a sequential flow. High precision is required at each step, as errors accumulate and amplify in successive steps. The brain also uses serial steps for information processing. In the tennis return example, information flows from the eye to the brain and then to the spinal cord to control muscles in the body. What’s key though is that the brain can undertake parallel processing at a scale that is hard to comprehend. First, each hemisphere can process independently. Secondly, each neuron collects inputs from — and sends output to — nearly 1000 other neurons. In contrast, a transistor has only three nodes for input and output. This parallel information processing capability means that humans are incredibly good at course correction: we can take information ‘on-the-fly’ and calculate how to adjust our movement strategy much, much faster than computational simulations.

It allows us to undertake all of the calculations and planning of incredibly complex tasks — like picking up a cup — without even realising.

The Manipulator

Our brains may be impressive, but we knew that already. The complexity of the human ‘end effector’ is what’s really stunning. On each hand, our wrist, palm, and fingers consist of 27 bones, 27 joints, 34 muscles, and over 100 ligaments and tendons. This achieves 27 degrees of freedom (DOF): 4 in each of the four fingers (3 for extension and flexion and 1 for abduction and adduction), 5 in the more complicated thumb, and the remaining 6 for the rotation and translation of the wrist. This flexibility gives us 33 highly optimised grip types, each suited to different situations and objects based on the mass, size and shape, rigidity, and force and precision required.
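The degree-of-freedom tally above can be checked with a little arithmetic. The sketch below assumes the "fingers" in that breakdown are the four non-thumb digits; the constant names are illustrative.

```python
# Degrees of freedom (DOF) as broken down in the text.
FINGER_DOF = 3 + 1   # 3 for extension/flexion + 1 for abduction/adduction
NUM_FINGERS = 4      # assumption: index, middle, ring, little (thumb counted separately)
THUMB_DOF = 5
WRIST_DOF = 6        # rotation and translation of the wrist

hand_dof = NUM_FINGERS * FINGER_DOF + THUMB_DOF + WRIST_DOF
print(hand_dof)  # 27
```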

This sets the bar pretty high and may make the universal grasping problem feel insurmountable. Don’t let this put you off though — real progress is starting to be made.