NPU vs GPU: Understanding the Key Differences and Use Cases

GPUs excel at scalable parallel processing for graphics and AI training, while NPUs focus on low-latency AI inference on edge devices, enhancing privacy by processing data locally. Together, they complement each other by efficiently handling different stages of AI workloads.

Modern computing demands specialized processors to handle complex tasks and algorithms efficiently. Graphics Processing Units (GPUs) and Neural Processing Units (NPUs) are designed to address these needs, each excelling in different domains. GPUs leverage parallel processing for high-performance tasks like graphics rendering, scientific simulations, and AI model training. NPUs, on the other hand, are purpose-built for AI inference, enabling low-latency and power-efficient execution of neural network tasks directly on devices. These specialized chips enhance performance and efficiency by offloading repetitive and resource-intensive operations from general-purpose Central Processing Units (CPUs). This article aims to equip you with a detailed understanding of these technologies by exploring their evolution, architectures, capabilities, real-world applications, and challenges, helping you choose the right processor for your needs.

Evolution of GPUs and NPUs

Computers, at their core, are math machines designed to perform calculations on numbers. Initially, all computational tasks, regardless of complexity or type, were handled by CPUs. However, as computing evolved, it became clear that not every operation required the flexibility and precision of a general-purpose processor. Repetitive, specialized tasks, such as graphics rendering or audio and video encoding, could become bottlenecks on traditional processors and could be handled more efficiently by dedicated hardware accelerators. This realization gave rise to specialized chips like GPUs for graphics and NPUs for AI, among others, each tailored to excel in a specific computational domain by offloading and optimizing tasks from the CPU.

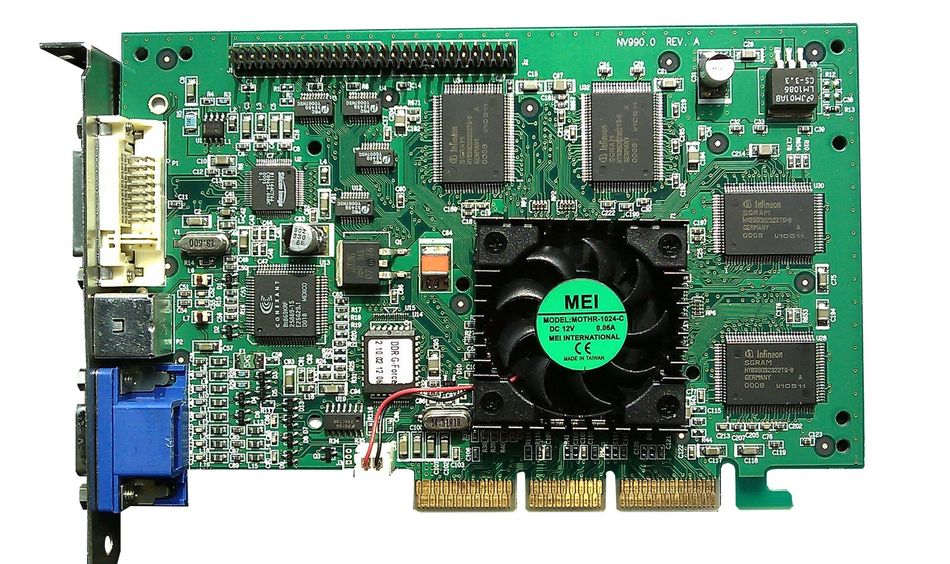

NPUs and GPUs represent two distinct advancements in specialized hardware for computational tasks. GPUs emerged in the late 1990s, initially designed for rendering graphics in games and other visual applications. Their ability to execute thousands of operations in parallel made them ideal for image processing, 3D modeling, and rendering pipelines.

By the mid-2000s, graphics cards began to be used beyond graphics, thanks to frameworks like CUDA, enabling their adoption for general-purpose parallel computing tasks. This marked their shift into areas like scientific simulations, financial modeling, and neural network training, where massive computational throughput is critical.


NPUs, developed much later, address the growing demand for dedicated hardware optimized for neural network computations. Unlike GPUs, which are general-purpose parallel processors, NPUs are tailored for AI workloads, with specialized circuits for matrix multiplication, tensor operations, and low-latency execution. Early adoption of NPUs occurred in edge devices such as smartphones, enabling features like natural language processing and object detection with lower power consumption.

Today, GPUs dominate large-scale AI model training and high-performance computing, while NPUs are increasingly used for real-time inference in constrained environments.

What Are NPUs and GPUs?

NPUs and GPUs are specialized processors designed to handle specific computational tasks far more efficiently than traditional general-purpose CPUs.

GPUs are built for parallel processing, excelling at tasks that involve handling multiple operations simultaneously. This capability originally made them useful in rendering graphics and performing image and video processing. NPUs, in contrast, are designed with a specific focus on efficiently executing operations commonly found in machine learning tasks and deep learning workloads. Their architecture is optimized to handle the mathematical computations required for neural networks.


Both processors play critical roles in modern computing, addressing challenges where general-purpose hardware falls short, but their unique design philosophies cater to different types of workloads.

Architecture and Design: A Technical Comparison

NPUs and GPUs have distinct architectures tailored to their specialized functions. NPUs are optimized for artificial intelligence tasks, with designs focused on executing neural network computations efficiently.

GPUs are designed around a large number of parallel processing cores, often numbering in the thousands. These cores are grouped into clusters, with shared access to high-bandwidth memory and a unified scheduler to manage tasks. This architecture is highly efficient for tasks that require concurrent processing, such as rendering graphics, running simulations, or training large-scale machine learning models. GPUs typically feature:

Streaming Multiprocessors (SMs): Core clusters that handle multiple threads simultaneously.

High Memory Bandwidth: To accommodate the large datasets required for graphical and computational tasks.

Flexible Instruction Sets: Allowing GPUs to handle a wide variety of workloads beyond graphics, such as AI model training or scientific computations.
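To make the idea of thousands of threads more concrete, here is a minimal Python sketch of how a CUDA-style kernel typically assigns one array element to each thread, using the standard `blockIdx.x * blockDim.x + threadIdx.x` indexing. The SAXPY operation (`a * x + y`), the function names, and the block size of 4 are illustrative assumptions, not taken from any particular GPU. A real GPU distributes the blocks across its SMs and runs the threads concurrently, whereas this sketch simply loops over them.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by ONE thread: compute a single output element."""
    # Global element index, computed the way a CUDA kernel does:
    # blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may be only partially used
        out[i] = a * x[i] + y[i]


def launch(a, x, y, block_dim=4):
    """Simulate a kernel launch over enough blocks to cover every element."""
    n = len(x)
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    out = [0.0] * n
    # On a GPU, blocks are scheduled onto SMs and their threads run in parallel;
    # this sketch just iterates over every (block, thread) pair sequentially.
    for b in range(grid_dim):
        for t in range(block_dim):
            saxpy_kernel(b, block_dim, t, a, x, y, out)
    return out, grid_dim


out, blocks = launch(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0] * 5)
print(out, blocks)
```

Because each thread touches only its own element, no thread has to wait on another, which is exactly the kind of independent work that maps well onto a GPU's many cores.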

NPUs, in contrast, are built specifically to accelerate neural network operations like matrix multiplications and convolutions. Their design focuses on minimizing latency and maximizing efficiency for tasks commonly encountered in AI inference. Key architectural features of NPUs include:

Matrix or Tensor Engines: Dedicated hardware units, often large arrays of multiply-accumulate (MAC) units such as the systolic arrays in Google's TPUs, optimized for the matrix and tensor operations that form the backbone of neural network computations.

Low-Latency Pipelines: Optimized for real-time inference tasks in power-constrained environments, such as smartphones or IoT devices.

On-Chip Memory: Integrated memory closer to the processing units, reducing data transfer delays and improving efficiency.

Customizable Layers: Support for AI-specific optimizations, such as quantization (running models in low-precision formats like INT8) and pruning (removing redundant weights), to further accelerate performance.
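Quantization is a large part of why NPUs are efficient: instead of 32-bit floating point, many run inference on 8-bit integers and accumulate the products into wider integers. The Python sketch below shows one common scheme, symmetric per-tensor INT8 quantization, applied to a small matrix multiplication. The helper names and example matrices are hypothetical, and real toolchains vary (per-channel scales, zero points, different rounding), so treat this as an illustration of the arithmetic rather than a description of how any specific NPU works.

```python
def choose_scale(values):
    """Pick a scale so the largest magnitude maps to 127 (symmetric INT8)."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127 if max_abs else 1.0


def quantize_matrix(matrix):
    """Quantize a float matrix to INT8 values sharing one (per-tensor) scale."""
    scale = choose_scale([v for row in matrix for v in row])
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in matrix]
    return q, scale


def int8_matmul(a, b):
    """Multiply float matrices a (n x k) and b (k x m) using INT8 operands."""
    qa, scale_a = quantize_matrix(a)
    qb, scale_b = quantize_matrix(b)
    n, k, m = len(qa), len(qb), len(qb[0])
    result = []
    for i in range(n):
        row = []
        for j in range(m):
            acc = 0  # hardware would commonly keep this in a 32-bit integer accumulator
            for p in range(k):
                acc += qa[i][p] * qb[p][j]  # integer multiply-accumulate
            row.append(acc * scale_a * scale_b)  # dequantize once per output value
        result.append(row)
    return result


a = [[0.5, -1.0], [0.25, 2.0]]
b = [[1.0, 0.0], [0.5, -0.5]]
print(int8_matmul(a, b))  # close to the exact float result [[0.0, 0.5], [1.25, -1.0]]
```

The small deviations from the exact result are the accuracy cost of quantization; in exchange, the inner loop uses only cheap integer multiply-accumulates, which is the operation NPU hardware is built to perform in bulk.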


Performance Metrics That Matter

Performance metrics such as Floating Point Operations Per Second (FLOPS), latency, and power efficiency are critical for evaluating the capabilities of NPUs and GPUs, because they determine how suitable a processor is for a given scenario. For instance, FLOPS are crucial for compute-heavy tasks like AI model training, while latency is decisive in real-time applications such as autonomous driving. Power efficiency becomes a primary concern in edge devices like smartphones and IoT gadgets, where battery life is important.

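FLOPS: Measures computational throughput, especially relevant for AI and graphics workloads. Higher FLOPS indicate the ability to handle complex calculations quickly. NPU throughput is usually quoted in TOPS (trillions of operations per second), often for low-precision integer arithmetic such as INT8.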

Latency: Represents the time taken to complete a task, crucial for real-time applications like autonomous driving or video processing. NPUs often have lower latency for specific AI tasks.

Power Efficiency: Indicates the energy consumption per operation, an important metric for edge and mobile devices. NPUs often excel here, making them suitable for devices with limited power resources.

As these devices are expected to operate for extended periods without frequent recharging, energy-efficient processors ensure consistent performance while conserving power. Additionally, the growing reliance on portable and wearable technologies has amplified the need for processors that balance high computational demands with minimal energy usage. These metrics help define the ideal processor for a given workload and environment.
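These metrics are related by simple arithmetic. The Python sketch below counts the operations in a matrix multiplication (2 * M * N * K, counting each multiply and each add), estimates compute time from a peak throughput and an assumed utilization, and derives energy per run and operations per watt. Both accelerators and all of their numbers are hypothetical, chosen only to show the trade-off; the estimate also ignores memory transfers and assumes each chip draws its rated power for the whole run.

```python
def matmul_ops(m, n, k):
    """Operations in an (m x k) @ (k x n) matmul: m*n outputs, each needing k multiplies and k adds."""
    return 2 * m * n * k


def compute_time_s(ops, peak_ops_per_s, utilization):
    """Idealized compute time; real kernels rarely sustain peak throughput."""
    return ops / (peak_ops_per_s * utilization)


def energy_j(power_w, time_s):
    """Energy in joules, assuming a constant power draw for the whole run."""
    return power_w * time_s


# Hypothetical accelerators (illustrative numbers, not real products).
devices = {
    "big_gpu": {"peak_ops_per_s": 100e12, "power_w": 300.0},
    "small_npu": {"peak_ops_per_s": 10e12, "power_w": 5.0},
}

ops = matmul_ops(1024, 1024, 1024)
for name, d in devices.items():
    t = compute_time_s(ops, d["peak_ops_per_s"], utilization=0.5)
    e = energy_j(d["power_w"], t)
    ops_per_watt = d["peak_ops_per_s"] / d["power_w"]
    print(f"{name}: {t * 1e6:.1f} us, {e * 1e3:.2f} mJ, {ops_per_watt:.2e} ops/W")
```

With these made-up figures, the smaller chip takes ten times longer per matrix multiplication but uses one sixth of the energy, which is the latency versus power-efficiency trade-off in miniature.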

Real-World Applications: Where They Shine

The deployment of NPUs and GPUs spans diverse industries, with each processor excelling in specific domains based on its architectural strengths.

Key Use Cases for NPUs

NPUs are integral in accelerating AI-centric apps, particularly in image recognition and language processing. Their specialized architecture enables efficient handling of complex neural network computations across diverse fields.

Consumer Electronics and Edge Devices

- Smartphones and Tablets: NPUs power features like facial recognition, voice assistants, real-time language translation, real-time photo processing, battery optimizations, fitness tracking, and even privacy features.

- Internet of Things (IoT) Devices: NPUs enable real-time AI in smart home devices, security cameras (object detection), and smart assistants (natural language understanding).

NPUs enhance user privacy by processing sensitive data directly on the device, reducing the need to send it to cloud servers. Tasks like voice recognition, facial recognition, and spam filtering can be performed locally, reducing the risk of data exposure and giving users greater control over their personal information.

Healthcare:

- Medical imaging: NPUs accelerate image analysis in CT scans, MRIs, and X-rays, enabling faster and more accurate diagnostics.

- AI-Assisted Diagnosis: Real-time analysis of large datasets for predictive modeling in personalized medicine, improving patient outcomes.

Automotive:

- Autonomous Vehicles: NPUs handle object detection, path planning, and decision-making in real time for autonomous driving.

- Driver Assistance Systems: Applications like adaptive cruise control, lane departure warning, and collision avoidance leverage NPUs for low-latency decision-making.

Robotics:

- Industrial Robots: NPUs facilitate machine vision and decision-making for tasks like sorting, assembly, and navigation in dynamic environments.

- Drones: Real-time AI inference allows drones to detect objects, map environments, and avoid obstacles.

Real-Time AI Solutions:

- Retail: Automated checkout systems use NPUs for recognizing products and processing transactions.

- Finance: NPUs support fraud detection systems by analyzing vast datasets in real time.

These examples showcase the versatility of NPUs across diverse industries where real-time AI solutions are critical for innovation.

Key Use Cases for GPUs

GPUs have evolved beyond their traditional roles to power a wide range of applications, thanks to their massive parallel processing capabilities. Their impact spans gaming, video rendering, scientific research, and more.

Gaming and Graphics Rendering:

- Video Games: GPUs power high-resolution gaming experiences, enabling realistic visuals, ray tracing, and smooth frame rates.

- Visual Effects and Animation: GPUs are integral to creating 3D animations, rendering special effects, and editing high-resolution video.

AI and Machine Learning:

- Model Training: GPUs are the industry standard for training deep learning models. Their ability to perform massive parallel computations makes them indispensable in AI research.

- Scientific Research: GPUs accelerate simulations in areas like climate modeling, particle physics, and genome analysis, where large-scale parallelism is required.

Data Centers and Cloud Computing:

- AI Workloads: GPUs run tasks like natural language processing (NLP), recommendation systems, and image recognition in cloud environments.

- Big Data Analytics: GPUs are used to process and analyze massive datasets in real time.

Professional Workstations:

- Engineering and Design: GPUs support CAD (Computer-Aided Design) tools for 3D modeling, product design, and architectural rendering.

- Video Production: GPUs accelerate video editing workflows, enabling smooth playback and real-time rendering of effects.

Cryptocurrency Mining:

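- GPUs have been widely used for cryptocurrency mining because proof-of-work algorithms consist of repetitive, independent hash computations that parallelize well. They have been used to verify blockchain transactions and mint new coins, although Bitcoin mining has long since shifted to dedicated ASICs, and Ethereum's 2022 move to proof-of-stake ended GPU mining on that network.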
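The reason mining suits GPUs is that proof-of-work is a brute-force search in which every candidate nonce can be checked independently. The Python sketch below is a deliberately simplified version: it uses a single SHA-256 and a "leading hex zeros" target, whereas Bitcoin, for example, applies double SHA-256 to a structured block header and compares the result against a numeric target. The function names, difficulty, and range split are hypothetical, and the ranges are searched one after another here rather than in parallel.

```python
import hashlib


def pow_hash(data: bytes, nonce: int) -> str:
    """Hash the block data together with a candidate nonce."""
    return hashlib.sha256(data + nonce.to_bytes(8, "little")).hexdigest()


def search(data: bytes, difficulty: int, start: int, stop: int):
    """Return the first nonce in [start, stop) whose hash has `difficulty` leading hex zeros."""
    target = "0" * difficulty
    for nonce in range(start, stop):
        if pow_hash(data, nonce).startswith(target):
            return nonce
    return None


def mine(data: bytes, difficulty: int = 3, total: int = 1 << 20, workers: int = 8):
    """Split the nonce space into independent chunks, as a GPU would across its threads."""
    chunk = total // workers
    for w in range(workers):  # on a GPU, these chunks would be searched concurrently
        nonce = search(data, difficulty, w * chunk, (w + 1) * chunk)
        if nonce is not None:
            return nonce
    return None


nonce = mine(b"example block")
print(nonce, pow_hash(b"example block", nonce))
```

Raising `difficulty` by one multiplies the expected number of hashes by 16, which is why miners need hardware that can evaluate enormous numbers of hashes per second.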

Key Players in the NPU and GPU Market

The NPU and GPU market is shaped by a few dominant players, each driving innovation to address distinct computational needs.

GPU Market Leaders

NVIDIA is the undisputed leader in GPU technology, renowned for its groundbreaking CUDA platform and hardware such as its consumer GeForce cards and its data center GPUs (formerly branded Tesla). The company has a market cap of over $3.15 trillion[5] (at the time of writing this article), and its GPUs are the backbone of industries ranging from gaming to AI model training and scientific research. NVIDIA’s innovations, like Tensor Cores and real-time ray tracing, make it a critical player in both graphics and AI.

AMD is another significant competitor, offering Radeon GPUs that excel in gaming, Virtual Reality (VR), and content creation. With its ROCm platform, AMD is making strides in high-performance computing (HPC) and deep learning, providing an open-source alternative to NVIDIA’s ecosystem. Meanwhile, Intel has entered the discrete GPU market with its Arc series and Xe architecture, focusing on bridging CPU and GPU workloads, especially in HPC and AI.

NPU Market Innovators

NPUs, being a more recent innovation, have a different set of leaders. Google’s Tensor Processing Units (TPUs) were among the first NPUs designed for large-scale AI workloads, particularly in data centers. They power Google’s cloud services and AI models, offering seamless integration with TensorFlow.


Apple, on the other hand, leads in on-device AI with its Neural Engine, a key part of its A-series and M-series chips, enabling features like Face ID, Siri, and computational photography.

Qualcomm and MediaTek dominate the mobile NPU market. Qualcomm integrates NPUs into its Snapdragon processors, enhancing real-time AI features like voice assistants and augmented reality. MediaTek focuses on cost-effective AI enhancements in its Dimensity series, targeting mid-range and flagship devices. Huawei has also positioned itself as a major player with its Ascend and Kirin NPUs. The Ascend NPUs target enterprise applications and AI training, while the Kirin processors are optimized for smartphones and edge devices.

Emerging Players

Tesla’s custom-designed Dojo processors are specialized NPUs for training AI models used in its autonomous driving systems. Arm’s Ethos NPUs are making an impact in embedded AI and IoT, while Alibaba’s Hanguang 800 focuses on cloud-based AI inference.

Choosing Between NPUs and GPUs

The choice between NPUs and GPUs depends on the specific requirements of the application. Each has its strengths, making them suitable for different scenarios. Several critical trade-offs must be considered, including power efficiency, latency, overall cost, scalability, and compatibility.

- Power Efficiency: NPUs are designed with power efficiency as a core feature, which is why they became popular first on mobile platforms. NPUs handle AI inference tasks with minimal energy consumption, ensuring long battery life in devices like smartphones and IoT gadgets. GPUs, while more powerful, consume significantly more energy in general due to their high computational throughput. This makes GPUs better suited for workstations, data centers, etc. where power constraints are less critical.

- Latency: For real-time AI inference, NPUs can deliver lower latency than GPUs on the models they are optimized for. Their architecture is designed to process AI tasks such as object detection or speech recognition with minimal delay, which is why NPUs are commonly used in applications like autonomous driving and robotics, where fast decision-making is crucial. GPUs, though versatile, may introduce higher latency, especially in scenarios requiring frequent data movement between memory and compute units.

- Cost: NPUs are often integrated into SoCs (System-on-Chip) in consumer devices, making them a cost-effective choice for edge AI tasks. GPUs, particularly high-performance models, can be expensive due to their versatility and computational power. For enterprises, the cost of GPUs is often justified by their ability to handle a broad range of workloads, but for specialized AI tasks, NPUs can provide a more economical solution.

- Scalability: GPUs excel in scalability, particularly in cloud and data center environments. Multiple GPUs can be used together to handle large-scale workloads, such as AI model training or complex simulations. NPUs, while efficient, are typically designed for single-device deployments and may not scale as easily for large, distributed workloads.

- Software Ecosystem: GPUs benefit from a mature software ecosystem, with frameworks like NVIDIA CUDA, TensorFlow, and PyTorch offering robust support for a wide range of applications. NPUs, on the other hand, often require specialized software and toolchains, such as TensorFlow Lite or vendor-specific SDKs. While this provides optimized performance for AI inference, it can limit flexibility and compatibility compared to GPUs.
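Because these trade-offs depend heavily on the specific model and device, they are usually settled by benchmarking on the target hardware. The Python sketch below shows a generic latency harness: it runs a few warm-up iterations, times each call with `time.perf_counter`, and reports median (p50) and tail (p99) latency, since real-time systems care about worst-case delays and not just averages. `fake_inference` is a hypothetical stand-in; in practice you would wrap a call into whatever runtime drives your GPU or NPU.

```python
import statistics
import time


def measure_latency_ms(fn, warmup=10, iters=200):
    """Return (p50, p99) latency of fn() in milliseconds."""
    for _ in range(warmup):  # warm-up runs absorb one-time costs such as caching
        fn()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 98 is p99
    cuts = statistics.quantiles(samples, n=100)
    return cuts[49], cuts[98]


def fake_inference():
    """Hypothetical placeholder for a real model call."""
    return sum(i * i for i in range(10_000))


p50, p99 = measure_latency_ms(fake_inference)
print(f"p50 = {p50:.3f} ms, p99 = {p99:.3f} ms")
```

For asynchronous GPU APIs, make sure `fn` waits for the device to finish (for example, `torch.cuda.synchronize()` in PyTorch); otherwise the timer only measures how long it takes to enqueue the work.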

While NPUs are well-suited for tasks like voice recognition, image enhancement, and real-time translation, generative AI presents a more demanding challenge. Applications that create new media, such as AI-generated stories or artwork, require significantly larger models and more computational power than the NPUs in current smartphones can handle efficiently. For instance, advanced generative AI features like Magic Editor in Google’s Pixel phones often rely on cloud servers to deliver results, as these workloads exceed the on-device capabilities of today’s NPUs.


However, as NPU technology advances, manufacturers are pushing to enable more generative AI functions directly on devices, balancing performance, power efficiency, and latency. This shift could pave the way for features like real-time AI-driven content creation or advanced personalization without relying heavily on the cloud, offering enhanced privacy and faster response times.
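A back-of-the-envelope calculation shows why model size is the main obstacle to on-device generative AI. Weight storage alone is roughly the parameter count times the bits per parameter divided by eight; the Python sketch below applies that formula to hypothetical 1-billion- and 7-billion-parameter models at 16-, 8-, and 4-bit precision. It counts only weights (not activations, caches, or runtime overhead) and uses decimal gigabytes, so a real footprint would be larger.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in decimal gigabytes (weights only)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model sizes, chosen for illustration.
for params in (1e9, 7e9):
    sizes = {bits: weight_memory_gb(params, bits) for bits in (16, 8, 4)}
    summary = ", ".join(f"{bits}-bit {gb:.1f} GB" for bits, gb in sizes.items())
    print(f"{params / 1e9:.0f}B params: {summary}")
```

At 16-bit precision, the hypothetical 7B model needs about 14 GB for its weights alone, more than the total memory of many phones, while 4-bit quantization brings that down to about 3.5 GB. This is one reason quantization is central to running generative models on NPUs.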

| Aspect | GPU | NPU |
|---|---|---|
| Primary Purpose | Parallel processing for graphics and AI training | Optimized for AI inference tasks |
| Workload Focus | Large-scale AI model training, graphics rendering, scientific simulations | AI inference, real-time processing on edge devices |
| Power Efficiency | High power consumption, suited for gaming PCs, workstations, and data centers | Highly energy-efficient, ideal for mobile and IoT devices |
| Latency | Higher latency compared to NPUs | Low latency, ideal for real-time tasks |
| Scalability | Highly scalable, especially in data centers | Limited scalability, designed for single-device deployments |
| Software Ecosystem | Mature ecosystem with CUDA, TensorFlow, PyTorch | Specialized toolchains like TensorFlow Lite, vendor-specific SDKs |
| Real-World Applications | Gaming, AI training, data centers, professional workstations | Smartphones, IoT, autonomous systems, healthcare |
| Cost | Higher cost due to versatility and computational power | Cost-effective for specialized AI tasks in edge devices |

Emerging Trends in Processor Technologies

The evolution of NPUs and GPUs is shaped by the increasing demand for specialized computing to handle complex workloads efficiently. As technology advances, several key trends have emerged:

- Hybrid Processors: Systems that combine NPU and GPU functionality are being developed to optimize both AI workloads and graphics processing. For example, hybrid processors are increasingly being used in industries like automotive for autonomous driving systems, where real-time AI processing and advanced graphical displays are both essential. In the gaming industry, these processors are enabling immersive VR environments that require seamless integration of AI and graphics. Similarly, in scientific research, hybrid processors support complex simulations by balancing AI-driven data analysis and visualization tasks effectively.

- On-Device AI Expansion: NPUs are enabling more AI tasks directly on devices, from real-time image enhancement to personalized voice assistants, reducing reliance on cloud computing. This not only improves latency but also enhances user privacy, making on-device AI a significant focus area for mobile and PC manufacturers.

- Energy Efficiency Innovations: With sustainability becoming a priority, both NPUs and GPUs are adopting energy-efficient designs. NPUs continue to push boundaries in low-power inference, while GPUs are incorporating power-saving technologies for large-scale computing, especially in AI model training.

- Generative AI Integration: As generative AI matures, GPUs remain central to large-scale model training. However, NPUs are gradually being adapted to handle smaller generative tasks, like image editing and real-time content personalization, locally on devices.

- Customizable Architectures: Both NPUs and GPUs are evolving to offer more flexibility. GPUs are integrating specialized cores for AI workloads (e.g., NVIDIA Tensor Cores), while NPUs are being designed with configurable layers to support diverse neural network architectures.

Conclusion

NPUs and GPUs have distinct strengths that cater to different computing needs. GPUs excel at scalable, parallel workloads like AI training and graphics rendering, while NPUs specialize in efficient, low-latency AI inference for edge devices, prioritizing privacy and energy efficiency. Together, they drive innovation across industries, from data centers to smartphones. The future lies in hybrid processors and advanced edge solutions, blending scalability with on-device intelligence. As AI applications grow, these technologies will continue to shape the landscape of efficient and specialized computing.

Frequently Asked Questions

- What is the primary difference between an NPU and a GPU?

NPUs are specially designed for AI inference tasks, offering low power consumption and low latency.

GPUs are versatile and designed for general-purpose parallel processing, particularly graphics rendering, AI training, and gaming.

- Can NPUs replace GPUs?

While NPUs are highly efficient for specific AI applications, they cannot replace GPUs because of their limited scope. GPUs offer broader compatibility and are essential for tasks like graphics rendering and training large-scale machine learning models.

- Why are NPUs important for smartphones?

NPUs enable AI features like facial recognition, voice assistants, real-time translation, and photo enhancements directly on the device, improving speed, reducing cloud dependency, and enhancing user privacy.

- How do NPUs enhance privacy?

NPUs process sensitive AI tasks, such as speech recognition or facial data analysis, locally on the device, reducing the need to send data to cloud servers and minimizing the risk of exposure.

- Can both NPUs and GPUs be used together?

Yes. Many modern devices, including smartphones and laptops, contain both an NPU and a GPU, and AI workflows often split the work between the two kinds of processor. For example, GPUs handle AI model training in data centers or on high-performance laptops, while NPUs run inference tasks efficiently on edge devices.

References

[1] Doyle S. AI 101: GPU vs TPU vs NPU [Internet]. Backblaze; 2023 Aug 2. Available from: https://www.backblaze.com/blog/ai-101-gpu-vs-tpu-vs-npu/

[2] IBM. NPU vs GPU: What's the Difference? [Internet]. IBM. Available from: https://www.ibm.com/think/topics/npu-vs-gpu

[3] Fortune Business Insights. Neural Processor Market Size, Share & COVID-19 Impact Analysis [Internet]. Fortune Business Insights. Available from: https://www.fortunebusinessinsights.com/neural-processor-market-108102

[4] Chockalingam A. Microsoft and NVIDIA supercharge AI development on RTX AI PCs [Internet]. NVIDIA Blog; 2024 Nov 19. Available from: https://blogs.nvidia.com/blog/ai-decoded-microsoft-ignite-rtx/

[5] Macrotrends. NVIDIA - Market Cap [Internet]. Macrotrends. Available from: https://www.macrotrends.net/stocks/charts/NVDA/nvidia/market-cap
