Third Annual tinyML EMEA Innovation Forum: Looking deeply at real-world ML applications.

From sleep monitoring wearables to face detection models, tinyML is making a big impact on the way we can gather and apply data.

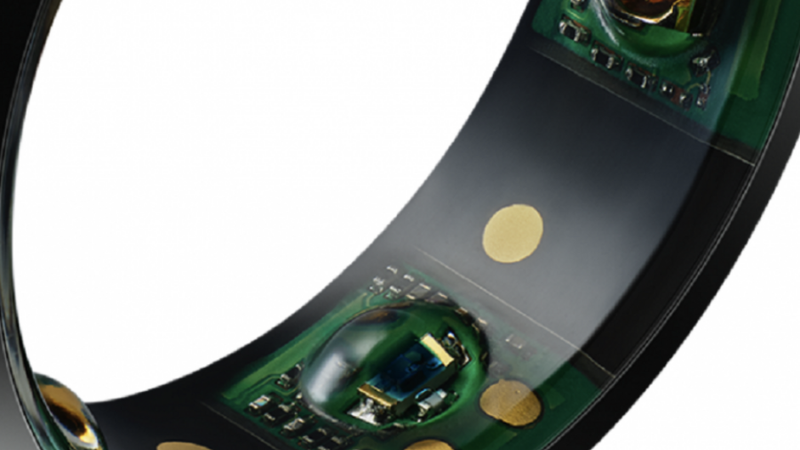

Image credit: Oura Ring

As tinyML technologies and ecosystems gain momentum and maturity, more applications are being developed and deployed across different verticals. The “tinyML in the Real World” track and the tinyML/edgeAI product exhibition at the recent tinyML EMEA Innovation Forum provided a snapshot of how tinyML is changing our world through smart spaces (homes, cities) and health and industrial applications.

The tinyML Movement, which started in 2019, has grown into an international ecosystem that brings together various researchers, markets, and time zones. While initial activities were focused on developing key building blocks and capabilities and awareness in the tech sector, the “center of gravity” is currently shifting towards building end-to-end systems and solutions. As a result, the recent three-day event aimed to foster further collaboration and partnerships within the tinyML community and beyond, and to accelerate market adoption and growth.

The forum invited participants to engage with a variety of speakers, grouped into topic tracks. Of particular interest was the “tinyML in the Real World” track and the accompanying exhibition, which featured the latest advancements in embedded machine learning and their real-world applications. We highlight some of the inspiring presentations below.

Monitoring Vital Signs Using Embedded AI

One particularly exciting talk was by Lina Wei from 7 Sensing Software. Lina presented “Monitoring of Vital Signs using Embedded AI in wearable devices.” The focus was on using photoplethysmography (PPG) signals for respiratory rate monitoring and employing a tinyML-based algorithm to handle complex, real-world PPG signals. The performance achieved by this algorithm was comparable to medical-grade devices.

Vital signs such as respiratory rate, heart rate, and blood pressure are measurements of the body’s basic functions. Monitoring them allows for the assessment of well-being and early detection of potential health issues. In elderly care homes and hospitals, contactless, remote monitoring can improve patient care and comfort.

The project uses photoplethysmogram (PPG) signals, which are optical measurements of blood volume changes in the microvascular bed of tissue. These signals are captured using a sensor from AMS OSRAM, which can measure synchronized PPG and ECG signals separately or simultaneously.

The deep learning-based algorithm used to extract information from the PPG signals was trained on real-world data acquired by the sensor. The algorithm was designed to overcome the difficulties caused by data complexity and achieved a performance comparable to that of medical-grade devices. The deep learning model was converted and optimized to be compatible with low-end microcontrollers.

The algorithm was trained using data from 40 subjects of different genders, ages, and skin colours. To augment the training data volume and improve robustness, various innovative data augmentation techniques were used, such as adding noise, flipping signals, scaling, and magnitude warping.
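The four augmentations named above can be sketched in a few lines. This is a minimal illustration on a synthetic sine wave, not the speaker's implementation: the function names, the parameter values, the reading of "flipping" as amplitude inversion around the mean, and the linear (rather than spline) interpolation in the magnitude warp are all assumptions.

```python
import math
import random


def add_noise(signal, sigma=0.02, rng=random):
    """Add zero-mean Gaussian noise to every sample."""
    return [x + rng.gauss(0.0, sigma) for x in signal]


def flip(signal):
    """Mirror the signal around its mean (assumed meaning of 'flipping')."""
    mean = sum(signal) / len(signal)
    return [2.0 * mean - x for x in signal]


def scale(signal, factor):
    """Multiply the whole signal by one constant factor."""
    return [x * factor for x in signal]


def magnitude_warp(signal, knots=4, sigma=0.2, rng=random):
    """Multiply the signal by a slowly varying random gain curve near 1.0.

    Gains are drawn at evenly spaced knots and linearly interpolated;
    this is a simplification, other variants use a cubic spline.
    """
    gains = [rng.gauss(1.0, sigma) for _ in range(knots)]
    n = len(signal)
    out = []
    for i, x in enumerate(signal):
        pos = i / (n - 1) * (knots - 1)   # sample position in knot units
        lo = min(int(pos), knots - 2)     # index of the knot to the left
        frac = pos - lo
        gain = gains[lo] * (1.0 - frac) + gains[lo + 1] * frac
        out.append(x * gain)
    return out


# Synthetic stand-in for a PPG window: 4 s of a 1.2 Hz sine sampled at 50 Hz.
rng = random.Random(0)
fs = 50
ppg = [math.sin(2 * math.pi * 1.2 * t / fs) for t in range(4 * fs)]

augmented = [
    add_noise(ppg, rng=rng),
    flip(ppg),
    scale(ppg, rng.uniform(0.8, 1.2)),
    magnitude_warp(ppg, rng=rng),
]
print(len(augmented), "augmented copies of", len(ppg), "samples each")
```

Each transform keeps the window length unchanged, so the augmented copies can be fed to the same model input as the original recording.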

The deep learning model was integrated into a Cortex-M4 and executed within a custom AI framework. The model has only 35,000 parameters, so it can be updated simply by replacing the weights. The solution achieved a performance comparable to medical devices used in hospitals.

The entire solution, including the deep learning model, was designed to be compatible with low-end microcontrollers. It uses only 8.5% of the computing capacity of a Cortex-M4, making it power-efficient and suitable for low-power devices like wearables.
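To put the quoted figures in perspective, the short calculation below estimates how much storage 35,000 parameters would need and how much processor time remains at an 8.5% load. The storage formats are assumptions for illustration; the talk summary does not say whether the weights were stored as 32-bit floats or quantized to 8 bits.

```python
PARAMS = 35_000    # parameter count quoted in the talk
CPU_LOAD = 0.085   # share of Cortex-M4 compute quoted in the talk

# Assumed storage formats; the source does not state which one was used.
BYTES_PER_PARAM = {"float32": 4, "int8": 1}


def weight_bytes(n_params, dtype):
    """Size of the weight blob for a given (assumed) storage format."""
    return n_params * BYTES_PER_PARAM[dtype]


def cpu_headroom(load_fraction):
    """Fraction of compute left for everything else on the device."""
    return 1.0 - load_fraction


for dtype in BYTES_PER_PARAM:
    size = weight_bytes(PARAMS, dtype)
    print(f"{dtype}: {size} bytes ({size / 1024:.1f} KiB)")
print(f"CPU headroom: {cpu_headroom(CPU_LOAD):.1%}")
```

Under these assumptions the weights occupy roughly 137 KiB as float32 or 34 KiB as int8, which gives a sense of why an over-the-air update that replaces only the weights is practical.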

Embedded Temperature Pressure Monitoring (TPM) Sensor

CEO of Polyn Technology, Alexander Timofeev, gave a compelling presentation on data pre-processing on sensor nodes for predictive maintenance.

Sensor data is growing exponentially and threatens to overwhelm available global resources: forecasts point to ~45 trillion sensors in 2032 that will generate more than 1 million zettabytes (10^27 bytes) of data per year.[1]

Timofeev explained how Polyn's platform efficiently pre-processes raw data from various sensors to extract useful information. The company has developed a compiler that converts digital models to an analog neuromorphic core, enabling ultra-low power consumption and latency. One practical application is an embedded Temperature Pressure Monitoring (TPM) Sensor with an integrated 3-axis accelerometer and VibroSense chip, used to monitor the health of automobile tires before they fail.

The sensor performs vibration signal pre-processing, allowing real-time monitoring of tire conditions on the sensor itself, without the need for data transmission to the cloud. It operates with ultra-low power consumption and provides continuous data on temperature and pressure changes, enabling early detection of potential issues and allowing timely maintenance and prevention of tire failures.[2] This not only enhances safety but also brings financial, performance, and environmental benefits.

tinyML Drones with Neuromorphic Edge Processing

Michele Magno, a senior scientist working at the Center for Project Based Learning in Zurich, introduced ColibriUAV, an ultra-fast, energy-efficient neuromorphic edge processing UAV (unmanned aerial vehicle) platform that combines event-based and frame-based cameras for eye-tracking and small-scale drone applications.[3] The platform addresses the need for extreme efficiency and low latency in both fields. It leverages two emerging technologies: the Dynamic Vision Sensor (DVS) for lightweight, fast, and low-latency sparse image processing, and Neuromorphic Hybrid Open Processors based on RISC-V architecture to achieve extreme edge processing.

The system is designed to fit "intelligence" into a 30X smaller payload with 20X lower energy consumption compared to traditional solutions. It enables advanced autonomous drone operations, including 3D mapping, motion planning, object recognition, and obstacle avoidance, while being suitable for indoor and constricted environments.[4]

The closed-loop neuromorphic performance of ColibriUAV demonstrates remarkable power efficiency, with end-to-end energy consumption of 9.224 mJ and a latency of 163 ms. The platform shows promise for various applications, such as research, search and rescue missions, and warehouse monitoring, making it more than just a toy for nano-drones. The ongoing work focuses on eye-tracking collaboration, exploring alternative edge neuromorphic platforms, and data collection for obstacle avoidance and object detection.

ML-Powered Doorbell Notifier

Sandeep Mistry from Arm presented “How to build an ML-powered doorbell notifier,” an end-to-end solution combining machine learning and microcontroller technologies. The solution emphasized privacy-preserving on-device ML inferencing and included a live demonstration.

In the presentation, Sandeep explained how to build a machine learning-powered doorbell notifier using a microcontroller. The project was initiated to solve a problem faced by his co-worker, who was unable to hear the doorbell from his separate home office. The solution is a system that continuously listens to audio, identifies the sound of the doorbell using a machine learning model, and sends a notification to a phone via a cloud service when the doorbell rings.

The machine learning model was trained using the FSD50K dataset, which contains 144 doorbell sounds along with other sounds like music, domestic home sounds, human voices, and hand clapping. Sandeep used TensorFlow Lite to build the model, which was then trained in two phases using the ESC-50 dataset and the FSD50K dataset.

The hardware platform used for this project was the SparkFun AzureWave Thing Plus board, which is based on a Realtek SoC. This board provides a Cortex-M33-compatible CPU, ample RAM, flash storage, built-in Wi-Fi connectivity, and a hardware audio codec. The board was programmed using the Arduino IDE, and several libraries were used for audio processing, Wi-Fi connectivity, TensorFlow Lite, DSP steps, and the Twilio API for sending SMS messages.

The system was designed to process audio data in real-time, with the Mel power spectrum calculation taking 3.4 milliseconds and the model inferencing taking 14 milliseconds. This processing time was well within the 32-millisecond limit required for real-time processing.
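The real-time claim comes down to simple arithmetic: the feature extraction and inference for one audio block must finish before the next block arrives. The sketch below checks the quoted timings against the 32-millisecond limit. The three timing constants come from the talk; the helper names are hypothetical, and the 512-sample block at 16 kHz is only an assumed example of how a 32-millisecond window could arise, not a detail confirmed by the source.

```python
FEATURE_MS = 3.4     # Mel power spectrum calculation time (from the talk)
INFERENCE_MS = 14.0  # model inference time (from the talk)
BUDGET_MS = 32.0     # real-time limit (from the talk)


def frame_duration_ms(samples, sample_rate_hz):
    """Duration of one audio block in milliseconds."""
    return 1000.0 * samples / sample_rate_hz


def budget_report(feature_ms, inference_ms, budget_ms):
    """Summarize how much of the per-block time budget is consumed."""
    used = feature_ms + inference_ms
    return {
        "used_ms": used,
        "slack_ms": budget_ms - used,
        "utilization": used / budget_ms,
        "real_time": used <= budget_ms,
    }


# Assumed example: 512 samples at 16 kHz is one way to get a 32 ms block.
print(f"block duration: {frame_duration_ms(512, 16_000):.1f} ms")

report = budget_report(FEATURE_MS, INFERENCE_MS, BUDGET_MS)
print(f"used {report['used_ms']:.1f} ms of {BUDGET_MS:.0f} ms "
      f"({report['utilization']:.0%}), slack {report['slack_ms']:.1f} ms")
```

With the quoted numbers, processing takes 17.4 ms per block, a little over half of the budget, leaving about 14.6 ms of slack.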

The SoC’s built-in Wi-Fi connectivity is used only when the model detects sounds of interest. The video of the presentation shows that the compute resources of Realtek’s RTL8721DM SoC leave ample room to explore more complex model architectures for other tinyML audio classification use cases. All the code used in the project is available on GitHub.

Smart and Connected Soft Biomedical Stethoscope

Dr. Hong Yeo, associate professor and Woodruff faculty fellow at Georgia Tech, presented his research on developing soft biomedical wearable stethoscopes and embedded machine learning for automated real-time disease diagnosis.[5] His research group focuses on developing soft biosensors and bioelectronics using printing-based manufacturing technology to create ultra-thin and flexible devices with better contact to the skin for continuous data recording.

Over 15 million Americans are affected by pulmonary diseases, resulting in more than 150,000 deaths annually in the US.[6] Current auscultation methods for monitoring these diseases have limitations, such as subjectivity, motion artifacts, noise, and lack of continuous monitoring due to the rigidity and bulkiness of existing technologies.

Dr. Hong Yeo and his team have developed a small wearable stethoscope that can monitor heart and lung sounds in real-time for diagnosing pulmonary disorders. The device utilizes machine learning and AI components to compare real-time data with abnormal datasets, providing doctors with instant classification outcomes and allowing continuous, wireless monitoring for patients.[7] The stethoscope demonstrates higher sensitivity and noise reduction compared to conventional rigid devices. Dr. Yeo's goal is to advance healthcare through better health monitoring, diagnosis, therapeutics, and human-machine interfaces. The device has shown promising results in clinical trials, achieving 95% accuracy in classifying different lung diseases.

Discover more

The event hosted a rich array of presentations that can be watched or read about on the tinyML homepage (www.tinyML.org). Our favorite highlights included Edge Impulse founder Jan Jongboom discussing commercial use cases, including the Oura ring, which has shipped over one million devices to date; Stephan Schoenfeldt from Infineon outlining low-power radar; and Simone Moro from STMicroelectronics explaining face recognition with a hybrid binary network.

Looking ahead

The third edition of the tinyML EMEA Innovation Forum was a valuable platform for inspiring innovation and fostering collaborations, marking another milestone in the journey towards a brighter future powered by tinyML.

The tinyML forums and symposiums are rich learning and networking opportunities for engineers, developers, managers, executives, and founders developing sensors, silicon, software, machine learning tools, or systems for the tinyML market.

Edge AI Technology Report

The tinyML Foundation recently partnered with Wevolver to launch the Edge AI Technology Report. Contributed by leading industry experts and researchers, the report aims to provide a thorough overview of the current status of AI.

Click through to read each of the report's chapters.

Chapter I: Overview of Industries and Application Use Cases

Chapter II: Advantages of Edge AI

Chapter III: Edge AI Platforms

Chapter IV: Hardware and Software Selection

Chapter V: Tiny ML

Chapter VI: Edge AI Algorithms

Chapter VII: Sensing Modalities

Chapter VIII: Case Studies

Chapter IX: Challenges of Edge AI

Chapter X: The Future of Edge AI and Conclusion

References

[1] https://cms.tinyml.org/wp-content/uploads/ew2023/tinyML-EMEA_Alexander-Timofeev.pdf

[2] https://www.youtube.com/watch?v=BwI75Zm4g-4&ab_channel=tinyML

[3] https://www.youtube.com/watch?v=DLnSbtN-BOU&t=3s&ab_channel=tinyML

[4] https://cms.tinyml.org/wp-content/uploads/ew2023/tinyML-EMEA_Michele-Magno-new.pdf

[5] https://www.youtube.com/watch?v=Xc9fknhiDGU&t=6s&ab_channel=tinyML

[6] https://cms.tinyml.org/wp-content/uploads/ew2023/tinyML-EMEA_Yeo.pdf

[7] https://cms.tinyml.org/wp-content/uploads/ew2023/tinyML-EMEA_Yeo.pdf