Ultra-efficient machine learning offers on-device inference powered solely by sound, under the sea

Designed to bring machine learning to the Earth's last great frontier, a prototype smart sensor platform uses on-device inference to classify marine mammal calls, powering itself exclusively from the sounds it captures.

While most machine learning takes place in high-power data centers, these researchers are trying to deploy the technology beneath the waves.

This article was discussed in our Next Byte podcast.


Energy efficiency and machine learning don’t always go hand-in-hand. While low-power sensor networks can make great use of deep-learning systems for processing data, they typically do so by transmitting that data to remote servers equipped with powerful processors and plenty of memory.

On-device machine learning at the microprocessor scale — known as “tinyML” — looks to shift the work from data centers into the field, and a team of researchers at Imperial College London and the Massachusetts Institute of Technology (MIT) are hoping to go still further: The development and deployment of a machine learning system so efficient it can perform inference on energy-harvesting sensors deployed under the sea, operating entirely without batteries.

Fundamental challenges

The team, including first author Yuchen Zhao, set out to answer a simple question: Is it possible to design and build battery-free devices, capable of machine learning and on-device inference, that can operate in underwater environments?

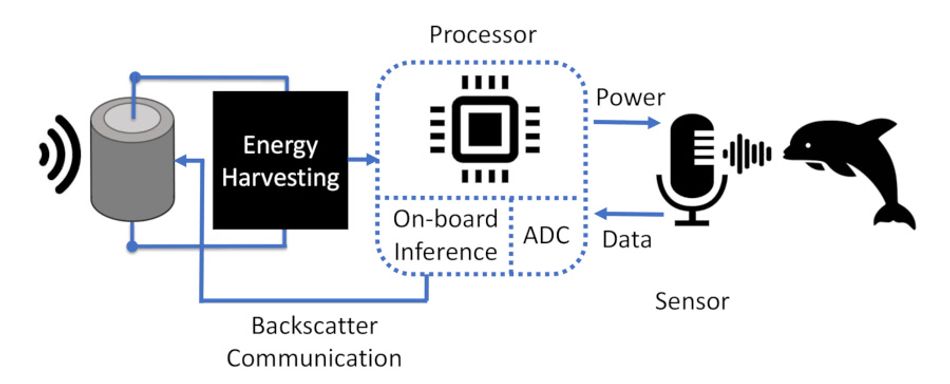

Building on earlier work in battery-free sensing and energy-efficient machine learning, the team developed a prototype: A device, based on an off-the-shelf microcontroller, that could harvest energy, capture sounds, process them through a lightweight deep neural network to identify particular marine species, and transmit the results back to a receiver unit, all without needing a battery.

The idea of an ultra-low-power sensor, underwater or otherwise, that is powered by harvested energy and communicates via energy-efficient backscatter isn’t new. The team points out, however, that most previous work has focused on land rather than sea operation, and that most such systems use harvested energy only to power their sensors, relying on battery- or mains-powered devices with greater computational capabilities to process the captured data.

Performing computation on-device, meanwhile, would not only increase the flexibility of the deployed sensor systems but also work around significant issues with current approaches, including the large delays introduced by low-bandwidth underwater communications and the need to store captured data in memory until a receiver is available.
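To put the bandwidth argument in rough numbers, the sketch below compares the payload of a raw audio clip with that of a single classification result. It is a back-of-the-envelope illustration only: the 3-second clip length, 8 kHz sample rate, 12-bit sample width, and 1 kbit/s link rate are hypothetical placeholders, not figures from the paper.

```python
"""Back-of-the-envelope payload comparison: raw audio clip vs. one class label.

Every parameter value here is a hypothetical placeholder, not a figure from the paper.
"""


def raw_audio_bytes(duration_s: float, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Bytes needed to send a raw mono clip with samples bit-packed end to end."""
    num_samples = int(duration_s * sample_rate_hz)
    total_bits = num_samples * bits_per_sample
    return (total_bits + 7) // 8  # round up to whole bytes


def label_bytes(num_classes: int) -> int:
    """Bytes needed to send a single class index in the range [0, num_classes)."""
    bits = max(1, (num_classes - 1).bit_length())
    return (bits + 7) // 8


def transmit_seconds(num_bytes: int, bitrate_bps: float) -> float:
    """Airtime for a payload at a given link rate, ignoring framing and retries."""
    return num_bytes * 8 / bitrate_bps


if __name__ == "__main__":
    raw = raw_audio_bytes(duration_s=3.0, sample_rate_hz=8_000, bits_per_sample=12)
    result = label_bytes(num_classes=4)
    # Hypothetical 1 kbit/s underwater backscatter link
    print(f"raw clip: {raw} bytes, {transmit_seconds(raw, 1_000):.1f} s")
    print(f"label:    {result} byte, {transmit_seconds(result, 1_000):.3f} s")
```

Under these assumptions the raw clip needs minutes of airtime while the label needs milliseconds, which is the intuition behind sending results rather than recordings.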

“To overcome these challenges,” the team writes, “our node performs on-board inference. This results in a lower-power, time-and-resource efficient, and more scalable system design.”

Three-part hardware harmony

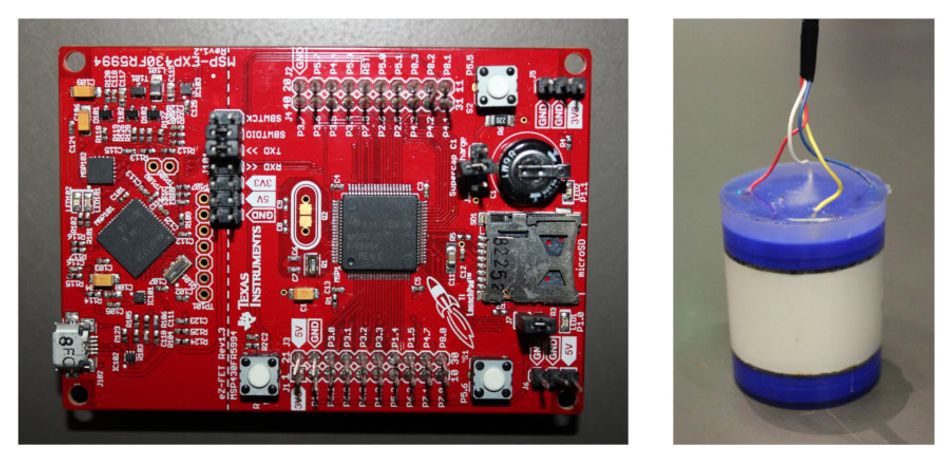

The team’s prototype boasts very modest specifications. At its heart is a Texas Instruments MSP430FR5994 microcontroller development board, connected to an omnidirectional hydrophone for capturing undersea sounds. The acoustic energy the node monitors also serves as its power source, converted into electrical energy by a transducer.

Despite the harvested energy being minimal at best, and the device lacking an integrated battery for energy accumulation over time, the prototype delivers on the team’s goal: As energy is harvested, the microcontroller powers up, samples audio from the sensor, performs inference on-device, and then transmits the resulting classification via backscatter uplink.
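The harvest, wake, sample, infer, and transmit sequence can be modelled as a simple energy-threshold loop. The sketch below is a hypothetical simulation, not the team's firmware: it assumes harvested energy accumulates in a small storage element until one full cycle's worth (the roughly 5.4 mJ per cycle the team reports) is available, and the harvested power values are invented for illustration. Energy is tracked in integer microjoules with one-second steps so the bookkeeping stays exact.

```python
"""Hypothetical simulation of a battery-free, energy-threshold duty cycle."""
from dataclasses import dataclass

CYCLE_ENERGY_UJ = 5_400  # ~5.4 mJ per sample-infer-transmit cycle, as reported


@dataclass
class HarvestingNode:
    harvest_power_uw: int      # hypothetical average harvested power, in microwatts
    stored_uj: int = 0         # energy held in a small capacitor (there is no battery)
    cycles_completed: int = 0

    def step_one_second(self) -> bool:
        """Harvest for one second, then run a full cycle if enough energy is stored.

        At most one cycle runs per one-second step, a simplification that
        ignores the ~3 s a real cycle spends on inference alone.
        """
        self.stored_uj += self.harvest_power_uw  # 1 uW for 1 s = 1 uJ
        if self.stored_uj >= CYCLE_ENERGY_UJ:
            # Power up, sample audio, run inference, backscatter the label:
            # modelled here as a single energy debit.
            self.stored_uj -= CYCLE_ENERGY_UJ
            self.cycles_completed += 1
            return True
        return False


def simulate(harvest_power_uw: int, duration_s: int) -> int:
    """Return how many complete cycles run in duration_s seconds."""
    node = HarvestingNode(harvest_power_uw)
    for _ in range(duration_s):
        node.step_one_second()
    return node.cycles_completed


if __name__ == "__main__":
    # With a hypothetical 1 mW harvest, the node wakes every 5.4 s on average.
    print(simulate(harvest_power_uw=1_000, duration_s=60))
```

Under these assumptions the node completes about 11 cycles per minute, and halving the harvested power roughly halves that rate, which is why the energy cost of each cycle matters so much for a battery-free design.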

Impressively, the whole cycle consumes just 5.4mJ of energy, with the inference stage taking three seconds to complete at a draw of 1.3mW. Although inference is by far the most power-hungry stage, the researchers claim on-device processing represents a considerable saving over the typical approach of simply transmitting the raw data for later analysis: They estimate that backscatter transmission of the raw audio would consume over 30 per cent more energy than performing inference on-device and transmitting only the result, and would take considerably longer.
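The reported figures can be cross-checked with simple arithmetic, since energy equals power multiplied by time. The last line assumes the 30 per cent estimate is relative to the full 5.4mJ on-device cycle, which the article does not state explicitly.

```latex
\begin{aligned}
E_{\text{inf}} &= P_{\text{inf}}\, t_{\text{inf}} = 1.3\ \text{mW} \times 3\ \text{s} = 3.9\ \text{mJ} \\
\frac{E_{\text{inf}}}{E_{\text{cycle}}} &= \frac{3.9\ \text{mJ}}{5.4\ \text{mJ}} \approx 0.72 \\
E_{\text{cycle}} - E_{\text{inf}} &= 5.4\ \text{mJ} - 3.9\ \text{mJ} = 1.5\ \text{mJ} \\
E_{\text{raw}} &> 1.3 \times E_{\text{cycle}} \approx 7.0\ \text{mJ}
\end{aligned}
```

On these numbers, inference accounts for roughly 72 per cent of each cycle, leaving about 1.5mJ for waking up, sampling, and backscattering the result.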

“This result,” the team notes, “demonstrates that battery-free edge inference is feasible, and that it makes on-board inference both more efficient and faster, even with this preliminary inference model. We envision that the gains can be significantly improved as the research evolves.”

Training day

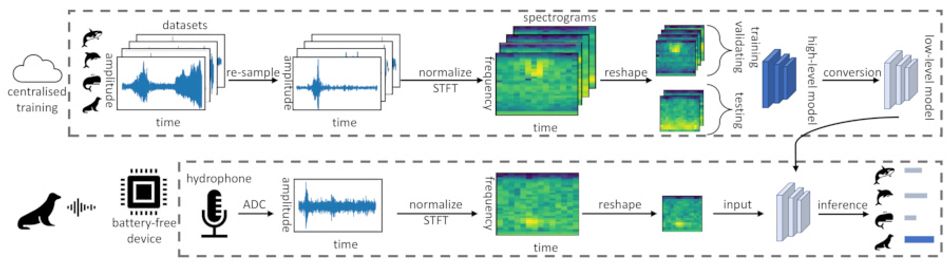

The secret to the project’s success is a highly efficient machine learning model, trained off-device on a more powerful system using the Watkins Marine Mammal Sounds Database. The audio data was resampled to match the sample rate of the microcontroller’s analog-to-digital converter (ADC) before being used to train high-level Keras models via TensorFlow.
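Resampling a recording to the ADC's rate can be sketched in a few lines of NumPy. This is an illustrative linear-interpolation resampler, not the team's preprocessing code; the 48 kHz source rate and 8 kHz target rate in the usage example are hypothetical. Because no anti-aliasing filter is applied, a pipeline downsampling real recordings would normally low-pass filter first, for example with a polyphase resampler.

```python
import numpy as np


def resample_linear(x: np.ndarray, src_rate_hz: float, dst_rate_hz: float) -> np.ndarray:
    """Resample a mono clip from src_rate_hz to dst_rate_hz by linear interpolation.

    No anti-aliasing filter is applied, so content above the new Nyquist
    frequency will alias when downsampling.
    """
    if src_rate_hz <= 0 or dst_rate_hz <= 0:
        raise ValueError("sample rates must be positive")
    x = np.asarray(x, dtype=np.float64)
    duration_s = len(x) / src_rate_hz
    n_out = int(round(duration_s * dst_rate_hz))
    t_in = np.arange(len(x)) / src_rate_hz    # timestamps of the original samples
    t_out = np.arange(n_out) / dst_rate_hz    # timestamps at the target (ADC) rate
    return np.interp(t_out, t_in, x)


if __name__ == "__main__":
    # Hypothetical example: a 2 s, 48 kHz recording brought down to an assumed 8 kHz.
    src_rate, dst_rate = 48_000, 8_000
    t = np.arange(2 * src_rate) / src_rate
    clip = np.sin(2 * np.pi * 440.0 * t)      # 440 Hz tone, well below the new 4 kHz Nyquist limit
    resampled = resample_linear(clip, src_rate, dst_rate)
    print(len(clip), len(resampled))
```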

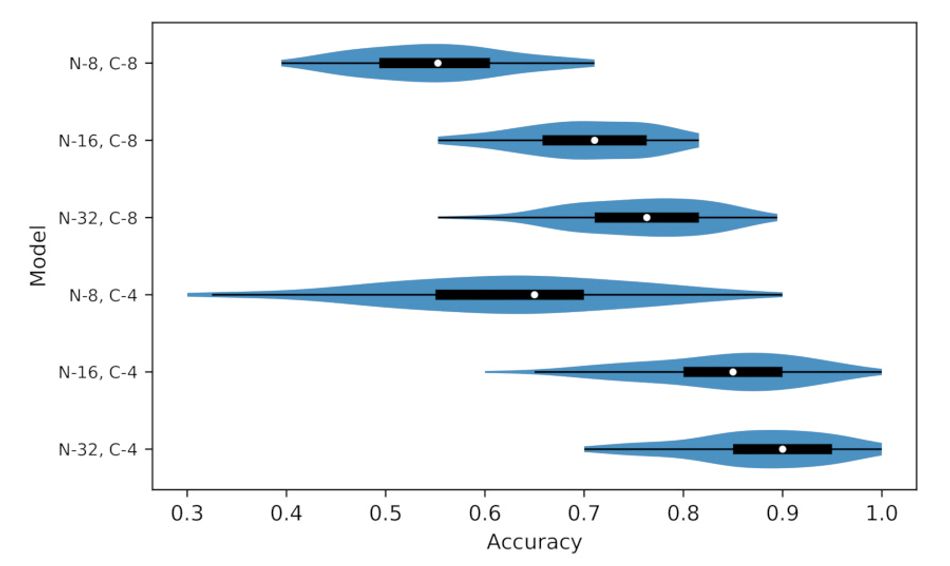

To generate smaller microcontroller-compatible models from these high-level Keras models, the team turned to Keras2C. “This library generates smaller DNN models than TensorFlow Lite does,” the researchers explain, “and requires fewer third-party libraries when generating models.”

In simulated testing on samples held back from the Watkins dataset, the resulting microcontroller-compatible model showed promise: The team reports an 84 per cent success rate for offline single-mammal classification, though switching to eight-mammal classification dropped accuracy to 76 per cent while increasing the model size beyond what the prototype could accommodate.
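Whether a model fits on the prototype depends largely on how many parameters it stores. The sketch below counts weights for a hypothetical stack of dense and 1D convolutional layers and checks the total against a memory budget. The layer shapes, the 4-byte float32 parameter size, and the 256 KB budget (the MSP430FR5994's nominal FRAM capacity, which firmware and buffers must also share) are assumptions for illustration; the article does not describe the team's architectures.

```python
"""Parameter and weight-memory estimate for a hypothetical small classifier."""
from typing import Sequence

# Hypothetical layer specs:
#   ("dense", in_features, out_features)
#   ("conv1d", kernel_size, in_channels, out_channels)
LayerSpec = tuple


def layer_params(layer: LayerSpec) -> int:
    """Trainable parameters in one layer: weights plus one bias per output."""
    kind = layer[0]
    if kind == "dense":
        _, n_in, n_out = layer
        return n_in * n_out + n_out
    if kind == "conv1d":
        _, kernel, c_in, c_out = layer
        return kernel * c_in * c_out + c_out
    raise ValueError(f"unknown layer type: {kind!r}")


def weight_bytes(layers: Sequence[LayerSpec], bytes_per_param: int = 4) -> int:
    """Storage for all weights, assuming float32 (4-byte) parameters by default."""
    return sum(layer_params(layer) for layer in layers) * bytes_per_param


def fits_budget(layers: Sequence[LayerSpec], budget_bytes: int, bytes_per_param: int = 4) -> bool:
    """True if the weights alone fit within budget_bytes."""
    return weight_bytes(layers, bytes_per_param) <= budget_bytes


if __name__ == "__main__":
    BUDGET = 256 * 1024  # assumed nominal FRAM size; firmware shares this space
    small = [("conv1d", 5, 1, 8), ("dense", 8 * 64, 32), ("dense", 32, 4)]
    wide = [("conv1d", 5, 1, 32), ("dense", 32 * 512, 128), ("dense", 128, 8)]
    for name, model in (("small", small), ("wide", wide)):
        print(name, weight_bytes(model), fits_budget(model, BUDGET))
```

Parameter counts grow multiplicatively with layer widths, so a modest increase in capacity to separate more classes can quickly exceed a fixed on-chip budget.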

Online accuracy, measured on data captured by the prototype’s ADC from recordings of four marine mammals, proved lower at 63 per cent, a drop the team attributes to the relatively small number of test samples and a loss of data resolution during the sampling process.
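One of the factors the team names, the loss of resolution during sampling, can be illustrated with an ideal uniform quantizer. The sketch below is a hypothetical model that assumes a 12-bit converter (the MSP430FR5994 integrates a 12-bit ADC) with a symmetric full-scale range and ignores analog noise. It measures the signal-to-quantization-noise ratio (SQNR), which for a near-full-scale sine should land close to the textbook 6.02N + 1.76 dB for N bits. Real captures also pass through the hydrophone and analog front end, so this covers only the quantization part of the story.

```python
import numpy as np


def quantize(x: np.ndarray, bits: int, full_scale: float = 1.0) -> np.ndarray:
    """Ideal mid-rise uniform quantizer over [-full_scale, full_scale).

    Returns the reconstructed value at the centre of each code's bin.
    """
    levels = 2 ** bits
    step = 2.0 * full_scale / levels
    codes = np.floor(np.asarray(x, dtype=np.float64) / step)
    codes = np.clip(codes, -levels // 2, levels // 2 - 1)  # saturate out-of-range input
    return (codes + 0.5) * step


def sqnr_db(x: np.ndarray, x_q: np.ndarray) -> float:
    """Signal-to-quantization-noise ratio in decibels."""
    noise = np.asarray(x) - np.asarray(x_q)
    return float(10.0 * np.log10(np.sum(np.square(x)) / np.sum(np.square(noise))))


if __name__ == "__main__":
    t = np.arange(100_000) / 100_000
    tone = 0.999 * np.sin(2 * np.pi * 7.3 * t)   # near-full-scale test tone
    for bits in (8, 12):
        measured = sqnr_db(tone, quantize(tone, bits))
        print(bits, round(measured, 1), round(6.02 * bits + 1.76, 1))
```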

“Nonetheless,” the team notes, “this result demonstrates that our prototype was successful in on-board classification of four marine animals with a reasonable accuracy at extremely low power, and this accuracy may be improved as the research evolves and with more comprehensive online evaluation.”

The researchers suggest a range of directions for future study, including on-device model personalization to boost accuracy, a model-optimized hardware/software co-design approach to boost performance and efficiency, and the investigation of methods to allow training, rather than just inference, to occur on-device.

The team’s work has been made available under open-access terms on Cornell’s arXiv preprint server. The researchers also released their underwater backscatter system under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license upon its development two years ago, with code and design files available on GitHub.

Reference

Yuchen Zhao, Sayed Saad Afzal, Waleed Akbar, Osvy Rodriguez, Fan Mo, David Boyle, Fadel Adib, and Hamed Haddadi: Towards Battery-Free Machine Learning and Inference in Underwater Environments. arXiv:2202.08174 [cs.LG], 2022.