Edge AI: A Comprehensive Guide to its Engineering Principles and Applications

Gain a comprehensive understanding of Edge AI by exploring the fundamental concepts, hardware components, software tools, and real-world applications.

Introduction

Edge AI is an emerging technology that combines artificial intelligence (AI) with edge computing: data is processed and AI algorithms are executed directly on devices at the edge of the network, rather than on centralized cloud-based systems. This approach offers several benefits, including reduced latency, increased privacy, and lower data transmission costs. As a result, Edge AI is becoming increasingly important in a wide range of applications, including smart home automation, autonomous vehicles, industrial IoT, healthcare, and wearable devices.

This guide aims to provide a comprehensive understanding of the engineering principles and applications of Edge AI. By exploring the fundamental concepts, hardware components, software tools, and real-world applications, you will gain valuable insights into the world of Edge AI and its impact on modern technology. With a focus on the engineering aspects of Edge AI, this guide will equip you with the knowledge needed to design and develop innovative Edge AI solutions that can transform industries and improve our daily lives.

Suggested reading: Autonomous Vehicle Technology Report

Fundamentals of Edge AI

Edge AI is a technology that combines artificial intelligence (AI) with edge computing, allowing data processing and AI algorithm execution to occur directly on devices located at the edge of the network. By bringing AI closer to the source of data generation, Edge AI enables more efficient and responsive decision-making in a wide range of applications.

Here are some noteworthy advantages of Edge AI:

Real-time processing: data is processed and analyzed directly on the edge device, without a round trip to cloud or remote servers, so the system can respond and make decisions immediately

Privacy and data security: reduces the need to transmit sensitive information to the cloud, which enhances privacy and lowers the risk of data breaches

Reduced latency: removes the network round trip to remote servers, resulting in significantly lower latency in applications where quick response times are critical

Bandwidth optimization: reduces bandwidth usage and network congestion by performing computations locally and transmitting only the results

Scalability: enables distributed processing across multiple edge devices, allowing for scalable and decentralized architectures
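To make the bandwidth point concrete, here is a rough back-of-the-envelope sketch in Python. It compares a camera that streams every frame to the cloud with one that runs inference locally and uploads only short event messages. The function name and all the figures (frame size, frame rate, event count) are hypothetical values chosen for illustration, not measurements.

```python
def uplink_bytes_per_day(frame_bytes, fps, events_per_day, event_bytes):
    """Compare daily uplink traffic: stream every frame vs. send only detected events."""
    seconds_per_day = 24 * 60 * 60
    # Cloud-based inference: every frame leaves the device.
    cloud_bytes = int(frame_bytes * fps * seconds_per_day)
    # Edge inference: only a small message per detected event leaves the device.
    edge_bytes = events_per_day * event_bytes
    reduction = cloud_bytes / edge_bytes if edge_bytes else float("inf")
    return {
        "cloud_bytes": cloud_bytes,
        "edge_bytes": edge_bytes,
        "reduction_factor": reduction,
    }


# Hypothetical camera: 50 kB compressed frames at 10 fps,
# 200 detections per day reported as 1 kB messages.
result = uplink_bytes_per_day(50_000, 10, 200, 1_000)
print(result)
```

Under these assumed numbers the streaming camera uploads about 43 GB per day, while the edge camera uploads about 200 kB.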

Further Reading: Advantages of AI

Now, let’s take a look at some fundamental aspects of this technology.

Edge Computing

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the devices where data is generated, rather than relying on centralized data centers or cloud-based systems.

In the context of Edge AI, edge computing provides the necessary infrastructure for running AI algorithms on edge devices, such as IoT sensors, smartphones, and embedded systems. By processing data locally on these devices, Edge AI systems can make real-time decisions and respond to changing conditions more quickly than cloud-based AI systems, which require data to be transmitted to and from remote data centers. Additionally, edge computing can help address privacy concerns by keeping sensitive data on the device, reducing the risk of data breaches and unauthorized access.

Edge computing also plays a crucial role in the Internet of Things (IoT), as it enables the efficient processing and analysis of data generated by a vast number of connected devices. By implementing AI at the edge, IoT devices can become more intelligent, adaptive, and autonomous, paving the way for new applications and use cases across various industries.

AI Models and Algorithms

In Edge AI systems, various AI models and algorithms are employed to perform tasks such as image recognition, natural language processing, and anomaly detection. These models and algorithms are typically designed and trained on powerful cloud-based systems before being deployed on edge devices. However, due to the resource constraints of edge devices, such as limited processing power, memory, and energy, it is often necessary to adapt and optimize these models for efficient execution at the edge.

Further Reading: Edge AI algorithms

One common approach to adapting AI models for edge devices is model compression, which reduces the size and complexity of the model while preserving as much of its accuracy as possible. Techniques such as pruning, quantization, and knowledge distillation can be used to achieve this. Pruning removes redundant or less important connections and neurons from the model, while quantization reduces the numerical precision of the model's weights and activations (for example, from 32-bit floating point to 8-bit integers). Knowledge distillation trains a smaller, more efficient model to mimic the behavior of a larger, more accurate one.
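The following minimal sketch illustrates two of these techniques on a flat list of weights, using only the Python standard library. It assumes symmetric per-tensor 8-bit quantization and unstructured magnitude pruning, which are common variants but not the only ones. The function names are illustrative and do not come from any particular framework.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Set the `sparsity` fraction of weights with the smallest magnitude to zero."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 0.9]
q, scale = quantize_int8(weights)
print(q)                                  # integer codes, one byte each
print(dequantize(q, scale))               # close to the original weights
print(prune_by_magnitude(weights, 0.5))   # half of the weights zeroed
```

Each quantized weight occupies one byte instead of four, at the cost of a rounding error of at most half the scale per weight. In practice, accuracy is usually re-checked (and the model often fine-tuned) after either step.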

Another challenge in deploying AI models on edge devices is the selection of appropriate algorithms that can efficiently execute on resource-constrained hardware. Some popular AI algorithms used in Edge AI systems include convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequence data processing, and reinforcement learning algorithms for decision-making tasks. These algorithms can be optimized for edge devices by leveraging hardware-specific features, such as parallel processing capabilities and specialized AI accelerators.

Further Reading: Challenges of Edge AI

In addition to model optimization and algorithm selection, Edge AI systems may also employ techniques such as federated learning and transfer learning to improve their performance and adaptability. Federated learning enables edge devices to collaboratively train AI models without sharing raw data, thereby preserving privacy and reducing data transmission costs. Transfer learning allows edge devices to leverage pre-trained models and fine-tune them for specific tasks, reducing the amount of training data and computational resources required.
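As an illustration of the federated learning idea, the sketch below shows only the server-side aggregation step, in the style of federated averaging. Each device is assumed to have already trained locally and to send back a flat list of model weights plus the number of samples it trained on. The function name and the data layout are assumptions made for this example.

```python
def federated_average(client_weights, client_sizes):
    """Combine client models into one, weighting each by its local sample count.

    Only model weights and sample counts are shared; raw data stays on the devices.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical devices: the second trained on three times as much data,
# so its weights count three times as much in the average.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_weights)  # [2.5, 3.5]
```

A full system would repeat this over many rounds, sending the averaged model back to the devices for further local training.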

Let’s summarize. By understanding the various AI models and algorithms used in Edge AI systems, as well as the techniques for adapting and optimizing them for edge devices, engineers can design and develop efficient and effective Edge AI solutions that meet the unique requirements and constraints of edge computing environments.

Edge AI Hardware

The hardware components used in Edge AI systems play a critical role in determining the performance, power consumption, and overall capabilities of the system. These components must be carefully selected and optimized to meet the specific requirements and constraints of Edge AI applications. Some of the key hardware components used in Edge AI systems include microcontrollers, microprocessors, and AI accelerators, each with its own set of advantages and trade-offs.

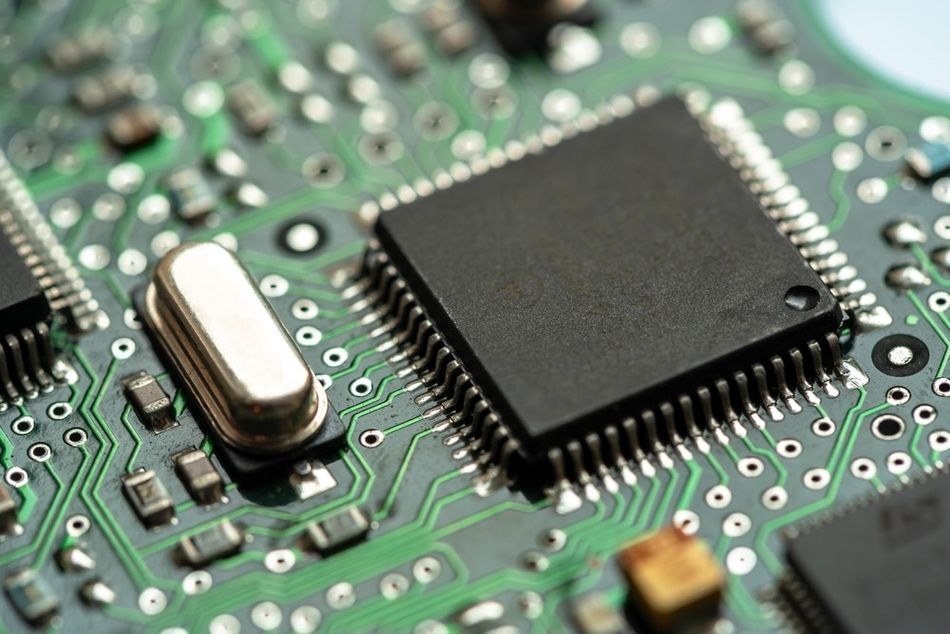

Microcontrollers and Microprocessors

Microcontrollers and microprocessors provide the computational power needed to execute AI algorithms and process data. While both types of components are capable of performing complex calculations, they differ in terms of their architecture, functionality, and power consumption.

Microcontrollers are small, low-power integrated circuits that contain a processor, memory, and input/output (I/O) peripherals on a single chip. They are designed for embedded applications and are typically used in simple, low-power Edge AI devices, such as IoT sensors and wearable devices. Microcontrollers offer several advantages for Edge AI applications, including low cost, low power consumption, and ease of integration. However, their limited processing power and memory resources can constrain the complexity of the AI algorithms that can be executed on them.

Microprocessors are more powerful and versatile than microcontrollers. They typically run at higher clock speeds, rely on external memory and peripherals rather than integrating them on a single chip, and can run full operating systems. Microprocessors are typically used in more advanced Edge AI devices, such as smartphones, tablets, and edge servers, where higher processing power and memory resources are required. While microprocessors offer greater performance and flexibility compared to microcontrollers, they also consume more power and can be more expensive, making them less suitable for low-power, cost-sensitive applications.

Further Reading: Edge AI Case Studies

When selecting a microcontroller or microprocessor for an Edge AI application, it is important to consider factors such as processing power, memory capacity, power consumption, and cost, as well as the specific requirements of the AI algorithm and the target device. By carefully balancing these factors, engineers can design and develop Edge AI systems that deliver the desired performance and functionality while minimizing power consumption and cost.
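A quick memory estimate is often the first step in this balancing exercise. The sketch below checks whether a model's weights fit in a device's flash and whether its peak activation memory fits in RAM. The function name, the device figures (512 KiB flash, 128 KiB RAM), and the model figures are hypothetical. A real budget would also reserve space for application code, the inference runtime, and the stack.

```python
def model_fits(num_params, bits_per_weight, flash_bytes, activation_bytes, ram_bytes):
    """Rough check: do the weights fit in flash and the activations in RAM?"""
    # Round up to whole bytes.
    weight_bytes = (num_params * bits_per_weight + 7) // 8
    return {
        "weight_bytes": weight_bytes,
        "fits_flash": weight_bytes <= flash_bytes,
        "fits_ram": activation_bytes <= ram_bytes,
    }


# Hypothetical microcontroller and a 250,000-parameter model.
FLASH = 512 * 1024
RAM = 128 * 1024

print(model_fits(250_000, 32, FLASH, 100_000, RAM))  # float32 weights
print(model_fits(250_000, 8, FLASH, 100_000, RAM))   # int8 weights
```

With these assumed numbers, the float32 model needs about 1 MB for weights and does not fit, while the same model quantized to 8 bits needs 250 kB and does.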

AI Accelerators

AI accelerators are specialized hardware components designed to accelerate the execution of AI algorithms, particularly deep learning models, on edge devices. These accelerators can significantly improve the performance and energy efficiency of Edge AI systems, enabling more complex and computationally demanding AI tasks to be performed on resource-constrained devices.

AI accelerators can be implemented as dedicated chips, such as application-specific integrated circuits (ASICs) or field-programmable gate arrays (FPGAs), or as blocks integrated alongside the CPU in a system-on-chip, such as graphics processing units (GPUs) or digital signal processors (DSPs). These accelerators are optimized for the parallel processing of AI workloads, such as matrix multiplications and convolutions, which are common operations in deep learning models.
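To see why these operations suit parallel hardware, consider a naive 2D convolution written as plain loops. This is an illustrative sketch, not how any accelerator is programmed. It uses the deep learning convention (no kernel flip, "valid" padding) and counts the multiply-accumulate (MAC) operations performed.

```python
def conv2d_valid(image, kernel):
    """Naive 2D convolution (no padding, stride 1); returns the output and the MAC count."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    macs = 0
    for r in range(oh):
        for c in range(ow):
            acc = 0.0
            # Each output value is an independent sum of products.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
                    macs += 1
            out[r][c] = acc
    return out, macs


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, 1]]
out, macs = conv2d_valid(image, kernel)
print(out)   # [[6.0, 8.0], [12.0, 14.0]]
print(macs)  # 16
```

Every output element can be computed without waiting for any other, so hardware with many multiply-accumulate units can process them simultaneously instead of one at a time as this loop does.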

One of the key advantages of AI accelerators is their ability to offload AI computations from the main processor, freeing up resources for other tasks and reducing power consumption. This is particularly important for edge devices, which often have limited processing capabilities and strict power constraints. By using AI accelerators, Edge AI systems can achieve higher performance and lower energy consumption compared to running AI algorithms on general-purpose processors.

There are several AI accelerators available in the market, each with its own set of features and performance characteristics. Some popular AI accelerators include the NVIDIA Jetson series, Google's Edge TPU, and Intel's Movidius Myriad X. These accelerators vary in terms of processing capabilities, power consumption, and supported AI frameworks, making it essential to choose the right accelerator for the specific requirements of the Edge AI application.

In addition to dedicated AI accelerators, several processor manufacturers are integrating AI acceleration capabilities directly into their chips, such as Apple's Neural Engine in the A-series chips and Qualcomm's AI Engine in the Snapdragon processors. These integrated AI accelerators offer a more seamless and efficient solution for Edge AI applications, as they can leverage the existing processor infrastructure and software ecosystem.

Further reading: TPU vs GPU in AI: A Comprehensive Guide to Their Roles and Impact on Artificial Intelligence

Edge AI Software

The development and deployment of Edge AI systems require a variety of software components and tools, including AI frameworks, libraries, operating systems, and development environments. These software tools enable engineers to design, implement, and optimize AI algorithms for edge devices, taking into account the unique constraints and requirements of edge computing environments. Additionally, Edge AI software must be compatible with the diverse range of hardware components used in edge devices, such as microcontrollers, microprocessors, and AI accelerators.

Edge AI Frameworks and Libraries

Edge AI frameworks and libraries are software tools that provide a set of pre-built functions and modules for implementing AI algorithms on edge devices. These tools simplify the development process by abstracting the underlying hardware and software complexities, allowing engineers to focus on designing and optimizing their AI models and algorithms.

Some popular Edge AI frameworks and libraries include TensorFlow Lite, ONNX Runtime, and OpenCV. TensorFlow Lite is an open-source framework developed by Google for deploying machine learning models on mobile and embedded devices. It offers a lightweight and efficient runtime for executing TensorFlow models, as well as tools for model optimization and conversion. ONNX Runtime is a cross-platform inference engine developed by Microsoft that supports the Open Neural Network Exchange (ONNX) format, enabling the deployment of AI models from various frameworks, such as PyTorch, TensorFlow, and scikit-learn. OpenCV is an open-source computer vision library that provides a wide range of functions for image and video processing, as well as machine learning and deep learning capabilities.

When selecting an Edge AI framework or library, it is important to consider factors such as hardware compatibility, performance, ease of use, fit with the existing development workflow, and community support. The choice of framework or library can have a significant impact on the development process and the performance of the final Edge AI system, so it is crucial to choose a tool that meets the specific requirements of the application and the target hardware platform.

Edge AI Operating Systems

Edge AI operating systems are specialized software platforms designed to manage the execution of AI applications and the allocation of resources on edge devices. These operating systems provide a range of features and services tailored for Edge AI systems, such as real-time processing, power management, and hardware abstraction. By using an Edge AI operating system, engineers can develop and deploy AI applications more efficiently and effectively, while ensuring the optimal use of the device's resources.

Further Reading: Edge AI Platforms

Some popular Edge AI operating systems and related platforms include:

TinyML: Strictly speaking, TinyML is not an operating system but a field of practice, with an associated set of open-source tools, for running machine learning models on microcontrollers and other resource-constrained devices. Runtimes in this space, such as TensorFlow Lite for Microcontrollers, provide a lightweight inference environment and tools for model optimization and deployment, making them suitable for low-power, low-cost Edge AI applications.

EdgeX Foundry: An open-source edge computing platform hosted by the Linux Foundation. It runs on top of a host operating system and provides a flexible and extensible framework for building and deploying Edge AI applications. EdgeX Foundry supports a wide range of hardware and software components, including AI accelerators, IoT protocols, and cloud services, enabling the development of scalable and interoperable Edge AI systems.

Azure RTOS: A real-time operating system offered by Microsoft, based on the ThreadX kernel originally developed by Express Logic, which Microsoft acquired in 2019. It offers a range of features and services relevant to Edge AI applications, such as real-time scheduling, power management, and support for heterogeneous hardware architectures. Azure RTOS is designed to work with Microsoft's Azure IoT services, so that applications running on edge devices can be deployed and managed from the cloud.

When selecting an Edge AI operating system, it is important to consider factors such as hardware compatibility, real-time performance, power management, and ease of use.

Further Reading: Hardware and software selection

Edge AI Applications

Edge AI has a wide range of applications across various industries, enabling more efficient, responsive, and intelligent systems that can adapt to changing conditions and make real-time decisions. By implementing AI at the edge, these systems can overcome the limitations of traditional cloud-based AI solutions, such as latency, bandwidth constraints, and privacy concerns. Some of the most promising applications of Edge AI include smart home and building automation, industrial IoT and manufacturing, and healthcare and wearables.

Smart Home and Building Automation

Edge AI plays a significant role in smart home and building automation systems, enabling the intelligent control of various devices and systems, such as lighting, heating, ventilation, and security. By processing data locally on edge devices, such as sensors, actuators, and controllers, Edge AI systems can make real-time decisions and respond to changing conditions more quickly than cloud-based systems, which require data to be transmitted to and from remote data centers.

Here are some key applications of Edge AI in smart home and building automation.

Energy Management

One of the key benefits of using Edge AI in smart home and building automation systems is energy management. By analyzing sensor data and applying AI algorithms, Edge AI systems can optimize the operation of heating, cooling, and lighting systems, reducing energy consumption and lowering utility costs. For example, an Edge AI-enabled thermostat can learn the occupants' preferences and habits, adjusting the temperature settings accordingly to maximize comfort and energy efficiency.
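One simple way such a thermostat could learn preferences on the device is to keep an exponentially weighted average of the setpoints the occupants choose for each hour of the day. The sketch below is a deliberately minimal illustration under that assumption. The class name, the smoothing factor, and the 3 °C setback for an empty home (heating season assumed) are all hypothetical, and commercial thermostats may work quite differently.

```python
class SetpointLearner:
    """Learns a preferred temperature for each hour from manual adjustments."""

    def __init__(self, default_c=20.0, alpha=0.3):
        self.alpha = alpha                  # how quickly new adjustments override old habits
        self.preferred = [default_c] * 24   # one learned setpoint per hour of the day

    def observe(self, hour, setpoint_c):
        """Blend a manual adjustment into the learned setpoint for that hour."""
        old = self.preferred[hour]
        self.preferred[hour] = (1 - self.alpha) * old + self.alpha * setpoint_c

    def target(self, hour, occupied, setback_c=3.0):
        """Use the learned setpoint when occupied; lower it to save energy when empty."""
        base = self.preferred[hour]
        return base if occupied else base - setback_c


learner = SetpointLearner(default_c=20.0, alpha=0.5)
learner.observe(7, 22.0)   # occupant raises the temperature at 07:00
learner.observe(7, 22.0)   # and again the next day
print(learner.target(7, occupied=True))    # 21.5
print(learner.target(7, occupied=False))   # 18.5
```

The state is 24 numbers and each update is a single multiply-add, which is why this kind of logic fits comfortably on a microcontroller.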

Security

Edge AI-enabled security cameras and sensors can analyze video and audio data in real-time, detecting potential threats and triggering alarms or notifications when necessary. By processing data locally, these systems can maintain privacy and reduce the risk of data breaches, while also reducing the amount of data that needs to be transmitted over the network.

End-user Experience

By analyzing user behavior and preferences, Edge AI systems can provide personalized recommendations and automate routine tasks, such as adjusting lighting levels or playing music. This can improve the overall user experience and make smart home and building automation systems more intuitive and user-friendly.

Industrial IoT and Manufacturing

Edge AI plays a significant role in industrial IoT and manufacturing applications, where real-time data processing and decision-making are crucial for optimizing processes, reducing downtime, and improving overall efficiency. By implementing AI at the edge, industrial systems can become more intelligent, adaptive, and autonomous, enabling new levels of productivity and performance.

Here are some key applications of Edge AI in the industrial IoT and manufacturing sector.

Predictive Maintenance

Predictive maintenance involves monitoring the condition of equipment and predicting potential failures before they occur. By analyzing sensor data from machines and applying AI algorithms, Edge AI systems can identify patterns and anomalies that indicate potential issues, allowing maintenance to be scheduled proactively and reducing the risk of unexpected downtime.
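As a minimal sketch of the anomaly detection step, the class below flags sensor readings that deviate strongly from a rolling window of recent normal values, using a z-score test. This is one simple statistical approach chosen for illustration; production systems often use learned models instead. The class name, window size, and threshold are assumptions.

```python
from collections import deque
from math import sqrt


class RollingAnomalyDetector:
    """Flags readings more than `threshold` standard deviations from the recent mean."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.values = deque(maxlen=window)   # fixed memory: old readings drop out
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, x):
        """Process one reading; return True if it looks anomalous."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = sqrt(var)
            if std > 0 and abs(x - mean) > self.threshold * std:
                is_anomaly = True
        # Keep anomalies out of the baseline so they do not mask later ones.
        if not is_anomaly:
            self.values.append(x)
        return is_anomaly


detector = RollingAnomalyDetector(window=20, threshold=3.0, min_samples=10)
# Hypothetical vibration readings: a steady pattern followed by a spike.
readings = [1.0 if i % 2 == 0 else 1.2 for i in range(20)] + [5.0]
flags = [detector.update(r) for r in readings]
print(flags[-1])  # True: the spike is flagged
```

Because the detector keeps only a short window of values, it can run continuously on the device next to the machine and report only the flagged events upstream.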

Quality Control

Another important application of Edge AI in manufacturing is quality control, where AI algorithms can be used to analyze images, sensor data, and other inputs to detect defects or deviations from the desired specifications. By processing this data locally on edge devices, manufacturers can achieve real-time feedback and make adjustments to the production process, ensuring consistent product quality and reducing waste.

Process Optimization

By analyzing data from sensors and other sources, AI algorithms can identify inefficiencies and bottlenecks in the production process, enabling manufacturers to optimize their operations and reduce costs. Edge AI can also be used to control and coordinate the operation of multiple machines and systems, ensuring that they work together efficiently and effectively.

In addition to these specific applications, Edge AI can also enable new levels of automation and autonomy in industrial IoT and manufacturing systems. By integrating AI algorithms with robotics, vision systems, and other technologies, manufacturers can create more flexible and adaptable production lines that can respond to changing demands and requirements, paving the way for the next generation of smart factories and Industry 4.0.

Healthcare and Wearables

Edge AI has the potential to revolutionize the healthcare industry and wearable devices by enabling real-time monitoring, diagnostics, and personalized treatment plans. By processing and analyzing data directly on the device, Edge AI systems can provide immediate feedback and insights, improving patient outcomes and enhancing the overall healthcare experience.

Let’s discuss these key applications.

Healthcare Applications

In healthcare applications, Edge AI can be used for tasks such as patient monitoring, diagnostics, and personalized medicine. For example, Edge AI-enabled devices can continuously monitor vital signs, such as heart rate, blood pressure, and oxygen saturation, and alert healthcare providers to any abnormalities or potential issues. Additionally, Edge AI systems can analyze medical images, such as X-rays or MRIs, to assist in the diagnosis of diseases and conditions, reducing the workload on medical professionals and improving the accuracy of diagnoses.
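The alerting logic in the vital-sign example can be sketched as a threshold check that requires several consecutive out-of-range samples before raising an alert, which suppresses one-off sensor glitches. The function name, the heart-rate limits, and the sample values below are hypothetical placeholders for illustration and are not clinical thresholds.

```python
def sustained_alert(samples, low, high, min_consecutive=3):
    """Return the index at which an out-of-range run reaches `min_consecutive`, else None."""
    run = 0
    for i, value in enumerate(samples):
        if value < low or value > high:
            run += 1
            if run >= min_consecutive:
                return i
        else:
            run = 0   # a normal reading resets the count
    return None


# Hypothetical heart-rate samples in beats per minute.
heart_rate = [72, 75, 130, 74, 128, 131, 135, 90]
print(sustained_alert(heart_rate, low=50, high=120))  # 6
```

The single spike at index 2 is ignored, while the run of three high readings triggers the alert at index 6.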

Wearable Devices

Wearable devices, such as smartwatches and fitness trackers, can also benefit from Edge AI technology. By incorporating AI algorithms directly into the device, wearables can provide real-time feedback on various health and fitness metrics, such as activity levels, sleep patterns, and stress levels. This information can be used to develop personalized recommendations and interventions, helping users improve their overall health and well-being.

Some examples of Edge AI-enabled healthcare and wearable devices include smartwatches with built-in ECG monitors, AI-powered hearing aids, and wearable glucose monitors for diabetes management. Because these devices analyze data locally, they can deliver insights and recommendations in real time.

Further Reading: Overview of Industries and Application Use cases

Conclusion

Throughout this guide, we have explored the engineering principles and applications of Edge AI, covering the fundamental concepts, hardware components, software tools, and real-world applications. By understanding the unique requirements and constraints of edge computing environments, engineers can design and develop efficient and effective Edge AI solutions that deliver the desired performance and functionality.

Frequently Asked Questions

1. What are the main components of an Edge AI system?

An Edge AI system typically consists of hardware components, such as microcontrollers, microprocessors, and AI accelerators, as well as software components, such as AI frameworks, libraries, and operating systems. These components work together to execute AI algorithms and process data on edge devices.

2. What are some common Edge AI applications?

Edge AI has a wide range of applications across various industries, including smart home automation, industrial IoT, healthcare, and wearable devices. By implementing AI at the edge, these systems can become more intelligent, adaptive, and autonomous, paving the way for new applications and use cases.

3. What are the challenges of developing and deploying Edge AI systems?

Developing and deploying Edge AI systems can be challenging due to the resource constraints of edge devices, such as limited processing power, memory, and energy. Engineers must carefully select and optimize the hardware and software components of the system to meet these constraints while still delivering the desired performance and functionality. Additionally, Edge AI systems must be compatible with a diverse range of hardware components and software tools, which can add complexity to the development process.
