TinyML: What is It and Why Does It Matter?

ABI Research forecasts that TinyML unit volumes will explode from 15 million units in 2020 to 2.5 billion units in 2030. Find out about this machine-learning technique now.

Learn about the advantages of TinyML

This article was first published on macrofab.com.

As a machine learning technique, Tiny Machine Learning (TinyML) combines reduced, optimized machine learning applications with the hardware, system, and software components they require. These applications run in real time at the edge, processing data with minimal energy and cost.

Its small footprint and modest computational requirements make TinyML ideally suited to environmental sensors, but its use cases are wide and varied. Sensed data is usually processed either at the edge, where the sensing takes place, or in the cloud, where more powerful computation is available but the data must first be transmitted. To handle the massive data volumes this produces, processing needs to be intelligently partitioned between edge and cloud. This is where TinyML shines.
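To make the partitioning idea concrete, here is a minimal Python sketch of a hypothetical sensor pipeline. The function `tiny_model_is_anomalous` is an illustrative stand-in for an on-device model (it is only a threshold rule), and `select_for_cloud` keeps just the readings that model flags for transmission. All names, the threshold, and the sample data are assumptions for illustration, not part of any TinyML library.

```python
# Hypothetical edge/cloud split: a stand-in "tiny model" runs on the device,
# and only the readings it flags are sent upstream for heavier processing.
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    timestamp: int
    value: float


def tiny_model_is_anomalous(reading: Reading, threshold: float = 3.0) -> bool:
    """Placeholder for an on-device TinyML classifier (here just a threshold rule)."""
    return abs(reading.value) > threshold


def select_for_cloud(readings: List[Reading]) -> List[Reading]:
    """Decide locally; return only the readings worth transmitting to the cloud."""
    return [r for r in readings if tiny_model_is_anomalous(r)]


if __name__ == "__main__":
    samples = [Reading(t, v) for t, v in enumerate([0.1, 0.4, 5.2, -0.3, -4.1, 0.2])]
    uplink = select_for_cloud(samples)
    print(f"sent {len(uplink)} of {len(samples)} readings")  # sent 2 of 6 readings
```

In a real deployment the threshold rule would be replaced by an actual model, and where to draw the line between local and cloud processing is a design decision driven by bandwidth, latency, and power budgets.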

What is TinyML?

Conventional machine learning applications usually run on large, power-hungry processors, with little consideration for memory requirements or transmission rates. Rather than focusing on efficiency, the goal is to process data as quickly as possible to reduce latency. However, reducing latency this way can increase power consumption and bandwidth demands.

Conventional machine learning is also usually customized to the requirements of a specific target application. As a result, sharing algorithms between platforms can be challenging, since their architectures are likely to differ. TinyML, by contrast, is designed to support a wide range of tasks.

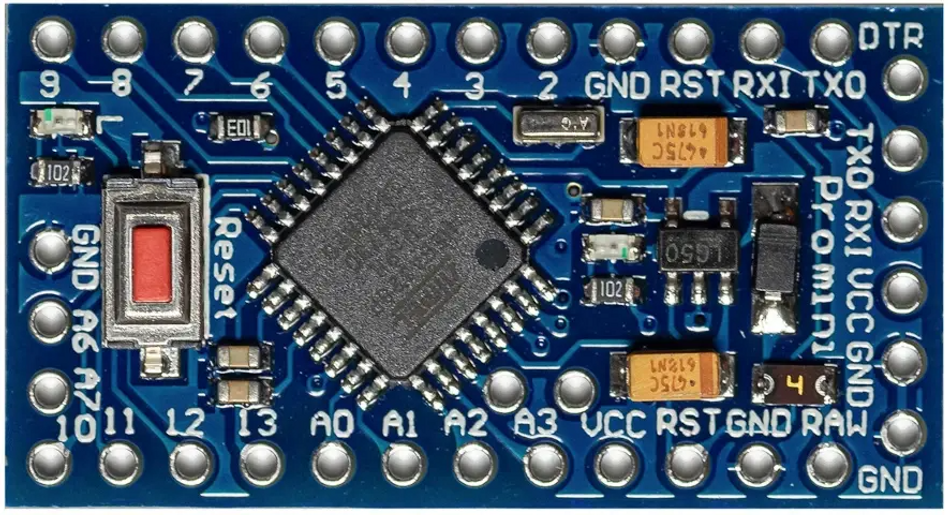

TinyML is a broad, fast-growing field of machine learning technologies and applications spanning hardware, algorithms, and software. Together, these developments make it possible to run sensor data analytics on the device itself at very low power. Often, TinyML can be integrated into a PCBA design using hardware that is already familiar.

TinyML makes it possible to develop always-on applications, especially for battery-operated sensors. By bringing embedded machine learning to hardware with a relatively small form factor, it ushers in a new era of development.

Advantages of TinyML

Moving the initial processing closer to the source of the data yields several architectural improvements. Each contributes to the value of an IoT solution, and they are closely interrelated:

- Latency–Performing computation close to the data source minimizes feedback latency.

- Connection independence–Removing the need for a constant network connection opens up a wide range of new deployment options.

- Energy savings–TinyML enables targeted solutions with very low power consumption. Even microcontrollers with modest capabilities provide the horsepower needed for the computations while consuming very little power.

- Data security–Keeping data and processing local raises the barrier to hacking at the sensor level.

- Size–Many available hardware implementations are about the size of a small stick of gum, measuring roughly 45 × 18 mm.
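The energy advantage matters most for always-on, battery-powered sensors, where a device can sleep most of the time and wake briefly to run inference. The sketch below estimates battery life from a duty cycle using a time-weighted average current. Every figure in it (10 mA active, 0.01 mA asleep, a 1% duty cycle, a 220 mAh cell) is a hypothetical placeholder, not a measurement of any particular board.

```python
# Idealized duty-cycle battery estimate. All figures are hypothetical
# placeholders; real values depend on the MCU, sensor, and model.


def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current for a device active a fraction of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Ignores self-discharge, temperature effects, and voltage cutoff."""
    return capacity_mah / avg_ma


if __name__ == "__main__":
    avg = average_current_ma(active_ma=10.0, sleep_ma=0.01, duty_cycle=0.01)
    hours = battery_life_hours(capacity_mah=220.0, avg_ma=avg)
    print(f"average current: {avg:.4f} mA, runtime: {hours:.0f} h (~{hours / 24:.0f} days)")
```

The takeaway is structural rather than numeric: because the active phase dominates the average, shrinking the time and current needed per inference is what stretches battery life.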

The Role of TensorFlow Lite

Development tools for TinyML include not only hardware but also software platforms that let developers build algorithms from common building blocks. TensorFlow Lite is one example of a tool for developing code that runs in a TinyML environment.

TensorFlow Lite is a machine learning framework for mobile and embedded devices, with a variant, TensorFlow Lite for Microcontrollers, designed specifically for microcontrollers. The library lets developers deploy models on mobile and edge devices, including microcontroller-based ones. Existing pre-trained models can be used as-is or retrained with new edge data.

For embedded systems that run on batteries and must adhere to strict energy budgets, TensorFlow Lite is an excellent option. Among other things, the library offers pre-trained machine learning models for:

- Smart replies–the model suggests conversational replies similar to those generated by an AI chatbot.

- Object detection–the model identifies multiple objects in an image, covering 80 different object classes.

- Recommendations–the model provides personalized recommendations based on user behavior.

Alternatively, developers can use their own models. The TensorFlow Lite converter compresses the chosen TensorFlow model into a FlatBuffer file, which is then loaded onto the edge device. Processor efficiency can then be optimized by choosing floating-point or integer math, whichever best suits the data.
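Integer math on microcontrollers usually means 8-bit quantization. As a minimal sketch, assuming the affine mapping `real_value = scale * (q - zero_point)` described in TensorFlow Lite's quantization specification, the plain-Python code below picks a scale and zero point for a list of values, quantizes them to int8, and computes a dot product with integer arithmetic followed by a single floating-point rescale. The helper names are hypothetical; this does not call the TensorFlow Lite converter, and production kernels also handle per-channel scales and fixed-point requantization.

```python
# Minimal sketch of affine int8 quantization using the mapping
#   real_value = scale * (q - zero_point)
# Plain Python for illustration; this is not the TensorFlow Lite converter API.
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # int8 range


def choose_params(xs: Sequence[float]) -> Tuple[float, int]:
    """Map [min, max] (widened to include 0.0) onto the int8 range."""
    lo, hi = min(min(xs), 0.0), max(max(xs), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # fall back to 1.0 if all values are 0
    zero_point = round(QMIN - lo / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Round each value to the nearest int8 step and clamp to [QMIN, QMAX]."""
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs: Sequence[int], scale: float, zero_point: int) -> List[float]:
    return [scale * (q - zero_point) for q in qs]


def int8_dot(qa, za, sa, qb, zb, sb) -> float:
    """Accumulate in integers (as an int32 accumulator would), rescale once."""
    acc = sum((a - za) * (b - zb) for a, b in zip(qa, qb))
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.5, -1.2, 0.8, 0.05]
    inputs = [1.0, 0.3, -0.7, 2.0]
    sw, zw = choose_params(weights)
    sx, zx = choose_params(inputs)
    qw, qx = quantize(weights, sw, zw), quantize(inputs, sx, zx)
    exact = sum(w * x for w, x in zip(weights, inputs))
    approx = int8_dot(qw, zw, sw, qx, zx, sx)
    print(f"int8 weights: {qw}")
    print(f"float dot: {exact:.4f}, int8 dot: {approx:.4f}")
```

Dequantizing recovers each value to within about half a quantization step, and the integer dot product lands close to the floating-point result. That small loss of precision is the price of cheaper integer arithmetic on the microcontroller.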

Growth Expectations for TinyML

With TinyML's growing adoption, expectations for growth are high. ABI Research forecasts that TinyML unit volumes will explode from 15 million units in 2020 to 2.5 billion units in 2030. That is a compound annual growth rate of roughly 67% sustained for 10 years, or about a 167-fold increase over the decade.
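The growth rate can be checked directly from the two forecast figures. A compound annual growth rate r satisfies start × (1 + r)^years = end, so r = (end / start)^(1 / years) - 1. The short helper below is a plain arithmetic check applied to the 2020 and 2030 volumes, not ABI Research's methodology.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Constant yearly rate r such that start * (1 + r) ** years == end."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    rate = cagr(start=15e6, end=2.5e9, years=10)  # forecast figures quoted above
    print(f"growth factor over the decade: {2.5e9 / 15e6:.1f}x")
    print(f"CAGR: {rate:.1%}")
```

It reports a CAGR of about 66.8%, consistent with volumes multiplying roughly 167-fold over ten years.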

However, even if those projections prove accurate, TinyML will still represent less than 10% of all IoT devices despite its rapid growth. Nevertheless, its widespread adoption makes it likely to feature in many future projects. To sharpen your design skills now, explore how TinyML can be integrated with familiar devices.

Conclusion

TinyML market growth appears inevitable, and future projects are likely to be shaped by TinyML, IoT, and adjacent markets. Start your TinyML development with manufacturing in mind to maximize your project's chances of success.

The right TinyML manufacturing solution starts with a contract manufacturing (CM) partner who helps you understand the requirements and available solutions. Planning for manufacturing at the beginning of the development process can mitigate release-to-production concerns.