What is an Edge Data Center: A Comprehensive Guide for Engineering Professionals

An edge data center is essentially a small data center placed close to the enterprises and devices it serves. That proximity cuts latency and eases other limitations of centralized facilities, giving access to data in near real time. In this article, we will delve into its core concepts, advancements, operational challenges, and real-world applications.

Introduction

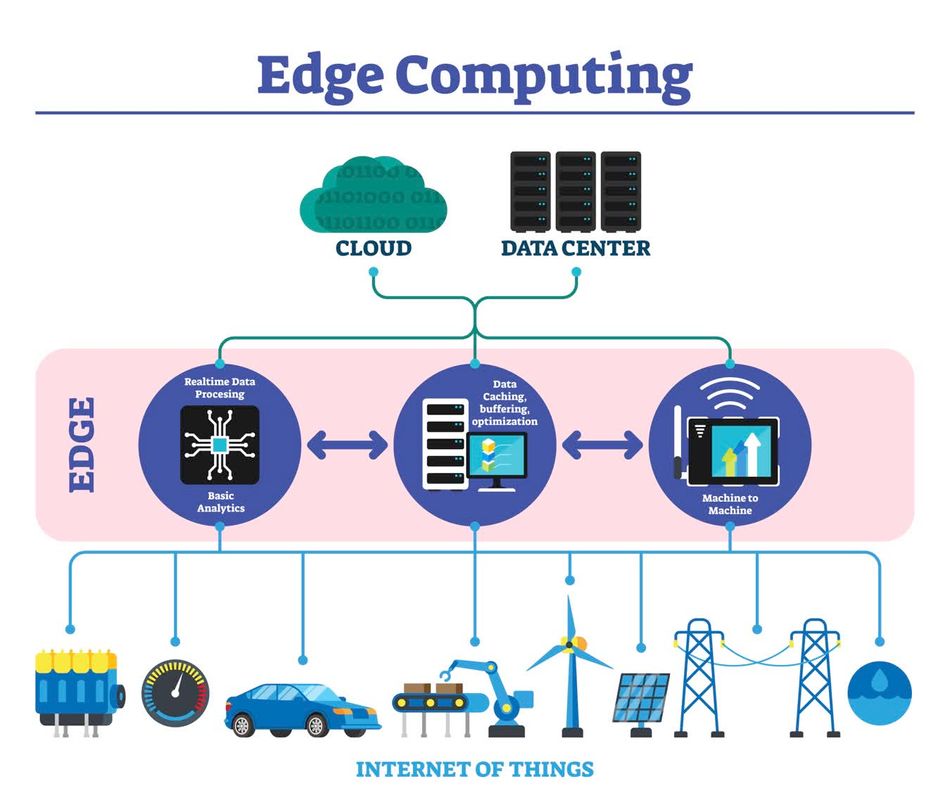

Edge data centers are localized data centers that bring computation and data storage closer to the location where data is generated and consumed. Unlike traditional centralized data centers [1][2], which often suffer from latency and bandwidth limitations, edge data centers provide significant improvements by processing data near the source. Edge data centers are becoming commonplace in the colocation industry.

Traditional data centers were centralized to optimize resources and simplify management. However, the rapid growth of IoT devices and the advent of 5G technology have created an urgent need for faster, localized data processing. Edge data centers meet this need by reducing latency and bandwidth usage, which is necessary for applications requiring real-time data processing, such as autonomous vehicles and industrial automation. The footprint of these data centers is expanding rapidly.

Edge data centers play a crucial role in today’s tech-driven world. They manage the enormous amounts of data that modern applications and devices generate. By speeding up data processing and response times, they enable advances across many engineering fields. They are also instrumental in developing smart cities and improving the performance of robotics and AI systems. Real-world applications, such as real-time traffic management in smart cities and predictive maintenance in manufacturing, show how edge data centers transform modern engineering practices.

Core Concepts of Edge Data Centers

What is an Edge Data Center?

Edge data centers are local facilities that bring computation and storage closer to where data is generated. This proximity reduces latency and boosts processing speeds, making them well suited to real-time applications in which data must often be processed within milliseconds.

Advancements like 5G networks, Content Delivery Networks (CDNs), Network Functions Virtualization (NFV), and Software-Defined Networking (SDN) have made edge data centers possible. Standards such as ETSI's Multi-access Edge Computing (MEC) framework provide guidelines to ensure these solutions work efficiently and seamlessly together. This makes it easier to interconnect edge data centers with one another.

Real-world examples highlight the importance of edge data centers. In healthcare [3], edge data centers support real-time patient monitoring and data analysis, leading to faster and more accurate medical responses. These applications demonstrate how edge data centers are a critical component in modern technology landscapes.

Key Components and Architecture

Edge data centers have several vital components that bring processing power closer to the data source. The main components include:

- Micro Data Centers (MDCs)

- Edge Routers

- Network Connectivity

Each of these plays a critical role in delivering a reliable edge computing experience.

- Micro Data Centers: Micro Data Centers (MDCs) are small-scale, modular edge data centers that provide localized processing and storage capabilities. These facilities are strategically placed near data sources to minimize latency and improve processing speeds. They are often designed to operate autonomously and can be easily deployed in various environments, from urban areas to remote locations.

Edge data centers are often located near telecommunications infrastructure. For instance, they can be found near cell towers to handle the vast amount of data traffic generated by mobile users. Cloud services are another area where edge data centers shine.

For example, retail chains use Micro Data Centers (MDCs) [4] to process sales data in real time, which improves the customer experience through faster transaction processing.

- Edge Routers: Edge routers are another vital component that manages data traffic between local devices and the broader network. These routers are optimized for high-speed data transfer and low-latency communication, ensuring that data can be processed quickly and efficiently at the edge. They often incorporate advanced features such as Quality of Service (QoS) and traffic prioritization to handle the varying demands of different applications. For instance, in autonomous vehicles, edge routers facilitate rapid data exchange between the vehicle’s sensors and processing units, which helps in real-time decision-making.

- Network Connectivity: Network connectivity is crucial for the seamless operation of edge data centers. High-bandwidth connections, often leveraging fiber optics and 5G technology, are used to link edge data centers with central data centers and other edge locations. This connectivity ensures that data can be transferred swiftly and securely, supporting real-time processing and analysis. Standards like the IEEE 802.3bs for Ethernet [5] and 3GPP's 5G specifications provide the necessary frameworks to achieve high-speed, reliable connections.

The architecture of edge data centers supports a distributed computing model where processing power is decentralized and brought closer to the end users. This architecture typically includes a mix of hardware and software solutions that work together to provide a cohesive system. Virtualization technologies, such as Network Functions Virtualization (NFV) and Software-Defined Networking (SDN), are central to this architecture, allowing for scalable deployment of network functions and services.
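
The traffic prioritization that edge routers apply can be illustrated with a small strict-priority queue. This is only a minimal sketch in Python, not how any particular router implements QoS; the traffic class names, priority values, and payload strings are assumptions made for the example.

```python
import heapq
import itertools

# Hypothetical traffic classes; a lower number is served first.
PRIORITY = {"control": 0, "video": 1, "bulk": 2}


class PriorityScheduler:
    """Strict-priority packet queue: always dequeue the most urgent class first."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, traffic_class, payload):
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._order), payload))

    def dequeue(self):
        _, _, payload = heapq.heappop(self._heap)
        return payload

    def __len__(self):
        return len(self._heap)


scheduler = PriorityScheduler()
scheduler.enqueue("bulk", "firmware-chunk")
scheduler.enqueue("control", "brake-command")
scheduler.enqueue("video", "camera-frame")

# Drain the queue: control traffic leaves first even though it arrived second.
drained = [scheduler.dequeue() for _ in range(len(scheduler))]
```

Strict priority is the simplest policy to show; in practice it is usually combined with weighted or rate-limited scheduling so that low-priority traffic is not starved.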

The Need for Edge Data Centers

Edge data centers have become essential in modern engineering for several reasons. As the need for real-time data processing and low-latency applications grows, traditional centralized data centers struggle to meet these demands efficiently. This is where edge data centers come into play, as they can be scaled out rapidly to meet increasing demand.

- Latency: Latency issues drive the adoption of edge data centers. When data travels long distances to centralized data centers, it causes significant delays, which are unacceptable for applications needing immediate responses. Edge data centers drastically reduce latency by being closer to the data source, ensuring faster data processing and improved performance.

- Bandwidth: Bandwidth concerns also drive the adoption of edge data centers. The vast amount of data generated by IoT devices, autonomous systems, and other modern applications can overwhelm network bandwidth if sent to centralized data centers for processing. By handling data locally, edge data centers reduce the burden on network infrastructure, optimize bandwidth usage, and minimize congestion.
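
The effect of distance on latency can be estimated with simple arithmetic. The sketch below computes round-trip propagation delay only, assuming signals travel through optical fiber at roughly two-thirds of the speed of light; the two distances are hypothetical, and real paths add routing, queuing, and processing delay on top.

```python
# Light in optical fiber travels at roughly two-thirds of its speed in vacuum.
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate value


def round_trip_ms(distance_km):
    """Round-trip propagation delay in milliseconds over a fiber path."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


# Hypothetical distances: a distant central site versus a nearby edge site.
central_ms = round_trip_ms(1500)
edge_ms = round_trip_ms(20)
```

Under these assumptions the distant site costs about 15 ms per round trip before any processing happens, while the nearby site costs about 0.2 ms, which is why placement matters for applications with tight response budgets.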

Edge data centers provide the necessary infrastructure for enabling real-time decision-making. Below are the specific use cases and factors that drive the necessity of edge data centers:

- Autonomous Vehicles: Real-time data processing for sensor data and decision-making.

- Smart Cities: Localized processing for traffic management, energy optimization, and public safety.

- Industrial Automation: Real-time monitoring and control of manufacturing processes.

- Healthcare: Real-time patient monitoring and rapid data analysis for improved medical responses.

- Gaming and AR/VR: Low-latency data processing for immersive user experiences.

Technological advancements such as the proliferation of 5G networks, improvements in computing hardware, and better data processing algorithms have further paved the way for edge data centers. These technologies provide the necessary infrastructure and capabilities to handle the demands of modern applications, making edge data centers a must for contemporary engineering solutions.

Cutting-Edge Advancements in Edge Data Centers

Innovations in Hardware and Software

Recent advancements in hardware and software have significantly enhanced the capabilities of edge data centers, enabling them to handle increasingly complex and demanding applications. This development has fostered an ecosystem where edge data centers, through the integration of innovative hardware and software, can provide sustainable and efficient data center solutions. Historically, the development of processing units has followed the exponential growth of computational demands, driven by Moore’s Law and the need for higher efficiency and performance.

In terms of hardware, edge data centers have integrated powerful processing units such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs). These components are crucial for handling intensive computational tasks required by modern applications.

NVIDIA’s A100 GPU, built on the Ampere architecture, offers exceptional performance with 6912 CUDA cores and 432 Tensor cores, making it ideal for deep learning and artificial intelligence workloads. This GPU exemplifies the transition from general-purpose computing to specialized hardware optimized for specific tasks.

Similarly, Google's TPU v4, designed specifically for machine learning, delivers up to 275 teraflops of performance, significantly accelerating the training and inference of AI models. These advancements have enabled real-time data processing and complex computational tasks at the edge, reducing dependency on centralized data centers.

On the software side, advancements have been equally impressive. Frameworks such as Kubernetes and OpenStack have revolutionized the management and orchestration of edge computing resources [6]. Kubernetes, an open-source container orchestration platform, allows for automated deployment, scaling, and operation of application containers across clusters of hosts. This capability ensures that edge data centers can efficiently manage large-scale, distributed applications with minimal human intervention. OpenStack, another open-source platform, provides a suite of software tools for building and managing cloud computing environments, offering flexible infrastructure as a service (IaaS) solutions that can be tailored to the specific needs of edge deployments. These frameworks support the dynamic nature of edge computing, where resources must be rapidly reallocated based on changing demands and conditions.
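
To make the orchestration idea concrete, the sketch below builds a Kubernetes Deployment manifest that restricts pods to nodes carrying an edge label. Kubernetes accepts manifests in JSON as well as YAML, so the example stays in Python and serializes a dictionary. The application name, image, and the `example.com/site-tier` node label are hypothetical; a real cluster would need nodes labeled to match.

```python
import json


def edge_deployment(name, image, replicas, site_label):
    """Build a Deployment manifest that schedules pods only on labeled edge nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Hypothetical node label; nodes must be labeled to match it.
                    "nodeSelector": {"example.com/site-tier": site_label},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


manifest = edge_deployment(
    "sensor-ingest", "registry.example.com/sensor-ingest:1.0", replicas=2, site_label="edge"
)
manifest_json = json.dumps(manifest, indent=2)
```

The resulting JSON could be written to a file and applied with the usual cluster tooling. Keeping the replica count above one lets Kubernetes reschedule a pod on another edge node if one fails.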

These advancements are complemented by enhanced performance metrics that highlight the efficiency and capability of edge data center hardware and software. For example, the A100 GPU offers a peak of 19.5 teraflops for FP32 (and for FP64 when using its Tensor Cores) and 312 teraflops for FP16 Tensor Core operations, showcasing its ability to handle diverse computational tasks. Kubernetes offers high availability, scalability, and self-healing capabilities, ensuring that applications run smoothly even in the face of hardware failures or other issues.

Real-world applications further demonstrate the importance of these innovations. For instance, in autonomous driving, edge data centers equipped with advanced GPUs and TPUs process vast amounts of sensor data in real time, supporting safe and accurate navigation. In healthcare, edge data centers leverage Kubernetes to manage patient data and run complex analytics that support diagnostics and treatment plans, all while maintaining compliance with stringent regulatory standards.

The integration of these advanced hardware components and software frameworks not only boosts the performance of edge data centers but also enhances their scalability and reliability. This makes them better equipped to meet the demands of modern, data-intensive applications and drive innovations across various industries.

Integration with 5G Technology

The combination of edge data centers and 5G networks has the potential to transform data processing and transmission [7]. Integrating the two allows for greater speed, efficiency, and reliability in handling data-intensive applications. The evolution from 3G to 4G brought significant improvements in data rates and connectivity. 5G goes considerably further, offering ultra-low latency, massive bandwidth, and the capability to connect billions of devices.

5G networks, with their ultra-low latency and high bandwidth capabilities, are designed to support a massive number of connected devices. When combined with edge data centers, these networks can process and analyze data locally, drastically reducing the time it takes to transmit data to central data centers. This integration is particularly beneficial for applications that require real-time processing, such as autonomous vehicles, remote surgery, and augmented reality.

The integration mechanisms between edge data centers and 5G networks involve several advanced technologies. Network slicing is one such technology, which allows the creation of multiple virtual networks on a single physical 5G network infrastructure. Each slice can be tailored to meet the specific requirements of different applications, ensuring optimal performance and resource utilization. For instance, a network slice dedicated to autonomous vehicles can be configured to prioritize low latency and high reliability, providing the necessary conditions for safe and efficient operation.
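
The idea of matching an application to a slice can be sketched as a simple lookup. The example below is a conceptual illustration only, not a 3GPP interface: the slice names, latency and bandwidth figures, and the `select_slice` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    max_latency_ms: float      # latency the slice is configured to guarantee
    min_bandwidth_mbps: float  # bandwidth the slice is configured to guarantee


# Hypothetical slices sharing one physical network; figures are illustrative only.
SLICES = [
    Slice("vehicle-control", max_latency_ms=5, min_bandwidth_mbps=10),
    Slice("video-streaming", max_latency_ms=50, min_bandwidth_mbps=100),
    Slice("sensor-telemetry", max_latency_ms=500, min_bandwidth_mbps=1),
]


def select_slice(required_latency_ms, required_bandwidth_mbps):
    """Return the least strict slice that still satisfies both requirements."""
    candidates = [
        s for s in SLICES
        if s.max_latency_ms <= required_latency_ms
        and s.min_bandwidth_mbps >= required_bandwidth_mbps
    ]
    if not candidates:
        return None
    # Prefer the loosest latency guarantee so strict slices stay free for critical traffic.
    return max(candidates, key=lambda s: s.max_latency_ms)
```

For example, `select_slice(10, 5)` returns the `vehicle-control` slice, while a request that no slice can satisfy, such as `select_slice(1, 1)`, returns `None`.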

Edge orchestration is another critical mechanism that facilitates the seamless integration of edge data centers with 5G networks. This involves the dynamic management of computing resources at the network edge, ensuring that workloads are efficiently distributed across available resources. Frameworks such as ETSI MEC (Multi-access Edge Computing) provide a reference architecture for deploying and managing applications in a 5G-enabled edge environment. Platforms built on such frameworks enable service providers to deliver enhanced services with lower latency and improved quality of service.
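
A placement decision of this kind can be sketched as a greedy choice: pick the lowest-latency site that still has capacity. This is a simplified illustration rather than the algorithm of any specific orchestrator; the site names, latency figures, and CPU counts are assumptions.

```python
from dataclasses import dataclass


@dataclass
class EdgeSite:
    name: str
    latency_ms: float  # measured latency from the client to this site
    free_cpu: float    # CPU cores currently unallocated


def place_workload(sites, cpu_needed, latency_budget_ms):
    """Pick the lowest-latency site with enough free CPU, and reserve the capacity."""
    eligible = [
        s for s in sites
        if s.free_cpu >= cpu_needed and s.latency_ms <= latency_budget_ms
    ]
    if not eligible:
        return None  # caller could fall back to a central data center
    best = min(eligible, key=lambda s: s.latency_ms)
    best.free_cpu -= cpu_needed
    return best.name


# Hypothetical sites and figures, for illustration only.
sites = [
    EdgeSite("cell-tower-a", latency_ms=3, free_cpu=2),
    EdgeSite("metro-pop-b", latency_ms=8, free_cpu=16),
]
first = place_workload(sites, cpu_needed=2, latency_budget_ms=10)
second = place_workload(sites, cpu_needed=2, latency_budget_ms=10)
```

The first workload lands on the closest site and exhausts its capacity, so the second is placed on the next-closest site. Production schedulers weigh many more factors, such as memory, accelerators, data locality, and cost.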

Real-world applications further demonstrate the benefits of integrating edge data centers with 5G technology. In smart cities, for example, this integration supports real-time traffic management and public safety systems, leading to more efficient and safer urban environments. In the healthcare sector, 5G-enabled edge data centers allow for advanced telemedicine services, where high-resolution imaging and real-time data analysis are critical for accurate diagnostics and treatment.

The benefits of integrating edge data centers with 5G technology are numerous. It enables faster data processing and decision-making by reducing latency and bandwidth usage. This is crucial for applications like smart manufacturing, where real-time data analysis can lead to significant improvements in productivity and efficiency. Additionally, the enhanced reliability and scalability of 5G networks, coupled with the localized processing capabilities of edge data centers, create a robust infrastructure for supporting the growing demands of the Internet of Things (IoT).

Enhanced Data Security Measures

Ensuring data security is essential in edge data centers, given their distributed nature and the sensitivity of the data they handle. The latest security measures implemented in these centers are designed to protect against a wide range of threats, from cyber-attacks to data breaches.

One of the advanced security technologies employed in edge data centers is the use of secure enclaves. Secure enclaves provide a protected area of execution within a processor, ensuring that sensitive data and code are isolated from the rest of the system. This isolation helps prevent unauthorized access and tampering, making it a critical component for securing edge computing environments. Intel’s Software Guard Extensions (SGX) and ARM’s TrustZone are prominent examples of technologies that create secure enclaves, enabling secure data processing and storage.

The adoption of zero-trust security models is another significant advancement in securing edge data centers. Unlike traditional security models that rely on perimeter defenses, zero-trust architecture operates on the principle that no entity, whether inside or outside the network, should be trusted by default. This model requires continuous verification of the identity and integrity of devices, users, and applications attempting to access resources. Implementing zero-trust involves a combination of techniques, including multi-factor authentication (MFA), micro-segmentation, and continuous monitoring, to ensure robust security across the entire network.
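
The "verify every request" principle can be sketched as a policy function that checks identity, device state, and segment on each call. The field names, segment names, and resources below are hypothetical, and a real deployment would delegate these checks to an identity provider and a policy engine rather than an in-memory table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    mfa_verified: bool      # multi-factor authentication passed for this session
    device_compliant: bool  # device passed its posture/integrity check
    source_segment: str     # micro-segment the request originates from
    resource: str


# Hypothetical policy: which segments may reach which resources.
ALLOWED_SEGMENTS = {
    "plc-controller": {"ot-control"},
    "telemetry-db": {"ot-control", "analytics"},
}


def authorize(request):
    """Evaluate every request on its own; network location alone grants nothing."""
    if not request.mfa_verified:
        return False, "mfa required"
    if not request.device_compliant:
        return False, "device not compliant"
    if request.source_segment not in ALLOWED_SEGMENTS.get(request.resource, set()):
        return False, "segment not permitted"
    return True, "allowed"
```

Because unknown resources map to an empty set of segments, the function denies by default, which mirrors the zero-trust stance that access must be explicitly granted.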

Advanced encryption techniques are also pivotal in protecting data within edge data centers. Data encryption ensures that even if data is intercepted, it remains unintelligible to unauthorized parties. Techniques such as homomorphic encryption allow computations to be performed on encrypted data without needing to decrypt it first, thereby maintaining data privacy throughout the processing lifecycle. Furthermore, transport layer security (TLS) and end-to-end encryption (E2EE) are employed to secure data in transit, while storage-level encryption protects data at rest, providing comprehensive protection against data breaches.
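
Computing on encrypted data can be demonstrated with a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields a ciphertext of the sum. This scheme is chosen here purely for illustration and supports addition only, unlike fully homomorphic schemes. The primes below are far too small to be secure, and the code is a sketch, not something to deploy.

```python
import math
import secrets

# Toy primes for illustration only; real keys use primes of 1024 bits or more.
P, Q = 1789, 1861
N = P * Q
N_SQ = N * N
G = N + 1
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p-1, q-1)
MU = pow(LAMBDA, -1, N)  # modular inverse (requires Python 3.8+)


def encrypt(message):
    """Encrypt an integer 0 <= message < N under the public key (N, G)."""
    while True:
        r = secrets.randbelow(N - 1) + 1  # random value in [1, N-1]
        if math.gcd(r, N) == 1:
            break
    return pow(G, message, N_SQ) * pow(r, N, N_SQ) % N_SQ


def decrypt(ciphertext):
    """Recover the plaintext using the private values LAMBDA and MU."""
    u = pow(ciphertext, LAMBDA, N_SQ)
    return (u - 1) // N * MU % N


def add_encrypted(c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (modulo N)."""
    return c1 * c2 % N_SQ


# An untrusted node can sum two readings without ever seeing them in the clear.
total = decrypt(add_encrypted(encrypt(20), encrypt(22)))
```

Here `total` equals 42 even though the addition was performed on ciphertexts. Encryption is randomized, so encrypting the same value twice generally gives different ciphertexts.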

Incorporating these cutting-edge security measures helps safeguard sensitive information and maintain the integrity and confidentiality of data processed at the edge. By leveraging technologies like secure enclaves, zero-trust security models, and advanced encryption techniques, edge data centers can provide a robust security framework that meets the demands of modern, data-driven applications.

Real-World Applications in Engineering

Industrial Automation and Control Systems

Edge data centers play a pivotal role in modern industrial automation by facilitating real-time data processing and decision-making through their proximity to the data source. Historically, industrial automation relied on centralized data processing, which introduced delays and reduced responsiveness. The shift to edge computing addresses these challenges by bringing computational power closer to the operational environment.

In industrial automation, edge data centers are used to monitor and control manufacturing processes, robotics, and other automated systems. For example, in a smart factory, sensors and actuators collect vast amounts of data from machinery and production lines. This data needs to be processed in real time to make instantaneous decisions that optimize production efficiency and reduce downtime. Edge data centers handle this data locally, ensuring rapid processing and immediate response to changing conditions on the factory floor.

A notable example of control systems benefiting from edge computing is the implementation of predictive maintenance. Traditional maintenance approaches are often reactive, addressing issues only after they occur, which can lead to costly downtime. With edge data centers, predictive maintenance systems can analyze data from machinery sensors in real-time to detect anomalies and predict potential failures before they happen. This proactive approach not only minimizes downtime but also extends the lifespan of equipment. For instance, General Electric uses edge computing to monitor and maintain its industrial machines, leading to significant cost savings and operational efficiency.
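
Anomaly detection on machine sensor data can be sketched with a rolling z-score, which flags a reading that deviates strongly from the recent past. This is a deliberately simple statistical stand-in for the analytics described above, not the method used by any vendor named here; the window size, threshold, and vibration values are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value deviates from the preceding window by more than
    `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical vibration amplitudes (mm/s) sampled from one machine.
vibration = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.0, 4.8, 2.1, 2.0]
flagged = find_anomalies(vibration)
```

With this data only index 7, the 4.8 mm/s spike, is flagged. Running such a check at the edge means the alert can be raised locally without first shipping every raw sample to a central site.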

Technically, edge data centers significantly enhance data processing in industrial automation by reducing the latency associated with sending data to centralized cloud servers. This is crucial for applications that require split-second decisions, such as robotic assembly lines or quality control systems that need to identify defects in real time. The low latency and high bandwidth provided by edge computing ensure that data is processed and acted upon almost instantaneously, which is essential for maintaining high levels of productivity and quality in manufacturing processes.

Furthermore, edge data centers support real-time decision-making by leveraging advanced analytics and machine learning algorithms. These technologies can be deployed at the edge to analyze data as it is generated, providing insights that drive automated decision-making processes. For instance, in a chemical processing plant, edge data centers can analyze sensor data to maintain optimal reaction conditions, ensuring product consistency and safety. Siemens, a global industrial manufacturer, utilizes edge computing to optimize its production processes, resulting in improved product quality and reduced operational costs.

Overall, the integration of edge data centers in industrial automation and control systems transforms how manufacturing processes are managed. By providing localized data processing and immediate insights, edge computing enhances efficiency, reduces latency, and supports the advanced control systems that underpin modern automated industries. This technological evolution not only improves operational efficiency but also paves the way for future advancements in industrial automation.

Autonomous Vehicles and Robotics

Edge data centers are integral to the advancement of autonomous vehicles and robotics, providing the necessary infrastructure for real-time data processing and decision-making. These applications demand high-speed processing and low-latency responses, which traditional centralized data centers cannot effectively provide.

In autonomous vehicles, edge data centers process vast amounts of data generated by sensors, cameras, and LIDAR systems in real time. This data processing at the edge is crucial for making split-second decisions that ensure the safety and efficiency of the vehicle. For example, Tesla's Autopilot system uses edge computing to process data from its sensors and cameras locally, allowing the vehicle to respond to its environment in real time. This local processing reduces the latency that would occur if data had to be sent to a distant central server, enabling faster reaction times and more reliable autonomous driving.

Technical examples further illustrate how edge data centers enhance performance in autonomous systems [8][9]. One critical aspect is the use of machine learning algorithms to interpret sensor data and make driving decisions. Algorithms such as convolutional neural networks (CNNs) are deployed at the edge to process visual data from cameras, identify objects, and make navigation decisions based on real-time analysis. Additionally, reinforcement learning algorithms enable the vehicle to improve its performance over time by learning from its interactions with the environment.

Real-time processing capabilities are vital in both autonomous vehicles and robotics. In robotics, edge data centers facilitate the immediate processing of sensory input, enabling robots to perform tasks with precision and adaptability. For instance, in a manufacturing setting, robots equipped with edge computing can adapt to changes in the production line swiftly, ensuring continuous operation without delays caused by data transmission to centralized servers. This capability is essential for applications requiring high precision and real-time responsiveness, such as robotic surgery or automated warehouse operations.

Sensor fusion techniques are another key area where edge data centers enhance performance. By combining data from multiple sensors, edge computing systems can create a more accurate and comprehensive understanding of the environment. In autonomous vehicles, sensor fusion integrates inputs from cameras, LIDAR, radar, and ultrasonic sensors to provide a detailed view of the surroundings, which is crucial for safe navigation and obstacle avoidance. The edge data center processes this fused data in real time, ensuring that the vehicle can respond appropriately to dynamic driving conditions.
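
A basic form of sensor fusion is inverse-variance weighting, where each sensor's estimate is weighted by how precise it is. The sketch below assumes independent, unbiased measurements of the same quantity; the LIDAR and radar values and their variances are hypothetical.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / sum(weights)
    fused_variance = 1.0 / sum(weights)
    return fused_value, fused_variance


# Hypothetical range measurements to the same obstacle, in meters.
lidar = (25.0, 0.01)  # precise
radar = (25.6, 0.09)  # noisier
distance, variance = fuse([lidar, radar])
```

The fused distance is about 25.06 m, pulled mostly toward the more precise LIDAR reading, and the fused variance of about 0.009 is lower than either sensor achieves alone. Kalman filters apply the same weighting idea recursively over time.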

By combining advanced machine learning algorithms, robust real-time processing capabilities, and effective sensor fusion techniques, edge computing provides the essential infrastructure that supports the sophisticated functions of these technologies.

Navigating Challenges and Limitations

Scalability Issues

Scaling edge data centers presents unique challenges that differ from those of traditional centralized data centers, such as:

- Managing a distributed infrastructure: Unlike centralized data centers, edge data centers are dispersed across various locations, complicating infrastructure management. Effective tools and strategies are essential to monitor and control these distributed resources consistently.

- Network congestion: Edge data centers rely on robust connectivity, and as more devices connect, the risk of congestion increases, potentially degrading performance. This issue is especially pronounced in high-density urban areas and rural locations with limited infrastructure. Advanced networking technologies and optimization techniques are vital to mitigate congestion.

- Resource allocation: Resource allocation presents both technical and logistical challenges. Edge data centers must dynamically allocate computational, storage, and networking resources to handle varying workloads. Real-time resource management is crucial to adapt to changing demands, involving sophisticated algorithms and tools to predict patterns and allocate resources efficiently.

- Varying tools: The heterogeneity of hardware and software across different edge data centers also complicates scalability. Standardizing components and protocols can streamline operations, but compatibility issues and tailored solutions for specific local requirements pose challenges.

Maintenance and Management

Maintaining and managing edge data centers is inherently complex due to their distributed nature and the need for continuous, reliable operation. Unlike traditional centralized data centers, edge data centers are often located in remote or hard-to-reach areas, which complicates physical maintenance and monitoring.

Management Tools and Techniques:

- Remote Monitoring: Remote monitoring tools are essential for managing edge data centers. These tools provide real-time visibility into the operational status of distributed resources, enabling administrators to detect and address issues promptly. Technologies such as IoT sensors and telemetry systems collect data on temperature, power usage, and network performance, which is then analyzed to ensure optimal functioning.

- Predictive Maintenance: Predictive maintenance leverages data analytics and machine learning to anticipate and prevent equipment failures before they occur. By analyzing patterns in sensor data, predictive maintenance systems can identify signs of wear and tear, overheating, or other issues that could lead to failures. This proactive approach minimizes downtime and extends the lifespan of critical components.

- Automated Orchestration: Automated orchestration platforms, such as Kubernetes, facilitate the management of workloads across edge data centers. These platforms automate the deployment, scaling, and operation of applications, ensuring that resources are used efficiently and that services remain available even during peak demand. Automated orchestration reduces the need for manual intervention, which is particularly beneficial for managing multiple, geographically dispersed edge sites.
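
Remote monitoring of the kind described above often starts with range checks on telemetry from each site. The sketch below is a minimal illustration; the metric names, limits, and site identifiers are hypothetical, and real limits depend on the hardware and location.

```python
# Hypothetical limits; real values depend on the hardware and site.
LIMITS = {
    "inlet_temp_c": (10.0, 35.0),
    "power_kw": (0.0, 12.0),
    "packet_loss_pct": (0.0, 1.0),
}


def check_site(site, telemetry):
    """Return an alert string for each metric that is missing or out of range."""
    alerts = []
    for metric, (low, high) in LIMITS.items():
        value = telemetry.get(metric)
        if value is None:
            alerts.append(f"{site}: {metric} not reported")
        elif not low <= value <= high:
            alerts.append(f"{site}: {metric}={value} outside [{low}, {high}]")
    return alerts


fleet = {
    "edge-01": {"inlet_temp_c": 24.5, "power_kw": 7.2, "packet_loss_pct": 0.1},
    "edge-02": {"inlet_temp_c": 41.0, "power_kw": 6.8},
}
alerts = [a for site, t in fleet.items() for a in check_site(site, t)]
```

Treating a missing metric as an alert is a deliberate choice here: at an unattended site, a sensor that stops reporting is often as important as one that reports a bad value.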

Maintenance Tips:

- Regularly Update Software and Firmware: Ensure all software and firmware are up to date to protect against vulnerabilities and improve performance.

- Implement Redundancy: Use redundant systems and components to enhance reliability and ensure continuous operation in case of failures.

- Conduct Routine Inspections: Schedule regular physical inspections and maintenance checks to identify and address potential issues early.

- Monitor Environmental Conditions: Continuously monitor environmental conditions such as temperature and humidity to prevent equipment damage.

- Utilize Data Analytics: Leverage data analytics to gain insights into performance trends and optimize maintenance schedules.
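
The benefit of the redundancy tip can be estimated with a short calculation. The sketch below assumes component failures are independent and that any single working copy keeps the service running, which real systems only approximate; the 99% availability figure is hypothetical.

```python
def parallel_availability(component_availability, copies):
    """Availability of `copies` redundant components, assuming independent failures
    and that any single working copy keeps the service up."""
    return 1 - (1 - component_availability) ** copies


def downtime_hours_per_year(availability):
    """Expected yearly downtime in hours for a given availability."""
    return (1 - availability) * 365 * 24


single = parallel_availability(0.99, 1)
dual = parallel_availability(0.99, 2)
```

Under these assumptions, duplicating a 99%-available component raises availability to about 99.99%, cutting expected downtime from roughly 87.6 hours per year to under one hour. Shared dependencies such as a common power feed weaken the independence assumption and reduce the gain.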

Managing edge data centers requires a combination of advanced tools and proactive strategies to ensure they operate efficiently and reliably. By implementing robust remote monitoring, predictive maintenance, and automated orchestration, organizations can overcome the complexities of managing distributed infrastructures and maintain high levels of performance and availability.

Conclusion

Edge data centers are reshaping engineering by enabling real-time data processing, reducing latency, and enhancing efficiency and reliability across various applications. From industrial automation to autonomous vehicles, they drive significant advancements, and as their scalability, maintenance, and security challenges are addressed, they are becoming essential to modern engineering infrastructure.

Frequently Asked Questions (FAQs)

What is an edge data center?

An edge data center is a localized facility that brings computation and data storage closer to the data source, reducing latency and improving processing speeds.

How do edge data centers differ from traditional data centers?

Edge data centers are distributed and located near data sources, reducing latency and bandwidth usage. Traditional data centers are centralized, often resulting in higher latency due to data travel distances.

What are the benefits of using edge data centers?

Edge data centers reduce latency, improve processing speeds, enhance data security, and optimize bandwidth utilization. They support real-time data processing, crucial for applications like autonomous vehicles, smart cities, and industrial automation.

What are the challenges of scaling edge data centers?

Scaling edge data centers involves managing distributed infrastructure, mitigating network congestion, and dynamically allocating resources. Standardizing hardware and software components across locations also presents challenges.

How do edge data centers enhance data security?

Edge data centers use secure enclaves, zero-trust security models, and advanced encryption techniques to protect data at rest and in transit, ensuring robust security against unauthorized access and cyber threats.

What role do edge data centers play in autonomous vehicles?

In autonomous vehicles, edge data centers process sensor, camera, and LIDAR data in real time, enabling split-second decisions. They support advanced machine learning algorithms and sensor fusion techniques essential for autonomous driving.

How is predictive maintenance implemented in edge data centers?

Predictive maintenance uses data analytics and machine learning to analyze sensor data in real time, detecting anomalies and predicting failures before they occur. This proactive approach minimizes downtime and extends equipment lifespan.

What are some key management tools for edge data centers?

Key management tools include remote monitoring systems, predictive maintenance platforms, and automated orchestration tools, which help manage distributed resources, optimize performance, and ensure reliability.

How do edge data centers support real-time processing?

Edge data centers minimize latency by processing data close to the source. They use advanced analytics, machine learning algorithms, and sensor fusion techniques to provide immediate data processing and decision-making capabilities.

References

[1] STLTech. What is a data-center? https://stl.tech/blog/data-centers-what-why-and-how/

[2] HPE. What is an Edge Datacenter? https://www.hpe.com/in/en/what-is/edge-datacenter.html#:~:text=Edge%20datacenters%20are%20located%20closer,to%20increase%20computing%20response%20time.

[3] STLPartners. Digital health at the edge: Three use cases for the healthcare industry. https://stlpartners.com/articles/edge-computing/digital-health-at-the-edge/

[4] Spiceworks. Micro Data Centers - the future of edge computing. https://www.spiceworks.com/tech/edge-computing/articles/edge-computing-and-the-rise-of-micro-datacenters/

[5] IEEE. 802.3bs-2017. https://ieeexplore.ieee.org/document/8207825

[6] SpringerLink. Implementation of an Edge Computing Architecture Using OpenStack and Kubernetes. https://link.springer.com/chapter/10.1007/978-981-13-1056-0_66

[7] coresite. 5G’s Impact on Edge Infrastructure. https://23381819.fs1.hubspotusercontent-na1.net/hubfs/23381819/63a5b4026df3551d05e87d1a_hvy-rdng-5gs-impact-on-edge-infrastructure-whitepaper.pdf

[8] ZDNet. Why autonomous vehicles will rely on edge computing and not the cloud. https://www.zdnet.com/article/why-autonomous-vehicles-will-rely-on-edge-computing-and-not-the-cloud/

[9] IEEE. Edge Computing Assisted Autonomous Driving Using Artificial Intelligence. https://ieeexplore.ieee.org/document/9498627
